Mastering Data Driven Tests A Practical Guide

Ever feel like you’re writing the same test over and over again, just with slightly different inputs? That’s a common frustration in software testing, and it’s exactly what data-driven testing is designed to solve.

At its core, data-driven testing is a method that decouples your test logic from the actual data you're testing with. Instead of hardcoding values like usernames and passwords directly into a script, you pull them from an external source—like a spreadsheet, CSV file, or database. This lets you run the same test script with hundreds of different inputs without touching the code.

Understanding Data Driven Tests

Think of it like baking. A traditional, hardcoded test is like having a single, rigid recipe for one chocolate cake. If you want to make a vanilla cake, you need a whole new recipe. What about a strawberry cake? Another new recipe. It’s tedious and incredibly inefficient.

Data-driven testing, on the other hand, is like having one master recipe (your test script) and a pantry full of different ingredients (your test data). You use the same set of instructions but simply swap out the ingredients to create endless variations—chocolate, vanilla, strawberry—all without rewriting the core steps.

The fundamental principle is simple but powerful: separate the "what to do" (test logic) from the "what to use" (test data). This separation is the key to creating more efficient, scalable, and comprehensive automated tests.

How It Works in Practice

So, how does this play out in a real testing scenario?

Instead of writing a unique test for every single user login, you create one reusable script. This script is designed with placeholders, or variables, for things like username, password, and expected_outcome. The actual values for these variables live in your external data file.

Your test automation framework then runs that single script in a loop. On each pass, it grabs a new row of data from your file and plugs it into the script. This lets you test a huge range of scenarios—valid logins, incorrect passwords, empty fields, special characters—all with just one piece of code. It’s a smarter way to work.

To make the distinction clearer, let's compare the two approaches side-by-side.

Traditional Testing vs Data Driven Testing

| Attribute | Traditional Testing | Data Driven Testing |

|---|---|---|

| Data Handling | Test data is hardcoded into the test script. | Test data is stored externally (e.g., CSV, Excel). |

| Maintainability | Difficult. Changing data requires code changes. | Easy. Data can be updated without touching the script. |

| Scalability | Poor. Each new test case needs a new script. | Excellent. Add new test cases by adding data rows. |

| Reusability | Low. Scripts are tied to specific data sets. | High. One script can run with many data sets. |

| Coverage | Limited. It's time-consuming to cover many scenarios. | Extensive. Easily test hundreds of variations. |

As you can see, the data-driven approach offers clear advantages in almost every aspect, leading to more robust and maintainable test suites.

This methodology is quickly becoming a cornerstone of modern software quality assurance. In fact, the global software testing market, which heavily features automated and data-driven tests, grew past $54.68 billion and is on track to exceed $99.79 billion. This boom is directly tied to massive digital transformation efforts, where companies are spending a collective $1.85 trillion to ensure their software is both reliable and efficient. You can dig deeper into these figures by exploring a full research report on software testing.

The Strategic Benefits of Data-Driven Testing

Moving to a data-driven approach isn't just a minor technical adjustment—it's a fundamental shift in how you think about quality. When you separate your test logic from the data it uses, you unlock some serious gains in efficiency, test coverage, and the overall quality of your software. You stop just checking boxes and start building a truly robust validation process.

The first thing you'll notice is a massive jump in test coverage. Think about it: a single, well-written script can run through hundreds, or even thousands, of different scenarios. It methodically plows through various inputs, tricky edge cases, and boundary values. This systematic process is incredibly effective at finding those weird, hidden bugs that manual testing or hardcoded scripts almost always miss.

Drive Efficiency and Reusability

Another huge win is reusability. Instead of writing a brand new test script for every single scenario, your team maintains one core script and just adds more data. This saves an enormous amount of time, both when you're first writing the tests and for all the maintenance that comes later. When the application logic changes, you update one script, not fifty.

This newfound efficiency frees up your QA team to tackle more interesting problems, like exploratory testing or deep performance analysis. They can move from being repetitive scriptwriters to strategic quality engineers. Many of these ideas tie into the broader advantages of automated testing in general, but a data-driven mindset really amplifies the results.

The real power of data-driven testing lies in its scalability. As your application grows, your test suite can expand by simply adding new rows to a data file, ensuring your testing efforts keep pace with development without becoming an unmanageable burden.

Build More Robust and Reliable Software

When you systematically test your application with a wide variety of data, you inevitably build more reliable software. This is especially important for things like validating user inputs, testing API endpoints, or checking complex business rules where a weird value could bring everything crashing down. By getting ahead of these scenarios, you create applications that can handle the messy, unpredictable nature of the real world.

This improved reliability directly impacts user trust and your company's reputation. Fewer bugs making it to production means a smoother user experience, happier customers, and a healthier bottom line. This intense focus on data quality is a big deal across the industry. For example, the North America big data testing market alone hit $645.6 million and is expected to reach $1.18 billion. This growth is fueled by companies realizing they absolutely must ensure data reliability, which you can see in more detail on the big data testing market.

Anatomy of a Data-Driven Test Framework

To really get a feel for how data-driven testing works, it helps to peek under the hood. The best way to think about it is like a modern, automated factory assembly line. Every part has a specific job, but they all work in perfect sync to produce a consistent, high-quality product. A data-driven framework is built on the same principle, with four key components working together to create a powerful testing engine.

First up is the Test Script. This is the core piece of machinery on your assembly line. It holds the fundamental logic—the actual steps your test will perform, like logging into an account, navigating to a product page, or filling out a form. The crucial part here is that the script isn't rigid; it's built with placeholders or variables instead of hardcoded values (like a specific username or password).

Next, you have the Data Source. Think of this as the factory's massive warehouse, stocked with all the raw materials you need. This is where all your test data is stored, completely separate from the test script itself. This separation is what makes the whole approach so powerful.

Choosing Your Data Source

The "warehouse" can take many forms, from a simple spreadsheet to a complex database. Picking the right one often depends on your team's skills, the complexity of your data, and your existing tools.

Here’s a quick look at some common options:

Common Data Sources for Test Automation

| Data Source | Best For | Complexity |

|---|---|---|

| CSV/Excel Files | Small-to-medium datasets, non-technical users, quick setup. | Low |

| JSON/XML Files | Web services, APIs, hierarchical data structures. | Medium |

| Databases (SQL/NoSQL) | Large-scale, dynamic test data; scenarios requiring data integrity. | High |

| YAML Files | Configuration data, human-readable scenarios. | Low-Medium |

Ultimately, the goal is to choose a source that makes it easy for your team to add, update, and manage test cases without ever having to touch the underlying automation code.

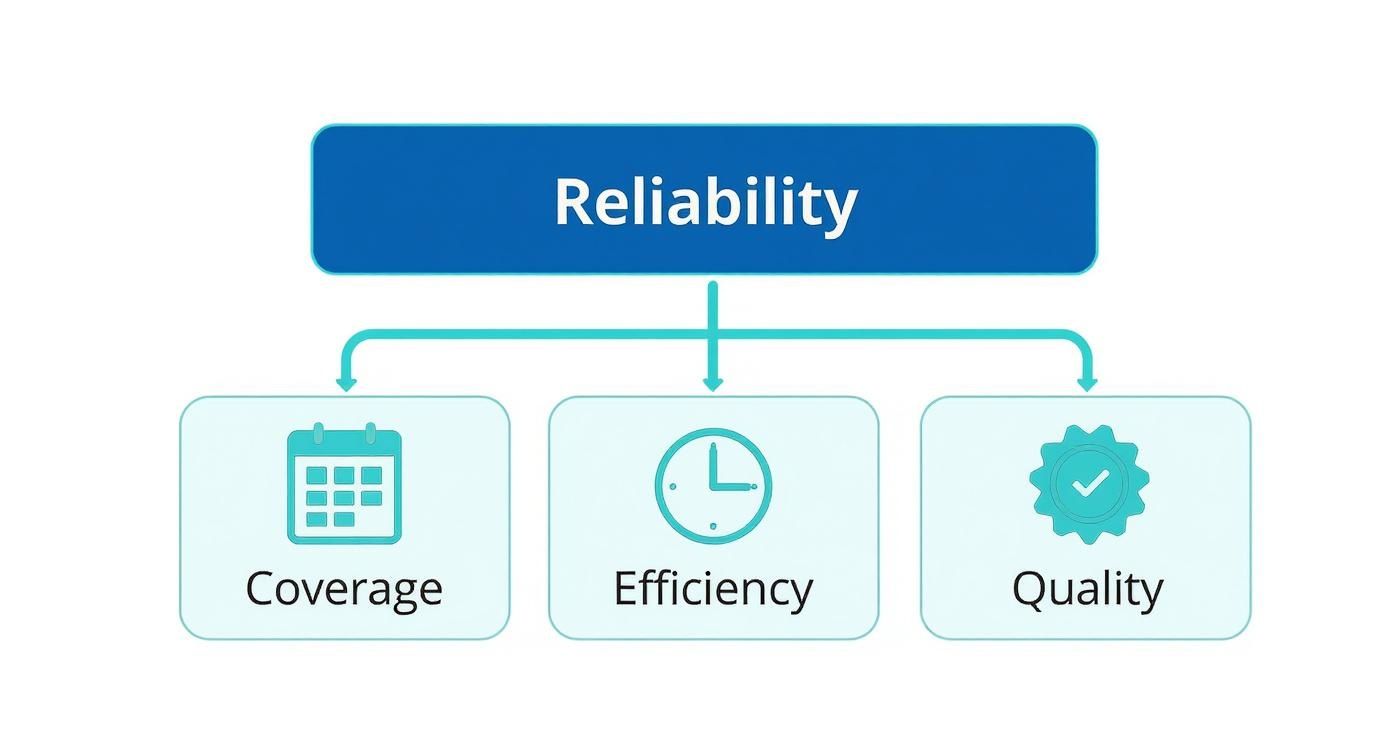

The infographic below highlights how this structure directly boosts software reliability by improving test coverage, efficiency, and overall quality.

As you can see, achieving that higher level of reliability isn't magic; it's the direct outcome of improving these three core testing pillars.

The Engine and the Output

So, how does the data get from the warehouse to the machine? That’s the job of the Data Driver. This is the smart conveyor belt of your operation. It's a bit of code that fetches data from your source—one row or record at a time—and feeds it directly into the test script's variables. It’s the engine that runs the loop, executing the same test script over and over, once for each set of data you've provided.

Finally, we have the Test Results. These are your finished products rolling off the line, each one inspected for quality. As the framework executes each iteration, it logs whether the test passed or failed and, most importantly, which specific line of data was used. This detailed reporting is a lifesaver for debugging, as it pinpoints the exact inputs that caused a problem.

Getting your head around this ecosystem is the first step toward building a truly robust framework. The script, the data, the driver, and the results are all interconnected, forming a scalable system for deep, comprehensive testing.

These components don't operate in a vacuum; they often need to interact with other systems. For a deeper dive into the architectural side, looking at some API documentation for test framework integration can offer some fantastic real-world insights.

A well-architected framework keeps these parts organized and distinct. By separating the "what to test" (the data) from the "how to test" (the script), you give your team the ability to massively expand test coverage without writing a single new line of code. And that, right there, is the entire point of data-driven testing.

How to Implement Your First Data-Driven Test

Making the jump from theory to a real-world data-driven test is actually much easier than it sounds. The big idea is to pull your test values—the usernames, passwords, and search terms—out of your test code and put them into a separate file. This simple change is what unlocks all the power.

The best part? This approach works almost anywhere. Whether you're using Cypress for front-end testing or Selenium for browser automation, the core steps are the same.

Let's walk through it together with a classic example: a user login form. It's the perfect place to start because you naturally need to test lots of different username and password combinations to make sure everything works correctly.

Step 1: Pinpoint a Repetitive Test Case

First things first, look for a test you're already running over and over with just minor tweaks to the data. A login form is a great candidate, but there are plenty of others.

Think about places like:

- Search functionality: How does your app handle different search queries? What about empty searches, special characters, or super long words?

- Form submissions: Are you testing a contact or sign-up form? You need to check how it behaves with good data, bad data, and missing data.

- API endpoint validation: Does your API respond correctly when you send it different request payloads?

Once you've picked one, you've found your "master recipe"—the single set of actions you want to repeat.

Step 2: Externalize Your Test Data

Now it's time to gather your ingredients. Instead of hardcoding values like testuser and password123 directly in your test script, you'll move them to an external data source. A simple CSV (Comma-Separated Values) file is a fantastic way to get started.

Go ahead and create a file called login_data.csv. Inside, you'll set up columns for your inputs and what you expect to happen. Each row will represent one complete test run.

| username | password | expected_outcome |

|---|---|---|

| validuser | correctpass | success |

| validuser | wrongpass | failure |

| invaliduser | anypass | failure |

| correctpass | failure |

Just like that, you've separated your test logic from your test data. This little file now holds every scenario you plan to test, keeping your code clean and your data easy to manage.

Of course, real-world systems often require more complex data. If you need a hand creating a rich, varied dataset, you can learn more about how to generate mock data for any scenario to cover all your bases.

Step 3: Write a Flexible Test Script

With your data ready, the next move is to update your test script so it can read from that external file. You'll replace all the fixed, hardcoded values with variables or placeholders that will get filled in dynamically.

Here’s what that might look like in a simplified pseudo-code example:

// This isn't real code, but it shows the logic

function testLogin(username, password, expected_outcome) {

// 1. Go to the login page

navigateTo('/login');

// 2. Type in the username and password from our variables

enterText('#username-field', username);

enterText('#password-field', password);

clickButton('#submit-button');

// 3. Check if the result is what we expected

if (expected_outcome === 'success') {

assert(pageUrl.includes('/dashboard'));

} else {

assert(elementExists('#error-message'));

}

}

See how the script is now completely generic? It has no idea what specific data it's using—it just knows the sequence of steps to perform. This makes it incredibly reusable.

Step 4: Loop Through the Data

The final piece of the puzzle is connecting your data file to your flexible test script. You just need to add a loop that reads each row from your CSV file and then calls your test function, feeding it the data from that row.

The loop is the engine of your data-driven test. It executes the same test logic repeatedly, once for each row in your data source, turning a single script into a comprehensive test suite.

Your test runner will now execute the testLogin function for every single data set in your file. What was once a rigid, single-purpose test has just been transformed into a powerful and scalable validation tool. It’s that simple.

Best Practices for Effective Data-Driven Testing

Getting your first data-driven test up and running is a great first step, but the real challenge is building a suite of tests that stays strong and scalable over the long haul. The most successful teams I've worked with treat their test data just like they treat their application code—it needs to be clean, well-organized, and kept under version control.

Without that level of care, test suites can quickly devolve into a tangled web of outdated, irrelevant, or duplicated data. The goal is to build a testing foundation that grows with your product, not one that collapses under its own weight.

Maintain Clean and Organized Data

Think of your data sources as the fuel for your data-driven tests. Keeping them in top condition is essential. This means setting clear ground rules and making sure every piece of data has a specific job to do.

Here are a few key strategies that really work:

- Version Control Your Data: Keep your test data files—whether they're CSVs, JSON, or something else—in a Git repository right alongside your test scripts. This gives you a clear history of changes, stops accidental overwrites, and makes sure your data and tests are always in sync.

- Use Descriptive Naming: Name your files and data fields in a way that makes sense to everyone. A file called

invalid_login_credentials.csvis infinitely more helpful thantest_data_2.csv. - Avoid Redundancy: Get rid of duplicate rows and combine similar test cases. A lean, focused dataset is not only easier to manage but also runs much faster.

This structured approach is a core part of a broader set of QA testing best practices that separates the reliable test suites from the chaotic ones.

Treat your test data as a first-class citizen in your development lifecycle. Just like code, it requires reviews, maintenance, and a clear organizational strategy to remain effective.

Design Data for Comprehensive Coverage

Good test data does more than just check off the "happy path." A truly effective dataset is built to push the limits, find those tricky edge cases, and make sure your app handles failure gracefully. You have to be intentional about creating data points that test a wide range of conditions.

Make sure you're including data that covers:

- Boundary Values: Test the absolute limits, like minimum and maximum character lengths or what happens with zero and negative numbers.

- Equivalence Partitions: Group similar inputs together. For example, lump all valid email formats into one category to test them efficiently.

- Error Conditions: Deliberately throw in incorrect data types, empty fields, or special characters to see if your application fails elegantly instead of crashing.

Smart data-driven testing also means using data to optimize your efforts. For example, understanding the power of metrics and data-driven insights can help you decide which test scenarios to focus on based on how real people use your product.

This level of rigor is paying off across the industry. The data warehouse testing market, currently valued at $600 million, is expected to skyrocket to $1.2 billion as more companies prioritize data integrity. You can find more details in this data warehouse testing market report.

Common Questions About Data-Driven Tests

As teams start looking for smarter ways to test, a few questions always seem to surface. The basic idea of separating your test logic from your test data is simple enough, but the real magic happens when you understand the details. Let's dig into some of those common questions to clear up any confusion and get you on the right track.

One of the first things people wonder about is the difference between data-driven and keyword-driven testing. They sound alike and both promise more reusable tests, but they tackle different problems.

Data-Driven vs. Keyword-Driven Testing

Think of data-driven testing like this: you have one single machine designed to do one job, and you're just feeding it different materials. It's perfect for testing a login screen with 100 different username and password combinations. The test steps—navigate to the page, enter username, enter password, click submit—never change. Only the data does.

Keyword-driven testing, on the other hand, is more like building with LEGOs. You create a collection of reusable "keywords" that represent single actions, like login, searchForItem, or addToCart. From there, anyone on the team (even non-coders) can snap these keywords together in different orders to build a complete test. The focus here is on reusing the actions, not just the data.

Here’s a simple way to look at it:

- Data-Driven Testing: One test script, many sets of data. It’s the champion for testing a single feature against a huge variety of inputs.

- Keyword-Driven Testing: Many reusable keywords, assembled into different test scripts. This approach shines when building complex, end-to-end tests in a modular, readable way.

Often, the most powerful strategy is a hybrid one. You could have a keyword like

performLoginand then use a data-driven approach to power that keyword with multiple user credentials. This gives you the best of both worlds—modular tests with exhaustive data coverage.

Managing Complex Test Data

As your test suite expands, so does the data needed to fuel it. If you're not careful, managing all that data can quickly become a massive headache. The goal is to keep your test data organized, relevant, and easy to get to when you need it.

When things get complex, a simple CSV file just won't cut it anymore. Here are a few practical ways to handle large or sensitive datasets:

- Use Data Generation Tools: Instead of manually typing out hundreds of rows of data, let a tool generate realistic mock data for you. This is a lifesaver for performance testing or when you need a high volume of unique user profiles.

- Implement Data Masking: Never, ever use real customer data in your tests. Use data masking or anonymization to protect sensitive information like names, emails, and credit card numbers, all while keeping the data structure realistic.

- Consider a Dedicated Database: For large-scale projects, storing test data in its own database is a game-changer. It offers better organization, security, and the power to easily query complex data sets.

Is It Only for UI Testing?

It's a common myth that data-driven tests are only for user interfaces, like web forms. And while they are a fantastic fit for UI validation, the principle is way more flexible than that. The core concept of separating logic from data can be applied all across your tech stack.

You can, and absolutely should, use a data-driven approach for:

- API Testing: Fire off hundreds of different request payloads to an API endpoint to check its responses, error handling, and schema.

- Database Testing: Verify data transformations and business rules by feeding a function different inputs and checking what happens in the database.

- Performance Testing: Simulate different user loads and behaviors by driving your performance scripts with varied data sets that mimic how people actually use your product.

When you apply this method everywhere, you build a consistent and scalable testing strategy that boosts quality from the back end all the way to the front.

Ready to stop wrestling with test data and start shipping faster? dotMock lets you generate production-ready mock APIs in seconds, allowing you to test every edge case and failure scenario without touching a live environment. Create resilient applications and accelerate your development cycle by visiting the dotMock official website today.