9 Essential QA Testing Best Practices for 2025

In today's fast-paced software development landscape, quality assurance is no longer a final checkpoint but a continuous, integrated discipline. Moving beyond traditional bug detection requires a strategic adoption of proven methodologies that build quality into every stage of the lifecycle. This guide explores nine essential QA testing best practices that modern engineering teams are adopting to accelerate releases, reduce costs, and deliver flawless user experiences.

We will move past surface-level advice and dive into actionable strategies. From implementing a Shift-Left approach to mastering risk-based testing and integrating continuous testing within your CI/CD pipeline, each point is designed to provide clear, practical value. For frontend developers, QA teams, and DevOps engineers alike, understanding these principles is crucial for building resilient, high-quality applications.

Throughout this roundup, we'll demonstrate how to apply these concepts with real-world examples, including how tools like dotMock can streamline complex testing scenarios. You'll learn how to effectively simulate API failures, network latency, and other edge cases without impacting production systems. Let's transform your QA process from a reactive safety net into a proactive engine for innovation and reliability.

1. Shift-Left Testing Strategy

The shift-left strategy is a pivotal practice that integrates testing into the earliest stages of the software development lifecycle (SDLC). Instead of treating QA as a final gatekeeper before release, this approach moves testing activities 'left' on the project timeline, embedding them from the initial requirements and design phases. This proactive methodology is a cornerstone of modern QA testing best practices because it identifies defects early, making them significantly cheaper and faster to resolve.

Major tech companies illustrate the power of this approach. Microsoft's adoption is reported to have cut escaped defects by 60%, while Netflix embeds continuous testing throughout its deployment pipeline to ensure resilience. This methodology fosters a culture of shared quality ownership, where developers, QA engineers, and operations teams collaborate from the start.

How to Implement a Shift-Left Strategy

Adopting a shift-left mindset requires both cultural and technical adjustments. For development teams, this means testing isn't an afterthought but an integral part of their workflow.

- Empower Developers: Provide developers with robust tools for unit and integration testing. Training them on testing techniques ensures they can write more testable code and validate their work effectively.

- Automate Early: Implement static code analysis tools directly within the IDE to catch syntax errors, potential bugs, and stylistic issues before the code is even committed.

- Integrate API Mocking: Use tools like dotMock to simulate API dependencies that are not yet built. This allows frontend developers and QA teams to test their components in isolation without waiting for backend services, accelerating parallel workstreams.

- Enhance Code Reviews: Make test coverage a mandatory part of the peer review process. Ensure new code is accompanied by relevant unit and integration tests, maintaining a high standard of quality.
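To make the shift-left idea concrete, here is a minimal Python sketch of a developer-written unit test for code that depends on an API that has not been built yet. It uses the standard library's `unittest.mock` as a simple in-process stand-in rather than dotMock, and `greeting_for` and the profile payload shape are hypothetical names chosen for illustration.

```python
import unittest
from unittest import mock


def greeting_for(user_id, fetch_profile):
    """Code under test (hypothetical). The profile client is injected so a
    test double can replace the real, not-yet-built backend call."""
    try:
        profile = fetch_profile(user_id)
    except ConnectionError:
        return "Hello!"  # degrade gracefully if the service is unreachable
    name = profile.get("display_name") or "there"
    return f"Hello, {name}!"


class GreetingTests(unittest.TestCase):
    def test_uses_display_name(self):
        fake_api = mock.Mock(return_value={"display_name": "Ada"})
        self.assertEqual(greeting_for("u1", fake_api), "Hello, Ada!")
        fake_api.assert_called_once_with("u1")

    def test_missing_name_falls_back(self):
        fake_api = mock.Mock(return_value={})
        self.assertEqual(greeting_for("u2", fake_api), "Hello, there!")

    def test_service_unavailable(self):
        # side_effect makes the fake raise, simulating a backend outage
        fake_api = mock.Mock(side_effect=ConnectionError("backend down"))
        self.assertEqual(greeting_for("u3", fake_api), "Hello!")
```

Running these with `python -m unittest` or pytest on every local build gives developers feedback on both the happy path and the failure path long before the real backend exists.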

2. Risk-Based Testing (RBT)

Risk-Based Testing (RBT) is a strategic approach that prioritizes testing efforts based on the probability of failure and the potential business impact of defects. Instead of trying to test everything equally, RBT directs QA resources toward the most critical and high-risk areas of an application. This prioritization is a fundamental QA testing best practice because it concentrates test coverage on the features that matter most to users and the business, delivering the highest return on testing investment.

This methodology, championed by experts like Dorothy Graham and Rex Black, is embedded in standards like ISO/IEC/IEEE 29119. For example, a banking application would use RBT to focus intense testing on transaction processing and security functions, while an e-commerce platform would prioritize its payment gateway and inventory management systems. This ensures that the most catastrophic potential failures are addressed first.

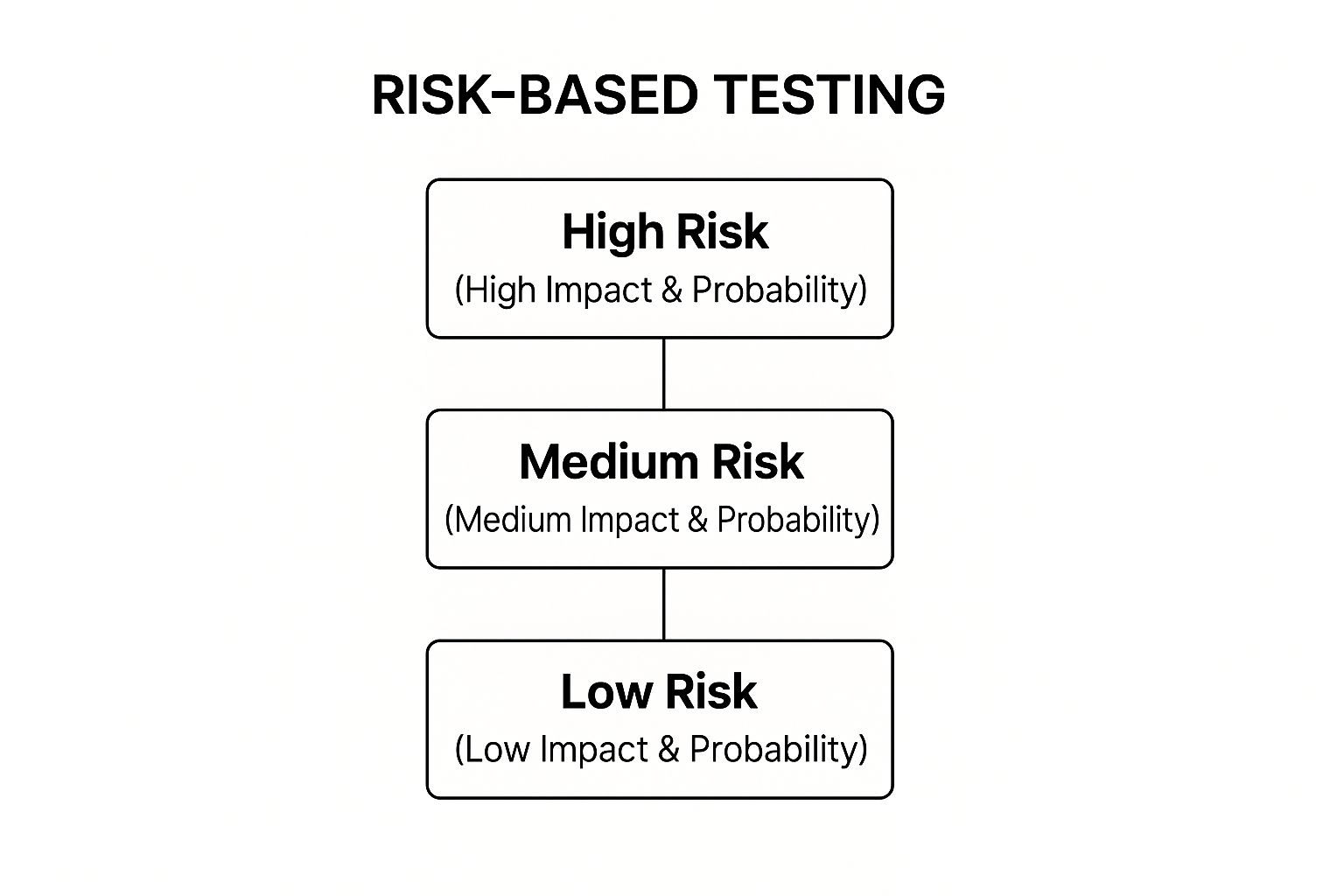

The infographic below illustrates the simple hierarchical model used in RBT to categorize and prioritize testing activities.

This hierarchy helps teams allocate their testing resources logically, assigning the most rigorous and extensive testing to high-risk features and less intensive testing to low-risk areas.

How to Implement Risk-Based Testing

Implementing RBT requires collaboration between QA, development, and business stakeholders to identify and assess risks accurately. The goal is to create a shared understanding of what constitutes a critical system failure.

- Involve Business Stakeholders: Conduct risk identification workshops that include product managers and business analysts. Their input is crucial for understanding the business impact of potential failures.

- Create a Risk Matrix: Develop a standardized scoring system to assess risk based on 'impact' and 'probability'. This creates an objective framework for prioritizing test cases.

- Focus on High-Risk Scenarios: Use tools like dotMock to simulate failure scenarios for critical third-party dependencies, such as a payment gateway failure. This allows you to test the resilience of your highest-risk integrations.

- Review and Adapt: Risk is not static. Regularly revisit your risk assessment, especially after new feature releases or changes in business priorities, to ensure your testing strategy remains aligned.
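A risk matrix can be as simple as multiplying impact by probability. The sketch below assumes 1-5 scales and arbitrary tier thresholds (15 and 8); the feature names and scores are hypothetical, so calibrate both with your stakeholders before relying on them.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class FeatureRisk:
    name: str
    impact: int       # assumed scale: 1 (cosmetic) to 5 (financial loss or data breach)
    probability: int  # assumed scale: 1 (stable, well tested) to 5 (new, complex, volatile)

    @property
    def score(self) -> int:
        return self.impact * self.probability  # ranges from 1 to 25


def tier_for(score: int) -> str:
    """Map a risk score to a testing depth. Thresholds are assumptions."""
    if score >= 15:
        return "high"    # exhaustive functional, negative, and failure-injection tests
    if score >= 8:
        return "medium"  # core paths plus key negative cases
    return "low"         # smoke tests only


def prioritize(features):
    """Order features from highest to lowest risk score."""
    return sorted(features, key=lambda f: f.score, reverse=True)


if __name__ == "__main__":
    backlog = [
        FeatureRisk("profile avatar upload", impact=2, probability=2),
        FeatureRisk("payment gateway", impact=5, probability=4),
        FeatureRisk("inventory sync", impact=4, probability=3),
    ]
    for feature in prioritize(backlog):
        print(f"{feature.name:<22} score={feature.score:>2} tier={tier_for(feature.score)}")
```

With these inputs the payment gateway (score 20) lands in the high tier, inventory sync (12) in the medium tier, and avatar upload (4) in the low tier, which is exactly the ordering the workshop discussion should then challenge or confirm.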

3. Test Automation Pyramid

The Test Automation Pyramid is a strategic framework that guides the distribution of automated tests across different layers of an application. Popularized by Mike Cohn, this model advocates for a healthy balance: a large base of fast, low-cost unit tests, a smaller middle layer of integration tests, and a very selective top layer of slow, expensive end-to-end (E2E) tests. This structure is a core tenet of effective QA testing best practices as it maximizes test coverage and feedback speed while minimizing maintenance overhead.

Leading tech companies build their quality strategies around this pyramid. Google's testing blog, for example, has suggested a 70/20/10 split (70% unit, 20% integration, 10% E2E) as a starting point for balancing speed and confidence. Similarly, Netflix applies pyramid principles to ensure the resilience of its complex microservices architecture, focusing on isolated component testing to maintain platform stability. This approach ensures that most defects are caught early at the unit level, where they are easiest to diagnose and fix.

How to Implement the Test Automation Pyramid

Building a stable and efficient test suite requires a disciplined approach that prioritizes the pyramid's structure. This involves empowering developers to build quality from the ground up and being strategic about more complex tests.

- Build from the Bottom Up: Start by creating a strong foundation of unit tests. These tests are isolated, run quickly, and provide immediate feedback to developers, forming the most critical layer of your automation strategy.

- Isolate with Mocking: For integration tests, use tools like dotMock to create mock APIs and simulate dependencies. This allows teams to test service interactions reliably without the flakiness of a full-scale deployed environment, ensuring tests focus only on the integration points.

- Focus E2E on Critical Journeys: Reserve slow and brittle E2E tests for validating critical user workflows only, such as user registration or the checkout process. Over-investing at this level leads to slow feedback and high maintenance costs.

- Review and Rebalance: Regularly analyze your test suite's composition and execution times. Prune redundant or low-value E2E tests and push testing logic down the pyramid wherever possible to maintain an efficient and healthy test portfolio.
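Rebalancing is easier when the suite's shape is measured rather than guessed. This sketch compares the share of tests in each layer with the 70/20/10 split mentioned earlier; the 10-point tolerance is an assumption, and in a real project the layer labels might come from test markers or directory names.

```python
from collections import Counter

# Target shares from the 70/20/10 rule of thumb; a starting point, not a law.
TARGET = {"unit": 0.70, "integration": 0.20, "e2e": 0.10}
TOLERANCE = 0.10  # assumed: flag a layer that drifts more than 10 percentage points


def pyramid_report(test_layers):
    """Compare each layer's actual share of the suite with its target share.

    test_layers: one label per test, e.g. ["unit", "unit", "e2e", ...].
    Returns {layer: (actual_share, target_share, within_tolerance)}.
    """
    counts = Counter(test_layers)
    total = sum(counts.values())
    if total == 0:
        raise ValueError("no tests to analyze")
    report = {}
    for layer, target in TARGET.items():
        actual = counts.get(layer, 0) / total
        report[layer] = (actual, target, abs(actual - target) <= TOLERANCE)
    return report


if __name__ == "__main__":
    # Hypothetical suite that has drifted toward an "ice cream cone" shape.
    layers = ["unit"] * 50 + ["integration"] * 20 + ["e2e"] * 30
    for layer, (actual, target, ok) in pyramid_report(layers).items():
        print(f"{layer:<12} actual={actual:.0%} target={target:.0%} {'ok' if ok else 'REBALANCE'}")
```

Running a report like this on a schedule turns "push testing logic down the pyramid" into a trend you can watch rather than a one-off cleanup.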

4. Continuous Testing in CI/CD Pipelines

Continuous Testing is the practice of executing automated tests as an integral part of the software delivery pipeline. Rather than being a separate phase, testing happens automatically and continuously at every stage, from code commit to production deployment. This approach provides immediate feedback on the business risks associated with a software release, making it a fundamental pillar of modern QA testing best practices and DevOps.

This methodology is essential for companies aiming for rapid and reliable releases. Amazon, for example, is widely cited as deploying code every 11.7 seconds on average, a pace that is only practical with continuous testing embedded in the CI/CD pipeline. Netflix likewise relies on extensive automated testing in its deployment pipeline to maintain stability and resilience at scale. This practice transforms quality assurance from a bottleneck into an enabler of speed and confidence.

How to Implement Continuous Testing

Integrating continuous testing requires strategic automation and a focus on efficiency. The goal is to get fast, relevant feedback without slowing down the development pipeline.

- Integrate Diverse Test Suites: Automate various types of tests within the pipeline, including unit, integration, and end-to-end tests. Each stage of the pipeline should trigger the appropriate test suite to validate changes progressively.

- Leverage Containerization: Use technologies like Docker to create consistent, ephemeral environments for every test run. This eliminates the "it works on my machine" problem and ensures that tests are reliable and reproducible across all stages.

- Optimize Test Execution: Implement smart test selection to run only the tests relevant to the code changes, significantly reducing execution time. Caching test results and running tests in parallel are also crucial for maintaining a fast feedback loop. To learn more about how this fits into a broader strategy, check out this guide on automated functional testing.

- Create Insightful Dashboards: Develop comprehensive dashboards that provide real-time visibility into test results, code coverage, and performance metrics. This allows teams to quickly identify failures, analyze trends, and make data-driven decisions.
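Smart test selection can start as a simple lookup from changed paths to the tests that cover them. The mapping and file names below are hypothetical; in practice the changed files might come from `git diff --name-only`, and the mapping from coverage data or a build graph. The sketch deliberately falls back to the full suite when it sees a change it cannot map.

```python
from fnmatch import fnmatch

# Hypothetical mapping from source path patterns to the tests that exercise them.
TEST_MAP = {
    "src/checkout/*": ["tests/unit/test_checkout.py", "tests/e2e/test_purchase_flow.py"],
    "src/auth/*": ["tests/unit/test_auth.py", "tests/integration/test_login_api.py"],
    "src/ui/*": ["tests/unit/test_components.py"],
}
ALWAYS_RUN = ["tests/smoke/test_health.py"]  # cheap safety net for every commit


def all_tests():
    """Every known test file, used when a change cannot be mapped."""
    return sorted({t for tests in TEST_MAP.values() for t in tests} | set(ALWAYS_RUN))


def select_tests(changed_files):
    """Return the sorted list of test files affected by the changed files.

    An unmapped change could affect anything, so it triggers the full suite.
    """
    selected = set(ALWAYS_RUN)
    for path in changed_files:
        matched = False
        for pattern, tests in TEST_MAP.items():
            if fnmatch(path, pattern):  # note: fnmatch's "*" also matches "/"
                selected.update(tests)
                matched = True
        if not matched:
            return all_tests()
    return sorted(selected)
```

For example, a commit touching only `src/auth/session.py` selects the auth unit and integration tests plus the smoke test, while an unmapped change such as `README.md` conservatively runs everything.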

5. Exploratory Testing

Exploratory testing is a powerful, hands-on approach where testers simultaneously learn about the software, design tests, and execute them in a dynamic, unscripted manner. This methodology leverages a tester's curiosity, critical thinking, and domain expertise to uncover defects and usability issues that rigid, scripted tests often miss. It is one of the most effective QA testing best practices for discovering subtle, context-dependent bugs.

This approach emphasizes freedom and investigation over strict adherence to pre-written test cases. Companies like Microsoft use exploratory testing for Windows usability evaluations, while Spotify employs it to discover edge cases in its complex streaming algorithms. The goal is not just to verify existing functionality but to actively explore and challenge the system to reveal its weaknesses.

How to Implement Exploratory Testing

Effective exploratory testing is not random; it's a structured and focused discipline. It requires a strategic framework to guide the tester's efforts and ensure valuable outcomes.

- Use Testing Charters: Create clear, mission-based charters to define the scope and objectives for each testing session. A charter might be, "Explore the checkout process with an expired credit card to verify error handling and user feedback."

- Adopt Session-Based Management: Structure testing into time-boxed sessions (e.g., 60-90 minutes). This helps maintain focus and makes the process measurable, allowing teams to track what was tested and what was found.

- Document Findings Immediately: Encourage testers to use screen recording tools, detailed notes, and screenshots to document their process and any anomalies they uncover. This provides clear, reproducible evidence for developers.

- Combine with Automation: Use exploratory testing to find new scenarios and edge cases. Once a critical bug is found, create an automated regression test for it to prevent its recurrence, creating a powerful feedback loop.
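Turning an exploratory finding into a regression test can be small. Suppose, hypothetically, that the expired-card charter above revealed that cards were being rejected during their final valid month. The sketch assumes the common convention that a card remains valid through the last day of its expiry month; `is_card_expired` is an illustrative function, not a payment provider's API.

```python
import datetime as dt
import unittest


def is_card_expired(exp_month: int, exp_year: int, today: dt.date) -> bool:
    """Assumed rule: a card is valid through the last day of its expiry month."""
    # Tuple comparison orders by year first, then month.
    return (today.year, today.month) > (exp_year, exp_month)


class ExpiredCardRegressionTests(unittest.TestCase):
    # Pins the behavior around the (hypothetical) bug found during the session.
    def test_card_valid_during_final_month(self):
        self.assertFalse(is_card_expired(6, 2025, dt.date(2025, 6, 30)))

    def test_card_expired_the_following_month(self):
        self.assertTrue(is_card_expired(6, 2025, dt.date(2025, 7, 1)))

    def test_year_boundary(self):
        self.assertTrue(is_card_expired(12, 2024, dt.date(2025, 1, 1)))
        self.assertFalse(is_card_expired(1, 2025, dt.date(2024, 12, 31)))
```

Once this suite runs in the pipeline, the boundary the tester discovered by hand is checked on every change without anyone needing to remember it.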

6. Comprehensive Test Data Management

Comprehensive Test Data Management (TDM) is a systematic approach to creating, controlling, and maintaining the data needed for testing. Instead of using haphazard or production-cloned data, TDM ensures that QA teams have access to secure, reliable, and representative data sets. This practice is crucial among modern QA testing best practices because it directly impacts test accuracy, reliability, and compliance with privacy regulations like GDPR.

Effective TDM prevents data-related test failures, improves test coverage, and secures sensitive information. For example, JPMorgan Chase utilizes synthetic data generation to test financial algorithms without exposing real customer data. Similarly, healthcare organizations implement HIPAA-compliant data masking to test applications while protecting patient privacy. This disciplined approach ensures testing is both thorough and responsible.

How to Implement Comprehensive Test Data Management

Implementing TDM involves establishing clear processes and using the right tools to govern the entire test data lifecycle. It shifts the team from ad-hoc data sourcing to a structured, repeatable strategy.

- Classify and Mask Data: Create data classification standards to identify sensitive information. Use data masking, anonymization, or synthetic data generation tools to protect private information while maintaining data integrity for testing.

- Automate Data Provisioning: Implement automated processes to refresh and provision test data on demand. This ensures test environments have current and relevant data, reducing manual effort and delays for QA teams.

- Establish Data Governance: Define clear data retention and cleanup policies for test environments. This prevents data bloat, reduces storage costs, and minimizes the risk of outdated data causing inaccurate test results.

- Use Realistic Data Subsets: Create smaller, targeted, and realistic data subsets from production environments. This speeds up test execution and database refresh times without compromising the quality of the tests. Learn more about how to power your test environments with the right data in our guide to data-driven testing.
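One common masking technique is keyed, deterministic pseudonymization: the same real value always maps to the same fake value, so joins between tables keep working, but the original cannot be read from the test data. This sketch uses HMAC-SHA256 from Python's standard library; the field names and the hard-coded key are placeholders, and a real key belongs in a secrets store.

```python
import hashlib
import hmac

# Placeholder only: load the real key from a secrets manager, never commit it.
MASKING_KEY = b"replace-me"


def _token(value: str, key: bytes) -> str:
    """Keyed hash of a normalized value; stable for the same key and input."""
    normalized = value.strip().lower().encode("utf-8")
    return hmac.new(key, normalized, hashlib.sha256).hexdigest()


def mask_customer(record: dict, key: bytes = MASKING_KEY) -> dict:
    """Return a copy of a customer record with PII fields pseudonymized."""
    masked = dict(record)  # leave the source record untouched
    if masked.get("email"):
        masked["email"] = f"user_{_token(masked['email'], key)[:12]}@example.test"
    if masked.get("name"):
        masked["name"] = f"Customer {_token(masked['name'], key)[:8]}"
    return masked
```

Keep in mind that under GDPR, pseudonymized data is generally still treated as personal data, so masked sets usually still need access controls; fully synthetic data avoids that constraint at the cost of realism.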

7. Defect Prevention and Root Cause Analysis

Defect prevention and root cause analysis is a proactive quality assurance approach that shifts focus from merely finding and fixing bugs to understanding and eliminating their origins. Instead of reacting to issues as they arise, this methodology digs deeper to identify the systemic process flaws, knowledge gaps, or environmental factors that cause defects. This focus on prevention is a fundamental QA testing best practice because it targets bugs before they are written, saving significant time and resources.

This practice is heavily influenced by manufacturing principles, such as Toyota's lean production system and Motorola's Six Sigma, which prioritize process improvement to minimize errors. In software, IBM's Defect Prevention Process (DPP) demonstrated how formal root cause analysis could drastically reduce defect injection rates. The goal is to create a feedback loop where every defect becomes a lesson that strengthens the entire development lifecycle.

How to Implement Defect Prevention and Root Cause Analysis

Implementing this proactive strategy involves creating structured processes for analyzing failures and translating insights into actionable process improvements. It requires a cultural commitment to continuous learning and quality ownership across all teams.

- Conduct Formal Postmortems: After a critical bug is resolved, hold a blameless postmortem meeting with cross-functional team members. Use techniques like the "5 Whys" or fishbone diagrams to trace the problem back to its true source.

- Establish a Causal Analysis Team: Create a dedicated group responsible for analyzing defect trends, identifying common root causes, and recommending preventative actions. This ensures a consistent and focused effort.

- Create a Defect Knowledge Base: Document common defect patterns, their root causes, and the solutions implemented. This repository becomes an invaluable resource for training new developers and preventing recurring issues.

- Track Prevention Metrics: Monitor metrics like Defect Injection Rate and Mean Time to Detection. Celebrating improvements in these areas reinforces the value of prevention and encourages teams to maintain their focus on quality.
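Definitions of these metrics vary between teams. The sketch below assumes one common pair: Mean Time to Detection as the average gap between when a defect was introduced (often identified during root cause analysis) and when it was found, and Defect Injection Rate as defects per 1,000 changed lines. The `Defect` record is hypothetical.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from statistics import mean


@dataclass
class Defect:
    introduced_at: datetime  # e.g. timestamp of the commit traced during RCA
    detected_at: datetime    # when the defect was reported


def mean_time_to_detection(defects) -> timedelta:
    """Average gap between when defects were introduced and when they were found."""
    if not defects:
        raise ValueError("no defects to measure")
    gaps = [(d.detected_at - d.introduced_at).total_seconds() for d in defects]
    return timedelta(seconds=mean(gaps))


def defect_injection_rate(defect_count: int, changed_lines: int) -> float:
    """Defects introduced per 1,000 changed lines (one common normalization)."""
    if changed_lines <= 0:
        raise ValueError("changed_lines must be positive")
    return defect_count / (changed_lines / 1000)
```

Tracking both per release makes it visible whether prevention work is paying off: a falling injection rate means fewer bugs are written, and a falling MTTD means the ones that slip through are caught sooner.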

8. Performance and Load Testing Integration

Performance and load testing integration is a critical practice for ensuring an application can handle expected user traffic without compromising speed or stability. Rather than a one-off check before launch, this approach embeds performance validation throughout the SDLC. It systematically evaluates an application's responsiveness, scalability, and reliability under various stress conditions, making it an essential component of modern QA testing best practices.

Industry leaders rely on this methodology to maintain user trust. Shopify, for instance, conducts extensive load testing to prepare for massive traffic surges during events like Black Friday. Similarly, Netflix's chaos engineering culture continuously tests system resilience at scale, ensuring a seamless streaming experience for millions of users worldwide. This proactive approach prevents costly production failures and protects brand reputation.

How to Implement Performance and Load Testing

Integrating performance testing requires a strategic blend of planning, automation, and continuous monitoring. It ensures that performance is a shared responsibility, not just a final QA task.

- Test Early and Often: Begin performance testing in early development sprints. Use API and service-level tests to identify bottlenecks before they are integrated into the full application, saving significant remediation time later.

- Simulate Realistic Conditions: Utilize production-like data and create test scripts that mimic real user behavior and traffic patterns. This includes testing for various network conditions. For a deeper dive, explore how to test network latency.

- Establish Performance Budgets: Define clear, measurable performance goals (e.g., page load time under 2 seconds, API response time under 200ms). Automate tests to fail builds that violate these thresholds.

- Mock Dependencies: Isolate services for accurate performance measurement by using API mocking tools. dotMock can simulate third-party APIs or internal microservices, allowing you to test your component's performance without external variables or dependencies.
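A performance budget becomes enforceable once a pipeline step can fail on it. This sketch computes a nearest-rank 95th percentile from latency samples and compares it with the 200 ms API budget from the example above; where the samples come from (for instance, a load-testing tool's results export) is left as an assumption.

```python
import math

API_P95_BUDGET_MS = 200.0  # the example API budget from the text


def percentile(samples, pct):
    """Nearest-rank percentile: smallest sample with at least pct% of values at or below it."""
    if not samples:
        raise ValueError("no latency samples")
    ordered = sorted(samples)
    rank = max(1, math.ceil(pct * len(ordered) / 100))
    return ordered[rank - 1]


def check_budget(latencies_ms, budget_ms=API_P95_BUDGET_MS):
    """Return (passed, observed_p95) for response times in milliseconds."""
    p95 = percentile(latencies_ms, 95)
    return p95 <= budget_ms, p95


def main(latencies_ms) -> int:
    """Print a summary and return a process exit code (non-zero fails the build)."""
    passed, p95 = check_budget(latencies_ms)
    print(f"p95={p95}ms budget={API_P95_BUDGET_MS}ms -> {'PASS' if passed else 'FAIL'}")
    return 0 if passed else 1
```

In a CI step, `sys.exit(main(samples))` turns a budget violation into a failed build. Gating on a percentile rather than the average keeps a handful of very slow requests from hiding behind many fast ones.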

9. Cross-Browser and Cross-Platform Testing

Cross-browser and cross-platform testing is a critical practice that validates an application's functionality, performance, and appearance across a diverse landscape of browsers, operating systems, and devices. In today's fragmented digital world, users access software on countless combinations of platforms, making this validation essential. This approach is a core component of modern QA testing best practices because it helps ensure a consistent and reliable user experience for everyone, regardless of their chosen technology.

Leading companies demonstrate the value of this extensive testing. Google supports its web applications, such as Docs and Gmail, on Chrome, Firefox, Safari, and Edge, which requires validating them on each of those browsers. Similarly, financial institutions test their banking applications rigorously across mobile and desktop platforms to ensure security and accessibility for all customers. This commitment to wide-ranging compatibility prevents user frustration, protects brand reputation, and maximizes market reach.

How to Implement Cross-Browser and Cross-Platform Testing

A successful strategy involves a mix of manual, automated, and tool-assisted testing to manage the complexity of different environments. The goal is to achieve broad coverage efficiently without overwhelming the QA team.

- Prioritize Based on Analytics: Use user analytics data to identify the most popular browser, OS, and device combinations among your target audience. Focus your primary testing efforts on these high-traffic environments to maximize impact.

- Leverage Cloud-Based Platforms: Employ services like BrowserStack or Sauce Labs to gain instant access to thousands of real devices and browser configurations. This eliminates the need for an expensive in-house device lab and allows for scalable, parallel testing.

- Automate Visual Regression: Implement automated visual regression tests to detect unintended UI changes across different platforms. These tools capture screenshots and compare them against a baseline, quickly flagging inconsistencies in layout, fonts, and colors.

- Test Platform-Specific Interactions: Create dedicated test cases for platform-unique features, such as touch gestures on mobile devices or specific keyboard shortcuts on desktop operating systems. Ensure these native interactions work as expected.
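Analytics-driven prioritization can be reduced to a greedy pick: sort environments by session count and add them until a coverage target is met. The session counts and the 95% target below are hypothetical; export real numbers from your analytics tool.

```python
def pick_test_matrix(sessions, coverage_pct=95):
    """Select the most-used environments until they cover coverage_pct of sessions.

    sessions: {(browser, os): session_count}, e.g. exported from analytics.
    Returns (selected_environments, covered_fraction).
    """
    total = sum(sessions.values())
    if total == 0:
        raise ValueError("no session data")
    selected, covered = [], 0
    for env, count in sorted(sessions.items(), key=lambda kv: kv[1], reverse=True):
        if covered * 100 >= coverage_pct * total:  # integer math avoids float drift
            break
        selected.append(env)
        covered += count
    return selected, covered / total


if __name__ == "__main__":
    sessions = {  # hypothetical monthly sessions
        ("Chrome", "Windows"): 4800,
        ("Safari", "iOS"): 2200,
        ("Chrome", "Android"): 1500,
        ("Edge", "Windows"): 600,
        ("Firefox", "Windows"): 400,
        ("Safari", "macOS"): 300,
        ("Samsung Internet", "Android"): 200,
    }
    matrix, covered = pick_test_matrix(sessions)
    print(f"{len(matrix)} environments cover {covered:.0%} of sessions: {matrix}")
```

With these numbers, five environments reach the 95% target; the remaining long tail is a natural fit for periodic runs on a cloud-based device platform rather than every-commit testing.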

QA Testing Best Practices Comparison

| Testing Strategy | Implementation Complexity 🔄 | Resource Requirements ⚡ | Expected Outcomes 📊 | Ideal Use Cases 💡 | Key Advantages ⭐ |
|---|---|---|---|---|---|
| Shift-Left Testing Strategy | Medium to High - requires cultural shift | Skilled testers and developers; training needed | Earlier defect detection; reduced costs | Agile/DevOps projects; early defect prevention | Accelerates time-to-market; reduces technical debt |
| Risk-Based Testing (RBT) | Medium - requires ongoing risk assessment | Domain experts; stakeholder involvement | Optimized test focus; resource allocation | Critical systems with varying risk levels | Prioritizes critical functions; efficient test effort |
| Test Automation Pyramid | Medium to High - initial infrastructure | Skilled automation engineers; test tools | Fast, reliable feedback; cost-effective tests | Projects with automation goals; CI/CD pipelines | Balances coverage and efficiency; reduces maintenance |
| Continuous Testing in CI/CD | High - requires robust automation suite | Infrastructure for parallel tests; tooling | Rapid defect detection; frequent releases | DevOps pipelines; large-scale automated deployments | Enables rapid releases; consistent quality assurance |
| Exploratory Testing | Low to Medium - depends on tester skill | Skilled testers | Uncovers unexpected defects; adaptive testing | Usability and feature discovery; ad hoc testing needs | Flexible; minimal setup; deep application insight |
| Comprehensive Test Data Management | High - requires data governance and tooling | Infrastructure for data management; compliance | Consistent results; compliance adherence | Regulated industries; complex data scenarios | Maintains data privacy; improves test accuracy |
| Defect Prevention and Root Cause Analysis | Medium - requires process change and training | Cross-functional teams; analysis tools | Reduced defect rates; improved process quality | Continuous improvement cultures; long-term quality focus | Prevents recurring defects; enhances team knowledge |
| Performance and Load Testing Integration | Medium to High - specialized tools needed | Load testing tools; production-like environments | Prevents performance issues; capacity planning | High-traffic or resource-sensitive applications | Improves UX; avoids costly production fixes |
| Cross-Browser and Cross-Platform Testing | Medium to High - extensive device coverage | Multiple device/browser setups or cloud services | Ensures broad compatibility and UX consistency | Web apps; multi-device platforms | Expands market reach; reduces platform-specific bugs |

Building a Culture of Quality: Your Next Steps

Moving from theory to practice is the most critical step in elevating your software quality. The nine QA testing best practices we've explored, from implementing a shift-left strategy to integrating comprehensive performance testing, are not isolated tactics. Instead, they are interconnected components of a holistic framework designed to build a resilient, proactive, and quality-driven engineering culture. The ultimate goal is not just to find bugs, but to prevent them, creating a development lifecycle where quality is a shared responsibility, not an afterthought.

Achieving this high standard of quality requires more than just adopting new processes; it demands a fundamental shift in mindset and tooling. By embracing concepts like the Test Automation Pyramid and continuous testing within your CI/CD pipeline, you transform QA from a final gatekeeper into an integrated partner in a rapid, iterative development cycle. This integration is the key to unlocking true agility without sacrificing stability or user experience.

From Principles to Actionable Strategy

Your journey toward mastering these practices should be incremental and strategic. Don't attempt to overhaul your entire workflow overnight. Instead, identify the most significant bottlenecks in your current process and select the practice that directly addresses that pain point.

- Struggling with late-stage bug discoveries? Start with a Shift-Left Testing initiative. Empower developers with tools that enable early and frequent testing on their local machines.

- Overwhelmed by an unmanageable number of test cases? Implement Risk-Based Testing (RBT) to prioritize your efforts on the most critical functionalities, ensuring maximum impact with your available resources.

- Facing delays due to unavailable or unstable API dependencies? Focus on Comprehensive Test Data Management and service virtualization. This is where tools that provide mock APIs become indispensable, decoupling your frontend and backend teams and eliminating critical dependencies.

Adopting these QA testing best practices is an ongoing commitment to excellence. The value extends far beyond reducing bug counts. It translates directly into enhanced customer satisfaction, a stronger brand reputation, and a more efficient, less frustrated development team. By investing in a robust QA strategy, you are investing in the long-term success and scalability of your product. The path to superior software quality is paved with continuous improvement, strategic implementation, and equipping your teams with the right tools to succeed.

Ready to eliminate API bottlenecks and supercharge your testing efforts? dotMock empowers your teams to instantly create mock APIs, simulate any scenario, and test with confidence. Explore how dotMock can help you implement these QA testing best practices and build more resilient software, faster.