What Is Integration Testing? A Practical Guide

Integration testing is all about checking how individual software modules, services, and components work together as a group. It’s that crucial step you take after you’ve tested each part in isolation (unit testing) but before you test the entire application as a whole (system testing).

Think of it like making sure all the different pieces of a complex puzzle actually fit together to create a clear picture.

Understanding The Core Concept

Let’s use an analogy. Imagine you're building a car from scratch. One team builds a perfect engine, another creates flawless wheels, and a third assembles a top-of-the-line transmission. Unit testing is like making sure each of these parts works perfectly on its own. The engine starts, the wheels spin, and the transmission shifts gears on the test bench without a hitch.

But the real test comes when you put them all together. That’s exactly what integration testing does. It’s here that you start asking the important questions:

- Does the engine’s power actually transfer through the transmission to make the wheels turn?

- Do the data formats from the user login service match what the customer profile database expects?

- When a user clicks "Buy," can the shopping cart module talk to the payment gateway API without errors?

Bridging The Gap in Software Testing

Integration testing fills a massive gap in the software development lifecycle. Its focus isn't on a single line of code or the entire application. Instead, its job is to validate the communication and data flowing between different components. This is where you find the tricky defects that only show up when modules start talking to each other.

The primary goal here is to check the interfaces and interactions between software modules. You're hunting for flaws that only appear when they communicate, like data corruption, incorrect API calls, or messy exception handling between services.
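To make the idea concrete, here is a minimal Python sketch of one such interface defect: a data-format mismatch. Everything in it is hypothetical and invented for illustration (`login_service_get_user`, `ProfileStore`, `sync_profile`). It assumes the login side hands out dates as ISO strings while the profile side expects `datetime.date` objects. Each piece would pass its own unit tests; only a test that runs them together shows whether the hand-off works.

```python
from datetime import date


# Hypothetical "login service": returns the signup date as an ISO string.
def login_service_get_user(user_id: int) -> dict:
    return {"id": user_id, "name": "Ada", "signup_date": "2024-03-01"}


# Hypothetical "profile store": insists on a real datetime.date.
class ProfileStore:
    def __init__(self) -> None:
        self.rows: dict = {}

    def save(self, user: dict) -> None:
        if not isinstance(user["signup_date"], date):
            raise TypeError("signup_date must be a datetime.date")
        self.rows[user["id"]] = user


# Glue code between the two modules. This is the part an
# integration test is really exercising.
def sync_profile(user_id: int, store: ProfileStore) -> None:
    user = login_service_get_user(user_id)
    user["signup_date"] = date.fromisoformat(user["signup_date"])
    store.save(user)


# Integration test: runs both modules together through the glue code.
def test_login_data_reaches_profile_store() -> None:
    store = ProfileStore()
    sync_profile(42, store)
    assert store.rows[42]["signup_date"] == date(2024, 3, 1)
```

If the conversion line in `sync_profile` were missing, both modules would still look fine in isolation, and the failure would only surface in this combined test.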

Integration Testing vs Other Testing Types

It's easy to get testing types confused, but each has a distinct role. While unit tests are hyper-focused and system tests are broad, integration testing sits right in the middle, looking at how small groups of components collaborate.

| Testing Type | Focus Area | Objective |
|---|---|---|
| Unit Testing | A single, isolated function or component. | Verify that one piece of code works correctly on its own. |
| Integration Testing | The interfaces and communication between 2+ modules. | Ensure that different components can interact and exchange data properly. |
| System Testing | The entire, fully assembled application. | Validate that the complete system meets all specified requirements. |

Each type of testing is designed to catch different kinds of bugs. A solid testing strategy needs all three to build a reliable and robust application.

This level of testing is also different from end-to-end testing, which simulates a complete user journey through the live application. To see how these layers build on each other, you can read our guide on what is end-to-end testing. Getting this hierarchy right is the key to catching issues at every stage of development.

Why Integration Testing Is Not Optional

In a world filled with interconnected services and APIs, skipping integration testing is a bit like building a bridge from both sides of a canyon and just hoping the two ends meet in the middle. Each side might be an engineering marvel on its own, but if they don't connect properly, the entire project is a failure. That's the perfect picture of modern software development—we're building complex ecosystems, not single, monolithic blocks of code.

When you neglect this stage, you're not just taking a technical shortcut; you're taking on serious business risks. A user authentication service might pass all its individual tests with flying colors, but what happens if it can't correctly pass user data to the order processing service? Sales stop. These aren't minor bugs; they're catastrophic failures in the application's core workflow.

The fallout from skipping this step can range from merely inconvenient to outright disastrous. Imagine silent data corruption between microservices, where bad information quietly spreads through your system for weeks before anyone notices. Or think about a critical failure in a payment gateway connection that only rears its head under a real-world user load, costing you revenue and customer trust with every failed transaction.

Protecting Your System and Your Users

Modern software is a web of internal services, third-party APIs, and intricate data pipelines. Every single one of those connection points is a potential point of failure. Integration testing is the quality control process designed specifically to stress-test those connections.

By verifying the "handshakes" between different software modules, you're not just finding bugs; you're actively managing risk. This practice is essential for building customer trust and ensuring the stability of your entire system.

The ever-growing complexity of our systems is exactly why the testing market is expanding so quickly. The global software testing system integration market was valued at around USD 12 billion and is on track to hit nearly USD 28 billion by 2032. That staggering growth isn't just a trend; it's a clear signal that the industry understands just how indispensable this testing phase has become. You can discover more about this market growth on DataIntelo.com.

The Strategic Value of Early Detection

At the end of the day, integration testing is a strategic move. Finding a defect here is vastly cheaper and faster to fix than discovering it after your product has already shipped. Post-launch bug fixes don't just cost more in developer hours; they cost you brand reputation and user confidence—assets that are much harder to win back.

Think about the direct business outcomes that come from a solid integration testing strategy:

- Increased System Reliability: You can be confident that all the individual pieces of your application work together as a cohesive whole, drastically reducing the risk of unexpected, system-wide failures.

- Enhanced Customer Trust: A stable and predictable user experience is the bedrock of customer retention. When things just work, people trust your product and your company.

- Reduced Development Costs: Catching major architectural flaws early in the process prevents incredibly expensive and time-consuming rework later on.

For any team serious about shipping high-quality, dependable software, integration testing isn't just a "best practice." It's a fundamental pillar of success.

Choosing Your Integration Testing Strategy

Okay, so you're sold on why integration testing is a must-have. The next big question is how to actually do it. There's no single magic bullet here; the right game plan really depends on your project's architecture, your team's workflow, and how tight your deadlines are. Picking the right strategy means you'll catch bugs efficiently without grinding your development cycle to a halt.

At a high level, the choice boils down to a simple philosophy: do you test everything at once, or do you test in smaller, more deliberate steps?

The All-At-Once Big Bang Approach

The Big Bang strategy is exactly what it sounds like. You wait until every single module is built, then you smash them all together and test the whole system in one go. Think of it like building a car. You get the engine, the chassis, the wheels, and the electronics all finished separately, and only then do you assemble the entire thing and turn the key.

It's a straightforward concept, but it's loaded with risk. If a test fails, good luck finding the source. You're staring at dozens, maybe hundreds, of potential failure points between all the interconnected parts. This makes debugging an absolute nightmare. Honestly, this approach is only really viable for very small, simple applications where things are less likely to get tangled.

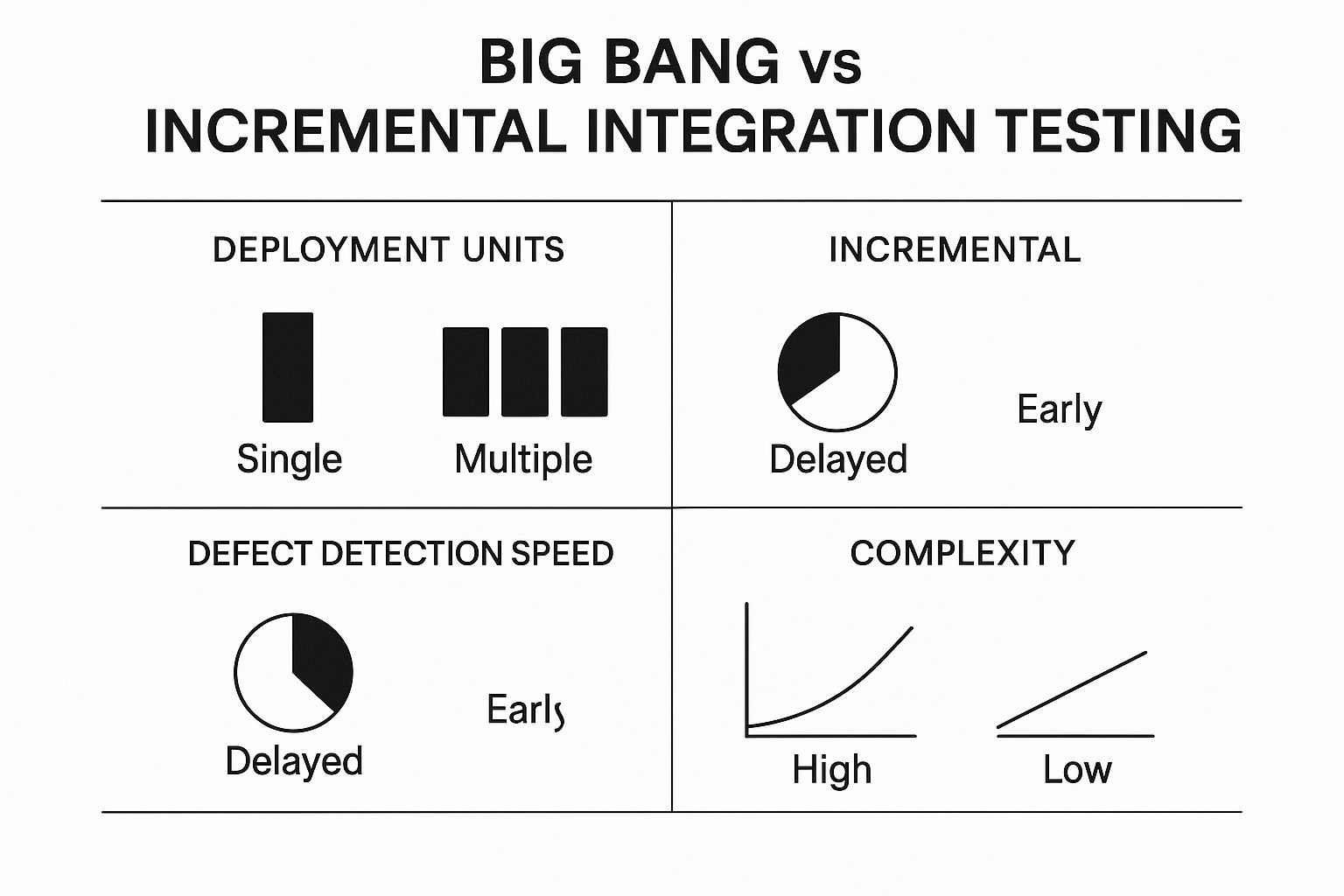

This image gives a great visual breakdown of how the Big Bang approach stacks up against more methodical strategies.

As you can see, the incremental methods provide a huge advantage when it comes to spotting defects early and keeping complexity under control. That's why they're the go-to choice for almost any modern software project.

Incremental Testing: A Step-By-Step Method

Incremental testing is a much more systematic and sane approach. Instead of a chaotic "big bang," you integrate and test modules in logical, progressive phases. This lets you catch and isolate bugs much earlier in the process when they're far easier (and cheaper) to fix.

It's a bit like filming a movie. You don't shoot every single scene at once and then try to edit it all together. You shoot scenes in a logical order, making sure each one flows perfectly into the next.

But what if a module you need for a test isn't ready yet? That’s where test doubles come in handy:

- Stubs: These are like stand-in actors. If you're testing Module A, which needs to call the still-unwritten Module B, a stub can pretend to be Module B and provide a canned, predictable response. This allows you to test Module A's logic without waiting.

- Drivers: These are the directors of the test. A driver is a simple piece of code that calls the module you're testing, simulating the instructions it would normally receive from a higher-level component.

By using stubs and drivers, teams can test the connections between modules long before the entire application is built. This creates a much faster feedback loop and allows different parts of the team to work in parallel.
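The two roles can be sketched in a few lines of Python. The names here (`OrderCalculator`, `TaxServiceStub`, `run_driver`) and the flat 10% tax rate are assumptions made up for the example, not part of any real system. Module A is the code under test, the stub stands in for the unwritten Module B, and the driver plays the higher-level caller.

```python
# Module A (under test): computes an order total using a tax service (Module B).
class OrderCalculator:
    def __init__(self, tax_service) -> None:
        self.tax_service = tax_service

    def total(self, subtotal: float, region: str) -> float:
        rate = self.tax_service.rate_for(region)
        return round(subtotal * (1 + rate), 2)


# Stub: pretends to be the still-unwritten Module B and
# always returns the same canned, predictable rate.
class TaxServiceStub:
    def rate_for(self, region: str) -> float:
        return 0.10


# Driver: simulates the higher-level component that would
# normally call Module A (for example, a checkout screen).
def run_driver() -> float:
    calculator = OrderCalculator(TaxServiceStub())
    return calculator.total(subtotal=50.00, region="anywhere")
```

Once the real tax service exists, the stub can be swapped for it without touching `OrderCalculator`, because the dependency is passed in rather than hard-coded.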

This incremental philosophy branches out into three main strategies.

Top-Down Integration

With this approach, you start testing from the top of your application's architecture—usually the user interface—and work your way down. Any lower-level modules that haven't been built yet are simulated with stubs. It’s a fantastic way to validate the major user workflows and the overall architectural design early on.

Bottom-Up Integration

As you might guess, Bottom-Up integration is the exact opposite. You start with the lowest-level, foundational modules (like database connections or complex algorithms) and work your way up. Here, you use drivers to call the modules being tested and simulate the behavior of the higher-level components. This strategy is perfect for making sure your most critical, core functionalities are rock-solid from the very beginning.
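As a rough sketch of the bottom-up order, the Python below integrates a real low-level module first and then the layer above it, with a driver standing in for the missing top layer. The class names and the `items` table are hypothetical. An in-memory SQLite database (via the standard `sqlite3` module) is assumed as the foundation so the example stays self-contained.

```python
import sqlite3


# Lowest level: a repository that talks to a real (in-memory) database.
class ItemRepository:
    def __init__(self, conn: sqlite3.Connection) -> None:
        self.conn = conn
        self.conn.execute(
            "CREATE TABLE IF NOT EXISTS items (name TEXT PRIMARY KEY, stock INTEGER)"
        )

    def add(self, name: str, stock: int) -> None:
        self.conn.execute("INSERT INTO items VALUES (?, ?)", (name, stock))

    def stock_of(self, name: str) -> int:
        row = self.conn.execute(
            "SELECT stock FROM items WHERE name = ?", (name,)
        ).fetchone()
        return row[0] if row else 0


# Next level up: business logic built on top of the repository.
class InventoryService:
    def __init__(self, repo: ItemRepository) -> None:
        self.repo = repo

    def can_fulfil(self, name: str, quantity: int) -> bool:
        return self.repo.stock_of(name) >= quantity


# Driver: plays the role of the not-yet-built checkout layer.
def drive_inventory_check() -> tuple:
    conn = sqlite3.connect(":memory:")
    try:
        repo = ItemRepository(conn)
        repo.add("widget", 3)
        service = InventoryService(repo)
        return service.can_fulfil("widget", 2), service.can_fulfil("widget", 5)
    finally:
        conn.close()
```

Here the driver should report that an order for 2 widgets can be fulfilled and an order for 5 cannot, which confirms the service and repository agree before any higher layer is written.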

Sandwich Testing: The Hybrid Model

Also known as hybrid integration, Sandwich testing gives you the best of both worlds. It’s a pincer movement: one team starts testing from the top down, while another starts from the bottom up, and they meet in the middle.

While it can be a bit more complex to coordinate, this approach can dramatically accelerate testing on large, complex projects. If you want to learn more about the pieces being tested, our guide on what is API testing is a great resource for understanding how these critical interfaces are validated.

Comparing Integration Testing Approaches

To help you decide, here’s a quick summary table that lays out the pros, cons, and best-use cases for each of the strategies we've discussed.

| Approach | Pros | Cons | When to Use |
|---|---|---|---|
| Big Bang | Simple concept, no planning needed. | Extremely difficult to debug, defects found late. | Very small, non-critical projects with low complexity. |
| Top-Down | Validates major workflows early, finds architectural flaws sooner. | Requires writing many stubs, lower-level functionality is tested late. | Projects where the user interface and high-level logic are the top priority. |
| Bottom-Up | Critical low-level modules are tested thoroughly and early. | The overall system workflow isn't validated until the very end. | Applications with complex backend logic or critical foundational services. |
| Sandwich | Combines the benefits of Top-Down and Bottom-Up, speeds up testing. | More complex to plan and manage, can be resource-intensive. | Large-scale, complex projects with distinct UI and backend layers. |

Ultimately, there's no single "best" approach—just the one that fits your team and your project the best. For most modern applications, some form of incremental testing (Top-Down, Bottom-Up, or Sandwich) is almost always the smarter, safer, and more efficient choice.

How Automation Supercharges Your Testing

Trying to rely on manual integration testing in today's fast-paced development cycles is a losing game. It’s like trying to inspect every single part on a high-speed car assembly line by hand—it’s just too slow, incredibly prone to human error, and can't possibly keep up. The moment a developer pushes new code, the connections between system components can shift, rendering yesterday's manual checks completely obsolete.

This is exactly where automation steps in and changes everything.

Automated integration tests take what was once a frustrating bottleneck and turn it into a genuine strategic advantage. Instead of having your team wait days for QA to manually sign off on how components interact, automated tests can run in just minutes. You get immediate feedback, allowing your team to catch bugs almost the second they’re introduced, directly within the CI/CD pipeline.

The Power of Rapid, Repeatable Feedback

The real magic of automation isn’t just about speed; it's about consistency. An automated test script follows the exact same steps with the exact same data, every single time it runs. This level of precision eliminates the classic "it worked on my machine" headache and makes every test a trustworthy health check for your system.

With this consistency, teams can build out a powerful suite of tests that covers all the bases, including:

- Positive Test Cases: Making sure modules talk to each other correctly under normal, everyday conditions.

- Negative Test Cases: Checking that the system handles errors gracefully, like when it receives an invalid API key or a bad request.

- Edge Cases: Pushing the system to its limits with unusual inputs or unexpected loads to see how it behaves.
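Here is one way those three categories might look in Python for a cart-to-payment interaction. The `FakeGateway` class, the `checkout` function, and the error messages are all invented for this sketch, and the "edge case" shown is an unusual input (an empty cart) rather than a load test.

```python
class PaymentError(Exception):
    pass


# Hypothetical in-process fake of a payment gateway.
class FakeGateway:
    def __init__(self, valid_key: str) -> None:
        self.valid_key = valid_key

    def charge(self, api_key: str, amount_cents: int) -> dict:
        if api_key != self.valid_key:
            raise PaymentError("invalid API key")
        if amount_cents <= 0:
            raise PaymentError("amount must be positive")
        return {"status": "approved", "amount_cents": amount_cents}


# Module under test: turns gateway outcomes into a checkout result.
def checkout(gateway: FakeGateway, api_key: str, prices_cents: list) -> str:
    try:
        receipt = gateway.charge(api_key, sum(prices_cents))
    except PaymentError as exc:
        return f"declined: {exc}"
    return receipt["status"]


def test_positive_case() -> None:
    # Normal conditions: valid key, non-empty cart.
    assert checkout(FakeGateway("k"), "k", [500, 250]) == "approved"


def test_negative_case() -> None:
    # The gateway rejects the key; checkout must fail gracefully.
    assert checkout(FakeGateway("k"), "wrong", [500]) == "declined: invalid API key"


def test_edge_case_empty_cart() -> None:
    # Unusual input: an empty cart sums to zero.
    assert checkout(FakeGateway("k"), "k", []) == "declined: amount must be positive"
```

The negative and edge cases check the same thing from two angles: an error raised on one side of the interface arrives on the other side as a clean result instead of an unhandled exception.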

Automation creates a safety net that gives developers the freedom to innovate without fear. When you know a solid set of integration tests is there to catch any regressions, you can make changes and add features with much more confidence.

Building a Culture of Continuous Quality

Adopting automation is more than just a technical upgrade—it’s a fundamental shift in culture. The global automation testing market was valued at USD 35.52 billion and is projected to skyrocket to USD 169.33 billion by 2034, which shows just how seriously the industry is taking continuous quality. This isn't just a trend; it's a massive investment in baking quality into every step of the development process.

Modern tools, from simple API clients like Postman to advanced service virtualization platforms, make this possible. They help create stable, predictable environments where you can reliably simulate dependencies for testing. You can even dive into specific techniques, like learning how to automate Android testing, to see this in action.

By embedding automated integration testing right into your CI/CD pipeline, quality stops being a final hurdle and becomes a shared responsibility. This proactive mindset ensures you’re not just building things fast, but building them right from the start.

Best Practices For Effective Integration Testing

Great integration testing isn’t just a matter of running tests; it’s about running the right tests in the right way. Having a solid strategy is what separates a chaotic, bug-hunting scramble from a predictable quality assurance process. Following a few key best practices helps your team sidestep common traps and get the most value out of your testing efforts.

This means looking beyond a simple "can these two modules talk to each other?" check. A truly effective approach validates entire business workflows and the user journeys that matter most. Start by drafting a detailed test plan that prioritizes critical interactions, like a user's path from registration to login or the crucial sequence from adding an item to the cart to completing a checkout.

Isolate Dependencies and Maintain Control

One of the biggest headaches in integration testing is managing external dependencies. Think third-party APIs or even internal services that are still under development. If you rely on these live services, you're setting yourself up for flaky tests—tests that fail because of a network hiccup or downtime on the other end, not because of a bug in your code.

This is exactly why test doubles are so crucial. By using stubs and mocks, you can simulate the behavior of these external components. This gives you a stable, controlled environment where you're in charge.

- Stubs are great for simple interactions, as they just return pre-programmed, predictable responses.

- Mocks are a bit smarter; they let you verify that your application is calling the dependency correctly.

When you isolate the system you're testing, you can be confident that a failed test points to a genuine issue in your integration logic, not just external noise.
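The difference between the two is easier to see side by side. The sketch below uses Python's standard `unittest.mock.Mock` for both roles. The `register_user` function and the email client's `send(to=..., template=...)` signature are assumptions made up for the example.

```python
from unittest.mock import Mock


# Code under test: hypothetical signup logic that sends a welcome email
# through an injected email client.
def register_user(email: str, email_client) -> bool:
    if "@" not in email:
        return False
    response = email_client.send(to=email, template="welcome")
    return response["accepted"]


def test_with_stub() -> None:
    # Stub usage: we only care about the canned response it returns.
    stub = Mock()
    stub.send.return_value = {"accepted": True}
    assert register_user("ada@example.com", stub) is True


def test_with_mock() -> None:
    # Mock usage: we verify HOW the dependency was called.
    mock = Mock()
    mock.send.return_value = {"accepted": True}
    register_user("ada@example.com", mock)
    mock.send.assert_called_once_with(to="ada@example.com", template="welcome")


def test_mock_not_called_for_bad_input() -> None:
    # Invalid input should never reach the external service.
    mock = Mock()
    assert register_user("not-an-email", mock) is False
    mock.send.assert_not_called()
```

The same `Mock` object can serve either purpose. What makes it a stub or a mock in practice is whether the test asserts on the return value or on the calls that were made.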

The core principle is to control your test environment as much as possible. A stable, predictable environment that accurately mirrors production is the foundation for reliable and repeatable integration tests.

Focus on Data and Clear Communication

The quality of your tests is directly tied to the quality of your test data. Always work with clean, realistic data that covers a wide range of scenarios—think valid inputs, invalid formats, and even empty values. Just be sure to avoid using real production data, which can create security risks and lead to unpredictable test outcomes.

Finally, make sure your tests are self-contained. Each test should set up the specific data it needs and then clean up after itself, never leaving a mess for the next test to deal with. This simple discipline prevents cascading failures, where one broken test triggers a chain reaction of failures that makes finding the root cause a nightmare. This is also a key part of validating API interfaces, and you can dive deeper into verifying these connections by reading our guide on what is contract testing.
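A minimal sketch of that set-up-and-clean-up discipline, using Python's standard `unittest` and `tempfile` modules, might look like this. The `save_cart` and `load_cart` functions are hypothetical stand-ins for whatever storage module is being integrated.

```python
import json
import tempfile
import unittest
from pathlib import Path


# Hypothetical module under test: persists a cart to a JSON file.
def save_cart(path: Path, items: list) -> None:
    path.write_text(json.dumps(items))


def load_cart(path: Path) -> list:
    return json.loads(path.read_text()) if path.exists() else []


class CartStorageTest(unittest.TestCase):
    def setUp(self) -> None:
        # Each test gets its own fresh temporary directory.
        self._tmp = tempfile.TemporaryDirectory()
        self.cart_file = Path(self._tmp.name) / "cart.json"

    def tearDown(self) -> None:
        # Remove everything the test created, pass or fail.
        self._tmp.cleanup()

    def test_round_trip(self) -> None:
        save_cart(self.cart_file, ["book", "pen"])
        self.assertEqual(load_cart(self.cart_file), ["book", "pen"])

    def test_starts_empty(self) -> None:
        # Holds in any test order because no state leaks between tests.
        self.assertEqual(load_cart(self.cart_file), [])
```

Because `setUp` and `tearDown` run around every test method, the two tests can execute in either order, or alone, and produce the same result.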

Answering Common Questions About Integration Testing

If you're just getting into integration testing, you probably have a few questions. That's completely normal. Let's walk through some of the most common ones I hear from developers to help clear things up.

How Is Integration Testing Different From Unit Testing?

This is probably the most frequent question, and it's a great one. The easiest way to think about it is with a simple analogy.

Imagine you're building a car. Unit testing is like making sure the engine runs perfectly on its own, sitting on a test bench. You're checking one isolated part.

Integration testing, on the other hand, is when you actually put that engine into the car, connect it to the transmission, hook up the electronics, and see if it all works together to make the wheels turn. It's not about the individual parts anymore; it's about the connections between them.

When Should You Perform Integration Testing?

The short answer is: after unit testing, but before you test the entire system as a whole.

In a modern CI/CD pipeline, this isn't a one-off stage you hit at the end of a sprint. It's a continuous activity. Automated integration tests should kick off every time new code is committed, especially if it changes how modules talk to each other. This gives you fast feedback and helps stop integration bugs before they reach production.

The goal is to catch interaction-based defects early and often. This continuous validation is a cornerstone of agile development, ensuring that new features don't break existing connections between services.

What Are The Biggest Integration Testing Challenges?

Every experienced developer has run into these headaches. They usually boil down to three main culprits:

- Dependency Management: Your tests can become flaky and unreliable when they depend on another team's service or a third-party API that you don't control. If their service goes down, your test fails, even if your code is perfect.

- Environment Parity: It's incredibly difficult—and expensive—to create a staging environment that's a perfect 1-to-1 copy of your production setup. But without it, you can't be fully confident in your test results.

- Complex Debugging: When a test fails across three or four different services, figuring out exactly where the problem started can feel like finding a needle in a haystack.

Managing these challenges, especially unpredictable dependencies, is exactly why a tool like dotMock is so valuable. It lets you create realistic, reliable mock APIs in seconds. This means you can isolate your services for testing, say goodbye to flaky tests caused by external factors, and seriously speed up your development cycle.