Managing Test Cases Effectively with dotMock

If you're still managing test cases with a patchwork of spreadsheets and stray documents, you're setting yourself up for failure. I've seen it happen time and again—this kind of ad-hoc system inevitably leads to brittle test suites, duplicated work, and flaky tests that become a massive drain on your engineering team.

A structured, centralized system isn't just a nice-to-have anymore. For any team serious about shipping reliable software, it's a core requirement.

Why Your Test Case Management Needs an Overhaul

Let's be real for a moment. Disorganized testing creates far more problems than it ever solves. When your tests are buried in outdated spreadsheets or scattered across random folders, the pain radiates through the entire development lifecycle. The most obvious hit is just how inefficient it is. Engineers burn precious hours hunting for the right test, trying to make sense of cryptic notes, or—worst of all—rebuilding tests that someone else already wrote.

This chaos is a direct cause of a fragile and untrustworthy test suite. A small change in the application can suddenly break dozens of poorly documented tests, trapping your team in a frustrating cycle of constantly fixing tests instead of building new features. This isn't a minor annoyance; it’s a direct hit to your team's velocity.

Moving Past the Chaos

Adopting a structured approach isn’t about being neat for the sake of it. It's about building a foundation of confidence and speed. Once your process for managing test cases is clear, centralized, and repeatable, you start to see real benefits almost immediately.

- Faster Release Cycles: Your team spends less time fighting with test maintenance and more time actually delivering value to users.

- Higher Software Quality: A well-organized test suite gives you much better coverage, which means you catch bugs way earlier in the game.

- Smoother Team Collaboration: Developers and QA finally have a single source of truth to work from, which cuts out a ton of confusion and miscommunication.

A huge part of this evolution is making sure your test cases are grounded in clear and effective acceptance criteria. This defines what success actually looks like and what really needs to be tested.

Good test case management isn't just a QA responsibility. It's a core engineering practice that improves the entire team's speed and confidence, from the first line of code to the final deployment.

The Growing Need for Structure

This move toward structured testing isn't just a trend; it's a fundamental shift in how high-performing teams operate. The numbers back this up. The global Test Management Software Market was valued at USD 1.2 billion in 2024 and is expected to more than double to USD 2.77 billion by 2032, all driven by this critical need for centralized tools.

It’s clear that more and more organizations are looking for better solutions. Bringing in a modern platform like dotMock is how teams can finally get a handle on flaky tests and start building a truly resilient testing culture.

How to Design Scalable API Test Cases in dotMock

If you want to manage your API test cases without losing your mind, you need to design them for scalability right from the start. A test suite that’s easy to navigate is one your team will actually use and maintain. It's about moving beyond generic placeholders and building a structure that can grow cleanly alongside your application.

Let's walk through a real-world example I see all the time: a user authentication API. This single feature touches multiple endpoints and has tons of different scenarios, which makes it a perfect example for showing how to design tests properly. We'll cover everything from a successful login to what happens when a user forgets their password.

Start with Clear Naming and Organization

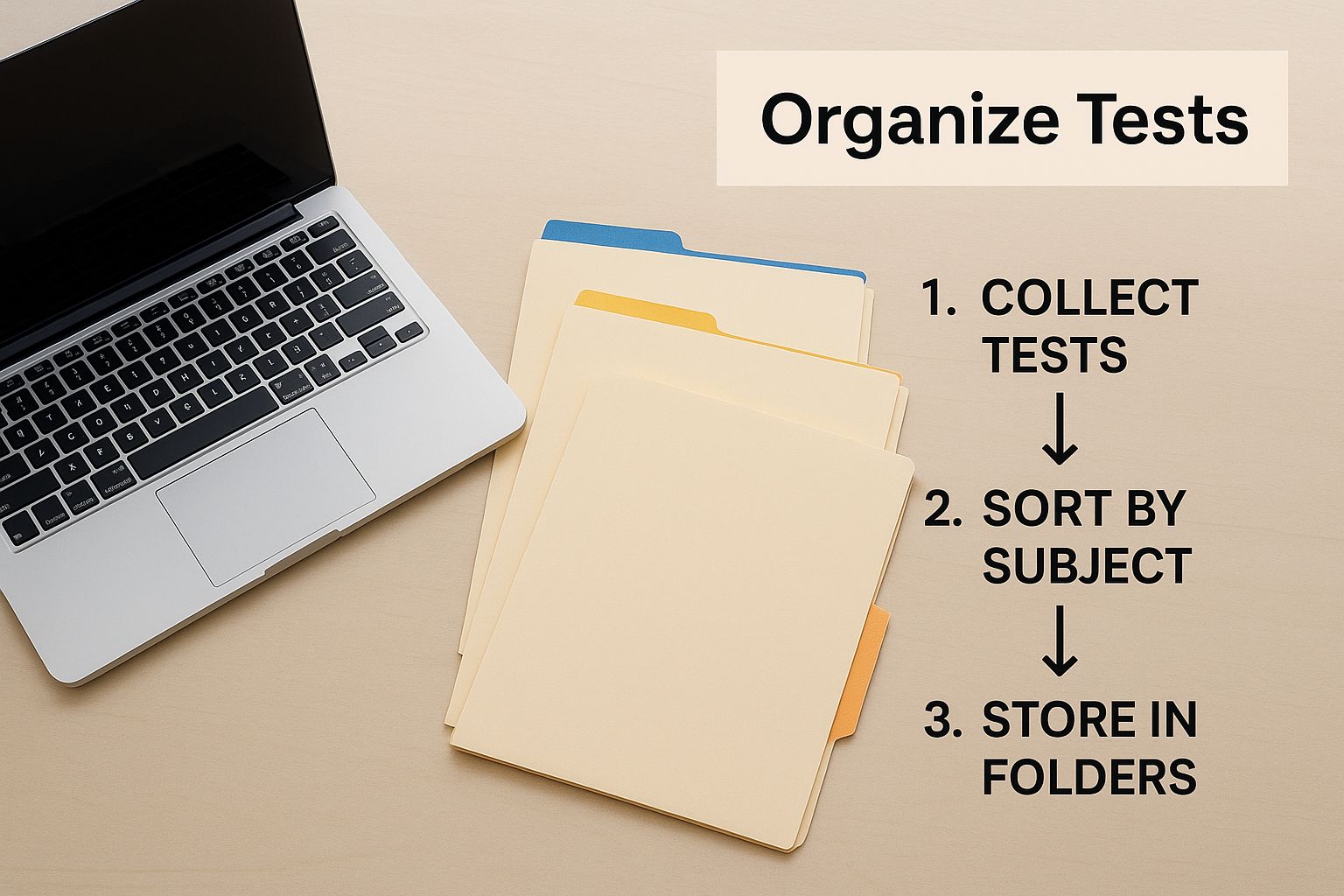

Your first move is to set up a logical folder structure and a consistent naming convention. I know it sounds basic, but this is the bedrock of a scalable test suite. Without it, you’ll quickly end up with a tangled mess that nobody wants to touch.

In dotMock, I always start by creating a top-level folder for each major feature or service. For our example, let's create a folder named User Authentication. Inside that, you can create sub-folders for each endpoint, like /login, /register, and /forgot-password.

A good naming convention should be descriptive enough that you can understand the test at a glance. I'm a fan of this format:

- [HTTP Method]_[Endpoint]_[Outcome]_[Scenario]

This translates into practical test names like:

- POST_Login_Success_ValidCredentials

- POST_Login_Failure_InvalidPassword

- POST_Register_Success_NewUser

- POST_Register_Failure_EmailAlreadyExists

With a structure like this, anyone on the team instantly knows what the test does, which endpoint it hits, and the expected outcome. Your test suite essentially becomes self-documenting.

Think of it like a well-organized filing cabinet. When everything has a clear label and a logical place, finding what you need is effortless.

This simple habit of organization ensures your test suite stays manageable and intuitive, even as it balloons in size.
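To make the convention concrete, here's a minimal Python sketch that builds and checks names in this style. The helper names (build_test_name, is_valid_test_name) and the exact pattern are my own assumptions for illustration; they are not something dotMock provides.

```python
import re

# Hypothetical helpers for illustration only; not part of dotMock.
HTTP_METHODS = {"GET", "POST", "PUT", "PATCH", "DELETE"}

# METHOD, then at least two capitalized parts (endpoint plus scenario),
# all joined by underscores.
NAME_PATTERN = re.compile(
    r"^(GET|POST|PUT|PATCH|DELETE)_[A-Z][A-Za-z0-9]*(_[A-Z][A-Za-z0-9]*)+$"
)


def build_test_name(method: str, endpoint: str, *scenario: str) -> str:
    """Join the method, endpoint, and scenario parts with underscores."""
    method = method.upper()
    if method not in HTTP_METHODS:
        raise ValueError(f"unsupported HTTP method: {method}")
    if not scenario:
        raise ValueError("at least one scenario part is required")
    return "_".join([method, endpoint, *scenario])


def is_valid_test_name(name: str) -> bool:
    """Check whether a name follows the convention sketched above."""
    return NAME_PATTERN.fullmatch(name) is not None


login_name = build_test_name("post", "Login", "Success", "ValidCredentials")
```

A check like is_valid_test_name could run in a review script so that badly named tests get flagged before they pile up.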

Break Down Tests into Atomic Units

A rookie mistake I often see is creating huge, monolithic tests that try to validate way too much at once. A much smarter approach is to break down complex API interactions into small, atomic test cases. Each one should verify a single piece of functionality. This makes them far easier to debug, reuse, and maintain down the line.

Every single test case should have three distinct parts:

- Preconditions: What state does the system need to be in before the test runs? (e.g., "A user with email '[email protected]' must already exist in the database.")

- Steps: The exact action being performed. (e.g., "Send a POST request to /login with valid credentials.")

- Expected Outcomes: The specific, verifiable results. (e.g., "The API must return a 200 OK status code and a valid JWT token in the response body.")

By breaking down tests this way, you’re not just writing tests—you’re creating a library of reusable building blocks. For instance, a test for a successful login can become a precondition for another test that checks a feature only available to authenticated users.

This modular approach becomes incredibly valuable when managing test cases for a REST API. If you're looking for more on this, our guide on how to test REST APIs really gets into the weeds on these foundational principles.

Ultimately, this framework ensures that when a test fails, you know exactly which piece of logic broke. You won't have to spend hours untangling a complex, multi-step script. It’s a small shift in thinking that pays massive dividends.

Here's an example of how you might structure these test cases for our user authentication API.

dotMock Test Case Structure for a User Auth API

| Test Case ID | Test Case Name | Description | Priority |
|---|---|---|---|
| UA-001 | Login_Success_ValidCredentials | Verify a user can log in with a correct email and password. | High |
| UA-002 | Login_Failure_InvalidPassword | Verify a user cannot log in with a valid email but an incorrect password. | High |
| UA-003 | Login_Failure_NonexistentUser | Verify a login attempt fails when the user email does not exist. | Medium |
| UA-004 | Register_Success_NewUser | Verify a new user can successfully register with a unique email. | High |
| UA-005 | Register_Failure_EmailExists | Verify registration fails if the email address is already in use. | Medium |
| UA-006 | ForgotPassword_Success_ValidEmail | Verify a password reset link is sent when a valid email is provided. | Medium |
| UA-007 | ForgotPassword_Failure_InvalidEmail | Verify the system handles a password reset request for a non-existent email. | Low |

As you can see, this structure provides a clear, at-a-glance overview of your test coverage, making it easy for anyone on the team to understand the scope and priority of your API tests.
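If it helps to see the preconditions, steps, and expected outcomes as data, here's a rough Python sketch of UA-001 from the table. The ApiTestCase class and its is_atomic rule are hypothetical and only show the shape of an atomic test case; dotMock's own representation may look quite different.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ApiTestCase:
    """Illustrative model of one atomic test case (not a dotMock type)."""

    case_id: str
    name: str
    priority: str
    preconditions: tuple[str, ...]
    steps: tuple[str, ...]
    expected: tuple[str, ...]

    def is_atomic(self) -> bool:
        # Assumption: "atomic" here means exactly one action under test.
        return len(self.steps) == 1


login_success = ApiTestCase(
    case_id="UA-001",
    name="Login_Success_ValidCredentials",
    priority="High",
    preconditions=("A registered user already exists in the database.",),
    steps=("Send a POST request to /login with valid credentials.",),
    expected=(
        "The API returns a 200 OK status code.",
        "The response body contains a valid JWT.",
    ),
)
```

Keeping each case to a single step is what makes a failure point at one piece of logic instead of a whole script.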

Keeping Your Test Suites in Sync: Collaboration and Versioning

Let's be honest: a static test suite is a dead test suite. For your tests to have any real value, they need to evolve right alongside your codebase. This is where a major shift in mindset happens—you start treating your test cases like you treat your code, with proper versioning and clear, collaborative workflows.

If your tests don't reflect the current state of your application, they're not just useless; they're dangerous. They create a false sense of security that can lead to major headaches down the road.

In dotMock, we treat test suites as living, breathing assets. It’s like having Git, but for your tests. When a developer pushes a change to an API endpoint, the tests for that endpoint need to be updated. Versioning lets you track every single one of those changes, see who modified what, and even roll back to a previous state if a new test starts causing problems. You get a clear, auditable history of your entire testing effort.

This becomes absolutely critical when you're juggling different environments. The tests you run against staging might be slightly different from what you run in production. Maybe they point to different mock data or cover features that haven't been released yet. Versioning lets you maintain separate branches of your test suite for each environment, all without duplicating your work.

Building a Collaborative Testing Culture

Managing tests effectively isn't a solo mission. It demands a workflow where developers and QA engineers can jump in and contribute without friction. The whole point is to tear down those old silos where developers write code and just toss it over the wall to QA. A shared platform becomes the central source of truth where everyone meets.

When everything is centralized, everyone has visibility. A developer can build a new test case for a feature they’re working on. Right after, a QA engineer can review it, refine it, and add more complex scenarios. This kind of shared ownership makes the feedback loop incredibly fast.

As your project grows, staying organized is key. This is where you need to get smart with organizational tools like tags and custom fields. Trust me, they will become your best friends for filtering and categorizing tests.

- By Feature: Tag tests with things like auth, payment-gateway, or user-profile to group them by application functionality.

- By Status: Use tags like in-progress, needs-review, or automated to see where each test case is in its lifecycle.

- By Environment: Apply tags such as staging-only or production-safe to make it obvious which tests are meant for specific deployment targets.

A well-tagged test suite is an easily searchable one. Instead of digging through endless folders, your team can instantly pull up all high-priority tests for the payment gateway that are ready for review. This simple practice makes a huge difference in day-to-day efficiency.
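Here's a small Python sketch of what that kind of tag-based lookup amounts to. The TaggedTest class, the select function, and the sample test names are all made up for illustration; in practice you'd use dotMock's own filtering rather than code like this.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class TaggedTest:
    """Illustrative record for a tagged test (not a dotMock type)."""

    name: str
    priority: str
    tags: frozenset[str]


def select(tests, required_tags, priority=None):
    """Return names of tests that carry every required tag.

    If a priority is given, only tests with that priority are kept.
    """
    required = set(required_tags)
    return [
        t.name
        for t in tests
        if required <= t.tags and (priority is None or t.priority == priority)
    ]


suite = [
    TaggedTest("POST_Login_Success_ValidCredentials", "High",
               frozenset({"auth", "automated"})),
    TaggedTest("POST_Charge_Failure_CardDeclined", "High",
               frozenset({"payment-gateway", "needs-review"})),
    TaggedTest("POST_Charge_Success_ValidCard", "Low",
               frozenset({"payment-gateway", "needs-review"})),
]

ready_for_review = select(
    suite, {"payment-gateway", "needs-review"}, priority="High"
)
```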

Ultimately, this collaborative approach turns your test suite from a simple checklist into a dynamic knowledge base that actually reflects the state of your application. You can see how to put this into practice by checking out dotMock's collaborative capabilities in our documentation. This shared understanding is how you build a real culture of quality.

Simulating Edge Cases and Real-World Scenarios

Good testing is more than just checking the "happy path." If you want to build truly resilient software, you have to actively hunt down those tricky, hard-to-replicate scenarios that are famous for causing production outages. This is where a powerful mocking tool like dotMock completely changes the game. It helps you shift your entire quality mindset from being reactive to proactive.

Instead of waiting for a real-world dependency to fail—and it will—you can simulate those exact issues on your own terms. This lets you test your application’s resilience in a controlled environment, long before your users ever see the problem. It’s all about asking the tough "what if" questions and getting real, concrete answers.

Crafting Realistic Failure Scenarios

The most dependable applications are the ones built to handle a bit of chaos. With dotMock, you can design test cases that mimic specific, real-world failure modes. We're not talking about generic error testing here; this is about targeted simulations designed to put pressure on your system's known weak points.

Think about a critical third-party payment gateway your service depends on. What happens if it suddenly goes down during peak hours? You can create a mock that returns a 503 Service Unavailable response, letting you see exactly how your application copes. Does it retry the request? Does it queue the transaction for later? Does it show a clear, helpful error message to the user?

Here are a few essential scenarios you should be simulating:

- API Rate Limiting: Set up a mock to return a 429 Too Many Requests error after a specific number of calls. This is a good way to confirm that your client backs off and retries sensibly when an upstream service throttles it, instead of hammering the dependency.

- Authentication Failures: What if a user's token expires? Simulate this by having a mock return a 401 Unauthorized response. This helps you confirm that your app correctly redirects users to a login page or prompts them to re-authenticate.

- Server-Side Errors: Test how your application handles unexpected backend issues by mocking a generic 500 Internal Server Error. This is key to making sure your frontend doesn’t crash or, even worse, expose a sensitive stack trace to the user.

The goal here is to move beyond just checking for a 200 OK. Real resilience comes from proving your system can gracefully handle the 401s, 429s, and 503s that are just a fact of life in any distributed system.
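To show the idea behind the rate-limiting scenario without relying on any particular tool, here's a sketch using only Python's standard library. It is a stand-in stub, not dotMock: the handler, the /payments path, and the limit of two calls are all assumptions made for the example.

```python
import threading
import urllib.error
import urllib.request
from http.server import BaseHTTPRequestHandler, HTTPServer


def make_rate_limited_handler(allowed_calls):
    """Build a handler that answers 200 for the first N calls, then 429."""
    state = {"calls": 0}
    lock = threading.Lock()

    class Handler(BaseHTTPRequestHandler):
        def do_GET(self):
            with lock:
                state["calls"] += 1
                over_limit = state["calls"] > allowed_calls
            if over_limit:
                self.send_response(429)
                self.send_header("Retry-After", "1")
                self.send_header("Content-Length", "0")
                self.end_headers()
            else:
                body = b'{"status": "ok"}'
                self.send_response(200)
                self.send_header("Content-Type", "application/json")
                self.send_header("Content-Length", str(len(body)))
                self.end_headers()
                self.wfile.write(body)

        def log_message(self, *args):
            pass  # keep the demo output quiet

    return Handler


# Ignore any proxy settings so requests to localhost stay local.
_OPENER = urllib.request.build_opener(urllib.request.ProxyHandler({}))


def fetch_status(url):
    """Return the HTTP status code, including error statuses."""
    try:
        with _OPENER.open(url, timeout=2) as response:
            return response.status
    except urllib.error.HTTPError as err:
        return err.code


def run_demo():
    """Start the stub on a free port and call it three times."""
    server = HTTPServer(("127.0.0.1", 0), make_rate_limited_handler(2))
    threading.Thread(target=server.serve_forever, daemon=True).start()
    try:
        url = f"http://127.0.0.1:{server.server_port}/payments"
        return [fetch_status(url) for _ in range(3)]
    finally:
        server.shutdown()
        server.server_close()
```

In a real test you'd point your application at the stub and assert on what it does after the third call, such as whether it honors Retry-After before trying again.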

Simulating Unreliable Network Conditions

Let's be honest: in the real world, network connections are far from perfect. Users on mobile devices deal with spotty signals, and services can suffer from high latency. If you're only running tests on a pristine, lightning-fast local network, you're blind to a whole category of potential bugs.

dotMock gives you the power to simulate these poor network conditions with surprising precision. You can introduce artificial delays into your mock API responses to see how your application holds up. For instance, set a mock to take five seconds to respond. Does your application’s timeout fire correctly, or does the UI just freeze up, leaving the user hanging?

This is absolutely crucial for finding performance bottlenecks and making the user experience better. By simulating latency, you can validate your loading states, test cancellation logic, and ensure your app stays responsive even when its dependencies are slow. Taking this kind of proactive approach to network issues helps you find and fix bugs before they ever have a chance to frustrate a real person.
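The same standard-library approach can illustrate the latency scenario. This is again a stand-in stub and not dotMock itself: the /profile path is made up, and the delay is scaled down from five seconds to a fraction of a second so the example runs quickly.

```python
import socket
import threading
import time
import urllib.error
import urllib.request
from http.server import BaseHTTPRequestHandler, HTTPServer

DELAY_SECONDS = 0.3  # scaled-down stand-in for a five-second delay


class SlowHandler(BaseHTTPRequestHandler):
    """Stub dependency that waits before answering."""

    def do_GET(self):
        time.sleep(DELAY_SECONDS)
        body = b'{"status": "ok"}'
        try:
            self.send_response(200)
            self.send_header("Content-Length", str(len(body)))
            self.end_headers()
            self.wfile.write(body)
        except OSError:
            pass  # the client gave up and closed the connection

    def log_message(self, *args):
        pass  # keep the demo output quiet


# Ignore any proxy settings so requests to localhost stay local.
_OPENER = urllib.request.build_opener(urllib.request.ProxyHandler({}))


def call_dependency(url, timeout_s):
    """Return "ok" on success or "timeout" if the call could not complete in time."""
    try:
        with _OPENER.open(url, timeout=timeout_s) as response:
            response.read()
            return "ok"
    except (socket.timeout, urllib.error.URLError):
        return "timeout"


def run_demo():
    """Call the slow stub once with a generous and once with a tight timeout."""
    server = HTTPServer(("127.0.0.1", 0), SlowHandler)
    threading.Thread(target=server.serve_forever, daemon=True).start()
    try:
        url = f"http://127.0.0.1:{server.server_port}/profile"
        patient = call_dependency(url, timeout_s=2.0)
        impatient = call_dependency(url, timeout_s=0.05)
        return patient, impatient
    finally:
        server.shutdown()
        server.server_close()
```

The interesting part for your own tests is what happens on the "timeout" branch: whether the UI shows a loading state, cancels cleanly, or retries.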

Bringing Your API Tests into the CI/CD Pipeline

Designing great API tests is one thing, but the real magic happens when you weave them into your automation. Running test suites by hand is a decent starting point, but integrating them directly into a CI/CD pipeline is how you achieve genuine development speed and rock-solid confidence. Your tests stop being a manual chore and become an automated quality gate, actively protecting your codebase around the clock.

The whole point is to make API testing an unavoidable, automatic part of your development process. When your test suite runs on every single commit or pull request, you give developers immediate, clear feedback. This approach to managing test cases as part of the pipeline means regressions get caught the moment they happen, stopping bad code from ever touching your main branch.

This isn't just a fleeting trend; it’s a core practice for modern software teams. In fact, automation has already made a huge dent in manual testing. Recent figures show that nearly 46% of development teams have automated at least half of their manual test load, and an impressive 20% have automated over 75% of it. Teams are moving this way for obvious reasons: it's faster, more accurate, and saves a ton of money in the long run. You can dig deeper into the rise of test automation and its impact.

Setting Up a GitHub Actions Workflow

Let’s get practical with an example using GitHub Actions, which is a fantastic and widely used tool for this job. Our goal is to set up a simple workflow that automatically grabs the latest code, prepares the environment, and then unleashes our dotMock test suite on it.

First, you’ll need to create a YAML file inside the .github/workflows/ directory of your repository. This file is the blueprint that tells GitHub Actions what to do and when to do it.

Here’s a simple workflow configured to run on every new pull request:

    name: API Tests

    on:
      pull_request:
        branches: [ main ]

    jobs:
      run-api-tests:
        runs-on: ubuntu-latest
        steps:
          - name: Check out repository code
            uses: actions/checkout@v3

          - name: Run dotMock API Tests
            run: |
              # This is where you'd put the command to run your dotMock suite.
              # It could be a CLI command or a simple script.
              # For example: dotmock-cli run --suite=auth-api-tests

With this in place, any pull request aimed at the main branch will automatically trigger your dotMock tests. It's that straightforward.

Using Test Results to Make Deployment Decisions

The true value of this setup is in what you do with the results. If a test run fails, it should immediately block that pull request from being merged. This creates a powerful, automated barrier that prevents bugs from sneaking into your production code.

Your CI/CD pipeline becomes the ultimate gatekeeper. A "green" build means the changes are safe to merge and deploy. A "red" build is an immediate, unambiguous signal that something is broken and needs to be fixed before proceeding.

This turns complex go/no-go deployment decisions into a simple, binary outcome. The pipeline's status becomes the single source of truth for code quality. By automating this crucial checkpoint, you liberate your team from the grind of manual verification and empower them to ship features faster and with much more confidence.
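The binary gate can be as simple as a script that turns test results into an exit code, since CI systems treat a non-zero exit as a failed step. Here's a minimal Python sketch; the JSON shape (a list of objects with name and status fields) is an assumption for the example and not a documented dotMock output format.

```python
import json
import sys


def gate(results_json):
    """Return a process exit code: 0 if every test passed, 1 otherwise.

    An empty result set also fails, on the assumption that "no tests ran"
    should never count as a green build.
    """
    results = json.loads(results_json)
    if not results:
        print("No test results found", file=sys.stderr)
        return 1
    failed = [r["name"] for r in results if r["status"] != "passed"]
    for name in failed:
        print(f"FAILED: {name}", file=sys.stderr)
    return 1 if failed else 0


green = gate('[{"name": "POST_Login_Success_ValidCredentials", "status": "passed"}]')
red = gate(
    '[{"name": "POST_Login_Success_ValidCredentials", "status": "passed"},'
    ' {"name": "POST_Login_Failure_InvalidPassword", "status": "failed"}]'
)
```

In a pipeline step you would pass the return value to sys.exit so the build goes red whenever a test fails.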

Once you have this foundation, you can make your automated checks even more powerful with techniques like data-driven testing, which we cover in our detailed guide.

Turning Test Results into Actionable Insights

Getting a clean test run with all green checkmarks is a great feeling, but let's be honest—the real learning happens when things break. A failing test isn't just another bug ticket; it's a bright, flashing sign pointing you to exactly where your application is vulnerable. This is where the rubber meets the road in managing test cases: turning raw pass/fail data into a clear plan for making things better.

Tools like dotMock help you go beyond simple metrics. The reporting isn't just about what failed, but why. You can quickly figure out if a bug is due to a simple logic error, a broken API contract, or some weird environmental hiccup. That alone can slash the time you spend just figuring out where to start looking.

Diagnosing the Root Cause of Failures

When a test blows up, the last thing you want to do is start guessing. Was it a real regression? A flaky test? Did the test environment just have a bad day? Your first move should always be to dive into the execution logs and response data dotMock provides.

Start by looking for patterns. If a bunch of tests tied to a single endpoint all start failing right after a new feature is merged, you've got a pretty solid clue that a regression was introduced in that module. It’s less about guesswork and more about solid detective work.

A flaky test—one that passes and fails randomly without any code changes—is pure poison for team morale. If your team can't trust the tests, they'll start ignoring them. Make it a top priority to hunt down and fix these flaky tests to keep confidence high in your quality gates.
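One simple way to spot flaky tests is to look for a test that both passed and failed against the same commit, since the code did not change between those runs. The Python sketch below assumes you can export run history as (test name, commit, passed) tuples; that format and the find_flaky helper are hypothetical.

```python
from collections import defaultdict


def find_flaky(runs):
    """Return sorted names of tests with mixed results on a single commit.

    `runs` is an iterable of (test_name, commit, passed) tuples.
    """
    outcomes = defaultdict(set)
    for test_name, commit, passed in runs:
        outcomes[(test_name, commit)].add(passed)
    return sorted(
        {name for (name, _commit), seen in outcomes.items() if len(seen) == 2}
    )


history = [
    ("POST_Login_Success_ValidCredentials", "abc123", True),
    ("POST_Login_Success_ValidCredentials", "abc123", False),  # same commit, different result
    ("POST_Register_Success_NewUser", "abc123", True),
    ("POST_Register_Success_NewUser", "def456", False),  # failed only after a code change
]

flaky = find_flaky(history)
```

The second test is not reported because its failure lines up with a new commit, which looks more like a regression than flakiness.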

Identifying Performance Bottlenecks

Don't forget that your test results are also a treasure trove of performance data. You should be paying close attention to how long each test case takes to run. A sudden jump in the response time for a specific API endpoint is often the first warning sign of a hidden performance bottleneck.

- Track Execution Times: Keep an eye on the duration of your most important API tests over time. If you see a slow, steady increase, it could mean you have a scalability problem brewing long before your users ever notice.

- Analyze Slow Endpoints: Isolate the tests that consistently lag behind the others. These are often indicators of inefficient database queries or business logic that could use a good refactoring.

This kind of proactive monitoring helps you keep your application fast and responsive, which is something every user appreciates.
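As a rough sketch of what tracking execution times can look like, the Python below compares the latest duration of a test with the median of the runs just before it. The slowed_down helper, the five-run baseline, and the 1.5x threshold are arbitrary assumptions you'd tune for your own suite.

```python
from statistics import median


def slowed_down(durations_ms, baseline_runs=5, threshold=1.5):
    """Flag the latest run if it is much slower than the recent baseline.

    `durations_ms` is ordered oldest to newest. Returns False when there
    is not enough history to form a baseline.
    """
    if len(durations_ms) <= baseline_runs:
        return False
    baseline = median(durations_ms[-baseline_runs - 1:-1])
    return durations_ms[-1] > baseline * threshold


login_history = [100, 110, 105, 98, 102, 240]
steady_history = [100, 110, 105, 98, 102, 120]

login_regressed = slowed_down(login_history)
steady_regressed = slowed_down(steady_history)
```

Using the median instead of the mean keeps one unusually slow run in the baseline from hiding a real slowdown.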

There's a reason businesses are pouring money into solid testing practices. The global software testing market is expected to reach a staggering $97.3 billion by 2032. It's not uncommon to see big companies dedicating huge chunks of their budget to quality, with 40% spending over a quarter of their IT funds on testing alone. If you want to dive deeper, you can check out some more trends in software testing statistics.

This massive investment really highlights how much value companies place on building reliable, high-performing software. When you turn your test results into real insights, you complete that crucial feedback loop and make your entire testing process a genuine driver of product quality.

Got Questions? We’ve Got Answers.

As you start weaving dotMock into your testing workflow, a few common questions tend to pop up. Let's tackle some of the practical hurdles you might face and how to clear them.

How Do I Keep Tests Clean as My API Evolves?

This is a big one. As your API grows and versions change, your test suites can get messy fast if you're not careful. The trick is to have your test organization mirror your API's structure.

Think of it this way: if you have /v1 and /v2 endpoints, create separate test suites or folders in dotMock for each one, like /v1/tests and /v2/tests. This keeps everything neatly isolated.

I also recommend tagging your tests with something like api-v1 or api-v2. When it's time to launch a new version, just clone the previous suite. You get a solid baseline instantly, and you can then focus on tweaking, adding, or removing tests to match the new API contract. This way, you keep your old tests intact for legacy support while building out the new ones efficiently.

For shared data—things like user credentials or standard payloads—lean heavily on dotMock's environment variables. Storing static data like base URLs and API keys there saves you from the nightmare of hardcoding values. It makes your tests cleaner and way more portable across different environments (like staging vs. production).

My golden rule: Never hardcode data directly into a test. It makes tests brittle and a pain to update. Use environment variables for static data and response chaining for dynamic stuff like auth tokens. Your future self will thank you.
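Here's a small Python sketch of that rule outside of any specific tool. The variable names API_BASE_URL and API_KEY, and the token field in the login response, are assumptions for the example; use whatever names your environments and API actually define.

```python
import json
import os


def load_config(env=os.environ):
    """Read static settings from the environment instead of hardcoding them."""
    return {
        "base_url": env["API_BASE_URL"].rstrip("/"),
        "api_key": env["API_KEY"],
    }


def auth_headers_from_login(login_body):
    """Chain a response: pull the token out of a login response body
    and turn it into headers for the next request."""
    token = json.loads(login_body)["token"]
    return {"Authorization": f"Bearer {token}"}


# Example with stand-in values; real values would come from the environment
# and from an actual login response.
staging = load_config({"API_BASE_URL": "https://staging.example.com/", "API_KEY": "demo-key"})
headers = auth_headers_from_login('{"token": "abc.def.ghi"}')
profile_url = f"{staging['base_url']}/v1/profile"
```

Switching from staging to production then means changing environment values, not editing tests.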

Can I Use dotMock for Performance Testing, or Just Functional?

Great question. While dotMock’s sweet spot is definitely functional and integration testing, it's also a powerful ally for performance testing.

Here’s how: you use it to create stable, predictable mocks of all your external services. This is a game-changer because it lets you isolate the one component you actually want to test.

By making sure all those third-party dependencies respond with consistent times, you get accurate performance metrics for your own service without outside noise. You can even simulate tricky network conditions, like high latency or slow responses, to see how your app holds up under pressure. For a full-blown load test, you'd pair dotMock with a dedicated tool, but for isolating performance bottlenecks, it's invaluable.
