Automated Interface Testing: Boost Quality & Speed

So, what exactly is automated interface testing? Imagine you have a robot that can act just like a real user on your website or app. This robot clicks buttons, types in forms, and checks that everything on the screen looks and works exactly as it should. That’s essentially what we're doing here—using special software tools to automatically run these checks instead of having a person do it all by hand.

This approach is a game-changer for ensuring your application is consistent, fast, and works perfectly across a jungle of different devices and browsers.

Why Automated Interface Testing Is Essential

In today’s software world, speed and quality aren’t just nice-to-haves; they’re the bare minimum. Teams pushing code multiple times a day in a CI/CD (Continuous Integration/Continuous Delivery) pipeline simply don't have time for manual checks. Every tiny code update could accidentally break a button or mess up the page layout.

Automated interface testing is the safety net that catches these visual bugs and broken features almost instantly. It systematically checks the part of your application that users actually see and touch. This is a world away from manual testing, which, while useful for exploring new features, is too slow and error-prone, and too hard to scale, for the kind of thorough regression testing modern apps demand.

The Core Focus of Automation

The main goal here is to confirm the UI works just as a user would expect it to. We're not worried about the back-end code or server logic; that's a job for API testing. If you want to learn more about that, our guide to API testing is a great place to start.

Instead, automated interface tests tackle the real, practical questions that define the user experience:

- Can a user log in with the right password but get blocked with the wrong one?

- When someone clicks "Add to Cart," does the product actually show up in their cart?

- Does the homepage look right on Chrome, Firefox, and Safari, whether on a big desktop screen or a small phone?

- Do all the dropdown menus and navigation links work correctly?

Manual vs Automated Approaches

Both manual and automated testing aim to find bugs, but they go about it in completely different ways. Manual testing involves a real person sitting down, following a script (or just exploring), and physically interacting with the app. It's great for spotting weird usability quirks that an automated script might not notice.

Automated testing, on the other hand, is built for repetition. It can run thousands of detailed checks with perfect precision, every single time, without getting tired or bored. This is how you get broad test coverage and lightning-fast feedback.

This difference is key. Automation isn't here to replace human testers. It’s here to free them up. By letting scripts handle the tedious and repetitive work, your QA team can focus on the tricky, creative, and high-value testing that truly requires a human touch. The end result is a faster, more reliable process for ensuring quality.

The Building Blocks of a Strong Testing Strategy

To get automated interface testing right, you need a solid foundation. You can’t just jump in and start writing code. Much like building a house, you need a blueprint, the right materials, and a set of tools that all work together. A great automation strategy is built on several key components, each with its own job, but all interconnected to form a powerful, cohesive system.

Think of it as a specialized crew working on an assembly line. Each member has a specific task, and when they all do their part, the final product is flawless. Let's meet the crew.

The Framework Is Your Blueprint

First up, you have the Test Automation Framework. This isn’t a single tool you can download; it's the entire structure—the set of rules, guidelines, and best practices that shape your whole testing process. It's the blueprint for your operation, making sure everything is done consistently and efficiently.

A well-designed framework gives your team a standard way to write tests, manage data, and report results. This makes your entire test suite much easier to maintain and scale as your application grows. Without one, you're heading for chaos—just a messy pile of scripts that will quickly break and become useless.

Test Scripts Are the Instructions

Next, we have the Test Scripts. These are the heart and soul of automated interface testing. Think of them as the specific, line-by-line instructions you give your digital inspector, telling it exactly what to do. Each script is designed to walk through a particular user action or workflow.

For example, a simple login script might have these steps:

- Navigate to the login page.

- Enter a username and password.

- Click the "Login" button.

- Check that the user's dashboard loads correctly.

These scripts are the individual tasks on your assembly line. To be effective, they need to be clear, concise, and focused on a single goal. Engineers often use powerful tools like Selenium, Cypress, or Playwright to write these scripts, which can interact with a web browser just like a real person would.
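The four login steps above can be sketched in code. This is a minimal illustration only: `FakeLoginPage`, its methods, and the sample account are hypothetical stand-ins invented here so the example runs without a browser. A real script would issue the equivalent calls through Selenium, Cypress, or Playwright.

```python
class FakeLoginPage:
    """Hypothetical in-memory stand-in for a browser page (not a real driver API)."""

    # Illustrative account data, assumed for this sketch only.
    _ACCOUNTS = {"alice": "s3cret"}

    def __init__(self):
        self.url = None
        self.fields = {}
        self.heading = None

    def goto(self, url):
        self.url = url

    def fill(self, field, value):
        self.fields[field] = value

    def click_login(self):
        user = self.fields.get("username")
        expected = self._ACCOUNTS.get(user)
        if expected is not None and expected == self.fields.get("password"):
            self.url = "/dashboard"
            self.heading = f"Welcome, {user}"
        else:
            # Stay on the login page and show an error.
            self.heading = "Invalid credentials"


def test_login_shows_dashboard():
    page = FakeLoginPage()
    page.goto("/login")                # 1. Navigate to the login page.
    page.fill("username", "alice")     # 2. Enter a username and password.
    page.fill("password", "s3cret")
    page.click_login()                 # 3. Click the "Login" button.
    assert page.url == "/dashboard"    # 4. Check that the dashboard loaded.
    assert page.heading == "Welcome, alice"


test_login_shows_dashboard()
```

Note that the script does one thing: it walks a single workflow and ends with a check, which is what makes a failure easy to interpret.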

Test Data Fuels the Engine

A script can’t run on its own; it needs raw material to work with. That's where Test Data comes in. This is all the sample information—like valid usernames, incorrect passwords, product names, or even fake credit card numbers—that your scripts use when they run.

For a login test, the test data would include a set of valid credentials to check for a successful login and a set of invalid credentials to confirm an error message appears. Good test data management is critical for covering different scenarios and edge cases.

This part is absolutely crucial because it lets you see how the application behaves with all sorts of different inputs. By feeding your scripts different data sets, you can test a huge range of conditions without having to write a brand-new script for every single variation.
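One common way to feed a single script many data sets is a table of cases. The sketch below uses Python's standard `unittest` module; `attempt_login` and the credentials are hypothetical placeholders for whatever actually drives your login form.

```python
import unittest


def attempt_login(username, password):
    """Hypothetical stand-in for submitting the login form; returns the message shown."""
    accounts = {"alice": "s3cret"}  # illustrative data only
    if username in accounts and accounts[username] == password:
        return "Welcome"
    return "Invalid credentials"


# Each row is one scenario: (username, password, expected message).
LOGIN_CASES = [
    ("alice", "s3cret", "Welcome"),
    ("alice", "wrong", "Invalid credentials"),
    ("", "", "Invalid credentials"),
    ("mallory", "s3cret", "Invalid credentials"),
]


class LoginDataDrivenTest(unittest.TestCase):
    def test_login_cases(self):
        for username, password, expected in LOGIN_CASES:
            # subTest reports each failing data row separately.
            with self.subTest(username=username, password=password):
                self.assertEqual(attempt_login(username, password), expected)
```

Adding a new scenario then means adding a row to `LOGIN_CASES`, not writing another script.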

The Test Runner Executes the Plan

Finally, the Test Runner is the engine that brings it all to life. It takes the framework, scripts, and data, and actually executes the tests. The runner is responsible for kicking things off, managing the order in which tests run, and gathering the results.

It then pulls all this information into a readable report, showing you what passed and, more importantly, what failed. This immediate feedback is what makes automation so valuable in modern, fast-paced development cycles. This reliance on automation is booming across the industry; the global market for automated software testing was valued at USD 84.71 billion and is projected to hit USD 284.73 billion by 2032. That growth is all about the need for speed and quality. You can dig deeper into these trends in this comprehensive automated testing market report.

When you put these four building blocks—framework, scripts, data, and runner—together, you create a systematic and repeatable process for making sure your application's interface works as it should. Understanding how they all fit together is the first step toward building an automation strategy that actually works.
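To make the runner's job concrete, here is a deliberately tiny, hand-rolled one. It is a sketch of the idea, not how any particular tool works: the two check functions are made up for illustration, and one fails on purpose so the report has something to show.

```python
import traceback


def check_empty_cart_total():
    assert sum([]) == 0


def check_deliberate_failure():
    # Fails on purpose so the report contains a failure.
    assert 1 + 1 == 3, "expected 3"


def run_tests(tests):
    """Run each test in order and record (name, status, detail) for it."""
    results = []
    for test in tests:
        try:
            test()
            results.append((test.__name__, "PASS", ""))
        except AssertionError as exc:
            results.append((test.__name__, "FAIL", str(exc)))
        except Exception:
            # Anything other than a failed assertion is an error in the test itself.
            results.append((test.__name__, "ERROR", traceback.format_exc()))
    return results


def format_report(results):
    """Turn the collected results into a readable text report."""
    lines = [f"{status:5} {name} {detail}".rstrip() for name, status, detail in results]
    failed = sum(status != "PASS" for _, status, _ in results)
    lines.append(f"{len(results)} run, {failed} failed")
    return "\n".join(lines)


results = run_tests([check_empty_cart_total, check_deliberate_failure])
print(format_report(results))
```

Real runners add scheduling, parallelism, retries, and richer reports, but the loop of execute, catch, and record is the core.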

Why Automating Interface Tests Is a Game Changer

Let's be clear: switching to automated interface testing isn't just another item on a tech roadmap. It's a fundamental shift in how you build software—a strategic move that pays off in speed, quality, and your bottom line. It’s about ditching slow, error-prone manual work for a system that's fast, reliable, and built for modern development.

Ultimately, this is about building a more resilient, efficient, and competitive way to get your product into the hands of users.

Faster Feedback, Faster Development

Picture this: a developer pushes new code. In a manual setup, they could be waiting hours—or even a full day—for a QA team to give them the thumbs up or thumbs down. That’s a huge bottleneck.

With automated interface testing, that feedback loop shrinks from hours or days to minutes. A full suite of tests can run automatically, flagging any regressions almost instantly. This means developers can fix problems while the context is still fresh in their minds, which is far more efficient than trying to revisit a problem days later.

This speed changes everything. It unlocks the ability to release smaller updates more frequently and with much greater confidence, knowing your automated tests have already validated all the critical user journeys.

The real magic is how it compresses time. What once took a person days of repetitive clicking and checking is now handled by a script in minutes. This frees up your team to focus on innovation, not verification.

Get it Right, Every Single Time

Even the most dedicated human tester gets tired. Repetitive tasks lead to mistakes—it's just human nature. An automated script, on the other hand, is relentless. It executes the exact same steps with perfect precision every single time, 24/7.

This consistency is a lifesaver for regression testing, where you need to be absolutely sure that a new feature hasn't accidentally broken something else. Automation eliminates the "well, it worked on my machine" excuses and gives your team a single, reliable source of truth on the application's health. The result? A much higher-quality product and far fewer embarrassing bugs reaching your customers.

To really see the difference, let's look at a side-by-side comparison.

Manual vs Automated Interface Testing At a Glance

The table below breaks down the key differences, highlighting why automation has become the standard for high-performing teams.

| Metric | Manual Testing | Automated Testing |
|---|---|---|
| Speed | Slow; limited by human pace. | Extremely fast; runs in minutes. |
| Reliability | Prone to human error and fatigue. | 100% consistent and repeatable. |
| Cost | High long-term labor costs. | Higher initial setup, low long-term cost. |
| Scalability | Very difficult to scale. | Easily scalable across many environments. |
| Feedback Loop | Hours or days. | Minutes. |
| Best For | Exploratory testing, usability checks. | Regression tests, repetitive tasks. |

While manual testing still has its place for things like exploratory or usability checks, automation is the clear winner for building a scalable, reliable testing process.

Achieve Massive Test Coverage, Instantly

How many browser and device combinations can one person realistically test? Not many. An automated system, however, can run your tests across dozens of different environments at the same time. This kind of parallel execution gives you massive test coverage that would be completely impractical—and wildly expensive—to do by hand.

You can instantly confirm that your interface works perfectly on:

- Multiple Browsers: Chrome, Firefox, Safari, and Edge.

- Various Operating Systems: Windows, macOS, and Linux.

- Different Screen Sizes: From massive 4K desktop monitors down to the smallest mobile phones.
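As a rough sketch of parallel execution, the snippet below builds the browser, operating system, and screen-size matrix from the list above and fans it out over a thread pool. `run_homepage_check` is a hypothetical placeholder that always passes; a real version would launch the given browser and assert on the page. The specific viewport sizes are assumptions chosen for illustration.

```python
from concurrent.futures import ThreadPoolExecutor
from itertools import product

BROWSERS = ["chrome", "firefox", "safari", "edge"]
SYSTEMS = ["windows", "macos", "linux"]
VIEWPORTS = [(3840, 2160), (1366, 768), (375, 667)]  # illustrative sizes


def is_supported(browser, system):
    # Safari is only available on Apple platforms, so skip it elsewhere.
    return browser != "safari" or system == "macos"


def run_homepage_check(config):
    """Hypothetical placeholder for a real browser-driven check."""
    browser, system, viewport = config
    return (config, "pass")


def run_matrix(max_workers=8):
    """Run the check for every supported combination, several at a time."""
    configs = [c for c in product(BROWSERS, SYSTEMS, VIEWPORTS)
               if is_supported(c[0], c[1])]
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        # map preserves the order of configs in the returned results.
        return list(pool.map(run_homepage_check, configs))


results = run_matrix()
print(len(results), "environment checks completed")
```

Even this small matrix yields 30 combinations, which shows how quickly manual coverage becomes impractical.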

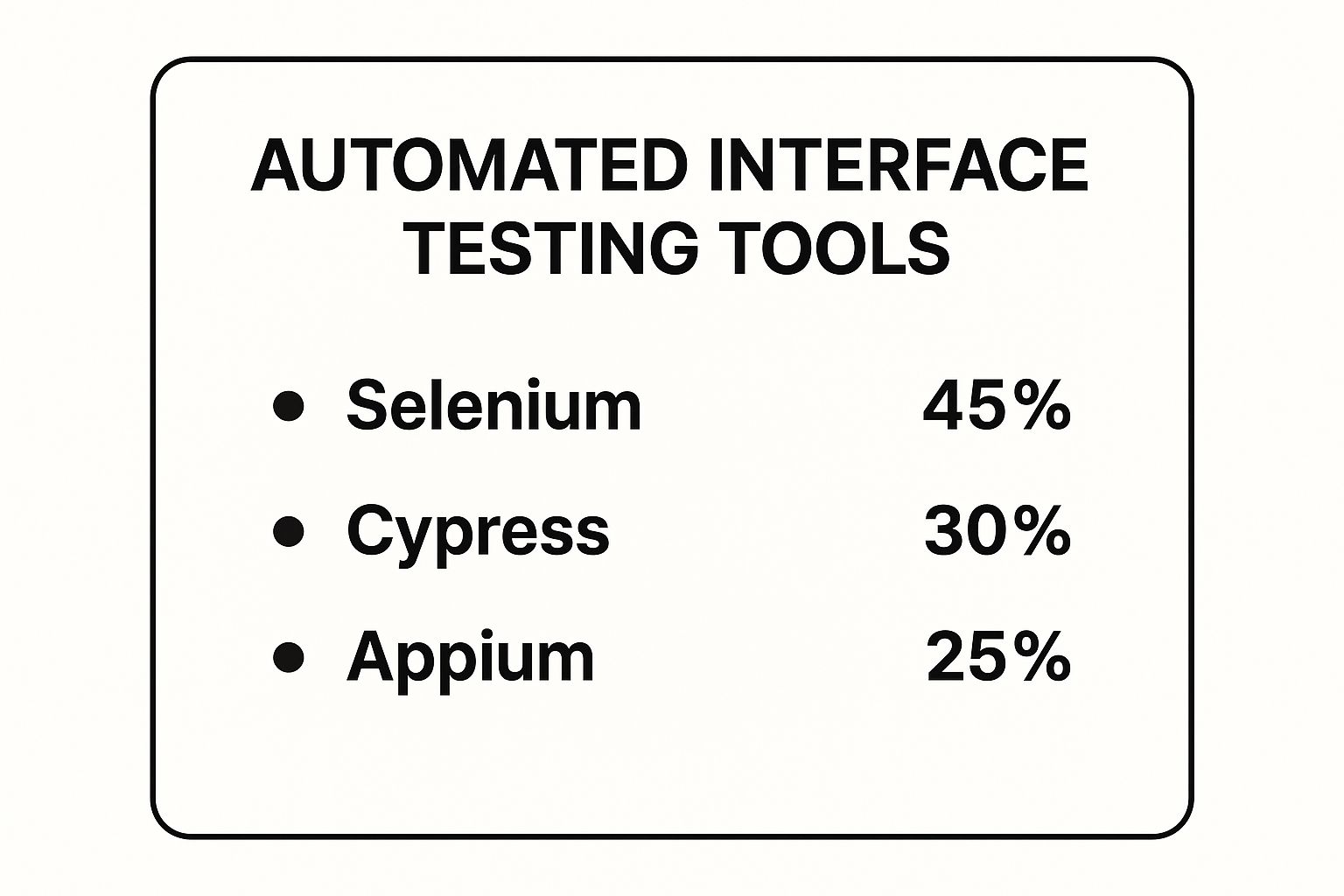

This ensures every user gets a consistent, high-quality experience, no matter what device they’re using. The ecosystem of tools enabling this is mature and growing, with a few clear leaders.

The widespread adoption of tools like Selenium, Cypress, and Appium shows just how central this capability is to modern development, giving teams a rich set of options to achieve comprehensive test coverage.

A Smart Investment with Serious ROI

Sure, there's an upfront cost to setting up a good automation suite. But the long-term savings are enormous. The numbers speak for themselves: the North American automated testing market was valued at USD 33 billion and is projected to hit an incredible USD 122.61 billion by 2033. You can read more about the North American automation testing market growth and the factors driving it.

That explosive growth is happening for a reason. By catching bugs earlier in the cycle, freeing up your team from manual grunt work, and getting features to market faster, automated testing is an investment that pays for itself over and over again.

Proven Practices for Effective Test Automation

Diving into automated interface testing without a solid plan is a recipe for trouble. You wouldn't set sail without a map, right? While the benefits are huge, your success really depends on following proven strategies that keep your efforts from turning into a tangled, unmanageable mess.

These aren't just theories; they're hard-won lessons from countless development teams. Following a few core practices can be the difference between creating a powerful asset and a maintenance nightmare that drains resources.

Pick the Right Tools for Your Team

First things first, you need to choose the right automation tool. The "best" tool is always relative—it completely depends on your application's tech stack and, just as importantly, your team's existing skills. A team full of C# developers will naturally lean toward different tools than a team of JavaScript experts.

Look for tools with solid object recognition and support for all the platforms you're targeting, whether it’s web, mobile, or desktop. Accessibility is also a big deal. Modern low-code or no-code solutions can empower manual testers to jump in and contribute, which expands your automation firepower without needing everyone to be a coding guru.

Be Strategic About What You Automate

One of the most common pitfalls is trying to automate everything. It’s an approach that's not just inefficient but completely unsustainable in the long run. The real goal is to focus your energy where it will have the biggest impact. Start by targeting high-value, repetitive, and absolutely critical user journeys.

So, what makes a good candidate for automation?

- Core Business Workflows: Think user login, registration, shopping cart checkouts, and payment processing. These are the make-or-break paths for your business and they have to work flawlessly, every single time.

- Frequently Used Features: Any functionality that users interact with constantly should be locked down with automated regression tests to catch problems before they spiral.

- Data-Intensive Scenarios: Tests that need tons of different data inputs are a real slog to do by hand but are incredibly simple to automate. You can dive deeper into this with our guide on data-driven testing strategies.

On the flip side, avoid automating tests for features that are still unstable, are rarely used, or require a human eye to judge things like complex UI aesthetics.

A great guideline is the 80/20 rule of test automation. Focus on the 20% of tests that cover 80% of your application's most critical functionality. This keeps you from getting bogged down and ensures you get the maximum value from your efforts.

Write Clean, Maintainable Tests

Treat your test scripts like you treat your application code—they deserve the same level of care. Poorly written tests are brittle, a pain to debug, and become incredibly expensive to maintain over time. The name of the game is creating tests that are independent, reusable, and easy for anyone to understand at a glance.

Stick to these key principles for sanity-saving tests:

- Keep Tests Small and Focused: Each test should verify one specific thing. If a test fails, you should know exactly what broke without having to spend an hour deciphering a massive, complex script.

- Use Reliable Selectors: Don't rely on fragile selectors like complex XPaths that shatter with the smallest UI tweak. Prioritize stable identifiers like unique IDs, names, or accessibility labels whenever possible.

- Make Tests Independent: Every test should set up its own conditions and clean up after itself. When tests depend on the outcome of previous ones, you create a fragile chain that’s a nightmare to manage and debug.
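The independence principle can be sketched with `unittest`'s `setUp` and `tearDown` hooks. `SHARED_CART` is a hypothetical stand-in for shared state such as a test database; the point is that each test resets it, so the tests pass in any order.

```python
import unittest

# Hypothetical shared state standing in for a test database.
SHARED_CART = []


class CartTest(unittest.TestCase):
    def setUp(self):
        # Each test builds the exact starting state it needs.
        SHARED_CART.clear()

    def tearDown(self):
        # Clean up so nothing leaks into the next test.
        SHARED_CART.clear()

    def test_cart_starts_empty(self):
        self.assertEqual(SHARED_CART, [])

    def test_add_single_item(self):
        SHARED_CART.append("mug")
        self.assertEqual(SHARED_CART, ["mug"])


def run_in_order(names):
    """Run the named tests in the given order and report overall success."""
    suite = unittest.TestSuite(CartTest(name) for name in names)
    result = unittest.TestResult()
    suite.run(result)
    return result.wasSuccessful()


# Both orders succeed because neither test relies on the other.
print(run_in_order(["test_cart_starts_empty", "test_add_single_item"]))
print(run_in_order(["test_add_single_item", "test_cart_starts_empty"]))
```

Without the reset in `setUp`, the second ordering would fail, which is exactly the kind of hidden coupling that makes suites hard to debug.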

Create a Stable and Isolated Test Environment

A flaky test environment will kill your team's trust in automation faster than anything else. When tests fail because of network glitches, server downtime, or interference from other tests, you end up chasing false alarms and wasting valuable time.

Your test environment has to be stable, consistent, and completely walled off from production. This means using dedicated test databases and, crucially, mocking any external dependencies and APIs. Mocking lets you simulate all sorts of scenarios—like API errors or slow network responses—without needing live services to be up and running. This ensures your tests run reliably and focus only on your application's interface behavior.
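Here is a small sketch of mocking an external dependency with Python's standard `unittest.mock`. `CheckoutView`, its `charge` call, and the messages are hypothetical names invented for this example; the technique is replacing the live payment service with a mock that can simulate both success and a slow network.

```python
from unittest.mock import Mock


class CheckoutView:
    """Hypothetical UI component whose message depends on a payment API call."""

    def __init__(self, payment_client):
        self.payment_client = payment_client

    def submit(self, amount):
        try:
            self.payment_client.charge(amount)
        except TimeoutError:
            return "Payment is taking longer than expected. Please try again."
        return "Order confirmed"


# Scenario 1: the external service responds normally.
ok_client = Mock()
ok_client.charge.return_value = {"status": "ok"}
ok_message = CheckoutView(ok_client).submit(20)
ok_client.charge.assert_called_once_with(20)

# Scenario 2: simulate a slow network without touching a live service.
slow_client = Mock()
slow_client.charge.side_effect = TimeoutError("simulated slow network")
slow_message = CheckoutView(slow_client).submit(20)

print(ok_message)
print(slow_message)
```

Because the mock controls the dependency's behavior, the test exercises only how the interface reacts, and it never fails because a third-party service happened to be down.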

For teams looking to truly master this, bringing in specialized automation services can help implement these best practices from the ground up.

How AI Is Changing the Game in Interface Testing

Automated interface testing has long moved past the days of rigid, hand-coded scripts. Now, artificial intelligence is turning our digital inspectors into smart detectives—tools that can learn, adapt, and even anticipate problems before they happen. This isn't just some far-off concept; it's the next practical step in how we build better software, faster.

This shift directly addresses the biggest historical headaches of UI automation: constant maintenance and tests that break at the slightest UI tweak. Instead of a developer having to manually update a script every time a button's ID changes, AI-powered tools can figure out these adjustments on their own. That simple change saves teams an incredible amount of time once spent on tedious rework.

From Brittle Scripts to Self-Healing Tests

Anyone who has worked with traditional automated interface testing knows the pain of test fragility. A developer changes a button's name or moves an element on the page, and boom—a perfectly good test script fails. AI brings a powerful solution to the table: self-healing tests.

Think about a test that, instead of just giving up when it can't find an element, tries to figure out what happened. It uses machine learning to scan the page, identify what likely changed, and locate the element based on other clues like its text, position, or function. It then automatically updates its own locator, essentially "healing" itself for the next run.

This single capability turns a test suite from a constant maintenance chore into a resilient, adaptive asset that works for you, not against you.
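To give a feel for the idea, the sketch below implements a much simpler, rule-based fallback rather than machine learning: if the stored ID no longer matches, it looks for an element with the same text and role, then updates the locator. The page model (a list of dictionaries) and all names are assumptions made for illustration; commercial tools use richer signals.

```python
def find_element(page, locator):
    """Return (element, locator). The locator is updated if a fallback match was used."""
    # First try the primary, fast path: an exact ID match.
    for element in page:
        if element.get("id") == locator["id"]:
            return element, locator

    # Primary lookup failed: score candidates on the remaining clues.
    def score(element):
        return sum(element.get(key) == locator[key] for key in ("text", "role"))

    best = max(page, key=score, default=None)
    if best is None or score(best) < 2:
        raise LookupError(f"no element resembling {locator!r}")

    # "Heal" the locator so the next run uses the new ID directly.
    healed = {**locator, "id": best.get("id")}
    return best, healed


locator = {"id": "login-btn", "text": "Log in", "role": "button"}

# The developer renamed the button's ID; text and role are unchanged.
new_page = [
    {"id": "cancel", "text": "Cancel", "role": "button"},
    {"id": "signin-button", "text": "Log in", "role": "button"},
]

element, locator = find_element(new_page, locator)
print(locator["id"])
```

Requiring every remaining clue to match keeps the fallback conservative; a looser threshold would heal more often but risks clicking the wrong element.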

Intelligent Visual Testing and Bug Prediction

AI is also giving us a much sharper eye for catching subtle visual flaws that older automation tools would completely miss. Traditional pixel-by-pixel comparisons were always a bit unreliable, but AI-driven visual testing can actually understand the difference between a real bug and a tiny, insignificant rendering difference.

AI can intelligently spot when a layout is broken, an image is missing, or a font is wrong—all while ignoring minor anti-aliasing variations that would have triggered false alarms in the past. This brings a whole new level of precision to maintaining visual quality.
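The difference between strict pixel matching and a more forgiving comparison can be illustrated without any AI at all. The sketch below uses a plain tolerance-based diff on tiny grayscale images represented as lists of integers; the thresholds are arbitrary assumptions, and real AI-driven tools go well beyond this.

```python
def changed_fraction(baseline, candidate, per_pixel_tolerance=8):
    """Fraction of pixels whose brightness differs by more than the tolerance."""
    if len(baseline) != len(candidate) or any(
        len(a) != len(b) for a, b in zip(baseline, candidate)
    ):
        raise ValueError("images must have the same dimensions")
    total = sum(len(row) for row in baseline)
    if total == 0:
        raise ValueError("images must not be empty")
    changed = sum(
        abs(a - b) > per_pixel_tolerance
        for row_a, row_b in zip(baseline, candidate)
        for a, b in zip(row_a, row_b)
    )
    return changed / total


def looks_the_same(baseline, candidate, max_changed=0.01):
    """Treat the images as matching if at most 1% of pixels changed noticeably."""
    return changed_fraction(baseline, candidate) <= max_changed


baseline = [[200] * 10 for _ in range(10)]

# Slight anti-aliasing noise: a few pixels shift by a small amount.
noisy = [row[:] for row in baseline]
noisy[0][0] = 203
noisy[5][5] = 197

# A missing image: a 4x4 block turns black.
broken = [row[:] for row in baseline]
for y in range(4):
    for x in range(4):
        broken[y][x] = 0

print(looks_the_same(baseline, noisy))
print(looks_the_same(baseline, broken))
```

An exact pixel-by-pixel comparison would flag both images; the tolerance lets the harmless noise through while still catching the missing block.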

But it gets better. Beyond just finding existing bugs, AI is starting to help us predict them. By analyzing patterns in historical test results and code changes, machine learning models can flag high-risk areas in the application. This helps QA teams focus their limited time and energy where bugs are most likely to pop up, making the whole process smarter.
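As a back-of-the-envelope illustration of risk-based prioritization, the sketch below ranks application areas with a hand-written heuristic: historical failure rate weighted by recent code changes. This is not a machine learning model, and the formula and all numbers are invented for the example.

```python
def risk_score(failures, runs, recent_changes):
    """Heuristic: failure rate, amplified by how much the area changed recently."""
    failure_rate = failures / runs if runs else 0.0
    return failure_rate * (1 + recent_changes)


# Hypothetical history per application area.
history = {
    "checkout": {"failures": 6, "runs": 40, "recent_changes": 9},
    "login": {"failures": 1, "runs": 40, "recent_changes": 2},
    "profile": {"failures": 4, "runs": 40, "recent_changes": 0},
}

# Highest-risk areas first.
ranked = sorted(history, key=lambda area: risk_score(**history[area]), reverse=True)
print(ranked)
```

A trained model would learn the weighting from data instead of hard-coding it, but the output has the same shape: an ordered list telling the QA team where to look first.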

The rise of AI and Machine Learning (ML) is a clear trend making testing more effective. The AI market, which recently hit USD 243.72 billion, is poised for massive growth. The ML market is on an even steeper trajectory, valued at USD 113.11 billion. These technologies are the engine behind smarter test generation, dynamic script adaptation, and predictive bug analysis. You can find more details about these test automation trends and what they mean for the industry.

AI-Powered Test Generation

Another really exciting development is AI-driven test creation. Instead of someone having to manually script every single user journey, AI tools can explore an application and generate a whole suite of relevant tests automatically.

This is being done in a few powerful ways:

- Crawling the Application: An AI model can navigate an application just like a user would, discovering pages, links, and forms to map out its functionality and build tests to cover what it finds.

- Analyzing User Behavior: By looking at real user session data, AI can pinpoint the most common and critical paths people take through your app, then create automated tests to make sure those key journeys never break.

- No-Code Platforms: Tools like dotMock are at the forefront here, letting teams generate complex tests with simple instructions. This makes automated interface testing accessible to more people on the team, not just specialized engineers.
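The crawling approach in the first bullet can be sketched as a breadth-first walk over a site's links. Here `SITE` is a hypothetical, hard-coded link map standing in for real page fetches, and the generated "tests" are just plain-language descriptions; an actual tool would discover links from live pages and emit executable checks.

```python
from collections import deque

# Hypothetical link map: page -> pages it links to.
SITE = {
    "/": ["/login", "/products"],
    "/login": ["/"],
    "/products": ["/products/1", "/cart"],
    "/products/1": ["/cart", "/products"],
    "/cart": ["/checkout"],
    "/checkout": [],
}


def crawl(site, start="/"):
    """Breadth-first discovery of every page reachable from the start page."""
    seen = [start]
    queue = deque([start])
    while queue:
        page = queue.popleft()
        for link in site.get(page, []):
            if link not in seen:
                seen.append(link)
                queue.append(link)
    return seen


def generate_smoke_tests(pages):
    """Produce one simple test description per discovered page."""
    return [f"visit {page} and check that it loads without errors" for page in pages]


pages = crawl(SITE)
for description in generate_smoke_tests(pages):
    print(description)
```

Tracking visited pages is what keeps the crawler from looping forever on circular links such as the home page and the login page pointing at each other.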

A Practical Walkthrough: Your First Automated Test with dotMock

Theory is one thing, but seeing a tool in action is where it all clicks. Let's walk through a real-world scenario to see just how simple and fast automated interface testing can be. We'll use dotMock to bring these concepts to life.

Imagine a critical user path on an e-commerce site: a customer logs in, adds an item to their cart, and we need to confirm the cart updates correctly. Doing this by hand is tedious and prone to error. With a tool like dotMock, we can lock in this entire workflow in minutes.

Step 1: Setting Up Your Project

First things first, let's get our workspace ready. Gone are the days of wrestling with complex local setups. With a modern cloud tool like dotMock, you just sign up and create a new project. That’s it.

This gives you a central dashboard—your command center—where every recording, test script, and result will be organized. It’s designed to be clean and intuitive from the get-go. If you want a hand getting started, the dotMock quickstart documentation will get you up and running in less than a minute.

Step 2: Recording the User Journey (Effortlessly)

This is where you see the real power of modern test automation. Instead of writing a single line of code, you just do the thing you want to test. Fire up the dotMock recorder and interact with your app just like any user would.

For our e-commerce example, you’d simply:

- Navigate to your site's login page.

- Type in a username and password, then click "Login."

- Find a product you want and click the "Add to Cart" button.

While you're doing this, the recorder is quietly logging every single interaction in the background—every click, every keystroke, every page load. It sees what you see and captures the sequence perfectly.

The key here is simplicity. The interface is built for clarity, making it easy for anyone on the team to jump in and start recording.

Step 3: Instantly Generating a Test Script

The moment you stop the recording, dotMock works its magic. It takes all those captured actions and instantly translates them into a stable, reusable automated test script. What was once a manual click-through is now a piece of code that can be run on repeat, flawlessly, thousands of times.

This no-code approach is a game-changer. It means team members who aren't developers—like QA analysts, product managers, or business analysts—can build powerful automated tests. This massively expands who can contribute to quality.

Your new script is now a digital inspector, ready to mimic that exact user journey whenever you need it.

Step 4: Adding Assertions to Define Success

An automated test isn't just about repeating actions; it's about verifying results. This is where assertions come in. An assertion is a specific check you add to your script to confirm the application behaved exactly as you expected. It's how you teach the test what "passing" actually looks like.

Back to our e-commerce example, a crucial assertion would be:

- Verify Cart Contents: After the "Add to Cart" click, the script must check that the correct product name and price appear in the shopping cart.

You could also add other checks, like confirming a "Welcome back!" message appears after login or that you landed on the correct product page. Assertions are the hard-and-fast rules that determine if a test passes or fails.
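In code terms, the cart assertion might look like the sketch below. This is a generic illustration, not dotMock's own syntax: the cart is modeled as a list of dictionaries, and `assert_cart_contains` is a hypothetical helper written for this example.

```python
def assert_cart_contains(cart_rows, name, price):
    """Fail with a readable message unless the cart lists the product at that price."""
    for row in cart_rows:
        if row["name"] == name:
            assert row["price"] == price, (
                f"{name}: expected price {price}, found {row['price']}"
            )
            return
    raise AssertionError(
        f"{name} not found in cart: {[row['name'] for row in cart_rows]}"
    )


# Hypothetical cart contents read back from the page after "Add to Cart".
cart = [{"name": "Ceramic Mug", "price": "12.50"}]

# Passes silently: the right product at the right price is present.
assert_cart_contains(cart, "Ceramic Mug", "12.50")

# A wrong price is reported as a failure with a clear explanation.
try:
    assert_cart_contains(cart, "Ceramic Mug", "10.00")
except AssertionError as exc:
    failure_message = str(exc)
    print(failure_message)
```

A descriptive failure message matters as much as the check itself, because it is the first thing someone reads when the test goes red.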

Step 5: Running the Test and Reviewing the Results

With your script and assertions ready, it's time to run the test. You can kick it off with a single click or—even better—integrate it into your CI/CD pipeline so it runs automatically every time a developer pushes new code.

dotMock runs through the steps and gives you a clear, easy-to-read report. You'll get a simple pass or fail status, but more importantly, you'll see detailed logs and screenshots for any step that went wrong. This immediate, visual feedback lets your team find and squash bugs with incredible speed, helping you maintain a perfect user interface.

Common Questions About Interface Test Automation

When teams first start looking into automated interface testing, the same set of practical questions tends to pop up. Getting straight answers to these is the best way to clear up any confusion and get your team started on the right foot. Let's dig into some of the most common ones.

Which Tests Should We Prioritize for Automation?

It’s tempting to want to automate everything, but that's a classic rookie mistake. The smartest approach is to target the high-value, repetitive stuff first. Think about your core business workflows—those are the perfect candidates.

Start with the journeys that absolutely have to work for your users and your business:

- User login and registration.

- Shopping cart and checkout flows.

- Any feature that gets hammered by users all day long.

Beyond that, focus on your regression tests. These are the tests you run over and over to make sure new code hasn't broken something that used to work. Automating them delivers a massive return on your time investment. On the flip side, try to avoid automating tests that are rarely run or those that need a human eye for things like visual appeal and overall usability.

What Is the Biggest Challenge We Will Face?

Hands down, the single biggest headache in automated interface testing is test maintenance. User interfaces are always in flux. A developer tweaks a button, renames a field, or shuffles the layout, and suddenly your test scripts are broken.

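This is usually called test brittleness: a test fails not because of a real bug, but because the script can't find what it's looking for anymore. It is closely related to "test flakiness," where a test passes or fails unpredictably even though nothing has changed. Either way, it doesn't take long for unreliable tests to destroy your team's confidence in the whole automation suite.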

The key to fighting this is to build resilient tests from the start. Use stable locators (like unique IDs), design your tests in modular, reusable chunks, and look into modern tools with AI-powered self-healing features. These can slash your maintenance time.

Do My Testers Need to Be Expert Coders?

Not like they used to. While deep coding skills will always be a plus for navigating really tricky test scenarios, the game has changed. The emergence of low-code and no-code platforms has opened up test automation to the entire QA team, not just the developers.

Many tools now have recorders that watch you perform an action and automatically turn it into a working test script. This empowers manual testers, business analysts, and others to jump in and contribute meaningfully. It fosters a much more collaborative culture where deep product knowledge becomes just as valuable as the ability to write code, ultimately helping the whole team take ownership of quality and ship features faster.

Ready to make your testing faster and more reliable? With dotMock, you can build powerful, no-code automated tests in minutes, not days. See for yourself how easy it is to create resilient tests that catch bugs before your customers do. Start your journey with dotMock today.