Artificial Intelligence in Test Automation: Practical Guide

Artificial intelligence in test automation isn't about replacing testers; it's about giving them superpowers. Think of it as using smart machine learning algorithms to make the entire testing process more resilient, efficient, and just plain intelligent. Instead of blindly following rigid scripts, AI-powered tools can actually adapt to changes in your application, predict where bugs are most likely to hide, and even generate their own test cases. This drastically cuts down on the manual grind and the constant maintenance that plagues so many QA teams.

A Smarter Approach to Software Testing is Here

The whole conversation around software testing is shifting. For too long, QA has been stuck in a world of rigid, brittle automated scripts. If you've been in testing for any length of time, you know the drill: you pour hours into writing tests, only for them to break the moment a developer tweaks a UI element. It's a frustrating cycle of writing, running, and fixing.

This constant maintenance doesn't just eat up time; it creates a serious bottleneck. It slows down development, pulls skilled engineers away from creative, exploratory testing, and drags out the feedback loop. Pretty soon, nobody trusts the automated test suite anymore. This is exactly the problem that artificial intelligence in test automation is designed to fix.

Moving Beyond Simple Scripts

Let's use an analogy to make this clear. Traditional, scripted automation is like a classical musician who can only play a song exactly as it's written. Change one note on the sheet music, and the whole performance grinds to a halt. That’s precisely how most scripted tests work—they follow a hardcoded path, and any small deviation causes a failure.

Now, imagine an AI-powered testing tool as a seasoned jazz musician. The jazz artist knows the melody and structure but has the freedom to improvise and adapt on the fly. If the rhythm shifts or another player throws in a new chord, they don't stop; they adjust and keep the music flowing. AI brings that same level of fluid adaptability to testing, making it far more resilient and intelligent.

By learning from how an application behaves, analyzing historical test data, and observing real user interactions, AI transforms testing. It goes from a reactive, rule-based chore to a proactive, adaptive strategy that anticipates change instead of breaking from it.

Why Now is the Time for AI in Testing

This shift toward AI-driven QA isn't just another industry fad. It’s a necessary evolution to keep up with the breakneck pace of modern software development. As our applications get more complex and release cycles shrink to weeks or even days, the old way of managing test suites just can't keep up. Teams need automation that helps them, not holds them back.

The numbers back this up. In 2023, only about 7% of teams had started using AI in their automation frameworks. Fast forward to 2025, and that number is expected to jump to 16%. That’s more than double in just two years. This rapid adoption shows that the industry is recognizing AI's power to tackle complex testing challenges, from predicting defects to providing smarter analytics. You can dig deeper into these figures in recent test automation statistics.

By bringing AI into their process, organizations are finally starting to overcome the classic limitations of traditional automation. The benefits are clear:

- Less Test Maintenance: AI systems can "self-heal," automatically identifying and adapting to UI changes. This massively reduces the time engineers spend fixing broken scripts.

- Faster Feedback Loops: Intelligent test execution can prioritize the most critical tests based on risk, giving developers much faster feedback on the health of a new build.

- Better Test Coverage: AI is great at spotting user paths and edge cases that a human tester might miss, leading to a more thoroughly tested and robust product.

How AI Is Actually Changing The Testing Game

To really get what artificial intelligence in test automation is all about, you have to look under the hood. This isn't some black box magic—it’s about smart algorithms and machine learning models that completely reshape how we create, run, and maintain tests. Think of it as giving your testing process a kind of digital intuition, letting it learn and adapt on the fly.

At its heart, AI injects intelligence into the entire testing lifecycle. Old-school automation is stuck following a rigid, line-by-line script. An AI-powered system, on the other hand, can spot patterns, grasp the context of what it’s testing, and make smart decisions. This single shift turns testing from a brittle, high-maintenance chore into a resilient and proactive part of development.

Self-Healing Tests: No More Brittle Scripts

One of the most immediate wins with AI is the idea of self-healing tests.

Picture this: you're navigating with a GPS, and suddenly there's a road closure. Your GPS doesn't just freeze and throw an error. It instantly re-routes you. That’s exactly how self-healing tests work. When a developer tweaks a UI element—like changing a button's ID or moving it—a traditional script breaks. The locator it was told to find is gone.

An AI tool is smarter. It understands the element's purpose and other attributes—it knows it’s the "Login" button, regardless of its specific ID. The AI finds the element's new locator, updates the test on its own, and keeps things moving. This one feature wipes out a massive amount of the constant, tedious maintenance that plagues QA teams.

Visual AI Testing: Seeing Your App Like A User

Traditional visual testing was always a bit of a letdown. It relied on pixel-for-pixel comparisons, which are incredibly fragile. This approach would scream "failure" over tiny rendering differences a human would never even notice, yet it could completely miss a huge layout bug if the pixel colors technically matched.

Visual AI testing is a different beast entirely. It works more like the human eye, learning what looks right and what’s broken. Instead of just comparing pixels, it understands layout, structure, and visual hierarchy.

Visual AI can spot subtle but critical bugs that older methods miss—things like overlapping text, misaligned forms, or a button that has vanished completely. It has the smarts to tell a real defect from a minor rendering quirk, which means way fewer false alarms for your team to chase down.
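Real visual AI relies on trained models. The shift from comparing pixels to comparing structure can still be sketched without one. The minimal example below assumes you already have bounding boxes for named elements, for instance captured from a DOM snapshot in each browser. The `Box` class, the `compare_layouts` function, and the 4-pixel tolerance are all hypothetical. The sketch flags missing elements, large moves, and newly overlapping elements, while ignoring few-pixel rendering shifts.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Box:
    """Bounding box of a rendered element, in CSS pixels (hypothetical input format)."""
    x: float
    y: float
    width: float
    height: float

    def overlaps(self, other: "Box") -> bool:
        # Boxes overlap when their ranges intersect on both the x and y axes.
        return (
            self.x < other.x + other.width
            and other.x < self.x + self.width
            and self.y < other.y + other.height
            and other.y < self.y + self.height
        )


def compare_layouts(baseline: dict, current: dict, tolerance: float = 4.0) -> list:
    """Rule-based stand-in for a visual model: compare structure, not pixel colors."""
    issues = []
    for name, old in baseline.items():
        new = current.get(name)
        if new is None:
            issues.append(f"missing: {name}")  # e.g. a vanished "Buy Now" button
            continue
        drift = max(abs(old.x - new.x), abs(old.y - new.y),
                    abs(old.width - new.width), abs(old.height - new.height))
        if drift > tolerance:  # shifts within the tolerance count as rendering noise
            issues.append(f"moved or resized: {name}")
    # Report pairs that overlap now but did not overlap in the baseline.
    names = sorted(current)
    for i, a in enumerate(names):
        for b in names[i + 1:]:
            overlapped_before = (
                a in baseline and b in baseline and baseline[a].overlaps(baseline[b])
            )
            if current[a].overlaps(current[b]) and not overlapped_before:
                issues.append(f"overlap: {a} / {b}")
    return issues


baseline = {"title": Box(0, 0, 300, 40), "price": Box(0, 50, 100, 20),
            "buy_now": Box(0, 80, 120, 40)}
print(compare_layouts(baseline, {"title": Box(1, 0, 300, 40), "price": Box(0, 50, 100, 20)}))
```

In this usage, the 1-pixel shift of the title is ignored, but the missing `buy_now` box is reported. That mirrors the "real defect versus rendering quirk" distinction described above.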

Predictive Analytics: Pinpointing Where The Bugs Will Be

Another game-changer is predictive analytics. Think of it as a weather forecast for your software's quality. By sifting through historical data—past test runs, bug reports, and code changes—machine learning models can predict which parts of your application are most likely to break next.

This lets teams aim their testing efforts where they’ll have the biggest impact. Instead of running a massive, slow regression suite for every tiny change, the AI can suggest a small, targeted set of tests that cover the highest-risk areas. You get faster feedback, and critical bugs get caught much, much earlier.
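To make the forecasting idea concrete, here is a deliberately tiny sketch: a logistic-regression model, trained with plain gradient descent, that estimates how likely each module is to break. It uses two features. The feature choice (lines changed and bugs filed in the previous release), the module names, and the training history are all hypothetical. Real tools learn from far richer data, so treat this as an illustration of the technique, not any product's model.

```python
import math


def predict(weights: list, x: list) -> float:
    """Probability (0-1) that a module with features x breaks in the next release."""
    z = weights[0] + sum(w * xj for w, xj in zip(weights[1:], x))
    return 1.0 / (1.0 + math.exp(-z))


def train_logistic(rows: list, labels: list, lr: float = 0.5, epochs: int = 5000) -> list:
    """Fit logistic-regression weights (bias first) with batch gradient descent."""
    weights = [0.0] * (len(rows[0]) + 1)
    for _ in range(epochs):
        grads = [0.0] * len(weights)
        for x, y in zip(rows, labels):
            error = predict(weights, x) - y  # derivative of log-loss w.r.t. the logit
            grads[0] += error
            for j, xj in enumerate(x):
                grads[j + 1] += error * xj
        weights = [w - lr * g / len(rows) for w, g in zip(weights, grads)]
    return weights


# Hypothetical history per module and release:
# [lines changed / 100, bugs filed in the previous release] -> broke in the next release?
HISTORY = [
    ([0.1, 0], 0), ([0.2, 0], 0), ([0.5, 1], 0), ([1.5, 2], 1),
    ([2.0, 3], 1), ([0.3, 0], 0), ([1.8, 1], 1), ([0.4, 2], 0),
]
weights = train_logistic([x for x, _ in HISTORY], [y for _, y in HISTORY])

# Hypothetical modules touched by the upcoming release.
upcoming = {"checkout": [2.1, 3], "profile": [0.6, 1], "search": [0.1, 0]}
risk = {name: predict(weights, x) for name, x in upcoming.items()}
ranking = sorted(risk, key=risk.get, reverse=True)
print(ranking)  # aim testing effort at the front of this list first
```

Because the model outputs probabilities, you can either take the top few modules or set a cutoff and focus testing on everything above it.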

These breakthroughs are built on specific types of artificial intelligence. If you're curious about the tech behind it all, it's worth understanding the key differences between deep learning and machine learning.

A Clearer View: AI vs. Traditional Testing

To really drive home the difference, let’s compare the two approaches side-by-side. The old way of doing things served its purpose, but AI-powered automation operates on a whole new level.

Traditional vs. AI-Powered Test Automation: A Comparison

| Aspect | Traditional Automation | AI-Powered Automation |
|---|---|---|
| Test Creation | Manual scripting, requires coding expertise. | Can be auto-generated from user journeys or simple inputs. |
| Maintenance | High. Small UI changes frequently break tests. | Low. Self-healing tests adapt to most UI changes automatically. |
| Element Locators | Relies on static, fragile selectors (ID, XPath). | Uses intelligent, multi-attribute locators to find elements. |
| Test Coverage | Limited by what can be manually scripted and maintained. | Can dynamically explore and test new paths, increasing coverage. |
| Bug Detection | Catches known, pre-scripted failure conditions. | Finds unexpected bugs, visual defects, and performance issues. |
| Feedback Loop | Slow, often tied to long regression cycles. | Fast, with prioritized tests focused on high-risk areas. |

This table shows a fundamental shift. We're moving from a system that simply follows orders to one that thinks, adapts, and anticipates problems.

The Market Is Speaking Loud And Clear

This move toward intelligent testing isn’t just a niche trend; it’s a massive market transformation. The software testing automation market is expected to hit around $68 billion by 2025, with the entire software testing market forecasted to reach $109.5 billion by 2027.

This incredible growth points to one thing: AI-driven automation is becoming the standard for any company that wants to ship great software quickly. By weaving machine learning into the QA process, teams are building smarter test suites that are more efficient, more accurate, and far less of a headache to maintain. The end result is simple: better products and happier users.

The Tangible Benefits of an AI-Driven QA Strategy

Bringing artificial intelligence into test automation isn't just a technical upgrade—it’s a business decision with a real, measurable payoff. When you move past the hype, you find that an AI-first approach directly tackles the biggest headaches in software development today: efficiency, maintenance, test coverage, and speed. It shifts QA from being a necessary cost to a genuine value-driver.

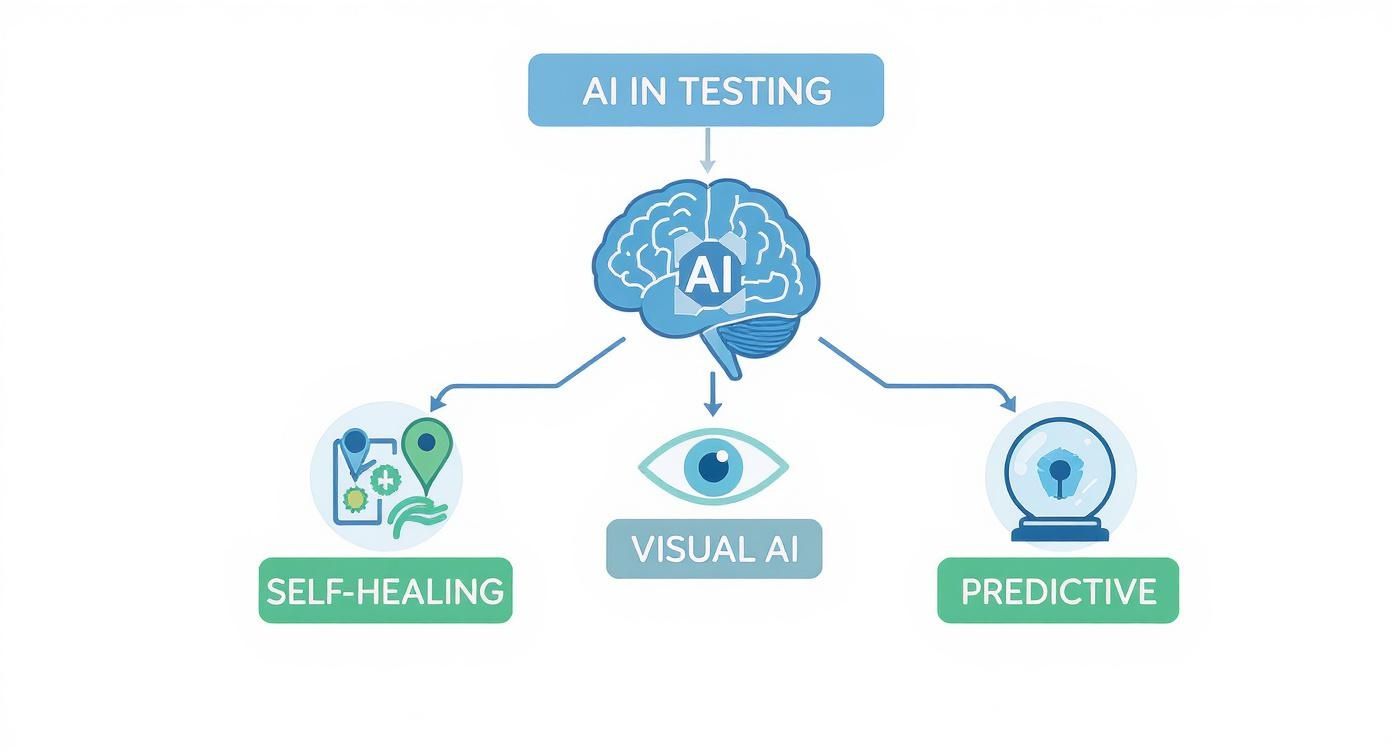

This change is driven by a handful of core AI capabilities. The infographic below shows how features like Self-Healing, Visual AI, and Predictive Analytics are the building blocks of a much smarter testing strategy.

Each of these pillars works in concert to create a more resilient and intelligent quality assurance process, which is where the real-world benefits start to kick in.

Drastically Reduced Test Maintenance

One of the first things you'll notice is the end of constant, soul-crushing test script maintenance. Traditional test scripts are famously brittle. Even a tiny UI change, like renaming a button, can trigger a domino effect of failures, forcing engineers to waste hours fixing tests instead of finding new bugs.

AI-powered self-healing tests put a stop to this. When a button’s ID or XPath changes, the AI is smart enough to find it using other attributes, like its text or position on the page. It then automatically updates the test script on the fly, preventing a false failure and saving a massive amount of engineering time. Your skilled team is now free to focus on what matters—complex exploratory testing and making the user experience better. If you want to dive deeper, you can explore the fundamental benefits of automated testing in our detailed guide.

Increased Efficiency and Team Productivity

AI doesn't just fix tests; it makes building and running them much faster. Modern AI tools can scan an application and generate test cases automatically, often covering more ground in a few minutes than a person could script by hand in a day. This boost in efficiency lets your team get more done without needing to hire more people.

By taking over the most repetitive and time-sucking QA tasks, AI frees up your engineers to use their expertise where it counts. They can get back to creative problem-solving, usability testing, and other strategic work that requires human insight—the kind of work that actually pushes product quality forward.

The wider automation data points the same way. Already, 46% of testing teams have replaced at least half of their manual testing with automation. On top of that, 36% of teams report a positive return on their test automation investment, with 21% seeing significant gains. The business case is clearly there.

Enhanced Test Coverage

Let's be honest: even the most dedicated human tester can't think of every possible user journey or obscure edge case. It’s just not possible. AI, however, is built for this. By analyzing user behavior and application models, it can uncover weird paths and scenarios that a person would likely miss.

The result is much wider and deeper test coverage. The AI ensures your application is hammered with a massive range of inputs and interactions, digging up hidden bugs in the quiet corners of your software. This improved coverage leads directly to a more stable, reliable, and high-quality product for your users.
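AI tools decide which inputs to try by learning from user behavior and application models. As a much simpler, non-ML illustration of hammering a field with a wide range of inputs, the sketch below combines classic boundary values with seeded random strings. The `edge_case_inputs` function, the length limit, the character pool, and the `validate_username` function under test are all hypothetical.

```python
import random
import string


def edge_case_inputs(max_length: int, samples: int = 20, seed: int = 42) -> list:
    """Boundary values plus reproducible random strings for one text field."""
    boundary = [
        "",                          # empty input
        " ",                         # whitespace only
        "a" * (max_length - 1),      # just under the limit
        "a" * max_length,            # exactly at the limit
        "a" * (max_length + 1),      # just over the limit
        "Zoë 🚀 测试",                # non-ASCII text and emoji
        "'; DROP TABLE users; --",   # injection-shaped text
    ]
    rng = random.Random(seed)  # seeded so any failing input can be replayed exactly
    pool = string.ascii_letters + string.digits + string.punctuation + " "
    randomized = [
        "".join(rng.choice(pool) for _ in range(rng.randint(1, max_length + 5)))
        for _ in range(samples)
    ]
    return boundary + randomized


def validate_username(value: str) -> bool:
    """Hypothetical system under test: 3-20 characters, no surrounding spaces."""
    return 3 <= len(value) <= 20 and value == value.strip()


# Every generated value must be handled without raising, and over-long values rejected.
failures = [v for v in edge_case_inputs(max_length=20)
            if len(v) > 20 and validate_username(v)]
print(f"{len(failures)} over-long values were accepted")
```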

Faster Feedback Loops for DevOps

In a modern CI/CD pipeline, speed is the name of the game. The sooner developers get feedback on their code, the faster they can fix issues and push new features. AI is a game-changer here, creating faster and smarter feedback loops.

Instead of running a massive, time-consuming regression suite after every single code change, AI can use predictive analytics to pick and run only the most relevant tests—the ones most likely to be affected by the new code. This gives developers targeted, actionable feedback in minutes, not hours. That kind of speed is absolutely essential for keeping up momentum in a DevOps world. To see how AI fits into a broader quality strategy, check out these quality assurance best practices for AI & SaaS.

Putting AI to Work in Real-World Scenarios

The theory behind artificial intelligence in test automation is great, but its real value clicks when you see it solving actual business problems. Let's move from concepts to concrete reality and look at how different industries are using AI to get over common quality assurance hurdles.

Think of these scenarios as a blueprint for how you can put these powerful tools to work in your own projects. Each one breaks down a specific problem, the AI-powered fix, and the real-world results.

E-Commerce Visual Validation Across Devices

An online retail giant was facing a classic, high-stakes problem. Every time they launched a new sale or product, they had to make sure thousands of images, prices, and banners looked perfect on hundreds of different device and browser combinations. Doing this by hand was impossibly slow. Even worse, their old pixel-matching automation scripts were constantly flagging tiny, irrelevant differences, creating a ton of noise.

Their solution was to bring in an AI-powered visual testing tool. Instead of just comparing pixels one-for-one, this tool's AI was trained to see the page's layout and structure, almost like a human user would.

The result? A staggering 90% reduction in the time they spent on visual regression testing. The AI could instantly spot critical bugs—like a "Buy Now" button getting buried on a specific iPhone model—while smartly ignoring minor rendering quirks. This gave the team the confidence to push new promotions live, knowing the customer experience would be spot-on everywhere.

Fintech Test Data Generation

For a fast-growing fintech app, testing with realistic data was a huge security and privacy headache. They couldn't use real customer data for obvious reasons, but creating fake data by hand was a slog. It rarely covered all the complex financial transactions and tricky edge cases they needed to check.

This is where AI made all the difference. The team started using a tool that generates synthetic test data with machine learning. The AI analyzed the structure of their production data, without ever touching the sensitive info itself, and then created a massive, statistically realistic dataset of user profiles and transaction histories.

This approach not only kept user privacy locked down but also massively improved their test coverage. The AI-generated data included complex scenarios and outliers the team had never even thought of, helping them find hidden bugs in their transaction logic before they could ever affect a real customer.
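The team's tool used machine learning. Its core idea, learning the shape of the data instead of copying records, can be illustrated with a much simpler per-column sampler. The sketch below is hypothetical: it records category frequencies, plus a mean and standard deviation for numeric columns, then draws new rows from those summaries. Unlike a real synthetic-data tool, it ignores correlations between columns and offers no formal privacy guarantee. The sample transactions are invented.

```python
import random
import statistics


def fit_profile(rows: list) -> dict:
    """Summarize each column: category frequencies, or (mean, stdev) for numbers."""
    profile = {}
    for column in rows[0]:
        values = [row[column] for row in rows]
        if all(isinstance(v, (int, float)) for v in values):
            profile[column] = ("numeric", statistics.mean(values), statistics.stdev(values))
        else:
            counts = {}
            for v in values:
                counts[v] = counts.get(v, 0) + 1
            profile[column] = ("categorical", list(counts), list(counts.values()))
    return profile


def sample_rows(profile: dict, n: int, seed: int = 7) -> list:
    """Draw n synthetic rows from the column summaries, not from the source rows."""
    rng = random.Random(seed)
    rows = []
    for _ in range(n):
        row = {}
        for column, spec in profile.items():
            if spec[0] == "numeric":
                _, mean, stdev = spec
                # Clamp at zero because this hypothetical amount column is never negative.
                row[column] = round(max(0.0, rng.gauss(mean, stdev)), 2)
            else:
                _, categories, weights = spec
                row[column] = rng.choices(categories, weights=weights)[0]
        rows.append(row)
    return rows


sample = [
    {"type": "card", "amount": 42.5},
    {"type": "transfer", "amount": 1200.0},
    {"type": "card", "amount": 18.0},
    {"type": "refund", "amount": 42.5},
]
synthetic = sample_rows(fit_profile(sample), n=1000)
print(synthetic[:3])
```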

This is a perfect example of using smart systems to get better testing outcomes. If you're interested in going deeper, exploring the principles of data-driven testing will give you a solid foundation for understanding how data quality shapes test effectiveness.

Intelligent Regression for SaaS Platforms

A major Software-as-a-Service (SaaS) provider with a continuous delivery pipeline was struggling. Their full regression suite had thousands of tests and took hours to run after every single code commit. This created a massive bottleneck for their developers, who were stuck waiting for feedback.

They turned to an AI solution that plugged right into their CI/CD pipeline and version control. The tool read each new build's changes from version control to see exactly which parts of the code had been touched. It then combined that change data with historical test results and used predictive analytics to pick and prioritize a small, critical subset of regression tests to run first.

The results were immediate and game-changing:

- Faster Feedback: Developers got crucial feedback on their work in under 15 minutes, a massive improvement from the hours they used to wait.

- Early Bug Detection: Critical bugs were caught almost as soon as they were introduced, which made them far easier and cheaper to fix.

- Increased Developer Confidence: With fast, reliable feedback, the dev team could merge and deploy code more often and with way less stress.
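The selection step in this case study can be sketched in a few lines. It assumes two inputs you would pull from your own tooling. The first is the set of files changed in a commit, taken from version control. The second is, for each test, the source files it exercised in past runs plus its historical failure rate. The `CoverageRecord` type, test names, file paths, and the simple scoring rule are hypothetical. A production system would replace the hand-written score with a trained model.

```python
from dataclasses import dataclass


@dataclass
class CoverageRecord:
    name: str
    covered_files: set   # source files this test exercised in past runs
    failure_rate: float  # share of recent runs that failed (0.0-1.0)


def select_tests(changed: set, tests: list, budget: int) -> list:
    """Pick up to `budget` tests that touch changed files, riskiest first."""
    scored = []
    for test in tests:
        overlap = len(test.covered_files & changed)
        if overlap == 0:
            continue  # cannot be affected by this change, so skip it for fast feedback
        # More overlap with the change ranks first; failure history breaks near-ties.
        scored.append((overlap + test.failure_rate, test.name))
    scored.sort(key=lambda pair: (-pair[0], pair[1]))
    return [name for _, name in scored[:budget]]


tests = [
    CoverageRecord("test_checkout_total", {"cart.py", "pricing.py"}, 0.10),
    CoverageRecord("test_apply_coupon", {"pricing.py"}, 0.30),
    CoverageRecord("test_login", {"auth.py"}, 0.05),
]
print(select_tests({"pricing.py"}, tests, budget=10))
# → ['test_apply_coupon', 'test_checkout_total']
```

Skipped tests are not discarded. A common pattern is to still run the full suite later, for example nightly, so gaps in the coverage mapping get caught.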

These examples make it clear: artificial intelligence in test automation isn't some far-off concept anymore. It’s a practical, powerful solution that is helping companies build better software, faster, right now. By automating visual checks, generating smart data, and prioritizing tests, AI tackles some of the biggest testing challenges and delivers measurable improvements in efficiency, coverage, and speed.

Putting AI Test Automation to Work with DotMock

Alright, let's get down to brass tacks. Moving from theory to practice is where you'll see what artificial intelligence in test automation can really do. The good news? You don't need to rip out your entire QA process and start from scratch. The smartest way to get started is to be strategic and incremental—pick one specific, high-impact problem and solve it first.

The key is to start small. Find a real pain point that’s a constant time-sink for your team. A flaky regression suite is often the perfect candidate. You know the one—it’s that collection of tests everyone dreads running because it spits out a sea of false positives. Tackling this first gives you a low-risk, high-reward project to prove the value of AI right away.

Choosing Your Pilot Project

Before you even think about tools, you need to pick a pilot project that sets you up for a win. Look for a user workflow that is:

- Stable but High-Traffic: Think about something like user login or a search feature. These are critical to the business but don't get redesigned every other week.

- Prone to Minor UI Changes: This is the perfect scenario for AI's self-healing capabilities to really shine when developers make small tweaks to a button or label.

- Well-Understood: Your team already knows exactly how it's supposed to work, which makes it simple to validate the AI-generated tests.

Once you have a target in mind, you can start to see how a modern AI testing tool like DotMock would handle the job.

How AI Tools Like DotMock Actually Work

Imagine you point DotMock at your app's login page. Instead of sitting around waiting for you to write a script, its AI gets to work. First, it "crawls" the application, digging into the Document Object Model (DOM) to understand every single button, form field, and link. It doesn't just see a jumble of code; it sees a user interface with distinct, functional parts.

Then, DotMock creates intelligent locators for each element. This is a huge step up from just grabbing a fragile XPath. It builds a rich profile for the "Login" button based on multiple attributes: its text, its position on the page, its color, and how it relates to other nearby elements. This multi-faceted understanding is the secret sauce behind its self-healing powers.
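DotMock's internal representation isn't described here. The sketch below is therefore a hypothetical illustration of the general technique, using only Python's standard-library `html.parser`. It walks the markup, records each interactive element, and stores several attributes per element instead of a single selector. Visual properties such as color and on-screen position need a real browser, so this version uses document order and the neighboring element as rough stand-ins. `ElementInventory` and the profile fields are assumptions.

```python
from html.parser import HTMLParser
from typing import Optional

INTERACTIVE_TAGS = {"a", "button", "input", "select", "textarea"}


class ElementInventory(HTMLParser):
    """Builds a multi-attribute profile for each interactive element on a page."""

    def __init__(self) -> None:
        super().__init__()
        self.elements = []
        self._reading_label: Optional[dict] = None  # element whose visible text is pending

    def handle_starttag(self, tag, attrs):
        if tag not in INTERACTIVE_TAGS:
            return
        attributes = dict(attrs)
        previous = self.elements[-1] if self.elements else None
        profile = {
            "tag": tag,
            "id": attributes.get("id"),
            "name": attributes.get("name"),
            "type": attributes.get("type"),
            "text": attributes.get("value") or attributes.get("placeholder") or "",
            "order": len(self.elements),  # document order as a rough proxy for position
            # Relationship to the neighboring element, e.g. "sits after the password field".
            "previous": (previous["name"] or previous["id"]) if previous else None,
        }
        self.elements.append(profile)
        if tag in {"a", "button"}:
            self._reading_label = profile  # its visible label arrives in handle_data

    def handle_data(self, data):
        if self._reading_label is not None and data.strip():
            combined = f"{self._reading_label['text']} {data.strip()}"
            self._reading_label["text"] = combined.strip()

    def handle_endtag(self, tag):
        if self._reading_label is not None and tag == self._reading_label["tag"]:
            self._reading_label = None


page = """
<form>
  <input id="user" name="username" type="text" placeholder="Username">
  <input id="pass" name="password" type="password">
  <button id="login-btn-old" type="submit">Log in</button>
</form>
"""
inventory = ElementInventory()
inventory.feed(page)
for element in inventory.elements:
    print(element)
```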

A Practical Example: The Self-Healing Login Test

Let's walk through a simple login flow to see this in action. A QA engineer might use DotMock’s low-code interface to quickly record the test:

- Navigate to the login page.

- Type "testuser" into the username field.

- Type "password123" into the password field.

- Click the login button.

- Verify that the user dashboard appears.

Behind the scenes, DotMock turns these actions into a stable test script. Now, let’s say a developer pushes an update that changes the login button's ID from id="login-btn-old" to id="login-btn-new".

With a traditional automation script, this tiny change would break the test instantly. The script would look for login-btn-old, come up empty, and throw an error. This is exactly the kind of thing that wastes countless hours on test maintenance.

But DotMock's AI is much smarter. When it can't find the original ID, it doesn't just give up. It checks its intelligent profile for that button. It sees an element in roughly the same spot, with the same "Login" text, sitting next to the same password field. It correctly concludes this is the button it's looking for, just with a new ID.

The AI then automatically updates its locator for the button on the fly and continues the test, which passes without a hitch. The test has "healed" itself—no human intervention required. This fundamental shift is what turns your test suite from a brittle liability into a truly resilient asset.
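The healing decision itself can be sketched as a weighted similarity score between the stored profile and each element on the updated page. This is a hypothetical illustration of the general approach, not DotMock's actual algorithm. The attribute names, weights, and 0.6 acceptance threshold are assumptions, and real tools also weigh visual signals such as on-screen position. The per-attribute breakdown is returned with the match, which leaves a record of why the locator changed and makes each healing decision reviewable.

```python
from typing import Optional

# Hypothetical weights: how much each matching attribute counts toward a match.
WEIGHTS = {"text": 0.4, "tag": 0.2, "type": 0.1, "previous": 0.3}


def similarity(stored: dict, candidate: dict) -> tuple:
    """Weighted share of stored attributes the candidate matches, plus a breakdown."""
    breakdown = {}
    for attr, weight in WEIGHTS.items():
        expected = stored.get(attr)
        # Only credit attributes the stored profile actually has a value for.
        matched = expected is not None and candidate.get(attr) == expected
        breakdown[attr] = weight if matched else 0.0
    return sum(breakdown.values()), breakdown


def heal(stored: dict, candidates: list, threshold: float = 0.6) -> Optional[tuple]:
    """Return (element, score, breakdown) for the best match, or None if unsure."""
    for candidate in candidates:
        if stored.get("id") and candidate.get("id") == stored["id"]:
            return candidate, 1.0, {"id": "exact match"}  # original locator still valid
    best, best_score, best_breakdown = None, 0.0, {}
    for candidate in candidates:
        score, breakdown = similarity(stored, candidate)
        if score > best_score:
            best, best_score, best_breakdown = candidate, score, breakdown
    if best is None or best_score < threshold:
        return None  # too ambiguous: fail the test and let a human decide
    return best, best_score, best_breakdown


stored_login = {"tag": "button", "id": "login-btn-old", "type": "submit",
                "text": "Log in", "previous": "password"}
page_after_update = [
    {"tag": "input", "id": "user", "type": "text", "text": "", "previous": None},
    {"tag": "input", "id": "pass", "type": "password", "text": "", "previous": "username"},
    {"tag": "button", "id": "login-btn-new", "type": "submit",
     "text": "Log in", "previous": "password"},
]
match = heal(stored_login, page_after_update)
if match:
    element, score, breakdown = match
    print(f"healed to #{element['id']} (score {score:.2f}): {breakdown}")
```

Returning `None` below the threshold is a deliberate choice. A confident wrong match would silently test the wrong element, so an ambiguous page should fail loudly instead.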

If you're ready to see this in a live environment, you can jump right in with our DotMock Quickstart guide. Getting your hands on the tool is the best way to truly grasp how AI in test automation can cut down on tedious maintenance and help your team ship higher-quality software, faster.

Navigating the Real-World Hurdles

Bringing AI into your testing process can be a game-changer, but it’s not a magic wand. If you want to get it right, you have to go in with your eyes wide open to the challenges you'll likely face. The good news is that knowing these hurdles upfront helps you build a solid strategy to overcome them.

One of the first things you'll run into is the learning curve. New tools and different ways of working can feel daunting, and you might even hear whispers from team members worried that AI is coming for their jobs. The best way to handle this is to frame it as upskilling, not replacement. The whole point is to give your talented QA team superpowers, not to show them the door.

Keeping Expectations and Costs in Check

It's absolutely critical to set realistic expectations from day one. Think of AI as an incredibly smart assistant—one that’s brilliant at chewing through repetitive, data-heavy work. But it’s not a substitute for human insight. It doesn't understand the why behind your business logic or the creative, unexpected ways a real person might try to break your app.

Then there's the practical matter of cost. Let's be honest, many AI-powered tools come with a bigger price tag than the open-source frameworks you might be used to. To get the green light, you need to build a compelling business case that focuses on the long-term return on investment (ROI).

Don't just talk about the license fee. Frame the discussion around the total cost of ownership and highlight where AI actually saves money:

- Less Time on Maintenance: How many engineering hours are you currently sinking into fixing flaky, brittle tests? AI can slash that number.

- A More Productive Team: Show how AI frees up your best testers to focus on what humans do best—exploratory testing, usability, and complex edge cases.

- Faster Releases: When you get feedback faster, you can ship faster. That speed to market has real business value.

Suddenly, it's not just a tool expense; it's a strategic investment in becoming faster and better.
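To turn those talking points into numbers, a back-of-the-envelope first-year calculation like the hypothetical one below can help. Every input is a placeholder to replace with your own measurements: current maintenance hours, a realistic reduction estimate, loaded hourly cost, and license and onboarding costs. None of the figures are benchmarks, and the 48 working weeks per year is an assumption.

```python
def annual_roi(
    maintenance_hours_per_week: float,
    expected_reduction: float,   # fraction of maintenance time saved, 0.0-1.0
    hourly_cost: float,
    annual_license_cost: float,
    onboarding_cost: float,
    weeks_per_year: int = 48,    # assumed working weeks per year
) -> dict:
    """First-year maintenance savings, total cost, and ROI for a testing tool."""
    hours_saved = maintenance_hours_per_week * expected_reduction * weeks_per_year
    savings = hours_saved * hourly_cost
    total_cost = annual_license_cost + onboarding_cost
    return {
        "hours_saved": hours_saved,
        "savings": savings,
        "total_cost": total_cost,
        "roi": (savings - total_cost) / total_cost,
    }


# Placeholder inputs only: 20 h/week of upkeep, 50% reduction, $80/h,
# $20,000 license, $5,000 onboarding.
result = annual_roi(20, 0.5, 80, 20_000, 5_000)
print(f"ROI {result['roi']:.1%} on {result['hours_saved']:.0f} saved hours")
# → ROI 53.6% on 480 saved hours
```

The sketch counts only maintenance time. Faster releases and the exploratory testing time your team gains back are real benefits, but they are harder to price. It is safer to present them alongside this figure than to fold guessed values into it.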

Cracking Open the "Black Box"

A perfectly valid concern many people have with AI is the so-called "black box" problem. This is when an AI model does something—like flagging a visual glitch—but you have no idea why it made that call. That lack of transparency can kill trust and make debugging a nightmare.

This is why you should always choose tools that prioritize explainability. The best platforms don't just give you a result; they show their work with detailed logs and visual reports. This gives your team the insight they need to actually trust what the AI is telling them.

At the end of the day, human oversight is non-negotiable. Your team’s domain knowledge is your secret weapon. They need to stay in the driver's seat, setting the strategy and validating the AI’s output to make sure it aligns with your quality standards.

Successfully navigating these challenges is all about finding the right balance. By investing in your people, being realistic about what AI can (and can't) do, and picking transparent tools, you can sidestep the common pitfalls. This approach turns potential roadblocks into manageable steps on your journey to a smarter, more efficient testing process.

Got Questions About AI in Testing? We've Got Answers.

As more and more teams dip their toes into AI-driven test automation, it's natural for questions to pop up. Getting your head around the specifics is the first step toward making it work for you. Let's tackle some of the most common things people ask.

Will AI Make Human QA Testers Obsolete?

Nope, not a chance. Think of AI as a powerful new member of the team, not a replacement for your existing talent. It’s brilliant at handling the mind-numbing, repetitive tasks that eat up so much time—like running thousands of regression tests overnight or checking visual consistency across 50 different device screens.

By taking over that grunt work, AI frees up your human testers to focus on what people do best:

- Exploratory Testing: Digging into the application with creativity and intuition to find those weird, edge-case bugs that scripts always miss.

- Usability Testing: Actually understanding if the user experience feels right, something a machine can't judge.

- Critical Thinking: Pushing back on business logic and asking, "Does this feature actually solve the user's problem?"

AI handles the robotic tasks so humans can focus on the truly intelligent work. It’s a partnership, not a replacement.

What's the Difference Between Machine Learning and AI in Testing?

It’s easy to get these two mixed up, but the relationship is pretty straightforward.

Artificial Intelligence (AI) is the big umbrella term. It’s the broad idea of building smart systems that can perform tasks that typically require human intelligence. It's the destination.

Machine Learning (ML) is the engine that gets us there. It's a specific branch of AI where we feed algorithms massive amounts of data and let them learn to spot patterns and make predictions on their own. Instead of programming rules for every single possibility, the system learns from experience.

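An analogy helps. If AI is the self-driving car as a whole, ML is the vision system inside it that has learned to identify road signs and pedestrians so the car can navigate safely. In testing, AI is the autonomous testing system, and ML is the learned component inside it, such as the model that recognizes a "Login" button from its attributes or predicts which tests a code change is likely to break.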

How Can I Start Using AI in Testing if I Have Zero Experience?

Getting started is less intimidating than you might think. The key is to start small and aim for a quick, meaningful win.

First, find a real headache in your current testing process. Is it a flaky regression suite that breaks with every minor UI change? Or maybe a set of tests that takes hours to run? That's your target.

Next, pick an accessible, low-code AI testing tool. Look for one with features like self-healing tests, as this dramatically lowers the barrier to entry and shows value right away. Run a small pilot project on a less critical feature of your app. This gives your team a safe space to learn the tool and build confidence without risking a major release. From there, you can build on that success one step at a time.

Ready to put an end to constant test maintenance and ship faster? dotMock gives you a powerful, zero-configuration platform to build a truly resilient testing strategy. You can spin up production-ready mock APIs in seconds and simulate any scenario you can think of, ensuring your apps are ready for anything. Explore dotMock and start mocking for free.