API Rate Limits Explained A Practical Guide

At its core, an API rate limit is simply a rule that dictates how many times a user or client can call an API within a certain window of time. Think of it as a bouncer at a popular club—they can only let so many people in per minute to keep the venue from becoming dangerously overcrowded.

This isn't about being restrictive; it's about ensuring a smooth, stable, and fair experience for everyone using the service.

What Are API Rate Limits And Why They Matter

Let's stick with a real-world analogy. Picture a small, popular bakery that can only bake 50 loaves of bread an hour. If one customer comes in and orders 100 loaves, the entire system grinds to a halt. The oven is maxed out, and no one else can get any bread. An API rate limit works the same way for a digital service, setting a clear cap on requests to prevent one user from hogging all the resources.

These limits are a sign of a well-designed API. Without them, a single buggy script or a malicious attack could easily overwhelm a server, slowing it down or crashing it entirely for every other user. It's a fundamental practice used by every major provider, from GitHub to Google, to keep their services online and performant.

The Core Purpose of Rate Limiting

The main reason for implementing API rate limits is to protect the backend infrastructure and manage traffic effectively. This really boils down to three key goals that help both the API provider and the developers who rely on it.

- Ensuring Fair Usage: Rate limits level the playing field. They stop one high-volume user from consuming all the server's bandwidth, which guarantees that every application, whether large or small, gets a fair shot at accessing the API.

- Maintaining System Stability: Each API call uses server resources—CPU, memory, and database connections. A sudden, massive spike in requests can cause servers to buckle, leading to slow response times or even a complete outage. Limits are the first line of defense against this kind of performance collapse.

- Enhancing Security: Many cyberattacks, like Denial-of-Service (DoS) attacks, rely on flooding a server with an overwhelming number of requests. Rate limiting helps shut down these brute-force attempts at the source by simply refusing to process requests from an IP that exceeds the cap.

By managing the flow of requests, API providers can deliver a predictable and reliable service. For developers, this predictability is gold—it lets them build more resilient software because they know exactly what to expect from the API.

A Foundational Component of API Design

It's helpful to see rate limits not as a frustrating barrier, but as a feature of a mature, well-run API. They're a sign that the provider is serious about stability and scale.

For the provider, limits are a crucial tool for managing operational costs and scaling their infrastructure in a predictable way. For the developer consuming the API, they provide clear, documented "rules of the road" to work within.

Learning to respect and work with these boundaries is the first step toward building applications that are good digital citizens. When your code honors an API's rate limits, it contributes to the health of the entire ecosystem. This is the foundational concept you need to grasp before we dive into the different algorithms used to enforce these limits and how to handle them in your own code.

How Common Rate Limiting Algorithms Work

To enforce API rate limits, systems need a clear set of rules—an algorithm—to count requests and decide when it's time to say "stop." While the end result is often the same (that familiar 429 Too Many Requests error), the method used can dramatically affect how an application performs under pressure.

Getting to know these common algorithms is key, as it helps you anticipate how an API will behave and why. Let's break down the mechanics behind four popular strategies using some straightforward analogies.

The Token Bucket Algorithm

Imagine you have a bucket that can hold a maximum of 100 tokens. To make an API request, you have to "spend" one token from that bucket. If the bucket is empty, you simply have to wait.

So how do you get more tokens? A separate process adds new ones to the bucket at a steady rate, maybe 10 tokens per second.

This setup has a fantastic advantage: it allows for short, sharp bursts of traffic. If your bucket is full with 100 tokens, you can fire off 100 requests instantly. After that initial burst, your request rate settles down to the refill rate of 10 per second. This flexibility makes the Token Bucket algorithm perfect for apps with unpredictable traffic—long quiet periods followed by sudden spikes in demand.

The Leaky Bucket Algorithm

Now, picture a different kind of bucket, one with a small hole in the bottom. You can pour requests into this bucket as quickly as you like, but they only get processed as they "drip" out of the hole at a steady, constant pace.

If requests come in faster than they can leak out, the bucket starts to fill up. Once it's full, any new requests are just turned away until there's space again.

This algorithm is all about enforcing a smooth and predictable outbound traffic rate. Unlike the Token Bucket, it doesn't do bursts. No matter how many requests arrive at once, the API processes them at a fixed, controlled speed. This is ideal for services that need to shield downstream resources, like a database that can only handle a certain number of queries per second.

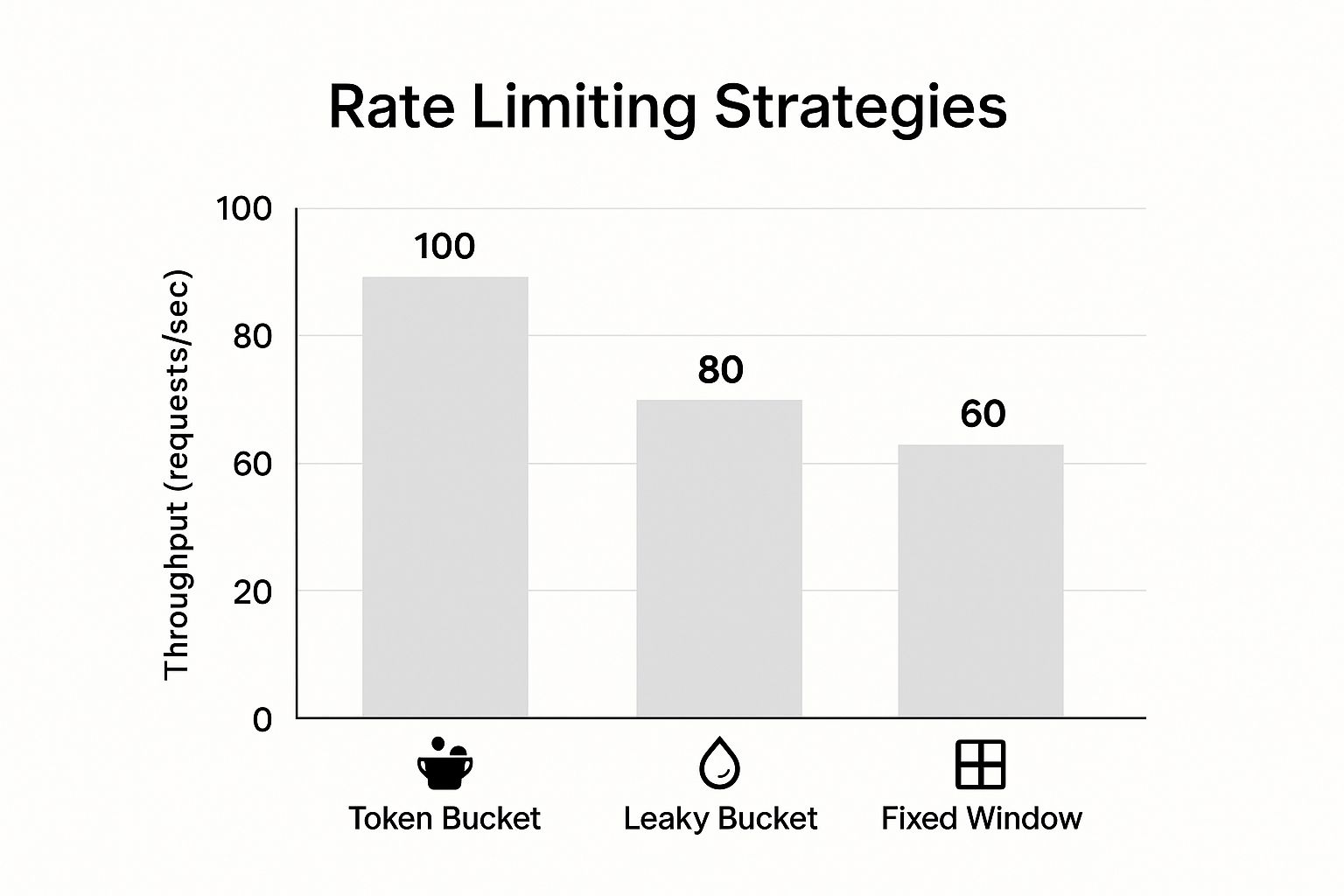

This infographic shows a simplified comparison of how request throughput might look across these different strategies.

As you can see, the Token Bucket allows for those high peaks because of its burst capacity, while the Leaky Bucket keeps things much more consistent.

The Fixed Window Counter Algorithm

This is probably the simplest method to wrap your head around. It works by defining a fixed time window, like one minute, and allowing a specific number of requests within that window, say 1,000 requests.

A counter keeps track of requests from a user. When a request comes in, the system checks if the counter is already over 1,000 for the current minute. If it's not, the request goes through, and the counter ticks up. If it is, the request is rejected. At the start of the next minute, the counter simply resets to zero.

The main drawback here is a problem at the "edge" of the window. A user could sneak in 1,000 requests in the last second of a minute and another 1,000 in the very first second of the next. That’s a 2,000-request burst over two seconds, double the intended limit, which could easily overwhelm a server.

The Sliding Window Log Algorithm

The Sliding Window Log algorithm is a smarter version of the Fixed Window, offering a much more accurate and fair way to count. Instead of a basic counter, this method maintains a log of timestamps for every single request.

When a new request arrives, the system does two quick things:

- It drops any timestamps from the log that are older than the time window (e.g., more than a minute ago).

- It counts how many timestamps are left in the log.

If that count is below the limit, the new request is approved and its timestamp is added to the log. If the count is already at the limit, the request is denied. This approach completely avoids the window-edge problem by always maintaining a precise, rolling count, leading to smoother and more reliable enforcement of API rate limits.

To bring it all together, here’s a quick comparison of the four algorithms we’ve just discussed.

Comparing Common Rate Limiting Algorithms

This table summarizes the core idea, best use cases, and key trade-offs for each of the four main algorithms.

| Algorithm | Core Analogy | Best Suited For | Key Advantage | Potential Drawback |

|---|---|---|---|---|

| Token Bucket | A bucket refilling with tokens to "spend" on requests. | Applications with bursty, unpredictable traffic. | Allows for high-throughput bursts. | Can be more complex to implement than simpler counters. |

| Leaky Bucket | A bucket with a hole, processing requests at a steady drip. | Services that need to protect downstream systems. | Provides a smooth, predictable output rate. | Bursts of traffic are throttled, not accommodated. |

| Fixed Window | A simple request counter that resets every X seconds. | Basic rate limiting where precision isn't critical. | Simple and efficient to implement. | Allows double the rate at the edges of time windows. |

| Sliding Window Log | A log of timestamps for a constantly updating window. | Scenarios requiring precise, fair rate control. | Highly accurate and fair to users. | Can be memory-intensive due to storing all timestamps. |

Each algorithm offers a different way to solve the same problem. Choosing the right one often depends on the specific needs of the API—whether it needs to handle bursts, protect fragile resources, or just enforce a simple, fair usage policy.

Designing Effective API Rate Limits

Crafting smart API rate limits isn't about throwing up a wall; it's about thoughtful architecture. A well-designed system protects your infrastructure from getting hammered while empowering developers to build amazing things on your platform. The real goal is to find that sweet spot between stability, fairness, and a great developer experience.

It all starts with defining clear, transparent usage policies. Developers need to know the rules of the road before they start coding. Ambiguous or hidden limits are a fast track to frustration and buggy applications. Clarity and predictability are your best friends here.

Ultimately, a solid rate limiting strategy is a critical piece of your API's architecture and a major boost to its security. For a broader view, our guide on essential API security best practices shows exactly how rate limiting fits into your overall defense plan.

Establishing Clear and Fair Policies

The bedrock of any good rate limiting system is a policy that developers can actually understand and plan for. This information should be front-and-center in your API documentation, not buried in the fine print somewhere.

First, take a hard look at your application's typical usage patterns. Do you see short, intense bursts of activity, or is it more of a steady stream of requests? Answering this helps you pick the right algorithm—a Token Bucket is great for handling bursts, while a Leaky Bucket is better for smoothing out traffic. The choice depends entirely on the nature of your service and your users' needs.

Next, you have to decide what to limit. Are you tracking requests by IP address, API key, or user account? Each comes with its own pros and cons:

- IP-based limiting is straightforward but can unfairly punish multiple users on a shared network, like a corporate office or a university campus.

- API key-based limiting is the most common and arguably the fairest method. It ties usage directly to a specific developer or application.

- User account limiting gives you a ton of control, letting you set different limits for various subscription tiers.

Communicating Limits Transparently

Once your policy is set, communication is everything. The most elegant way to do this is by sending standard HTTP headers with every single API response. This gives developers real-time, programmatic feedback on where they stand.

The three essential headers are

X-RateLimit-Limit(the total request quota per period),X-RateLimit-Remaining(how many requests are left), andX-RateLimit-Reset(a timestamp for when the quota refreshes).

And when someone inevitably hits their limit, your API should respond with a 429 Too Many Requests status code. What's even more helpful is including a Retry-After header that tells the client exactly how many seconds to wait before trying again. This simple step turns a frustrating error into a clear, actionable instruction.

Implementing Tiered Limits and Quota Increases

A one-size-fits-all limit just doesn't work in the real world. A small startup building a prototype has completely different needs than an enterprise-scale application serving thousands of users. This is where tiered limits shine.

By offering different rate limits for various plans (think Free, Pro, and Enterprise), you can serve a much wider audience. A free tier might have tight limits to prevent abuse, while paid tiers get the high throughput needed to support growing applications.

This is a common model. For example, some analytics APIs have default limits that work for most people but offer higher quotas for customers with high-volume needs. It's also crucial to have a clear, documented process for developers to request a quota increase. Whether it's a simple contact form or an automated dashboard feature, giving users a path to scale ensures their success becomes your success.

Handling Rate Limits Gracefully In Your Application

As a developer, the way your application handles an API's rate limits is a direct measure of its reliability. When you hit a limit, simply crashing or throwing a generic error isn't an option—it creates a jarring experience for your users. The goal is to build resilient, well-behaved software that sees these boundaries coming and handles them with elegance.

This is all about shifting from a reactive to a proactive mindset. Don't just wait around for a 429 Too Many Requests error to pop up. Instead, design your code with the fundamental assumption that you will encounter limits. This approach is key to keeping your application stable and being a good citizen of the API provider's infrastructure.

Implement Smart Retry Logic

When you do hit a rate limit, the absolute worst thing you can do is immediately fire off another request in a tight loop. This creates a "thundering herd" problem that hammers the server, making the very problem the rate limit was designed to prevent even worse.

The industry-standard solution here is exponential backoff. The concept is pretty straightforward: after a failed request, you wait a moment before trying again. If that next attempt also fails, you double the waiting period, and you keep doubling it until the request succeeds.

But there's a catch. If all your clients are backing off on the same schedule, they'll all retry at the exact same time. That’s why you need to add jitter—a small, random amount of time added to each backoff delay. This simple trick staggers the retry attempts from different clients, smoothing out the request load on the API.

Combining exponential backoff with jitter gives your application a graceful way to recover from rate limiting without overwhelming the API. It’s a foundational technique for building robust API integrations.

Reduce Your API Call Footprint

Honestly, the best way to handle api rate limits is to avoid hitting them altogether. This means being deliberate about every single call your application makes and cutting out anything that’s redundant or just plain unnecessary.

Here are two powerful strategies that can dramatically shrink your request volume:

- Caching Responses: Does your app constantly ask for data that rarely changes? If so, set up a local cache. By storing responses for a specific duration (the Time-to-Live or TTL), you can serve data directly from your cache instead of making a fresh API call every single time.

- Using Webhooks: Instead of repeatedly polling an API just to check for new updates, see if it supports webhooks. Think of a webhook as a "reverse API"—the service tells you when something happens, pushing data to your application proactively. This completely eliminates the need for constant checking.

To do this effectively, you have to know what the API offers. Digging into the documentation is essential. For more on that, check out our guide on API documentation best practices to learn how to find these kinds of features.

Respect API-Specific Rules

Finally, you have to play by the rules of the specific API you're using. Every API is different. Some have hard-and-fast caps, while others use more dynamic throttling based on real-time server load.

A great real-world example is Interactive Brokers' TWS API. They limit users to 50 simultaneous historical data requests. But they also layer "soft" limits on top of that to balance system load, and making too many requests can get you temporarily throttled or even disconnected. This shows how platforms often use a nuanced, multi-layered approach to keep their systems stable for everyone.

Bottom line: read the documentation, understand the policies, and build your application to honor them. It's how you ensure your app remains a good citizen in the API ecosystem.

How To Monitor and Test For Rate Limits

Writing solid error handling is only half the battle. You have to be sure it actually works under pressure. That’s where proactive monitoring and simulating failure scenarios come in, ensuring your application can handle API rate limits gracefully when it really counts.

Skipping this part is like building a ship but never testing it in rough seas. You’re just crossing your fingers and hoping it stays afloat when a storm hits. A much better approach is to set up systems that watch your request volume and use tools to safely trigger rate-limit errors in a controlled test environment.

Proactive Monitoring and Alerting

First things first: you need visibility into how your application is using APIs. You can't manage what you don't measure. Integrating logging and metrics is the only way to get a clear picture of your request patterns over time.

By keeping an eye on the X-RateLimit-Remaining header, for example, you can see exactly how close you are to hitting your quota in real time. Feed this data into a monitoring dashboard, and your team gets a clear, live view of your API usage.

But the real magic happens when you set up alerts based on these metrics.

- Threshold Alerts: Configure an alert to fire when

X-RateLimit-Remainingdrops below a certain point, say 20%. This gives you a crucial heads-up long before you actually hit the wall. - Spike Detection: Set up another alert for sudden, unusual increases in API calls. This can help you spot buggy code, runaway loops, or unexpected user behavior before it takes down your service.

With these simple measures in place, rate limiting transforms from an unexpected crisis into a predictable event you can actually manage.

Simulating Rate Limits With API Mocking

Monitoring tells you a storm might be coming, but how do you know your ship is ready? Deliberately overwhelming a live production API is not just a bad idea—it’s reckless. This is where API mocking tools become absolutely essential.

API mocking lets you create a simulated version of an API that you have complete control over. Instead of hitting the real service, you can program your mock to behave in very specific ways, including triggering a rate limit scenario whenever you want.

A mock API gives you a safe, isolated sandbox to test your application's resilience. You can trigger any error condition you need—like a

429 Too Many Requestsresponse—without making a single call to the production server or burning through your real quota.

This is, hands down, the most reliable way to confirm that your retry logic and exponential backoff strategies work exactly as you designed them. Building these failure scenarios is a cornerstone of any modern testing workflow. If you want to dive deeper, check out our guide on how to test REST APIs effectively.

Using a tool like dotMock, you can quickly configure an endpoint to return a 429 error along with a specific Retry-After header. It’s as simple as setting up a mock response.

This level of control is key to validating that your application's error handling and retry mechanisms will perform correctly under pressure, which is ultimately what protects your user experience.

When we talk about API limits, the first thing that usually comes to mind is the classic "requests per minute." But that’s just scratching the surface. A well-designed API uses a much richer set of controls to keep things stable and ensure everyone gets a fair slice of the pie.

Thinking beyond just request counts is key to building applications that don't just work, but are truly resilient. After all, a single API call with a massive payload can strain a server just as much as a hundred tiny ones. That's why API providers get a lot more specific with their rules.

More Than Just How Often You Call

Let's break down three other common types of limits you'll run into. Each one is designed to plug a different kind of hole that could otherwise sink the ship.

- Concurrency Limits: This is all about how many requests you have in flight at the same time. Think of it like opening too many tabs in your browser—eventually, everything grinds to a halt. Concurrency limits stop one client from monopolizing all the server's attention at once.

- Data Payload Limits: This one's simple: it's a cap on how big a single request or response can be. A social media API might cap video uploads at 500 MB, for example. This prevents one huge file from clogging the pipes and tying up the server for ages.

- Temporal Limits: Here, the constraint is on how far back in time you can pull data. An analytics API might give you super-detailed, minute-by-minute data for the last month, but switch to daily summaries for anything older. This is a smart way to stop massively expensive queries from digging through years of historical records.

An API's limits are a direct reflection of its architecture. Once you understand the different ways an API protects itself, you can design your application to work with it, not fight against it.

Seeing These Limits in the Wild

These constraints aren't just abstract concepts; they’re hard at work in the APIs you use every day. A financial data service, for example, might offer a firehose of real-time stock quotes but put strict temporal limits on retrieving decades of historical pricing to avoid bogging down its databases.

Content delivery networks are another great example. Some stats APIs, like the one from Fastly, will give you minutely data for the last 35 days but only hourly data for the last 375 days. It’s a practical trade-off that keeps queries for recent, high-value data snappy while managing the load of pulling older information.

By getting familiar with these different types of limits, you can build much smarter integrations that gracefully handle an API’s boundaries instead of crashing into them.

Frequently Asked Questions About API Rate Limits

Once you start working with APIs, it's not a matter of if you'll encounter rate limits, but when. They're a fundamental part of how modern services operate, but they can be a source of confusion. Let's clear up some of the most common questions that pop up for developers and product managers.

Getting a handle on these concepts isn't just academic; it's about building applications that are robust and dependable. When you understand the rules, you can spend less time chasing down mysterious errors and more time building great features.

What Is The Most Common HTTP Status Code For Rate Limiting?

The industry standard is 429 Too Many Requests. This status code is the API's way of politely but firmly telling you, "Hey, you're calling me too often. Please slow down."

A well-behaved API won't just block you. It will also send back a Retry-After header, which is incredibly useful. It tells you exactly how many seconds you need to wait before trying your request again. While 429 is the one to expect, keep in mind that some older or non-standard APIs might fall back on a 403 Forbidden or even a 503 Service Unavailable, so it's always smart to double-check the docs.

How Do I Find An API's Specific Rate Limits?

Your first and best stop is always the official API documentation. Good documentation will have a clear section labeled "Rate Limits," "Usage Policies," or something similar. This is the definitive source for understanding the rules of engagement.

Beyond the docs, many APIs provide real-time feedback directly in their response headers. You can often see exactly where you stand with every call. Look for these common headers:

X-RateLimit-Limit: The maximum number of requests you're allowed in the current time window.X-RateLimit-Remaining: How many requests you have left before you hit the limit.X-RateLimit-Reset: The exact time (usually as a Unix timestamp) when your quota will reset to full.

Can I Increase My API Rate Limit?

Absolutely. In fact, most API providers plan on it. They typically offer tiered pricing where higher-level plans come with more generous rate limits. If you're on a free plan and your app's usage is growing, upgrading to a paid or enterprise tier is the most straightforward way to get more capacity.

What if you have massive-scale needs that go beyond even the highest public-facing plan? It's time to talk to a human. Reach out to the provider’s sales or support team. They're often able to create custom plans with bespoke limits for high-volume customers. The trick is to be proactive and start that conversation before you're constantly bouncing off the ceiling.

Of course, knowing how to handle a rate limit is one thing; testing it is another. With dotMock, you can spin up a mock API in seconds and configure it to return a 429 Too Many Requests error. This lets you test and perfect your retry logic and error handling in a safe environment, long before your users see a problem. You can get started for free at dotmock.com and start building more resilient software today.