What is Chaos Engineering? Build Resilient Systems Today

Think of chaos engineering as a 'flu shot' for your software. It’s the practice of intentionally creating controlled failures—like temporarily shutting down a server or slowing down a network—to see how your system reacts. The goal isn't to break things for the sake of it, but to find hidden weaknesses before they become real-world outages for your customers.

What Is Chaos Engineering, Really?

At its core, chaos engineering is a disciplined approach to identifying failures before they become full-blown outages. Instead of waiting for something to go wrong, you proactively inject carefully planned faults into your system to test its response. It might sound a little crazy, but it's the best way to build real confidence that your application can withstand unexpected turbulence.

This practice is fundamentally different from traditional quality assurance. While QA focuses on validating expected behavior in a controlled environment ("Does this button do what it's supposed to?"), chaos engineering explores the unexpected behavior of the entire system, often in production ("What happens when the database this button relies on suddenly vanishes?").

The discipline took off around 2010, when Netflix popularized it with its now-famous tool, Chaos Monkey. This tool would randomly terminate production instances, forcing engineers to build services that could survive that kind of random failure. The core idea remains the same today: intentionally introduce failures to uncover vulnerabilities before they ever impact a user.

A Shift from Reactive to Proactive

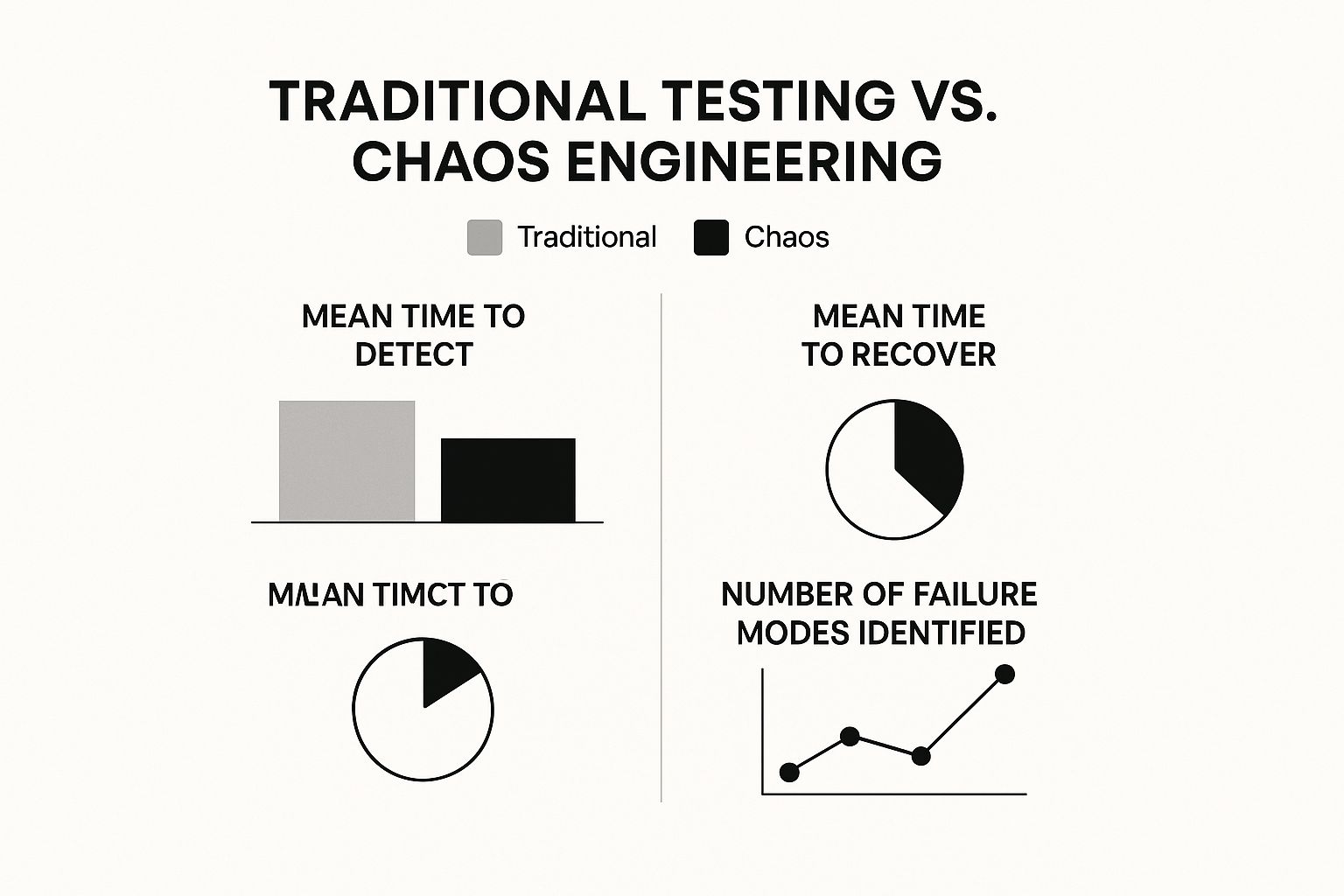

The real difference is the mindset. Traditional testing is often reactive, focusing on known conditions and predictable outcomes. Chaos engineering, on the other hand, is completely proactive. It’s all about preparing for the unpredictable failures that complex, modern systems will inevitably face.

As you can see, a chaos engineering approach typically leads to much faster detection and recovery times. Why? Because teams have already practiced handling these kinds of failures. It’s like a fire drill for your code.

By breaking things on purpose, we build more resilient systems. It’s about embracing failure as a learning opportunity to prevent catastrophic outages down the line.

This shift from avoiding failure to learning from it is what defines modern reliability practices. It allows engineering teams to move from a state of hopeful stability to one of proven resilience.

Chaos Engineering vs Traditional Testing at a Glance

To put it in perspective, let's break down the key differences between these two approaches. While both are essential for quality, they serve very different purposes.

| Aspect | Chaos Engineering | Traditional Testing |
|---|---|---|
| Goal | Discover unknown weaknesses in a complex system. | Verify known functionality and expected behavior. |
| Environment | Production or production-like environments. | Staging, QA, or development environments. |
| Method | Injecting real-world failures (e.g., latency, server failure). | Executing pre-defined test cases and scripts. |
| Focus | System-wide resilience and inter-dependencies. | Individual components or user-facing features. |
| Mindset | Proactive: "What if this breaks?" | Reactive: "Does this work as designed?" |

Ultimately, traditional testing confirms your system can work, while chaos engineering proves it will work, even when things start to go wrong.

Getting to the Heart of Chaos Engineering: The Core Principles

Chaos engineering might sound like letting a bull loose in a china shop, but it's the exact opposite. It's a highly disciplined and scientific practice. Instead of causing random destruction, it’s about running controlled experiments to find hidden weaknesses before they find your customers. Think of it as a fire drill for your software.

This methodical approach is built on a few key principles that turn potential chaos into predictable confidence.

First, Know What "Normal" Looks Like

Before you can spot something wrong, you have to know what "right" looks like. This is your system’s steady state—a clear, measurable picture of its healthy behavior. It’s not just a feeling; it’s defined by hard numbers.

This baseline is a mix of different metrics:

- System Health: Things like CPU utilization staying below 80% or network latency under a certain threshold, say 50ms.

- Business KPIs: Critical indicators like the number of successful checkouts per minute or how quickly your API responds to requests.

This steady state becomes your benchmark. If an experiment pushes your system out of this state and it can't recover on its own, you’ve just found a vulnerability worth fixing. The way you define this baseline often depends on your system's underlying structure, and it helps to understand common software architecture design patterns to build this kind of predictability.
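To make "hard numbers" concrete, here is a minimal Python sketch of a steady-state check. The metric names, the `Threshold` type, and the checkout figure of 100 per minute are all hypothetical; only the 80% CPU and 50ms latency bounds echo the examples above. Treat it as one possible shape for a baseline, not a recommended set of limits.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Threshold:
    """A bound that one metric must respect while the system is healthy."""
    metric: str
    limit: float
    kind: str  # "max": value must not exceed limit; "min": value must not fall below it


# Hypothetical baseline. The numbers are illustrations, not recommendations.
STEADY_STATE = [
    Threshold("cpu_utilization_pct", 80.0, "max"),
    Threshold("network_latency_ms", 50.0, "max"),
    Threshold("successful_checkouts_per_min", 100.0, "min"),
]


def violations(sample: dict[str, float], thresholds: list[Threshold]) -> list[str]:
    """Return the names of metrics that are missing or outside their bound."""
    broken = []
    for t in thresholds:
        value = sample.get(t.metric)
        if value is None:
            broken.append(t.metric)  # a metric we cannot see counts as a violation
        elif t.kind == "max" and value > t.limit:
            broken.append(t.metric)
        elif t.kind == "min" and value < t.limit:
            broken.append(t.metric)
    return broken


healthy = {"cpu_utilization_pct": 62.0, "network_latency_ms": 31.0,
           "successful_checkouts_per_min": 140.0}
degraded = {"cpu_utilization_pct": 93.0, "network_latency_ms": 48.0,
            "successful_checkouts_per_min": 75.0}

print(violations(healthy, STEADY_STATE))
print(violations(degraded, STEADY_STATE))
```

An empty list means the sample sits inside the steady state. During an experiment you would run a check like this repeatedly and watch whether the list empties out again on its own.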

Make a Specific, Testable Guess

Once you know your baseline, it's time to form a hypothesis. This isn't a wild guess. It's a focused, testable statement about how you believe your system will handle a specific failure. You're basically saying, "I bet that if this breaks, that will happen."

A good hypothesis is incredibly specific. For example:

"If our main database in the `us-east-1` region fails, the system will automatically fail over to the `us-west-2` backup within 30 seconds, and customers won't notice a thing."

See how clear that is? It sets an exact expectation and a timeframe, giving your experiment a definitive pass or fail outcome. This is what makes it a real experiment, not just random meddling.
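One way to keep a hypothesis honest is to write it down as data and let code decide pass or fail. The Python sketch below does that for the failover example. The class names, the `evaluate` helper, and the 0.1% error tolerance standing in for "customers won't notice" are assumptions made for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class FailoverHypothesis:
    fault: str
    expected_target: str
    max_failover_seconds: float
    max_error_rate: float  # fraction of failed customer requests we will tolerate


@dataclass(frozen=True)
class Observation:
    serving_region: str
    failover_seconds: float
    error_rate: float


def evaluate(h: FailoverHypothesis, o: Observation) -> tuple[bool, list[str]]:
    """Compare what happened with what was predicted; return (passed, reasons)."""
    reasons = []
    if o.serving_region != h.expected_target:
        reasons.append(f"traffic served from {o.serving_region}, expected {h.expected_target}")
    if o.failover_seconds > h.max_failover_seconds:
        reasons.append(f"failover took {o.failover_seconds}s, limit {h.max_failover_seconds}s")
    if o.error_rate > h.max_error_rate:
        reasons.append(f"error rate {o.error_rate:.2%} exceeded {h.max_error_rate:.2%}")
    return (not reasons, reasons)


HYPOTHESIS = FailoverHypothesis(
    fault="primary database in us-east-1 fails",
    expected_target="us-west-2",
    max_failover_seconds=30.0,
    max_error_rate=0.001,  # assumed tolerance for "customers won't notice"
)

# Hypothetical measurement from one experiment run.
passed, reasons = evaluate(HYPOTHESIS, Observation("us-west-2", 41.0, 0.0))
print("PASS" if passed else "FAIL", reasons)
```

Because the limits are fixed before the experiment runs, nobody can quietly decide afterwards that 41 seconds was "close enough."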

Replicate Real-World Problems

Now for the fun part—actually breaking something. But you're not just pulling random plugs. The goal is to introduce failures that mimic real-world incidents. You're injecting a bit of controlled chaos to see how your system reacts.

Common experiments include:

- Simulating Server Crashes: Taking a virtual machine or container offline.

- Creating Network Lag: Intentionally slowing down the connection between two services.

- Blocking a Dependency: Making a key third-party API temporarily unreachable.

These are the kinds of events that lead to real outages, and you want to trigger them on your own terms.

Always Limit the "Blast Radius"

This is perhaps the most important rule: safety first. You always want to contain the potential impact of your experiment. This is known as the blast radius. The idea is to start small and ensure that if things go sideways, the fallout is minimal.

Never start in production. Begin in a development or staging environment. When you are ready for production, limit the experiment to a tiny slice of traffic, like internal users or just 1% of customers. As you see the system handle these smaller tests successfully, you can gradually widen the blast radius.

This approach ensures that when you do find a problem, it's a valuable lesson, not a four-alarm fire that wakes up your entire customer base.
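Here is a rough Python sketch of how a "1% of customers" slice could be chosen. It hashes the user ID together with an experiment name so the same user always lands on the same side of the line. The function name, the experiment label, and the user IDs are hypothetical, and real platforms may select traffic quite differently.

```python
import hashlib


def in_blast_radius(user_id: str, experiment: str, percent: float) -> bool:
    """Deterministically place a user inside or outside the experiment."""
    digest = hashlib.sha256(f"{experiment}:{user_id}".encode("utf-8")).digest()
    bucket = int.from_bytes(digest[:8], "big") % 10_000  # buckets 0..9999
    return bucket < percent * 100  # 1% covers buckets 0..99


# Hypothetical user population, used only to check the slice size.
users = [f"user-{n}" for n in range(100_000)]
exposed = sum(in_blast_radius(u, "checkout-latency", 1.0) for u in users)
print(f"{exposed / len(users):.2%} of users are in the experiment")
```

A useful property of this design is that widening the radius only adds users. Everyone in the 1% slice is still in the 5% slice, so earlier results stay comparable as you expand.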

Why Your Business Can't Afford to Ignore Chaos Engineering

System downtime isn't just a technical headache; it’s a direct hit to your revenue and reputation. Today's applications are complex webs of microservices and cloud infrastructure, riddled with hidden failure points that standard testing just can't find. All it takes is one small component to fail unexpectedly, and the whole house of cards can come tumbling down.

This is more than a minor inconvenience. For most companies, downtime is a financial disaster. A survey from a few years back found that for 98% of organizations, a single hour of downtime costs over $100,000. Think of chaos engineering as preventative medicine for your systems—it helps you find and fix those expensive weak spots before your customers ever see them. You can dig deeper into the real-world financial cost of outages on Gremlin's website.

This proactive mindset fundamentally changes the game. You stop "hoping nothing breaks" and start "proving you can handle it when something does."

It's Time to Stop Relying on Hope

Hope is not a business strategy, especially when it comes to reliability. In the tangled world of cloud-native applications, something will eventually break. It’s not a matter of if, but when. A third-party API will go down. A network connection will lag. A server will crash. Waiting for these things to happen at 2 a.m. or during your Black Friday sale is a recipe for disaster.

Chaos engineering gives you a structured way to replace that hope with hard evidence. By running controlled experiments, you learn exactly how your system behaves under real-world stress. That knowledge is gold. It helps you build smarter failover mechanisms, set up alerts that actually mean something, and train your team to know precisely what to do when a real crisis hits.

Chaos engineering isn't about creating chaos; it's about containing it. It’s the practice of exploring complexity to build confidence and ensure that when a small fire starts, it doesn't burn the whole house down.

This is how you prepare for the "unknown unknowns"—the failure scenarios you haven't even imagined yet.

Build the Kind of Trust That Keeps Customers Around

At the end of the day, the biggest win is the one your customers feel: rock-solid reliability. Every outage, big or small, chips away at their trust. If a customer hits an error while trying to make a purchase or access their data, you might lose them for good.

When you embrace chaos engineering, you're sending a clear message that you take resilience seriously. This commitment pays off in tangible ways:

- Less Downtime: By finding and fixing weaknesses on your own terms, you suffer from fewer and shorter outages.

- Stronger Customer Loyalty: People stick with services they can count on. A reliable platform builds trust and keeps customers coming back.

- Quicker Incident Response: Your team gets real-world practice at spotting and fixing problems, which drastically cuts down your Mean Time to Resolution (MTTR).

- A Real Competitive Edge: The next time a major cloud provider has an outage, your resilient system will stay up while your competitors are scrambling.

Simply put, chaos engineering is a direct investment in the continuity of your business. It turns reliability from a vague goal into something you can measure, prove, and build your company's success upon. It's how you prepare for failure so you can build a system that rarely fails its users.

How Industry Leaders Use Chaos Engineering

The best way to really wrap your head around chaos engineering is to look at how the biggest names in tech use it to solve massive, high-stakes problems. While Netflix is famous for getting the ball rolling, the practice has spread far beyond streaming. It’s now a core part of building reliable systems in industries where any amount of downtime is a complete disaster.

These companies aren’t just randomly pulling plugs. They're running incredibly precise, controlled experiments to make sure their platforms can handle the chaos of the real world—from a sudden flood of users to a critical piece of hardware failing.

Preparing for Peak Demand

Picture a company like Amazon on Black Friday. They know a massive, predictable wave of traffic is coming, and it's going to push their systems to the breaking point. To get ready, their engineers run chaos experiments that mimic that exact scenario.

- Traffic Spikes: They might bombard their servers with artificial traffic to find the weak spots in their auto-scaling configuration.

- Service Latency: They’ll intentionally introduce delays between microservices to see if the checkout process still works smoothly when a background service starts lagging.

- Database Overload: They test what happens when their databases are hit with an impossible number of queries all at once.

By finding these fractures ahead of time, they can reinforce their infrastructure. This ensures the site stays up and running during the most important sales event of the year.

Ensuring Uninterrupted Operations

The ideas behind chaos engineering have taken root in all sorts of industries. By 2020, it was already a standard practice for financial institutions, healthcare providers, and big players like Uber and JP Morgan Chase who needed to guarantee service quality. You can explore more about this industry-wide adoption on steadybit.com.

A major financial firm, for example, might use chaos engineering to make sure its trading platform stays online even if a network failure splits its systems apart. We're talking about preventing millions of dollars in losses—this isn't just a test, it's a core business continuity drill.

Along the same lines, a global shipping company might simulate an entire regional cloud provider going down. This kind of experiment proves their systems can automatically fail over and reroute everything through another data center. That's how they make sure packages keep moving on time, without a single point of failure bringing the whole operation to a halt.

Finding and fixing these weaknesses before they impact customers is the whole point. One way teams can do this safely is by simulating API and service failures. We have a guide that explains what service virtualization is. That technique lets you pretend a dependency is unavailable without actually taking it offline, which makes it a natural companion to chaos engineering. It's a key part of how modern teams build software that just doesn't break.
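To show what "pretending a dependency is unavailable" can look like in code, here is a small Python sketch that swaps a stand-in client for the real one. Every name in it (`InventoryClient`, `UnavailableInventory`, `product_page`) is invented for this example, and the fallback label is just one possible way to degrade.

```python
from typing import Protocol


class InventoryClient(Protocol):
    """The interface the page code depends on, real or simulated."""
    def stock_level(self, sku: str) -> int: ...


class HealthyInventory:
    """Stand-in that answers normally."""
    def stock_level(self, sku: str) -> int:
        return 12


class UnavailableInventory:
    """Stand-in that behaves as if the inventory service were unreachable."""
    def stock_level(self, sku: str) -> int:
        raise TimeoutError("simulated: inventory service did not respond")


def product_page(sku: str, inventory: InventoryClient) -> dict[str, str]:
    """Build the page data; degrade to an 'unknown' label if the dependency fails."""
    try:
        stock = inventory.stock_level(sku)
        availability = "in stock" if stock > 0 else "sold out"
    except (TimeoutError, ConnectionError):
        availability = "availability unknown"
    return {"sku": sku, "availability": availability}


print(product_page("sku-1", HealthyInventory()))
print(product_page("sku-1", UnavailableInventory()))
```

The real inventory service is never touched. The experiment only changes which client the page code receives, which keeps the blast radius at zero.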

Where to Begin: Common Chaos Experiments

Jumping into chaos engineering doesn't mean you have to start by taking down a whole data center. The best approach is to start small, with simple, controlled experiments that give you immediate, valuable feedback on how your system really behaves.

Think of these initial tests as a menu of options. Each one is designed to mimic a specific real-world problem, allowing you to systematically build a deep understanding of your application's weak points. Remember, every experiment should start with a clear question or hypothesis—this is what turns a simple failure test into a true scientific experiment.

Testing Resource Limits

One of the most frequent culprits behind application outages is resource exhaustion. These experiments are all about pushing your system's resources to their breaking point to see what happens. It's better to find out in a controlled test than during a customer-facing incident.

- CPU Exhaustion: What happens when you intentionally max out the CPU on a server or container? A good hypothesis might be: "If CPU usage spikes to 100%, our monitoring should fire an alert within 2 minutes, and our orchestrator will automatically spin up a new instance to handle the load." This is a fantastic way to validate your auto-scaling and alerting configurations.

- Memory Leaks: Here, you can simulate a runaway process that eats up all available RAM. The goal is to see if your system crashes hard or degrades gracefully. You get to observe how your memory management and garbage collection processes hold up under extreme stress.

These tests are fundamental for truly understanding the performance boundaries of your services.
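As a minimal illustration of a CPU exhaustion experiment, the Python sketch below keeps a single core busy for a fixed time. The `burn_cpu` name and the 30-second safety cap are assumptions. A real experiment would usually rely on a dedicated tool and load every core, so treat this as a toy for a development machine only.

```python
import time


def burn_cpu(seconds: float, max_seconds: float = 30.0) -> int:
    """Busy-loop on one core for `seconds`, capped at `max_seconds` as a safety limit."""
    duration = min(seconds, max_seconds)
    deadline = time.monotonic() + duration
    iterations = 0
    while time.monotonic() < deadline:
        iterations += 1  # pointless work whose only job is to keep the core busy
    return iterations


start = time.monotonic()
count = burn_cpu(0.2)
elapsed = time.monotonic() - start
print(f"kept one core busy for {elapsed:.2f}s ({count} loop iterations)")
```

The hard cap matters more than the loop itself. An experiment that cannot run away is a small example of limiting the blast radius in code.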

Simulating Network Failures

In today's world of microservices and distributed architectures, everything is connected by a network. That network is also a massive potential point of failure.

The core question of chaos engineering is often, "What happens when different parts of our system can't talk to each other anymore?" Network failure experiments are designed to give you the answer before it happens for real.

Common experiments include:

- Injecting Latency: This involves artificially slowing down the connection between two services. It's a great way to find hidden timeouts or reveal cascading failures that only pop up when responses are sluggish. If you're new to this, learning how to test network latency is a perfect starting point.

- Packet Loss: In this test, you randomly drop a percentage of network packets traveling between services. This simulates an unstable or choppy network connection and quickly reveals whether your application has the right retry logic and error handling to survive intermittent connectivity.
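Both experiments can be tried in miniature without touching a real network. The Python sketch below wraps any call so that it is delayed and sometimes dropped, then runs it through a simple retry helper. The names `with_faults`, `retry`, and `PacketDropped` are invented here, and the delay and drop rate are arbitrary.

```python
import random
import time
from typing import Callable, TypeVar

T = TypeVar("T")


class PacketDropped(ConnectionError):
    """Raised by the wrapper to stand in for a lost request."""


def with_faults(call: Callable[[], T], *, latency_s: float, drop_rate: float,
                rng: random.Random) -> Callable[[], T]:
    """Wrap `call` so each invocation is delayed and sometimes dropped."""
    def faulty() -> T:
        time.sleep(latency_s)         # injected latency
        if rng.random() < drop_rate:  # injected loss
            raise PacketDropped("simulated drop")
        return call()
    return faulty


def retry(call: Callable[[], T], attempts: int, backoff_s: float) -> T:
    """Retry on ConnectionError with exponential backoff; re-raise after the last attempt."""
    for attempt in range(attempts):
        try:
            return call()
        except ConnectionError:
            if attempt == attempts - 1:
                raise
            time.sleep(backoff_s * 2 ** attempt)
    raise ValueError("attempts must be at least 1")


def get_price() -> str:
    """Hypothetical downstream call."""
    return "19.99"


flaky = with_faults(get_price, latency_s=0.01, drop_rate=0.3, rng=random.Random(42))
print(retry(flaky, attempts=5, backoff_s=0.01))
```

Seeding the random generator makes a run repeatable, which helps when you want to reproduce the exact sequence of drops that exposed a bug.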

Inducing Infrastructure Failures

Finally, we get to the classic chaos engineering scenarios made famous by pioneers like Netflix. These experiments test your system's ability to survive the sudden loss of its underlying hardware or virtual resources.

- Terminating an Instance: The concept is simple: randomly shut down a virtual server or a container. Your hypothesis is straightforward: "If an instance suddenly vanishes, the load balancer should instantly stop sending it traffic, and a replacement instance will be launched with zero user impact."

- DNS Unavailability: What if your application can't reach its DNS provider? Temporarily blocking that access will show you if your services are smart enough to use cached DNS lookups or if they simply grind to a halt.
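For the DNS case, the behavior you are hoping to see is a service that keeps using a cached answer when lookups fail. The Python sketch below shows one way that could work. `CachingResolver` and `fake_lookup` are hypothetical, and the lookup function is injected so the outage can be simulated without real DNS traffic. In a real service the lookup might be something like `socket.gethostbyname`, whose errors are subclasses of `OSError`.

```python
import time
from typing import Callable


class CachingResolver:
    """Resolve hostnames through `lookup`, serving cached answers when lookups fail."""

    def __init__(self, lookup: Callable[[str], str], ttl_s: float,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self._lookup = lookup
        self._ttl_s = ttl_s
        self._clock = clock
        self._cache: dict[str, tuple[str, float]] = {}  # host -> (address, fetched_at)

    def resolve(self, host: str) -> str:
        cached = self._cache.get(host)
        now = self._clock()
        if cached and now - cached[1] < self._ttl_s:
            return cached[0]      # fresh cache hit, no lookup needed
        try:
            address = self._lookup(host)
        except OSError:
            if cached:
                return cached[0]  # DNS is down: serve the stale answer
            raise                 # nothing cached, so the failure surfaces
        self._cache[host] = (address, now)
        return address


state = {"dns_up": True}


def fake_lookup(host: str) -> str:
    """Simulated DNS: answers until the experiment switches it off."""
    if not state["dns_up"]:
        raise OSError("simulated DNS outage")
    return "10.0.0.7"


resolver = CachingResolver(fake_lookup, ttl_s=0.0)  # a TTL of 0 forces a lookup every time
print(resolver.resolve("db.internal"))
state["dns_up"] = False                             # start the experiment
print(resolver.resolve("db.internal"))              # answered from the stale cache
```

Serving stale answers is a trade-off, since an address may have changed during the outage. Whether that risk is acceptable is exactly the kind of question this experiment forces a team to answer.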

Types of Chaos Engineering Experiments

These experiments are just the beginning. They represent a foundational set of tests that can reveal critical weaknesses in almost any distributed system. The table below breaks down some of the most common experiments, what they simulate, and the kinds of problems they help uncover.

| Experiment Type | Simulates | Potential Weakness Uncovered |
|---|---|---|
| CPU Spike | High traffic, runaway process | Poor auto-scaling rules, inadequate monitoring alerts |
| Memory Exhaustion | Memory leak, inefficient code | Lack of graceful degradation, improper resource limits |
| Network Latency | Network congestion, distant service | Aggressive timeouts, cascading service failures |
| Packet Loss | Unreliable Wi-Fi/cellular network | Missing or ineffective retry logic |
| Instance Termination | Hardware failure, spot instance reclaim | Slow failure detection, stateful dependencies |
| DNS Failure | DNS provider outage, misconfiguration | Lack of DNS caching, hardcoded IP addresses |

By methodically working through these scenarios, you move from hoping your system is resilient to knowing it is. Each successful experiment builds confidence and makes your services stronger.

We're All in This Together: Building a Culture of Resilience

Adopting chaos engineering isn't just about plugging in a new tool. It’s a complete shift in how your team thinks about failure. Honestly, the success of your program has far less to do with the specific software you choose and everything to do with the culture you build. The real goal is to get to a place where discovering a weakness is seen as a victory for everyone, not a finger-pointing exercise.

This all starts with a commitment to blameless postmortems. When an experiment—or a real incident—causes a failure, the conversation has to be about why the system behaved that way, never about who made a mistake. Creating that psychological safety is non-negotiable. It gives engineers the freedom to be curious and hunt for problems without worrying about blame, which turns every failure into a shared lesson.

Start Small, Start Together

So, how do you get your team comfortable with intentionally breaking things? You don't just spring it on them. The best way I've seen this done is by starting with Game Days. Think of these as scheduled, collaborative sessions where the whole team gets together to run a few chaos experiments in a controlled, safe environment.

Game Days are brilliant for a few reasons:

- They take the fear away. Game Days demystify the process and show that this isn't about cowboys causing random outages; it's a structured, scientific approach.

- They create shared ownership. Everyone is involved, from planning the experiment to analyzing the fallout. The system's resilience becomes a team responsibility.

- They prove the value, fast. The moment an experiment exposes a real, hidden issue that could have taken the site down, you'll see the lightbulbs go on around the room.

A successful Game Day isn't one where everything goes perfectly. The best ones are when something actually breaks, the team figures out why, and everyone collaborates on a fix. That’s how you get stronger.

Getting Leadership on Your Side

For chaos engineering to stick, it needs to be more than just a pet project for the engineering team. You need buy-in from the top. The key is to frame the conversation around business goals, not technical jargon.

Don't talk about "running experiments." Talk about it as a direct investment in business continuity and protecting the customer experience. Explain that it is drastically cheaper and better for your brand to find these problems yourself on a Tuesday afternoon than to have your customers find them for you during a major sales event.

When you position it as a strategy for managing risk and safeguarding revenue, you connect the dots for leadership. That’s how you elevate reliability from a purely technical concern to a core value for the entire organization. That’s how you build a real culture of resilience.

Got Questions? Let's Clear Things Up

Even after getting the basics down, a few common questions always pop up when teams start digging into chaos engineering. Let's tackle them head-on.

Is Chaos Engineering Just a Fancy Name for Performance Testing?

Not at all. They’re two completely different disciplines with different goals.

Performance testing is all about measuring how your system handles a heavy but expected workload. You're looking at speed, latency, and how gracefully it handles a surge in traffic.

Chaos engineering, on the other hand, is about what happens when things go wrong unexpectedly. It’s not about speed; it’s about survival.

Here’s a simple way to think about it:

- Performance Testing: "How fast can our race car go on a smooth, predictable track?"

- Chaos Engineering: "What happens if a tire suddenly blows out while the car is going 150 mph?"

One tests for efficiency, the other for resilience against disaster.

Should We Run These Experiments in Staging or Production?

This is a big one. While starting in a staging environment is a sensible first step, the real gold is found in production.

Why? Because your staging environment, no matter how good it is, will never truly mirror the beautiful, chaotic mess of your live production system. It doesn't have the same traffic patterns, the same complex interactions, or the same unpredictable user behavior.

The ultimate goal of chaos engineering is to build unshakable confidence in the system that your customers actually use. You can only get there by carefully testing in production, starting with a very small, controlled "blast radius" to keep things safe.

What Tools Can We Use to Get Started?

The toolkit for chaos engineering has come a long way since Netflix first unleashed Chaos Monkey. Today, you've got some fantastic options to choose from.

Here are a few popular platforms to check out:

- Gremlin: A powerful, enterprise-focused platform that lets you run a huge variety of resilience experiments.

- Chaos Monkey: The classic tool that started it all. It’s still a great, straightforward way to test how your system handles random instance failures.

- LitmusChaos: A great open-source, cloud-native choice that fits perfectly into Kubernetes workflows.

These tools give you the control you need to safely inject failures, observe what happens, and learn from the results. They're the foundation for building a truly resilient engineering culture.
