Mastering UI Testing Automation for Modern Apps

Automated UI testing is all about using software to automatically run tests that check an application's user interface. Instead of a human manually clicking through every screen, scripts do the heavy lifting, making sure all the visual pieces—buttons, forms, menus, you name it—look right and work as expected. This is absolutely critical for teams that want to move fast, as it flags bugs much earlier and just makes for a better quality product overall.

Why UI Testing Automation Is a Must-Have Today

Let's face it, manual UI testing just can't keep up anymore. We're building complex applications and pushing out releases faster than ever, which means automation has gone from a luxury to a core part of the strategy. The best development teams I know made this switch a long time ago, not just to be more efficient, but to stay competitive. A good automation strategy is your first line of defense, protecting your brand's reputation by catching show-stopping bugs before they ever see the light of day.

The impact on the ground is huge. It saves you from scrambling to fix expensive problems after a launch, speeds up the entire development cycle, and ultimately keeps users happy by delivering a smooth, professional experience. I like to think of it as an insurance policy for both your app's quality and your team's velocity.

The Business Case for Automation

This move toward automation isn't just an engineering fad; it's a massive shift in the market. UI test automation is now a fundamental part of modern software development, with the global market for automation testing expected to hit $68 billion by 2025. This explosive growth is driven by user interfaces that are getting more and more complex and the non-negotiable need to validate them across a dizzying number of devices. It's telling that large companies make up 69% of the market revenue—they've clearly recognized the return on investment.

This image shows a pretty standard automated testing workflow, giving you a visual of how test scripts interact with an application to verify its behavior.

You can see the main parts at play: the test driver, the application itself, and the reporting system, all working together to lock in quality.

It’s More Than Just Finding Bugs

To really get why UI testing automation is so important, you have to look at the broader value of software testing. It's not just about sniffing out defects; it’s about building unshakable confidence in your product.

A strong automation suite doesn't just tell you what's broken; it confirms what’s working perfectly. That continuous validation gives teams the confidence to ship new features faster and innovate without constantly looking over their shoulder for regressions.

This confidence is a direct line to business agility. Instead of sinking entire sprints into manual regression checks, your team can focus on what they do best: building great new features, all while knowing a safety net is firmly in place. We actually dive deeper into the benefits of automated testing in another guide. At its core, this is about moving from a reactive "bug hunt" to a proactive process of assuring quality.

Choosing Your Automation Framework Wisely

https://www.youtube.com/embed/cuHDQhDhvPE

Picking the right framework for your automated UI testing is probably the single most important decision you'll make in this entire process. It’s a choice that ripples through everything else—from how you write your tests to how painful they are to maintain down the line. It's less about finding the tool with the longest feature list and more about finding the one that’s a practical, comfortable fit for your team and your project.

What works for one team won't necessarily work for another. If you're building a complex, React-heavy single-page application, your needs are going to be vastly different from a team that requires bulletproof cross-browser testing for a more traditional website. Modern frameworks are built with these different scenarios in mind.

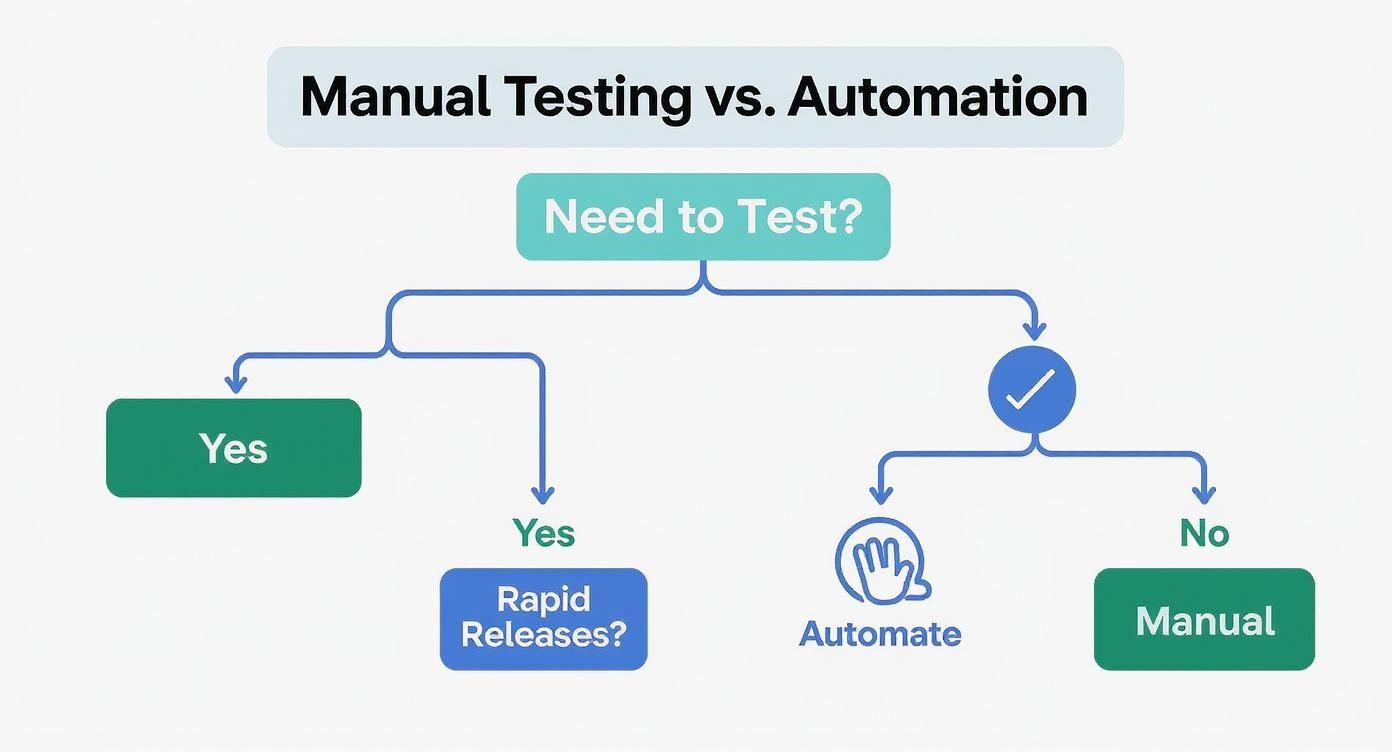

This simple decision tree really drives home how factors like release velocity should push you toward automation.

As you can see, the faster and more frequently you need to ship code, the less sustainable manual testing becomes. Automation isn't just a nice-to-have; it's a necessity for modern development cycles.

Comparing the Top Contenders

The world of UI testing tools is always evolving, but three names consistently pop up in any serious discussion: Selenium, Cypress, and Playwright. Each one comes with its own philosophy and unique set of strengths.

To help clarify the differences, let's put them side-by-side. This table breaks down some of the key features you'll want to consider when making your choice.

Modern UI Automation Frameworks Comparison

| Feature | Playwright | Cypress | Selenium |
|---|---|---|---|
| Primary Architecture | Runs out-of-process and drives each browser over a single connection, using the Chrome DevTools Protocol for Chromium and browser-specific protocols for Firefox and WebKit. | Runs in the same run loop as your application, providing direct access to the DOM. | Controls the browser remotely using the WebDriver protocol. |
| Cross-Browser Support | Excellent. Chromium (Chrome, Edge), Firefox, and WebKit (Safari's engine). | Good. Chromium-based browsers and Firefox; WebKit support is experimental. | The best. Supports nearly every browser imaginable, including older versions. |
| Setup & Installation | Very simple. A single npm command gets you up and running. | Very simple. Known for its quick, all-in-one installation. | Can be complex. Historically required a separate WebDriver executable for each browser, though recent releases ship Selenium Manager to fetch drivers automatically. |
| Debugging Experience | Powerful. Offers a Trace Viewer that provides a detailed, step-by-step visual of the test run. | Top-tier. Famous for its interactive test runner with "time-travel" debugging. | Relies on standard language-specific debuggers, which can be less intuitive. |
| Key Differentiator | Coverage of all three major browser engines (especially WebKit) and robust auto-waiting mechanisms. | Exceptional developer experience and an interactive, real-time test runner. | Unparalleled language and browser flexibility; the industry standard for many years. |
| Limitations | Younger ecosystem compared to Selenium. | Cannot control multiple browser tabs or windows in a single test. | Steeper learning curve and less "out-of-the-box" functionality (e.g., no test runner). |

Ultimately, while Selenium remains a versatile workhorse, the battle for modern web apps often comes down to Playwright vs. Cypress. Both offer a far superior developer experience, but Playwright's broader browser support and more flexible architecture give it a slight edge for teams who need to test beyond the confines of a single tab.

Key Factors for Your Decision

A feature comparison table is a great start, but the day-to-day reality of using a tool is what really matters. Here are a few practical things I always tell teams to consider:

- Setup Friction: How fast can you go from zero to a running test? With Playwright and Cypress, you're often just one command away. That low barrier to entry is huge for team adoption.

- Debugging Experience: Tests fail. It's a fact of life. The real question is, how painful is it to figure out why? Cypress has long been the gold standard here with its time-traveling debugger, but Playwright’s trace viewer is incredibly powerful, giving you a full-blown interactive report of every single step, network request, and console log.

- Community and Support: When you get stuck, where do you turn? A big, active community means more blog posts, Stack Overflow answers, and third-party plugins. Selenium has the largest community by virtue of its age, but Playwright's is growing at an incredible pace and has Microsoft's backing.

- Built-in Capabilities: Does the framework solve common headaches for you? Modern tools have things like automatic waits baked in, which is a total game-changer. It means the framework waits for an element to be ready before interacting with it, saving you from writing brittle and unreliable `sleep()` commands.

The best framework is the one that fits your team's existing skills and your app's architecture. If your team lives and breathes JavaScript and is working on a modern SPA, Playwright or Cypress will feel like a natural extension of their workflow. But if you need to validate functionality across a vast matrix of browsers in multiple programming languages, Selenium’s proven versatility is still hard to beat.

Building a Test Environment That Can Actually Scale

A great UI testing automation suite is worthless if it runs on a shaky foundation. Get your environment right from the start, and you'll save yourself (and your team) a world of pain down the road. It’s all about creating a consistent, reliable setup that everyone can easily use. We’ll be using Playwright for this, a modern tool that makes getting a professional-grade environment up and running much less of a chore.

First things first, you'll need Node.js installed, since we'll be using Playwright's Node.js test runner. Once that's ready, you can kick off a new project and add Playwright from your terminal (for example, with `npm init playwright@latest`). This process handily scaffolds a basic project for you, giving you a starting point with config files and even some example tests.

While this initial setup gets you moving, you'll need to be more intentional with your organization to build something that can truly grow with your application.

Structuring Your Project for the Long Haul

Tossing all your test files into one big folder might seem fine when you have a handful of tests, but it becomes a nightmare to navigate as your project grows. This is where leaning on established design patterns becomes a lifesaver. The Page Object Model (POM) is basically the industry standard for a reason: it keeps your code clean, reusable, and much easier to read.

The idea behind POM is simple. You create a separate class for each page or major component of your app. That class holds all the locators and methods for interacting with that specific piece of the UI. Your test scripts, in turn, use these high-level methods instead of dealing with raw selectors.

This approach pays off in a few huge ways:

- Fix it Once: If a button's selector changes, you update it in one place—the page object file. No more search-and-replace missions across dozens of test scripts.

- Self-Documenting Tests: Your tests start reading like a story. A line like `loginPage.submitCredentials('user', 'pass')` is infinitely clearer than a messy block of `click()` and `fill()` commands.

- Easier Onboarding: New team members can get up to speed faster because the test code is organized just like the application they're looking at.

Think of it like this: your test files should describe what the user is doing, while the page objects handle the nitty-gritty of how to do it.
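As a rough sketch of the pattern, the page object below wraps a login form. The `PageLike` interface is a stand-in that mirrors two methods on Playwright's `Page` (`fill` and `click`), so the example can run without a browser. The `LoginPage` class, its `data-testid` values, and the `RecordingPage` fake are all hypothetical names chosen for illustration.

```typescript
// Minimal subset of a browser page that this sketch relies on.
// Playwright's Page exposes fill(selector, value) and click(selector),
// so the assumption is that a real page could be passed in here.
interface PageLike {
  fill(selector: string, value: string): Promise<void>;
  click(selector: string): Promise<void>;
}

// Page object: the only place that knows the login form's selectors.
class LoginPage {
  // Hypothetical data-testid values; adjust them to your own markup.
  private readonly usernameInput = '[data-testid="username"]';
  private readonly passwordInput = '[data-testid="password"]';
  private readonly submitButton = '[data-testid="login-submit"]';
  private readonly page: PageLike;

  constructor(page: PageLike) {
    this.page = page;
  }

  // High-level action that tests call instead of raw fill/click commands.
  async submitCredentials(username: string, password: string): Promise<void> {
    await this.page.fill(this.usernameInput, username);
    await this.page.fill(this.passwordInput, password);
    await this.page.click(this.submitButton);
  }
}

// Recording fake so the sketch can be exercised without a browser.
class RecordingPage implements PageLike {
  readonly calls: string[] = [];

  async fill(selector: string, value: string): Promise<void> {
    this.calls.push(`fill ${selector} ${value}`);
  }

  async click(selector: string): Promise<void> {
    this.calls.push(`click ${selector}`);
  }
}
```

A test then reads as `await new LoginPage(page).submitCredentials('user', 'pass')`, and a changed selector only touches the three constants at the top of the class.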

Getting Smart About Test Data and Configs

Hardcoding things like usernames, passwords, or API endpoints directly in your tests is one of the biggest mistakes you can make. When you need to run tests against a different environment or with a new user, you’re stuck manually changing your code. A truly scalable setup separates the test logic from the test data.

A good practice is to store your test data in external files, like JSON or .env files. This lets you easily swap out credentials for different user roles or product details for various scenarios without touching the tests themselves.

I can't stress this enough: use environment variables for sensitive data and environment-specific URLs. This keeps secrets out of your Git repository and makes your entire suite portable. You can run the same tests locally, in staging, or on a CI server just by changing a variable.

For example, you can tell your Playwright config to pull the base URL from an environment variable. Now, the exact same test suite can be pointed at your local machine or a staging server with zero code changes. As you add more tests, you'll also want to explore how to speed up your feedback loop by running your UI tests in parallel, which can slash your execution time.
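One way to wire this up is a small helper that resolves the base URL from the environment. This is a sketch under assumptions: the variable name `BASE_URL`, the local fallback address, and the `resolveBaseUrl` helper are all illustrative, not part of Playwright.

```typescript
// Environment variables as a plain map, which keeps the function easy to test.
type Env = Record<string, string | undefined>;

// Pick the base URL from BASE_URL (assumed variable name), falling back to a
// local dev server when it is unset or blank.
function resolveBaseUrl(env: Env, fallback: string = 'http://localhost:3000'): string {
  const raw = (env['BASE_URL'] || '').trim();
  if (raw === '') {
    return fallback;
  }
  // Fail fast on obvious typos instead of letting every test time out.
  if (!/^https?:\/\//.test(raw)) {
    throw new Error(`BASE_URL must start with http:// or https://, got "${raw}"`);
  }
  return raw;
}

// In playwright.config.ts this could be used roughly like so:
//   import { defineConfig } from '@playwright/test';
//   export default defineConfig({
//     use: { baseURL: resolveBaseUrl(process.env) },
//   });
```

With something like this in place, `BASE_URL=https://staging.example.com npx playwright test` would point the same suite at staging, and relative calls such as `page.goto('/cart')` resolve against whichever URL was chosen.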

And finally, none of this matters if it’s not in version control. Using Git is non-negotiable. It gives you a complete history, makes team collaboration possible, and acts as the single source of truth for your entire automation codebase. This is the bedrock of a professional testing setup.

Writing Resilient and Maintainable UI Tests

Now that your environment is ready to go, it’s time for the fun part: writing automated UI tests that actually deliver lasting value. We're not talking about simple "click a button" scripts here. The real goal is to map out meaningful, end-to-end user journeys that mirror how people actually use your application—think of a complete checkout flow, from adding a product to the cart all the way to seeing the order confirmation page.

Ultimately, you want to build a test suite your team can trust, not one that cries wolf with false alarms every other day.

The biggest enemy of a reliable test suite is brittleness. Tests that shatter every time a developer refactors a component or tweaks a CSS class are worse than having no tests at all. They just create noise, erode confidence, and get ignored. The secret to resilient UI testing automation is all about how you identify elements on the page.

Embracing Stable Selectors

Your first rule of thumb should be to avoid relying on selectors that are likely to change. This means you need to stay far away from things like auto-generated class names (.css-1a2b3c) or overly specific XPath that depends on the exact HTML structure (/html/body/div[1]/main/div[2]/div[3]/button). A single front-end tweak, and your test is toast.

Instead, make the data-testid attribute your best friend. This is where you collaborate with your development team to add unique, test-specific identifiers to key interactive elements. A selector like [data-testid="add-to-cart-button"] is completely disconnected from styling or the DOM structure, making your tests incredibly durable.

Take a look at this example from Playwright's documentation. It shows how their locator API prioritizes user-facing attributes, which is exactly the principle we're talking about.

This screenshot perfectly highlights the best practice: target elements by their role, text, or an explicit test ID. This not only makes your scripts more readable but also far less likely to break when the UI gets a facelift.

Your tests should verify the user experience, not the implementation details. By using `data-testid` or other user-facing locators, you're testing what the user sees and interacts with, which is exactly what UI testing is all about. This mindset shift is fundamental to maintainable automation.
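To make the contrast concrete, here is a minimal sketch of a helper that builds a `data-testid` selector. The `byTestId` name is hypothetical; the brittle selectors in the comments are the ones quoted earlier in this section.

```typescript
// Build a CSS attribute selector for a data-testid value.
function byTestId(id: string): string {
  // Escape backslashes and double quotes so the value stays a valid CSS string.
  const escaped = id.replace(/\\/g, '\\\\').replace(/"/g, '\\"');
  return `[data-testid="${escaped}"]`;
}

// Brittle: tied to generated class names or the exact DOM depth.
//   '.css-1a2b3c'
//   '/html/body/div[1]/main/div[2]/div[3]/button'

// Durable: unaffected by restyling or layout changes.
const addToCartSelector = byTestId('add-to-cart-button');
```

In Playwright itself you would usually skip the hand-built selector and call `page.getByTestId('add-to-cart-button')`, which looks up the `data-testid` attribute by default.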

Scripting a Real User Flow

Let’s walk through what a simplified checkout flow might look like in a test. A well-written test script should read like a clear set of instructions, describing the user's actions and the expected outcomes at each stage. This makes it self-documenting and easy for anyone on the team to pick up.

A typical flow could be broken down like this:

- Navigate to a product detail page.

- Assert that the product title and price are visible.

- Click the "Add to Cart" button, targeting it with its `data-testid`.

- Verify that a success notification appears on the screen.

- Navigate to the cart page.

- Assert that the correct item and quantity are now in the cart.

Notice a pattern? Each step involves an action followed by an assertion. An assertion is just a check that confirms the application is in the state you expect it to be. For instance, after clicking "Add to Cart," you absolutely should assert that the cart's item count has updated. Modern frameworks like Playwright have powerful, built-in assertion libraries that make these checks incredibly easy to write.
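The steps above can be sketched as a single flow function. To keep the example runnable without a browser, it is written against a `PageLike` interface that mirrors three methods on Playwright's `Page` (`goto`, `click`, `textContent`) and is exercised with an in-memory `FakeShop`. The routes, product data, and test ids are all made up for illustration.

```typescript
// Subset of Playwright's Page used by this sketch: goto, click, textContent.
interface PageLike {
  goto(url: string): Promise<unknown>;
  click(selector: string): Promise<void>;
  textContent(selector: string): Promise<string | null>;
}

// Build a data-testid selector (the ids used below are hypothetical).
const testId = (id: string): string => `[data-testid="${id}"]`;

// Tiny assertion helper that fails with a readable message.
function assertText(actual: string | null, expected: string, label: string): void {
  if (actual !== expected) {
    throw new Error(`${label}: expected "${expected}", got "${String(actual)}"`);
  }
}

// The user journey: every action is followed by an assertion on the result.
async function checkoutFlow(page: PageLike): Promise<void> {
  await page.goto('/products/blue-mug');
  assertText(await page.textContent(testId('product-title')), 'Blue Mug', 'product title');
  assertText(await page.textContent(testId('product-price')), '$12.00', 'product price');

  await page.click(testId('add-to-cart-button'));
  assertText(await page.textContent(testId('notification')), 'Added to cart', 'notification');

  await page.goto('/cart');
  assertText(await page.textContent(testId('cart-item-name')), 'Blue Mug', 'cart item');
  assertText(await page.textContent(testId('cart-item-quantity')), '1', 'cart quantity');
}

// In-memory stand-in for the shop so the flow can run without a browser.
class FakeShop implements PageLike {
  private url = '';
  private quantity = 0;
  private notification: string | null = null;

  async goto(url: string): Promise<void> {
    this.url = url;
    this.notification = null; // notifications do not survive navigation
  }

  async click(selector: string): Promise<void> {
    if (this.url === '/products/blue-mug' && selector === testId('add-to-cart-button')) {
      this.quantity += 1;
      this.notification = 'Added to cart';
    }
  }

  async textContent(selector: string): Promise<string | null> {
    if (this.url === '/products/blue-mug') {
      if (selector === testId('product-title')) return 'Blue Mug';
      if (selector === testId('product-price')) return '$12.00';
      if (selector === testId('notification')) return this.notification;
    }
    if (this.url === '/cart' && this.quantity > 0) {
      if (selector === testId('cart-item-name')) return 'Blue Mug';
      if (selector === testId('cart-item-quantity')) return String(this.quantity);
    }
    return null;
  }
}
```

In a real Playwright suite the same journey would normally live inside a `test(...)` block and use `await expect(locator).toHaveText(...)`, which retries until the text matches or a timeout expires, rather than reading the text once as this sketch does.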

Writing Clear and Descriptive Assertions

Vague assertions are a common pitfall that can really weaken your test suite. A test that only checks if an element exists is helpful, but it's not very strong. A great test verifies specific content or attributes.

Let's compare two different approaches:

- Weak Assertion: `await expect(cartIcon).toBeVisible();`

- Strong Assertion: `await expect(cartIcon).toHaveText('1');`

The second assertion is worlds more valuable. It doesn’t just confirm the cart icon is on the page; it confirms it's displaying the correct number of items. This level of precision is what transforms a basic script into a powerful tool for catching regressions.

For scenarios involving lots of different inputs, you might want to look into creating a solid data-driven test strategy to cover more ground with less code. This helps ensure your assertions are validated against a wide variety of user data, which is fantastic for catching those tricky edge cases you might otherwise miss. Building this habit of writing specific, meaningful assertions is what will ultimately make your UI testing automation efforts successful and sustainable.
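A data-driven approach can be sketched as a table of cases run through one loop. The `cartBadgeText` function here is a hypothetical stand-in for whatever behavior you are checking; in a UI suite, each row would instead drive the browser and assert on the rendered badge.

```typescript
// Hypothetical behavior under test: the text shown on the cart badge.
function cartBadgeText(itemCount: number): string {
  if (itemCount <= 0) return '';
  return itemCount > 99 ? '99+' : String(itemCount);
}

// One row per scenario; adding coverage means adding a row, not a new test.
const badgeCases: Array<{ name: string; itemCount: number; expected: string }> = [
  { name: 'empty cart hides the badge', itemCount: 0, expected: '' },
  { name: 'single item', itemCount: 1, expected: '1' },
  { name: 'upper display limit', itemCount: 99, expected: '99' },
  { name: 'overflow is capped', itemCount: 150, expected: '99+' },
];

// Run every case and collect failures instead of stopping at the first one.
function runBadgeCases(): string[] {
  const failures: string[] = [];
  for (const c of badgeCases) {
    const actual = cartBadgeText(c.itemCount);
    if (actual !== c.expected) {
      failures.push(`${c.name}: expected "${c.expected}", got "${actual}"`);
    }
  }
  return failures;
}
```

With Playwright, the same table can be looped over to register one `test(c.name, ...)` per row, so each scenario is reported separately.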

Integrating AI and Advanced Testing Techniques

Once you've got the basics down, it’s time to look at what separates a good test suite from a truly great one. Moving past simple scripts means tackling the common culprits behind test failures and slowdowns head-on. These advanced strategies are your ticket to building a UI testing automation suite that's not just reliable but also fast and efficient.

One of the biggest headaches in UI testing is handling asynchronous operations. You know the drill: your app is fetching data or doing something in the background, and your test script barrels ahead. It tries to click an element that hasn't loaded yet, and boom—another flaky, unreliable test failure.

Mastering Asynchronous Operations

The answer here is mastering explicit waits. Forget telling your test to just pause for a few seconds. That’s a major anti-pattern and a recipe for either slow or flaky tests. Instead, you tell the script to wait for a specific condition to be true. For example, you can command it to wait until a "Success!" message appears or a loading spinner vanishes before it proceeds.

This single change makes your tests incredibly resilient. They start reacting to the application's actual state instead of just guessing, which dramatically cuts down on failures caused by slow network connections or backend delays.
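The idea behind an explicit wait can be shown with a small polling helper. This is a generic sketch rather than any framework's implementation; `waitFor` and its option names are made up for the example.

```typescript
// Poll a condition until it holds, or fail once the timeout has elapsed.
async function waitFor(
  condition: () => boolean | Promise<boolean>,
  { timeoutMs = 5000, intervalMs = 50 } = {},
): Promise<void> {
  const deadline = Date.now() + timeoutMs;
  while (true) {
    // Proceed as soon as the application reaches the expected state.
    if (await condition()) return;
    if (Date.now() >= deadline) {
      throw new Error(`Condition not met within ${timeoutMs} ms`);
    }
    // Short pause between checks; total wait adapts to how fast the app is.
    await new Promise<void>((resolve) => setTimeout(resolve, intervalMs));
  }
}

// Usage idea (spinnerIsGone is a hypothetical check you would supply):
//   await waitFor(() => spinnerIsGone(), { timeoutMs: 10000 });
```

Unlike a fixed sleep, this returns as soon as the condition is true and fails loudly if it never is. Playwright builds the same behavior into calls such as `await expect(locator).toBeVisible()` and `await locator.waitFor({ state: 'hidden' })`, so you rarely need to write the loop yourself.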

Another game-changer for speed and stability is API mocking. Your UI tests should never have to depend on a live backend. That dependency introduces a massive external point of failure. If the API is slow or down for maintenance, your UI tests fail, even if the front end is working perfectly.

API mocking lets you completely isolate your frontend for testing. By faking the backend responses, you gain total control over the test environment. This makes it a breeze to test tricky edge cases like error states or what happens when no data is returned.

This isolation has two huge benefits. First, your tests run way faster because they aren't waiting on real network calls. Second, it removes the backend as a source of nondeterminism, which is a non-negotiable for any serious CI/CD pipeline. To get a handle on these advanced methods, you might explore an AI-powered UI testing platform like Testim to see how it can simplify your workflow.
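To make the mocking idea concrete, here is a sketch of a helper that registers canned responses for a cart endpoint. The `RouteLike` and `RoutablePage` interfaces mirror the parts of Playwright's `page.route()` and `route.fulfill()` that the helper uses, so the example runs without a browser. The `**/api/cart` pattern, the scenario names, and the response payloads are assumptions for illustration.

```typescript
// Shape of a mocked response (subset of what route.fulfill() accepts).
type MockResponse = { status?: number; contentType?: string; body?: string };

// Subset of Playwright's routing API used here.
interface RouteLike {
  fulfill(response: MockResponse): Promise<void>;
}
interface RoutablePage {
  route(url: string, handler: (route: RouteLike) => Promise<void>): Promise<void>;
}

// Register a canned answer for the cart endpoint (hypothetical URL pattern).
async function mockCartApi(
  page: RoutablePage,
  scenario: 'ok' | 'empty' | 'error',
): Promise<void> {
  await page.route('**/api/cart', async (route) => {
    if (scenario === 'error') {
      // Error state: lets the test check how the UI reports a failed request.
      await route.fulfill({
        status: 500,
        contentType: 'application/json',
        body: JSON.stringify({ message: 'Internal error' }),
      });
      return;
    }
    // Happy path or the "no data returned" edge case.
    const items = scenario === 'ok' ? [{ name: 'Blue Mug', quantity: 1 }] : [];
    await route.fulfill({
      status: 200,
      contentType: 'application/json',
      body: JSON.stringify({ items }),
    });
  });
}

// In-memory fake that stores handlers and replays them on request.
class FakeRoutablePage implements RoutablePage {
  private readonly handlers = new Map<string, (route: RouteLike) => Promise<void>>();

  async route(url: string, handler: (route: RouteLike) => Promise<void>): Promise<void> {
    this.handlers.set(url, handler);
  }

  // Simulate the app calling the endpoint and return what the mock answered.
  async request(url: string): Promise<MockResponse> {
    const handler = this.handlers.get(url);
    if (!handler) throw new Error(`No mock registered for ${url}`);
    let captured: MockResponse = {};
    await handler({ fulfill: async (response) => { captured = response; } });
    return captured;
  }
}
```

In a Playwright test you would call something like `await mockCartApi(page, 'error')` before navigating, then assert that the UI shows its error state. No real backend is involved, so the outcome does not depend on network conditions.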

The Rise of AI in Test Automation

Beyond these established best practices, artificial intelligence is really starting to change the game, especially around test creation and maintenance. The use of AI and machine learning in test automation has shot up, jumping from 7% in 2023 to 16% in 2025. This isn't just hype; it's driven by AI’s real-world ability to slash manual effort and make test suites more stable.

So, what does AI actually bring to the table?

- Self-Healing Tests: This is a big one. AI-powered tools can automatically figure out when an element's selector has changed and find a new one on the fly. Think of all the time saved not having to fix tests broken by minor UI tweaks.

- Intelligent Visual Regression: Forget simple pixel-by-pixel comparisons that flag every tiny rendering difference. AI can understand the page layout and spot meaningful visual bugs while ignoring the noise.

- Automated Test Generation: Some of the newer tools can even analyze real user sessions to suggest—or even write—new tests covering the most common user journeys. This helps you focus your efforts on what your users are actually doing.

By mixing solid strategies like explicit waits and API mocking with the smarts of AI, you can really level up your testing. You'll spend less time on maintenance and build an automation suite that you can truly depend on.

Got Questions About UI Testing Automation? We've Got Answers

Even with the best plan in the world, you're going to hit some snags when you start automating UI tests. It just comes with the territory. Let's tackle some of the most common questions and sticking points I see teams run into.

You'll find that many of the practical challenges come from the very nature of web applications themselves—the HTML is always changing. If your tests aren't built to handle that, you're in for a world of hurt.

How Do I Handle Dynamic IDs and Changing Selectors?

This is, without a doubt, the most common headache. The secret is to stop relying on selectors that are designed to be unstable, like those ugly, auto-generated IDs or dynamic class names.

The absolute best thing you can do is work directly with your developers. Ask them to add stable, dedicated test attributes to key elements, like data-testid. This gives your tests a permanent, reliable hook to latch onto.

When that’s not on the table, modern testing frameworks give you smarter ways to find elements:

- Locate by Role: Find an element by its accessibility role, like a `button` or `heading`.

- Locate by Text: Target an element by the actual text a user sees on the screen.

Using these methods ties your tests to what the user experiences, not the constantly shifting code underneath. This makes them far less likely to break every time a developer refactors a component.

What’s the Difference Between UI Testing and End-to-End Testing?

It’s really easy to get these two mixed up, but they have very different jobs. UI testing is all about the visual and interactive parts of the interface. It answers questions like: Does this button look right? Is this form field disabled when it's supposed to be?

End-to-end (E2E) testing, on the other hand, has a much wider view. It validates an entire user journey from start to finish. That includes the UI, but it also dives deep into the backend services, APIs, and databases to make sure everything works together.

A UI test might check that a button's color changes when you hover over it. An E2E test would click that same button and then verify that a new record was actually created in the database. It confirms the whole system is working in concert.

Should I Automate Every Single Manual Test Case?

No. Please, don't do this. Trying to automate 100% of your manual tests is a classic mistake. It creates a massive, slow, and brittle test suite that becomes a nightmare to maintain, and the return on investment plummets.

You have to be strategic. Focus your automation efforts on the high-value scenarios that are absolutely critical to the business.

Good candidates for automation always include:

- Critical User Paths: Things like login, user registration, and the checkout process.

- Regression Suites: The core tests you need to run constantly to ensure new code doesn't break existing features.

- Repetitive, Data-Driven Tests: Workflows you need to check over and over with different sets of data.

Save things like exploratory testing, one-off bug checks, and nuanced usability testing for your human testers. Their intuition and creativity are far more valuable there than any script you could ever write.
