A Developer's Guide to Managing Test Cases for APIs

If you want to get a real handle on test case management for modern, API-driven apps, you have to ditch the spreadsheets. Seriously. The only way to keep up is to treat your tests like code. This means embracing Git for version control, using API mocks for parallel development, and plugging everything into your CI/CD pipeline for automation. It’s a workflow that gets you faster feedback and, ultimately, a better product.

Why Your API Test Case Strategy Needs a Rethink

Are you still tracking test cases in an Excel sheet or a clunky, outdated tool? If so, you know the pain. It's a recipe for brittle tests, agonizingly slow feedback loops, and bottlenecks that leave your team waiting on backend dependencies. In today's world, where speed and reliability are everything, that old approach is a major liability.

The fundamental issue is the massive gap it creates between testing and development. When your tests live in a silo, separate from the actual code, they fall out of sync almost immediately. They become stale, misunderstood, or worse, completely ignored. This isn't just inefficient; it injects a ton of unnecessary risk into every single release.

The Shift to a Modern Workflow

The solution is to start treating your tests as a first-class citizen of your codebase. When you combine the power of Git, the flexibility of API mocks, and the speed of CI/CD, you build a testing process that’s both resilient and incredibly efficient. This integrated system directly tackles the problems that plague traditional methods.

What does this actually get you?

- Real Version Control: Storing tests in Git means every single change is tracked. You can branch for new features, review test logic right in a pull request, and always have a clear, auditable history. No more "which version of the spreadsheet is the right one?"

- Decoupled Development: API mocks are a game-changer. Tools like dotMock let frontend and QA teams build and test against a simulated API, completely untethered from the backend team's schedule. Those dependency delays? Gone.

- Automated Quality Gates: This is where it all comes together. By integrating your tests into a CI/CD pipeline, they run automatically on every commit. You catch bugs the moment they’re introduced and stop regressions in their tracks.

The industry is already moving this way, and fast. The growth in the software testing market is explosive, and it's almost entirely driven by the push toward automation.

The numbers don't lie. The global software testing market shot past $45 billion in 2023 and is on track to hit $109.5 billion by 2027. The real story here is automation, which is projected to become a $68 billion market by 2025 on its own. This isn't just a trend; it's a clear signal that modernizing your testing strategy is urgent.

To see just how different these two worlds are, let’s compare them side-by-side.

Modern vs. Traditional Test Case Management

| Aspect | Traditional Approach (e.g., Spreadsheets) | Modern Approach (e.g., Git + Mocks) |
|---|---|---|
| Version Control | Manual, error-prone. "Final_v2_final.xlsx" | Fully versioned in Git. Clear history and branching. |
| Collaboration | Siloed. Difficult to track changes and ownership. | Collaborative. Test changes reviewed in pull requests. |
| Development Speed | Creates bottlenecks. Teams wait for dependencies. | Enables parallel work. Mocks decouple teams. |
| Automation | Difficult or impossible to integrate into CI/CD. | Natively integrates with CI/CD for instant feedback. |
| Maintainability | Brittle and high-maintenance. Tests break easily. | Resilient and easy to update alongside source code. |
| Discoverability | Buried in shared drives or legacy tools. | Lives with the code. Easy for any developer to find. |

The takeaway is clear: moving your tests into your code repository isn't just a "nice to have." It's a fundamental shift that directly impacts your team's velocity, collaboration, and the overall quality of your software.

Getting the workflow right is half the battle. The other half is ensuring your team is structured for success. A solid understanding of QA team roles and responsibilities is crucial for making a high-quality, automated testing process stick.

Building Your Test Case Repository in Git

Let's get practical. The first real step toward taming your test case chaos is getting them out of scattered documents and into a version-controlled system like Git. When you start treating your API tests just like you treat your application code, you gain all the benefits of collaboration, versioning, and history that spreadsheets just can't touch. The end goal here is to create a single source of truth that lives and breathes right alongside your product's source code.

This whole process kicks off by creating a dedicated directory inside your main project repository. Most teams call it /tests or /qa. Keeping tests this close to the code is a game-changer. Why? Because when a developer cuts a branch for a new feature, they can write or update the corresponding tests on that same branch. This tight coupling means test logic gets reviewed in the same pull request as the feature code, which helps you squash bugs before they ever get merged.

Establishing a Clear Structure

Without a logical folder structure, your test repository will quickly become a messy, unsearchable junk drawer. I've found the most effective strategy is to simply mirror your API's resource structure. If you have endpoints for /users and /orders, your test directory should look exactly like that.

It's intuitive and predictable.

- /tests/users/

- /tests/orders/

- /tests/authentication/

Inside each of these folders, the filenames themselves should tell a story. A good naming convention means anyone on the team can find what they’re looking for without having to hunt around. For example, a test for successfully creating a user could be create_user_success.yml, while a check for a failed login might be login_invalid_credentials.json.

The core idea is simple: a developer should be able to look at the file path and immediately grasp the test's purpose without even opening it. This kind of organizational clarity has a massive impact on a team's efficiency.
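
One way to keep that convention honest is a small check that reads a test's purpose straight from its path. The sketch below assumes a `tests/<resource>/<scenario>.<ext>` layout like the examples above; the `describe_test` helper is hypothetical and not part of any tool.

```python
from pathlib import PurePosixPath


def describe_test(path: str) -> dict:
    """Derive resource, scenario, and format from tests/<resource>/<scenario>.<ext>."""
    parts = PurePosixPath(path).parts
    if "tests" not in parts:
        raise ValueError("path must contain a tests/ directory")
    idx = parts.index("tests")
    # Exactly one resource folder and one file are expected after tests/.
    if len(parts) - idx != 3:
        raise ValueError("expected tests/<resource>/<scenario>.<ext>")
    filename = PurePosixPath(parts[idx + 2])
    return {
        "resource": parts[idx + 1],
        "scenario": filename.stem.replace("_", " "),
        "format": filename.suffix.lstrip("."),
    }


print(describe_test("/tests/users/create_user_success.yml"))
```

A check like this could run in a pre-commit hook or lint step so that files outside the agreed layout are flagged before review.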

Defining Tests in Readable Formats

For API tests, you want formats that are easy for both humans and machines to read. This is where YAML and JSON really shine. They give you a structured way to define every part of a test—from the request payload to the expected outcome—without needing deep programming skills. This approach opens up the test suite to everyone, from QA specialists to product managers.

For example, a simple YAML file could specify the HTTP method, endpoint, headers, request body, and the expected status code. This declarative style keeps the focus squarely on what the test does, not how a complex script executes it. If you want to see this in action, our guide on how to create a test case breaks it down with clear, adaptable examples.
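
As a rough illustration of that declarative style, here is a JSON test case (JSON rather than YAML only so the sketch stays within Python's standard library) and a tiny loader that rejects incomplete definitions. The field names `request`, `expect`, and `status` are assumptions made for this example, not a schema defined by any particular tool.

```python
import json

# A declarative test case: what to send and what to expect, nothing about how.
TEST_CASE_JSON = """
{
  "name": "create_user_success",
  "request": {
    "method": "POST",
    "path": "/users",
    "headers": {"Content-Type": "application/json"},
    "body": {"email": "ada@example.com"}
  },
  "expect": {"status": 201}
}
"""

REQUIRED_REQUEST_KEYS = {"method", "path"}


def load_test_case(text):
    """Parse a test case and fail early if required fields are missing."""
    case = json.loads(text)
    missing = REQUIRED_REQUEST_KEYS - set(case.get("request", {}))
    if missing:
        raise ValueError(f"test case is missing request fields: {sorted(missing)}")
    if "status" not in case.get("expect", {}):
        raise ValueError("test case must declare expect.status")
    return case


def check_response(case, status):
    """Compare an observed HTTP status with the declared expectation."""
    return status == case["expect"]["status"]
```

A runner would send the declared request and pass the observed status to `check_response`; the definition file itself never needs to change when the runner does.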

This structured format doesn't just make tests easier to write and review; it makes them far easier to maintain. Better yet, once you have this structure, you can parameterize the files to run the same test logic with dozens of different data inputs. This drastically cuts down on duplicated effort and boosts your test coverage without cluttering your repository. It's a foundational technique for managing test cases at any kind of scale.

Decoupling Development with API Mocking

One of the biggest drags on development speed is a tale as old as time: the frontend team is ready to go, but the backend API they need isn't finished yet. This classic bottleneck forces everyone into a slow, sequential workflow, where progress grinds to a halt while one team waits on another.

The best way I've found to break this cycle is to decouple your development workflows with API mocking. It’s a game-changer.

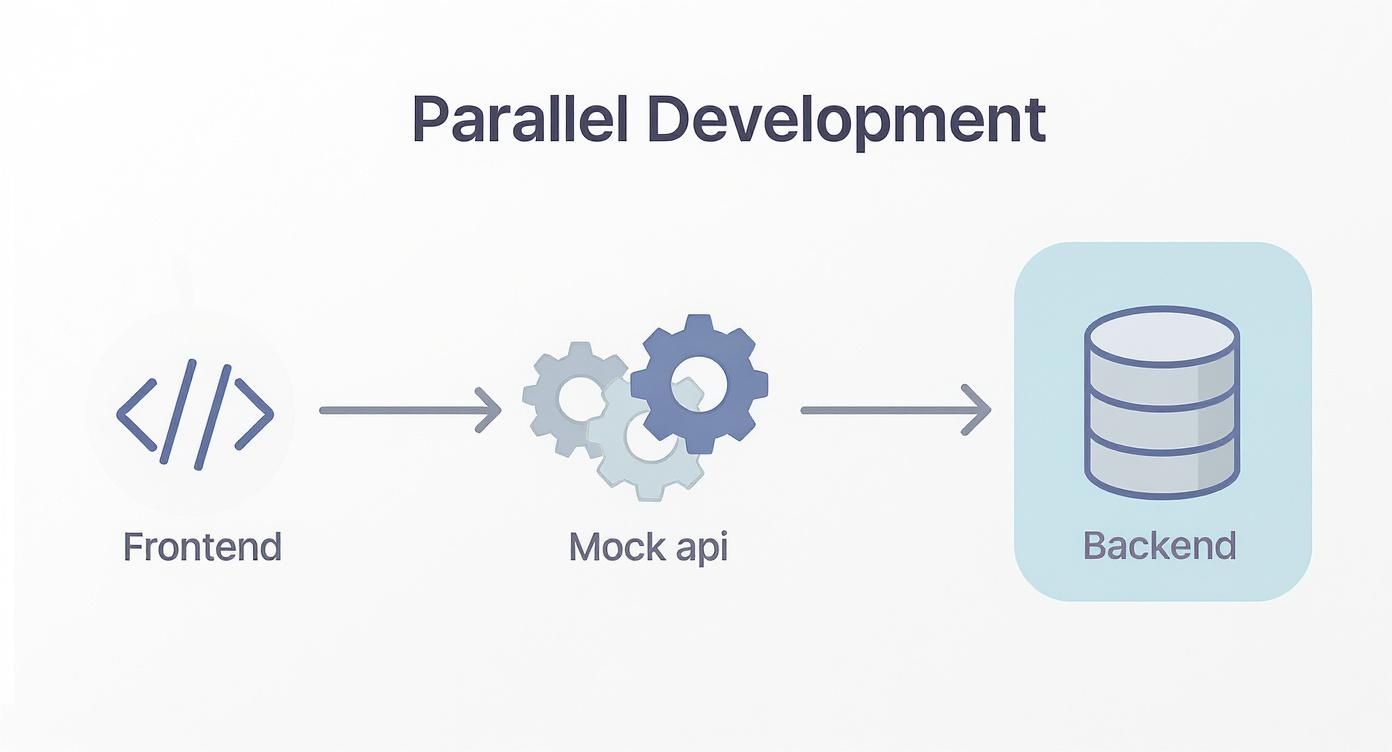

Essentially, API mocking lets you spin up a simulated backend that serves up predefined responses. This gives your frontend developers and QA engineers a stable, predictable endpoint to build and test against—long before the real API is even deployed. It’s the key to unlocking true parallel development and shipping features faster.

Simulating Real-World API Behavior

A good mock server does a lot more than just return a happy-path 200 OK response. If you're serious about testing, your mocks need to replicate the full range of API behaviors you'd see in the wild, both good and bad. This is where a tool like dotMock really shines, giving you the power to craft a scenario for just about anything.

Think about a simple user login flow. Your test plan has to cover way more than a successful login. What happens when a user types the wrong password? What if the server just goes down?

With API mocking, you can set up specific endpoints to simulate each of these states:

- Successful Login (200 OK): Returns a valid user profile and an auth token.

- Invalid Credentials (401 Unauthorized): Returns an error message like {"error": "Invalid username or password"}.

- User Not Found (404 Not Found): Simulates what happens when the user account doesn't exist.

- Server Error (500 Internal Server Error): Mimics a backend crash so you can see how gracefully your UI handles a total failure.

By building out these mocks, your frontend team can develop the entire user experience for every single path—success modals, error messages, loading spinners—without ever needing the live backend. This isn't just about moving faster; it's about building applications that are far more resilient and user-friendly.
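
To make the four login states concrete, here is a minimal hand-rolled mock built on Python's standard library. It is not how dotMock is configured; the `/login` path, the fixture user, and the magic `crash` username are all assumptions chosen for this sketch.

```python
import json
from http.server import BaseHTTPRequestHandler, ThreadingHTTPServer

USERS = {"ada": "correct-horse"}  # hypothetical fixture data


def login_response(username, password):
    """Return (status, body) for one of the four mocked login outcomes."""
    if username == "crash":  # magic username that simulates a backend failure
        return 500, {"error": "Internal server error"}
    if username not in USERS:
        return 404, {"error": "User not found"}
    if USERS[username] != password:
        return 401, {"error": "Invalid username or password"}
    return 200, {"user": {"username": username}, "token": "fake-token-123"}


class MockLoginHandler(BaseHTTPRequestHandler):
    def do_POST(self):
        if self.path != "/login":
            self.send_error(404)
            return
        length = int(self.headers.get("Content-Length", 0))
        payload = json.loads(self.rfile.read(length) or b"{}")
        status, body = login_response(payload.get("username"), payload.get("password"))
        data = json.dumps(body).encode()
        self.send_response(status)
        self.send_header("Content-Type", "application/json")
        self.send_header("Content-Length", str(len(data)))
        self.end_headers()
        self.wfile.write(data)

    def log_message(self, *args):  # keep test output quiet
        pass


def make_server(port=0):
    """Build the mock server; port 0 asks the OS for any free port."""
    return ThreadingHTTPServer(("127.0.0.1", port), MockLoginHandler)
```

Calling `make_server(3000).serve_forever()` would expose the mock locally. Keeping the decision logic in `login_response` means the scenarios can be unit-tested without opening a socket.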

The key is to treat your mocks like a contract. They define the expected behavior of the API, giving every team a stable foundation to build on. That predictability is the bedrock of any solid testing strategy.

Empowering Teams with Parallel Workflows

Once you have a library of mock responses ready, you’ve unlocked the ability for every team to work independently and at the same time. The backend team can keep plugging away at the real API logic, knowing the frontend isn't blocked. Meanwhile, the QA team can start writing and running automated tests against the predictable mock server.

While mocks and stubs are similar ideas, it's worth knowing the subtle but important distinctions, which you can read more about here: mocks vs. stubs in testing.

This parallel workflow completely changes your release cycle. Instead of a linear hand-off process, development and testing happen in tandem. UI bugs get caught and fixed sooner, and integration is much smoother because the frontend was built against a reliable contract from day one. It's a much more agile and proactive way to build software.

Automating Test Execution with CI/CD

Okay, so you’ve got your test cases neatly organized in Git. That’s a solid foundation, but the real payoff comes when you get it automated. Let's be honest, manually running tests is a bottleneck. It’s slow, prone to human error, and just doesn't work once your team and application start to grow.

The goal is to turn that test suite into an automated quality gate right inside your Continuous Integration/Continuous Delivery (CI/CD) pipeline. By wiring your API tests into a tool like GitHub Actions or Jenkins, you build a system that automatically checks every single change. A new commit? A pull request? The test suite runs, giving developers immediate feedback.

Integrating Mocks into Your Pipeline

One of the biggest headaches with automated testing in CI/CD is dealing with external dependencies. If your tests rely on a live staging server, you’re setting yourself up for failure. A network hiccup or a downed service can break the build, even when the code itself is perfectly fine. This is where those API mocks we’ve been talking about become your best friend.

Instead of hitting a real server, you just launch a mock server as part of your CI/CD job. This creates a completely isolated, self-contained testing environment. The pipeline doesn't need to reach out to anything external. It just spins up the mock, runs the tests against its predictable responses, and tears it all down. Simple.
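
The spin-up, run, tear-down cycle can be sketched as a context manager. This is an illustration using only the standard library, with a hypothetical `/users/1` response; a real pipeline would start whatever mock tool the team uses instead.

```python
import json
import threading
import urllib.request
from contextlib import contextmanager
from http.server import BaseHTTPRequestHandler, ThreadingHTTPServer


class MockHandler(BaseHTTPRequestHandler):
    def do_GET(self):
        # Every GET returns the same canned user; enough for a smoke test.
        body = json.dumps({"id": 1, "name": "Ada"}).encode()
        self.send_response(200)
        self.send_header("Content-Type", "application/json")
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)

    def log_message(self, *args):
        pass


@contextmanager
def mock_api():
    """Start the mock on a free local port, yield its base URL, then tear it down."""
    server = ThreadingHTTPServer(("127.0.0.1", 0), MockHandler)
    thread = threading.Thread(target=server.serve_forever, daemon=True)
    thread.start()
    try:
        host, port = server.server_address
        yield f"http://{host}:{port}"
    finally:
        server.shutdown()
        server.server_close()
        thread.join()


def run_suite():
    """Run one check against the mock and report whether it passed."""
    # An empty ProxyHandler keeps proxy environment variables from hijacking localhost calls.
    opener = urllib.request.build_opener(urllib.request.ProxyHandler({}))
    with mock_api() as base_url:
        with opener.open(f"{base_url}/users/1", timeout=5) as resp:
            return resp.status == 200 and json.load(resp)["name"] == "Ada"
```

Because the mock lives and dies inside the `with` block, nothing outside the job has to be running for the tests to pass.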

This infographic shows that same parallel workflow concept we discussed earlier, which applies directly to your automated pipeline.

You can see how the frontend and backend teams work independently with the mock API acting as the contract. This same principle makes your CI/CD tests faster and far more reliable. By decoupling your tests from live dependencies, the pipeline can focus only on what it's supposed to: the quality of the new code.

A Practical GitHub Actions Workflow

Getting this running isn't as complicated as it sounds. In GitHub Actions, for example, your workflow file would just need a few key steps.

- Checkout Code: First things first, you pull the latest code from the repo.

- Start Mock Server: Then, you add a command to launch your mock API. If you’re using a tool like dotMock, this can be as easy as a single line in your workflow YAML.

- Run API Tests: With the mock running, you just execute your test script. The key is making sure it points all its API calls to the local mock environment (e.g., localhost:3000).

- Report Results: The test runner does its thing, and the job passes or fails. A failed test should immediately block a PR from being merged.

Think of this as your first line of defense. It's a safety net that keeps code that breaks a tested behavior out of your main branch. This is how you prevent regressions and keep quality high.
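
The "run tests" and "report results" steps come down to two things: pointing the tests at the mock and exiting non-zero on failure. A minimal sketch of that script side follows; the `API_BASE_URL` variable name and the helper functions are assumptions for illustration, not part of GitHub Actions or any test runner.

```python
import os


def resolve_base_url(env=None):
    """Pick the API base URL; the workflow would set API_BASE_URL (hypothetical name)."""
    env = os.environ if env is None else env
    return env.get("API_BASE_URL", "http://localhost:3000")


def run_checks(checks, base_url):
    """Run each (name, callable) check and collect failure messages."""
    failures = []
    for name, check in checks:
        try:
            check(base_url)
        except AssertionError as exc:
            failures.append(f"{name}: {exc}")
    return failures


def exit_code(failures):
    """A non-zero exit code is what makes the CI job, and the PR check, fail."""
    return 1 if failures else 0


def check_targets_local_mock(base_url):
    # Guard against accidentally running the suite against a shared environment.
    assert base_url.startswith("http://localhost"), f"unexpected target {base_url}"
```

A workflow step would run a script that ends with `sys.exit(exit_code(failures))`, and branch protection can then require that job to pass before merging.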

This whole approach is fundamental to modern software delivery. If you want to go further on this topic, check out our guide on continuous integration best practices.

The financial upside here is real. Catching bugs early is always cheaper than fixing them in production. In fact, research shows that investing in related Test Data Management (TDM) solutions can cut overall software testing costs by an average of 5-10%. That's because a solid, automated testing process stops expensive problems before they ever get downstream. Ultimately, you’re building a system that ensures every single merge is production-ready.

Advanced Strategies for Bulletproof API Tests

Once you've got a solid, automated testing foundation in place, it's time to build a truly resilient test suite. The real world is chaotic and unpredictable, so your tests need to account for that. This means moving beyond simple success or failure checks and actively simulating the messy, imperfect conditions your users will definitely run into.

Advanced strategies are all about mimicking this chaos. For example, your app needs to handle poor network conditions without falling apart. Using a powerful API mocking tool like dotMock, you can configure mock endpoints to introduce artificial latency or even simulate dropped connections. This is how you see exactly how your UI behaves when a response is slow or never arrives, making sure you have proper loading states and timeout handling in place.
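
Here is a small standard-library sketch of the latency case only (dropped connections are not covered). The half-second delay, the `/slow` path, and the state names returned by `fetch_state` are assumptions for the example, and a dedicated mocking tool would expose latency as configuration rather than code.

```python
import socket
import threading
import time
import urllib.error
import urllib.request
from http.server import BaseHTTPRequestHandler, ThreadingHTTPServer


class SlowHandler(BaseHTTPRequestHandler):
    delay_seconds = 0.5  # artificial latency; tune per scenario

    def do_GET(self):
        time.sleep(self.delay_seconds)
        body = b'{"status": "ok"}'
        try:
            self.send_response(200)
            self.send_header("Content-Type", "application/json")
            self.send_header("Content-Length", str(len(body)))
            self.end_headers()
            self.wfile.write(body)
        except (BrokenPipeError, ConnectionResetError):
            pass  # the client already gave up, which is the scenario under test

    def log_message(self, *args):
        pass


def start_slow_mock():
    """Start the slow mock on a free port and return (server, url)."""
    server = ThreadingHTTPServer(("127.0.0.1", 0), SlowHandler)
    threading.Thread(target=server.serve_forever, daemon=True).start()
    return server, f"http://127.0.0.1:{server.server_address[1]}/slow"


def fetch_state(url, timeout):
    """Map a request outcome onto the state a UI would need to render."""
    opener = urllib.request.build_opener(urllib.request.ProxyHandler({}))
    try:
        with opener.open(url, timeout=timeout) as resp:
            return "loaded" if resp.status == 200 else "error"
    except socket.timeout:
        return "timed_out"
    except urllib.error.URLError as exc:
        return "timed_out" if isinstance(exc.reason, socket.timeout) else "unreachable"
```

Calling `fetch_state` with a timeout shorter than the mock's delay exercises the timeout path, while a generous timeout exercises the slow-but-successful path that should show a loading state.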

Testing Complex User Journeys

Testing isolated endpoints is critical, but it doesn't tell the whole story. Real users interact with your application through multi-step workflows, and your tests absolutely must validate these entire journeys. An effective end-to-end test doesn't just check one API call; it chains several together to replicate what a user actually does.

Think about a checkout process on an e-commerce site. A comprehensive test wouldn't just hit one endpoint. Instead, it would need to:

- Add an item to the cart, verifying the call to the cart service.

- Apply a discount code, validating the interaction with a promotions service.

- Process the payment, checking the call to the payment gateway.

- Confirm the order, ensuring the order service correctly records the transaction.

When you structure tests this way, you're validating the integration between multiple microservices and confirming that the entire business logic works from start to finish. This approach is fundamental when you manage test cases for complex, distributed systems.
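
The four checkout steps above can be sketched as one chained test. To keep the example self-contained, the cart, promotions, payment, and order services are collapsed into a single in-memory `FakeShop` class; the class, the `SAVE10` code, and the prices are all hypothetical stand-ins for real or mocked HTTP calls.

```python
class FakeShop:
    """In-memory stand-in for the cart, promotions, payment, and order services."""

    def __init__(self):
        self.cart = []
        self.discount_percent = 0
        self.orders = []

    def add_to_cart(self, sku, price_cents):
        self.cart.append((sku, price_cents))
        return {"items": len(self.cart)}

    def apply_discount(self, code):
        if code != "SAVE10":
            raise ValueError("unknown discount code")
        self.discount_percent = 10
        return {"discount_percent": self.discount_percent}

    def pay(self):
        subtotal = sum(price for _, price in self.cart)
        total = subtotal * (100 - self.discount_percent) // 100
        return {"status": "paid", "charged_cents": total}

    def confirm(self, payment):
        order = {"id": len(self.orders) + 1, "total_cents": payment["charged_cents"]}
        self.orders.append(order)
        return order


def checkout_journey(shop):
    """Chain the four steps; each assertion depends on state left by the previous one."""
    assert shop.add_to_cart("book-1", 2000)["items"] == 1
    assert shop.apply_discount("SAVE10")["discount_percent"] == 10
    payment = shop.pay()
    assert payment["status"] == "paid"
    order = shop.confirm(payment)
    assert order["total_cents"] == 1800  # 2000 cents minus the 10% discount
    return order
```

The value of the journey test is in the hand-offs: the final total is only right if the discount applied in step two actually reached the payment in step three.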

Embracing Dynamic and Parameterized Testing

Hardcoding test data is a maintenance nightmare. I've seen teams waste countless hours updating static values in their test files every time the application evolves. The solution is to embrace test parameterization, a technique where you run the same test logic with many different data inputs.

Instead of writing separate tests for a valid user, an invalid user, and a locked-out user, you create one parameterized test. This single test is then fed a dataset containing all three scenarios. This approach dramatically cuts down on redundant code and makes your test suite much leaner and easier to manage.

When you manage test cases with parameterization, you’re not just saving time—you're increasing coverage. It becomes trivial to test dozens of edge-case inputs without bloating your test repository, leading to a much more robust application.
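
A minimal sketch of that idea with Python's built-in `unittest` follows: one test method, three data rows. The `login_status` function, the fixture accounts, and the choice of 423 for a locked account are assumptions standing in for a call to the real or mocked API.

```python
import unittest

# Hypothetical fixture accounts standing in for the system under test.
ACCOUNTS = {
    "ada": {"password": "pw", "locked": False},
    "bob": {"password": "pw", "locked": True},
}


def login_status(username, password):
    """Return the HTTP status a login attempt would produce."""
    account = ACCOUNTS.get(username)
    if account is None or account["password"] != password:
        return 401
    if account["locked"]:
        return 423
    return 200


# One row per scenario: label, username, password, expected status.
CASES = [
    ("valid user", "ada", "pw", 200),
    ("invalid user", "mallory", "nope", 401),
    ("locked-out user", "bob", "pw", 423),
]


class LoginTests(unittest.TestCase):
    def test_login_scenarios(self):
        for label, username, password, expected in CASES:
            # subTest reports each row separately instead of stopping at the first failure.
            with self.subTest(label):
                self.assertEqual(login_status(username, password), expected)
```

Adding a new edge case is then a one-line change to `CASES`, and the data could just as easily be loaded from a file in the test repository.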

Beyond basic functional tests, your strategy also needs to include testing for resilience against attacks and performance bottlenecks. Proactively addressing security vulnerabilities like ReDoS ensures your API can withstand malicious inputs without crashing. These strategies, used together, build deep confidence in your application's ability to perform reliably under pressure, protecting both your infrastructure and your user experience.

Common Questions About Managing API Tests as Code

Moving your test cases into code is a big shift, especially if your team is used to traditional test management tools. It's a change in mindset, but the payoff in speed and clarity is huge. Let's walk through a few of the questions that always come up when teams make this transition.

How Do You Handle Versioning for the API and Its Tests?

This is actually one of the biggest wins of a code-based approach. When your tests live in the same repository as your application, versioning becomes almost automatic. The key is smart use of Git branches.

Imagine your team is building v2 of a major API. You'd create a v2-feature branch. All the new API code and the tests for that new code live together on that branch. It’s a self-contained unit. This keeps your main branch clean, with tests that only validate what’s currently in production.

Once you’re ready to release, you can use Git tags to create a permanent snapshot. This gives you an exact, auditable record of the application code and its corresponding tests at a specific point in time.

This tight coupling is the whole point. You’ll never again have to guess which version of a test spreadsheet matches which version of the software.

What's the Best Way to Keep Mocks and Real APIs in Sync?

This is a great question, because an outdated mock can cause more problems than it solves. The most reliable way I've seen to handle this is to generate your mocks directly from a shared API contract, like an OpenAPI (Swagger) specification.

This "contract-first" workflow makes your API spec the single source of truth. Whenever the contract gets updated, you just regenerate the mocks. Simple.

For an extra layer of safety, you can add contract testing to your CI/CD pipeline. These are quick, automated checks that confirm your mock server's responses still align with the latest API contract, so drift gets caught as soon as it appears instead of surfacing weeks later.
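
To show the shape of such a check, here is a deliberately small sketch that compares a mock response with a schema fragment in the style of an OpenAPI object definition. It handles only `required` and flat `properties` types; the `USER_SCHEMA` fragment and helper names are assumptions, and a real pipeline would more likely use a full schema validator.

```python
TYPE_MAP = {"string": str, "integer": int, "boolean": bool, "object": dict, "array": list}

# Hypothetical schema fragment, shaped like an OpenAPI object definition.
USER_SCHEMA = {
    "type": "object",
    "required": ["id", "email"],
    "properties": {
        "id": {"type": "integer"},
        "email": {"type": "string"},
    },
}


def _matches(value, type_name):
    if type_name == "integer":
        # bool is a subclass of int in Python, so exclude it explicitly.
        return isinstance(value, int) and not isinstance(value, bool)
    return isinstance(value, TYPE_MAP[type_name])


def contract_violations(schema, payload):
    """List the ways a mock response disagrees with the contract."""
    problems = []
    for field in schema.get("required", []):
        if field not in payload:
            problems.append(f"missing required field: {field}")
    for field, spec in schema.get("properties", {}).items():
        if field in payload and not _matches(payload[field], spec["type"]):
            problems.append(f"{field} should be {spec['type']}")
    return problems
```

Running this against every canned mock response in CI turns a silent mismatch into a failed build with a readable message.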

Can This Test Management Approach Scale for Large Teams?

Absolutely. In fact, a Git-based workflow scales far better than most traditional tools, especially for distributed teams. Whether you prefer a monorepo or choose to link multiple repositories, the system works.

For bigger organizations, features like GitHub's CODEOWNERS file are incredibly useful. You can assign ownership of different test suites to specific teams, making sure the right people are always reviewing the right changes. The pull request process itself acts as a mandatory peer review for any test modification, which is fantastic for maintaining quality and sharing knowledge.
