How to Create a Test Case That Actually Works

At its core, creating a test case is all about documenting clear, repeatable steps to verify if a feature actually works as expected. This means defining any necessary preconditions, outlining the precise actions a user (or the system) takes, and stating exactly what the successful outcome should look like.

Why Great Test Cases Are Your Secret Weapon

Let's get beyond the textbook definitions. A well-crafted test case is so much more than a simple checklist. Think of it as a strategic tool that safeguards your product's quality and acts as a vital communication bridge. When you get it right, it connects developers, QA engineers, and product managers with a single, shared understanding of how a feature is supposed to behave.

That kind of clarity is absolutely essential in modern development. In today's fast-paced Agile and DevOps environments, rapid release cycles are just part of the job. Without clear, repeatable testing protocols, teams are just gambling, risking shipping bugs that kill user trust and lead to expensive, frustrating fixes after launch.

The True Impact on Quality and Collaboration

Effective test cases introduce a much-needed layer of discipline and predictability into the development lifecycle. They force everyone on the team to think critically about the requirements before a single line of code is ever written. This simple act often uncovers ambiguities and potential edge cases early on, which is almost always cheaper and more efficient than fixing bugs found later.

The benefits are real and immediate:

- Reduced Ambiguity: They get rid of the guesswork. A test case is an exact script for validation, which means the test will be consistent no matter who runs it.

- Improved Traceability: When you link each test case back to a specific requirement or user story, you create an audit trail that shows exactly which requirements have been verified.

- Enhanced Team Alignment: A developer can read a test case and know precisely how their code is going to be judged. This almost always leads to a better first draft of the code. You can explore a variety of software testing techniques to build out your team's strategy.

A great test case doesn't just find bugs; it prevents them. It becomes the ultimate source of truth for a feature's intended behavior, guiding development and ensuring the final product actually meets user expectations.

Balancing Manual Effort with Automation

Writing test cases manually is still a huge part of quality assurance, but there's no denying the industry is shifting. By 2025, automation is expected to be part of the quality assurance process in 55% of companies, and 77% of organizations are already actively using some form of automated software testing.

Even with this trend, a surprising 48% of enterprises report that relying too heavily on manual testing is slowing down their releases. You can dig into more statistics on this shift in this detailed industry report.

Investing the time up front to write high-quality test cases is one of the smartest moves a team can make. It builds a solid foundation for both robust manual validation and a scalable automation strategy down the road, which is the key to superior product reliability and long-term success.

Deconstructing an Effective Test Case

A truly great test case is like a perfect recipe. It has a clear list of ingredients and a set of simple, unambiguous steps that anyone—from a senior developer to a brand-new QA intern—can follow to get the exact same result every time. If you leave anything out or make a step confusing, you're going to get inconsistent results. And that's how bugs make their way into production.

The whole point is to create a document that stands on its own. No one should have to track you down to ask, "Hey, what did you mean by this?" It should be self-contained, clear, and executable. Let’s break down exactly what goes into a test case that hits that mark.

The Five Pillars of a Solid Test Case

When I'm training new testers, I tell them to focus on five core elements. These aren't just suggestions; they're the absolute essentials that make a test case reliable and valuable. Get these right, and you're building a repeatable process that actually catches issues.

Here's what every solid test case needs:

- Test Case ID: Think of this as a unique serial number, like TC-LOGIN-001. This little identifier is your best friend for traceability. You’ll use it to link to bug reports in Jira, reference it in test plans, and map it to automated scripts.

- Title or Summary: This needs to be short, sweet, and tell you exactly what the test is for. "Verify Successful User Login with Valid Credentials" is perfect. "Login Test" is not; it’s just too vague.

- Preconditions: What needs to be true before you even start the test? This is where you list those things. For example, you might need a specific user account type ("User must have 'Admin' role") or need to be on a certain page ("Tester must be on the main login screen").

- Actionable Steps: This is the heart of the test case—a numbered list of the exact actions to take. Each step should be a clear command. Write it like you're telling someone what to click, type, or observe, leaving no room for guesswork.

- Expected Result: This is your definition of "success." What should happen if everything works perfectly? Be specific. "User is redirected to the dashboard page and a 'Welcome!' message appears" is a great expected result. "It works" is not.

A test case without a crystal-clear expected result is basically useless. It’s the single most important part because it removes all subjectivity and establishes the pass/fail benchmark for that feature.

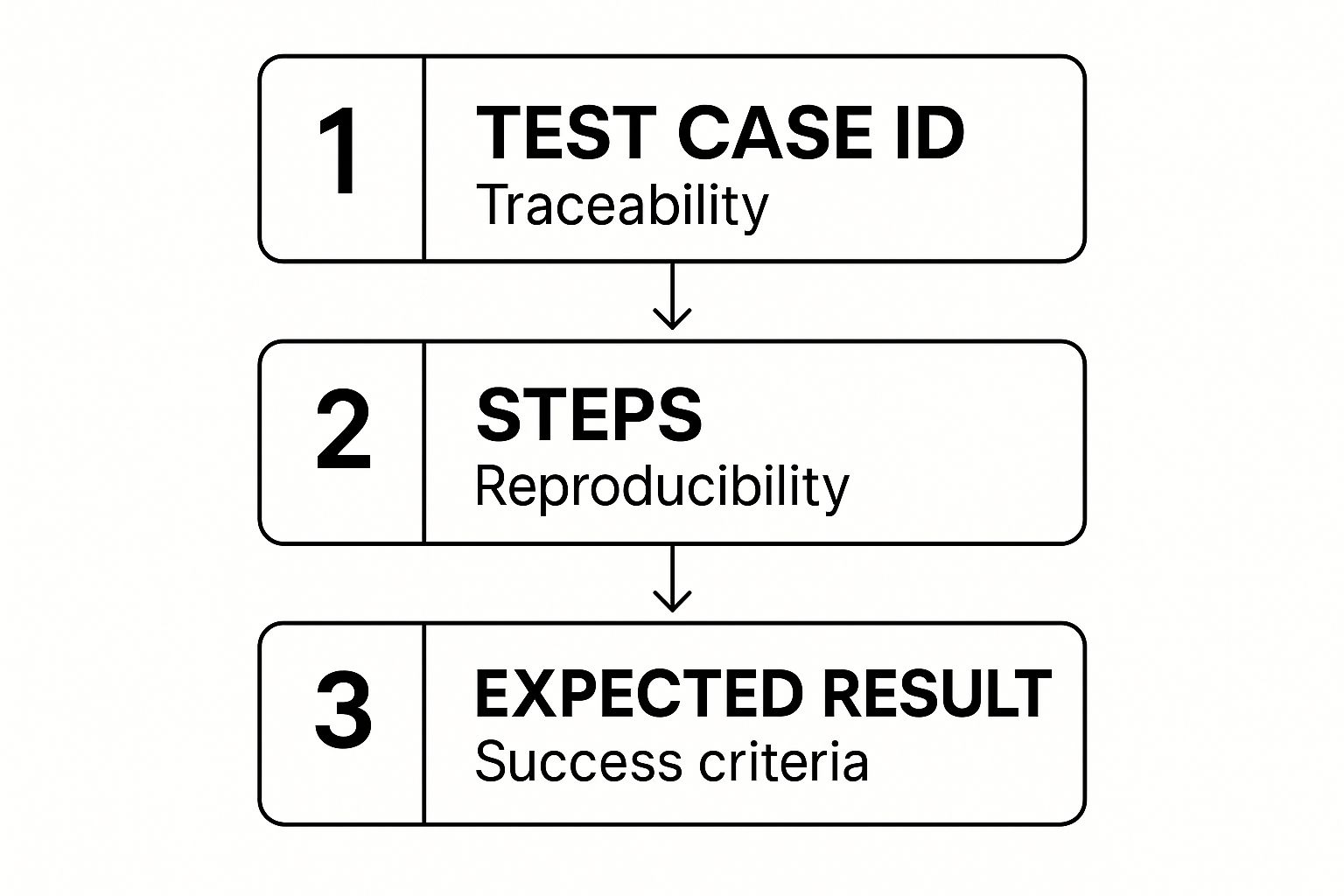

This simple flow is the key to a test case that actually works in the real world.

The unique ID makes it traceable, the steps make it repeatable, and the expected result makes it definitive.

To get a better handle on the components that make up a high-quality test case, this table breaks down each field with a simple, practical example.

Core Components of a High-Quality Test Case

| Component | Purpose | Example |
|---|---|---|
| Test Case ID | A unique code for tracking and reference. | TC-PAY-005 |
| Title | A brief, clear summary of the test's goal. | Verify checkout with an expired credit card |
| Preconditions | The state the system must be in before the test begins. | 1. User is logged in. 2. At least one item is in the shopping cart. |
| Steps | The specific actions the tester must perform, in order. | 1. Navigate to the shopping cart. 2. Click "Proceed to Checkout". 3. Enter an expired credit card number. 4. Click "Submit Payment". |
| Expected Result | The specific outcome that indicates the test passed. | An error message "Card is expired" is displayed. The order is not placed. |

Nailing these components turns your test cases from simple instructions into valuable assets for the entire development lifecycle.
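If your team keeps test cases in code or exports them from a tool, the five components map naturally onto a small record type. The following is a minimal sketch, not a standard format: the `ManualTestCase` class and its `missing_fields` helper are names invented here for illustration, and the data is the TC-PAY-005 example from the table.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ManualTestCase:
    """One manual test case with the five core components (hypothetical type)."""
    case_id: str
    title: str
    preconditions: tuple[str, ...]
    steps: tuple[str, ...]
    expected_result: str

    def missing_fields(self) -> list[str]:
        """Return the names of any components that were left empty."""
        names = ("case_id", "title", "preconditions", "steps", "expected_result")
        return [name for name in names if not getattr(self, name)]


# The expired-card example from the table, expressed as data.
expired_card = ManualTestCase(
    case_id="TC-PAY-005",
    title="Verify checkout with an expired credit card",
    preconditions=(
        "User is logged in.",
        "At least one item is in the shopping cart.",
    ),
    steps=(
        "Navigate to the shopping cart.",
        'Click "Proceed to Checkout".',
        "Enter an expired credit card number.",
        'Click "Submit Payment".',
    ),
    expected_result='An error message "Card is expired" is displayed. '
                    "The order is not placed.",
)
```

A check like `expired_card.missing_fields()` could run in a review script to flag test cases that were saved without, say, an expected result.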

And if you’re working with back-end systems, having a good grasp of API documentation best practices is a huge advantage. It gives you the inside scoop on expected behaviors, parameters, and error codes, making it much easier to write precise and effective test cases.

From Feature to Flawless Test Case

Alright, let's bridge the gap between theory and what you’ll actually do every day. Crafting a solid test case isn't about mindlessly filling out a template. It’s a bit of an art form, really—translating a simple feature request or user story into a concrete, repeatable set of instructions. It all starts with breaking down the requirement to figure out what "done" truly means.

Let’s walk through a classic e-commerce example that every QA engineer has tackled: the "Add to Cart" button. It sounds deceptively simple, but a truly great test plan goes way beyond just the happy path.

Dissecting the User Story

First things first, you have to tear the requirement apart. A typical user story for this feature might sound something like this: "As a shopper, I want to add an item to my shopping cart so that I can purchase it later." From that one sentence, we can pull out a whole host of testable scenarios.

This is where you need to think beyond the obvious. Sure, the main action is clicking the button. But what else is supposed to happen? What could go wrong?

- Positive Scenarios (The Happy Path): This is your bread and butter—what happens when everything goes right. Can a user add a single item? Can they add multiple quantities of the same item?

- Negative Scenarios (What If...): Now you get to try and break it. What if the item is out of stock? What if someone tries to add zero or a negative number of items?

- Edge Cases (The Weird Stuff): These are the unlikely but totally possible situations that often cause the biggest headaches. What happens if a user tries to add 10,000 items? Or what if they just hammer the "Add to Cart" button over and over again?

Brainstorming these scenarios before you write a single step is the secret sauce. This proactive thinking is what elevates a basic check into an effective test that actually uncovers critical bugs before they reach production.
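One way to keep a brainstormed list honest is to write it down as a table of inputs and expected outcomes. The sketch below assumes a made-up `add_to_cart` rule and an invented per-order limit (`MAX_PER_ORDER`); neither comes from a real system, and your product's rules will differ. The repeated-click case is left out because it needs stateful behavior this toy function does not model.

```python
MAX_PER_ORDER = 99  # assumed business limit, purely illustrative


def add_to_cart(stock: int, quantity: int) -> str:
    """Hypothetical cart rule: return 'added' or the reason for rejection."""
    if quantity <= 0:
        return "invalid quantity"
    if stock == 0:
        return "out of stock"
    if quantity > MAX_PER_ORDER:
        return "quantity limit exceeded"
    if quantity > stock:
        return "insufficient stock"
    return "added"


# (scenario type, description, stock, quantity, expected outcome)
SCENARIOS = [
    ("positive", "add a single item", 10, 1, "added"),
    ("positive", "add multiple quantities of the same item", 10, 3, "added"),
    ("negative", "item is out of stock", 0, 1, "out of stock"),
    ("negative", "add zero items", 10, 0, "invalid quantity"),
    ("negative", "add a negative number of items", 10, -2, "invalid quantity"),
    ("edge", "add 10,000 items", 50_000, 10_000, "quantity limit exceeded"),
]


def run_scenarios() -> list[tuple[str, bool]]:
    """Run every scenario and report (description, passed)."""
    return [
        (description, add_to_cart(stock, quantity) == expected)
        for _kind, description, stock, quantity, expected in SCENARIOS
    ]
```

Each row in `SCENARIOS` is a candidate for its own written test case with a unique ID.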

Building the Test Case, Piece by Piece

Now that we have a list of scenarios, let's build a real test case. We'll tackle a negative scenario: trying to add an out-of-stock item.

Test Case ID: TC-CART-003

Title: Verify user cannot add an out-of-stock item to the cart

See how the title is specific and action-oriented? It immediately tells anyone who reads it what the intent is and what the expected outcome should be. No guesswork required.

Preconditions and Precise Steps

Next, we need to set the stage. What has to be true before this test can even start?

Preconditions:

- The user is logged into a valid customer account.

- A product exists in the database with its stock quantity set to 0.

- The user is on the product detail page for that specific out-of-stock item.

These preconditions are non-negotiable; they eliminate ambiguity and ensure any tester knows exactly how to set up the environment. From there, we lay out the steps. They have to be so clear that a brand-new team member could execute them perfectly.

Steps:

1. Locate the "Add to Cart" button on the page.

2. Observe that the button is disabled or greyed out.

3. Attempt to click the "Add to Cart" button.

4. Navigate to the shopping cart page to confirm it's empty.

Defining a Verifiable Outcome

This is the most important part: the expected result. A vague statement like "it doesn't add the item" is useless. You have to be precise.

Expected Result:

- The "Add to Cart" button should not be clickable.

- A clear "Out of Stock" message must be displayed near the button.

- The cart icon in the site header should still show a count of 0 items.

- The shopping cart page itself should be empty.

This level of detail guarantees that any tester, manual or automated, will arrive at the same conclusion. For teams ready to scale their quality efforts, this is exactly the kind of well-defined manual case that makes a perfect blueprint for automated functional testing. By following this process, you transform a simple feature idea into a flawless, actionable test case every time.
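To show how directly TC-CART-003 translates into an automated check, here is a rough sketch that runs against an in-memory stand-in rather than a real browser. `FakeStorefront` and the `"hypothetical-sku"` product are assumptions made for this example; a real script would drive the actual page through a tool such as Selenium, Cypress, or Playwright.

```python
from dataclasses import dataclass, field


@dataclass
class FakeStorefront:
    """In-memory stand-in for the product page and cart (hypothetical)."""
    stock: int
    cart: list[str] = field(default_factory=list)

    @property
    def add_button_enabled(self) -> bool:
        return self.stock > 0

    @property
    def stock_message(self) -> str:
        return "" if self.stock > 0 else "Out of Stock"

    @property
    def cart_count(self) -> int:
        return len(self.cart)

    def click_add_to_cart(self, product: str) -> None:
        # A disabled button ignores clicks, so nothing changes.
        if self.add_button_enabled:
            self.cart.append(product)
            self.stock -= 1


def check_tc_cart_003() -> dict[str, bool]:
    """TC-CART-003: user cannot add an out-of-stock item to the cart."""
    # Precondition: a product exists with its stock quantity set to 0.
    page = FakeStorefront(stock=0)

    # Steps: observe the button state, then attempt the click anyway.
    button_disabled = not page.add_button_enabled
    page.click_add_to_cart("hypothetical-sku")

    # One entry per line of the written expected result.
    return {
        "button is not clickable": button_disabled,
        "out-of-stock message is shown": page.stock_message == "Out of Stock",
        "header cart count is 0": page.cart_count == 0,
        "cart page is empty": page.cart == [],
    }
```

Returning one named boolean per expected result keeps the automated check aligned with the manual case, so a failure points at the exact line of the written test that broke.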

Writing Test Cases People Actually Want to Use

Anyone can fill in the blanks on a template. The real skill is crafting a test case that’s genuinely useful—not just for you, but for the entire team. A developer shouldn't need a decoder ring to figure out what you're trying to test, and another QA engineer should be able to pick it up a year from now and run it without any confusion.

The best test cases are built with a real human audience in mind. This is the same thinking that goes into building a chatbot that people actually use; the core principle is identical. It all comes down to clarity and designing for the end-user. That means dropping the jargon and writing in a simple, direct style.

Write for Clarity and Reusability

If you want to make an immediate impact, focus on writing with absolute clarity. Each step should be a direct command, leaving zero room for interpretation or guesswork.

- Be Specific: Don't just write "Check user profile." Instead, be explicit: "Click the 'Profile' icon in the top-right corner and verify the user's email address is displayed correctly."

- Avoid Assumptions: Never assume the person running the test knows what you know. If a specific user account with admin rights is needed, state that clearly in the preconditions.

- Keep It Modular: Break down complex flows into smaller, focused test cases. A single, reusable "Verify Successful User Login" test is far more valuable than embedding those same login steps into twenty different test cases.

Think of your test case as a story about how a feature is supposed to work. Make it an easy read. If someone has to tap you on the shoulder for clarification, the test case has already failed, even before the test begins.

This mindset doesn't just help you find bugs. It helps you build a library of reliable, reusable tests that your team can maintain and adapt as the product evolves.
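The same modular idea carries over once tests become code. As a small sketch, assume a hypothetical `login` helper and a made-up fixture account in `USERS`; both checks below reuse the one login step instead of repeating it.

```python
# Hypothetical fixture data: email -> (password, role).
USERS = {"admin@example.com": ("s3cret", "Admin")}


def login(email: str, password: str) -> dict:
    """Reusable step: return a session dict, or raise on bad credentials."""
    record = USERS.get(email)
    if record is None or record[0] != password:
        raise PermissionError("invalid credentials")
    return {"email": email, "role": record[1]}


def check_successful_login() -> bool:
    """Verify Successful User Login with Valid Credentials."""
    session = login("admin@example.com", "s3cret")
    return session["role"] == "Admin"


def check_profile_shows_email() -> bool:
    """Verify the profile displays the logged-in user's email address."""
    # Reuses the login step rather than restating its instructions.
    session = login("admin@example.com", "s3cret")
    profile_page = {"displayed_email": session["email"]}
    return profile_page["displayed_email"] == "admin@example.com"
```

If the login flow changes, only `login` needs updating, which is the maintenance payoff of keeping steps modular.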

Embrace the Future of Test Creation

The world of software testing is constantly changing. We're seeing a massive shift with the rise of AI in test automation, a market expected to jump from $0.6 billion in 2023 to around $3.4 billion by 2033. This isn't just hype; AI is genuinely helping teams generate test cases, optimize their coverage, and even predict potential issues without tons of manual effort.

But even as these powerful tools become more common, the fundamentals don't change. A clear, well-structured manual test case is the perfect blueprint for an automated script down the line. By getting these best practices right today, you’re not just improving your tests—you’re making the entire development process more efficient.

Bridging Manual Test Cases with Automation

In any fast-moving development environment, a great manual test case does more than just check a box—it's the perfect blueprint for a powerful automated script. Think of it as the bridge between human insight and machine efficiency. This connection is how you scale up your quality assurance without losing the precision that matters most.

The real trick is deciding what to automate. It's a strategic call, because not every test case is a good fit. I always look for the tests that are repetitive, predictable, and cover those critical user paths you absolutely have to check over and over again.

Identifying Prime Automation Candidates

What tests does your team run constantly? The ones that are becoming a real drag? Those are almost always the best place to start with automation. Get them off your team's plate so they can focus on more complex, exploratory work.

Here are the usual suspects that are ripe for automation:

- Regression Tests: These are the big ones. They make sure new code changes haven't secretly broken something that used to work. Running a full regression suite by hand can take days, but an automated suite can knock it out in hours, sometimes even minutes.

- Data-Driven Tests: I love these for automation. Any time you need to test the same workflow with tons of different data—like logging in with 100 different user accounts—automation is a lifesaver. You can dig deeper into effective data-driven testing to see just how much coverage you can gain here.

- Smoke Tests: These are your first line of defense after a new build. They're quick checks to verify that the most critical functions are still standing. Automating them gives you an instant "go/no-go" signal for every single deployment.

The goal isn't to automate everything. The goal is to automate the right things. A balanced strategy uses the raw speed of automation for the grunt work, while saving your team’s brainpower for exploratory and usability testing.
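A data-driven test is essentially one workflow looped over many rows of data. The sketch below illustrates the "100 different user accounts" idea with generated placeholder accounts; `build_accounts`, `authenticate`, and `run_login_suite` are illustrative names, and `authenticate` is only a stand-in for whatever login workflow you are actually testing.

```python
def build_accounts(count: int) -> dict[str, str]:
    """Generate hypothetical email -> password pairs to use as test data."""
    return {
        f"user{i:03d}@example.com": f"pw-{i:03d}"
        for i in range(1, count + 1)
    }


def authenticate(accounts: dict[str, str], email: str, password: str) -> bool:
    """Stand-in for the login workflow under test."""
    return accounts.get(email) == password


def run_login_suite(accounts: dict[str, str]) -> list[str]:
    """Run the same workflow once per data row; return the emails that failed."""
    failures = []
    for email, password in accounts.items():
        if not authenticate(accounts, email, password):
            failures.append(email)
    return failures


accounts = build_accounts(100)
failed = run_login_suite(accounts)
```

In practice the rows would more likely come from a CSV file or a test-data service, but the shape stays the same: the steps are written once and the data varies.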

Where Human Intuition Still Wins

While automation is a workhorse for repetitive tasks, it has zero curiosity. It can't replicate that human gut feeling or spot a weird usability issue that wasn't spelled out in a test case.

This is where manual testing, especially exploratory testing, is still king. Scenarios that involve complex UI validation, checking the visual appeal of a new design, or following unpredictable user journeys are where a human tester will always find things a script would miss.

The way we write test cases has changed a lot as automation has become more common. This shift is happening for a good reason. The automation testing market was valued at USD 17.71 billion in 2024 and is expected to hit a staggering USD 63.05 billion by 2032. You can learn more about these market findings on Fortune Business Insights. This incredible growth shows just how much teams are relying on automated suites, all built on the foundation of a well-written manual test case.

Tools like Selenium, Cypress, and Playwright are what help us translate the clear logic of a manual test case into code. When you start with a thoughtfully written manual test, you're handing your developers or automation engineers a clear roadmap. It's the best way to ensure the final script is robust, accurate, and truly tests what the feature is supposed to do.

Common Questions I Hear About Writing Test Cases

As you get your hands dirty writing test cases, a few questions always seem to surface. It doesn't matter if you've been doing this for a decade or you're just starting out; these are the sticking points that come up time and again. Let's clear them up.

How Much Detail is Too Much?

Finding the right level of detail in a test case is a classic balancing act. I've seen it go both ways, and neither is pretty. Too little detail, and you're fielding questions all day. Too much, and you've created a maintenance nightmare.

The sweet spot is reproducibility without ambiguity. A new tester, maybe someone who just joined the team, should be able to pick up your test case and run it without needing to ask you anything. That's the gold standard.

Think about who's going to be reading it. Are you on a small team of seasoned pros who know the product inside and out? You can probably get away with less detail. But if you're working with a mix of junior and senior folks, or if you're outsourcing testing, then more explicit, granular steps are your best friend. My personal rule of thumb: each step should be a single, clear action.

What’s the Real Difference Between a Test Case and a Test Scenario?

Ah, the classic. This one trips up a lot of people. The easiest way to think about it is in terms of hierarchy: scenarios are broad, and test cases are narrow and specific.

Test Scenario: This is the big picture. It’s a high-level description of what needs to be tested, usually tied to a user goal or a feature. For example: "Verify a user can successfully log in." It answers the question, "What are we trying to prove?"

Test Case: This is the nitty-gritty. It’s a set of concrete, step-by-step instructions to test one specific piece of that scenario. For our "Verify a user can successfully log in" scenario, you'd have a whole family of test cases: "Test login with valid credentials," "Test login with an invalid password," "Test login with a locked-out account," and so on.

A single test scenario is often validated by a whole suite of individual test cases, each one covering a specific condition or path through that scenario.

Do I Really Need a Test Case for Every Single Requirement?

In a perfect world, absolutely. Connecting every test case back to a requirement is the bedrock of traceability. This gives you a clear, auditable trail that proves every single feature has been tested. It’s incredibly valuable for quality reports, regulatory compliance, and figuring out what might break when you change something.

But let's be realistic. We don't live in a perfect world. You need to be practical.

Pour your energy into creating rock-solid test cases for all your functional requirements—the things that define what the system must do. For non-functional requirements, like performance or security, you might not use individual test cases in the same way. Instead, you'll likely have a separate performance test plan or a security audit process. The goal isn't necessarily a one-to-one match, but ensuring you have documented coverage for everything that actually matters to the user.
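Documented coverage is easy to check mechanically once test cases carry requirement links. Here is a minimal traceability sketch; the requirement IDs are invented for illustration, and the mapping format is an assumption rather than the export format of any particular tool.

```python
# Hypothetical requirement IDs; REQ-SEARCH-1 deliberately has no test yet.
REQUIREMENTS = ["REQ-LOGIN-1", "REQ-CART-1", "REQ-PAY-1", "REQ-SEARCH-1"]

# Each test case lists the requirements it verifies.
TEST_CASES = {
    "TC-LOGIN-001": ["REQ-LOGIN-1"],
    "TC-CART-003": ["REQ-CART-1"],
    "TC-PAY-005": ["REQ-PAY-1"],
}


def build_matrix(requirements, test_cases):
    """Map every requirement to the test cases that cover it."""
    matrix = {req: [] for req in requirements}
    for case_id, covered in test_cases.items():
        for req in covered:
            matrix.setdefault(req, []).append(case_id)
    return matrix


def uncovered(matrix):
    """Return requirements that no test case currently verifies."""
    return sorted(req for req, cases in matrix.items() if not cases)


matrix = build_matrix(REQUIREMENTS, TEST_CASES)
gaps = uncovered(matrix)
```

Here `gaps` lists the requirements that still need a test case or an explicit decision that another process, such as a performance test plan, covers them.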
