A Practical Guide to API Endpoint Testing

When we talk about API endpoint testing, we're essentially checking that every individual URL in your API is doing its job correctly. Think of it as knocking on a specific door and making sure the right person answers in the way you expect. It's about sending a request to an endpoint and verifying it returns the right response, handles data properly, and doesn't fall over when something goes wrong. This focused testing is the bedrock of building reliable and secure software.

Why API Endpoint Testing Is a Business Imperative

Before we get into the nitty-gritty of how to test, it's worth taking a moment to appreciate the why. In today's interconnected world, APIs are the silent workhorses holding our digital experiences together. They're the plumbing behind mobile apps, e-commerce sites, and sprawling microservice architectures. Each endpoint is a critical gateway for data and functionality—and unfortunately, for risk.

Skipping comprehensive API endpoint testing isn't just a technical shortcut; it's a direct business liability. A weak or faulty endpoint can have serious consequences that hit your bottom line and tarnish your reputation.

The Real-World Risks of Poor Testing

Inadequate testing isn't just about finding a few bugs. Every single endpoint represents a potential point of failure or an attack vector that someone could exploit. If you want a deeper look at the fundamentals, our guide on what API testing is and why it matters is a great place to start.

Here's what you're really risking by not testing thoroughly:

- Data Breaches and Security Gaps: An improperly secured endpoint can be an open door to sensitive user data, leading to massive compliance fines and a complete loss of customer trust.

- Revenue Loss from Unreliable Services: Imagine your payment processing or user signup endpoint goes down. Your business operations screech to a halt, and every minute of downtime costs you real money and customers.

- Reputation Damage: A buggy, slow, or unreliable API creates a terrible user experience. In the age of social media, bad news travels fast, and a reputation for being unreliable is incredibly hard to shake.

- Skyrocketing Development Costs: Finding a bug after you've gone live is exponentially more expensive and stressful to fix than catching it early in development. Good testing saves you from those painful, costly fire drills.

The modern application is built on APIs. Treating endpoint testing as an afterthought is like building a skyscraper and neglecting to check if the doors lock. It's a foundational pillar of security, reliability, and trust.

A Growing Attack Surface

As APIs have become more common and complex, they've also become a favorite target for cyberattacks. The scary part? Research predicts that by 2025, a whopping 95% of API attacks will come from authenticated sessions. This means attackers are getting past the front door and then looking for vulnerabilities inside the system.

This trend highlights a major security gap. Many companies don't even have a complete inventory of all their APIs, which is like not knowing how many windows your house has. In fact, only about 10% of organizations have a formal API governance strategy. As your services expand, thorough and automated testing becomes absolutely non-negotiable to defend against these evolving threats.

Building Your API Testing Environment

Before you even think about writing your first test, you need to get your house in order. A truly effective API testing strategy is built on a clean, consistent, and scalable testing environment. This is your foundation—it’s where you'll choose your tools, handle all your configurations, and most importantly, shield your tests from the unpredictable nature of live systems.

Getting this right from the start means your test results will be reliable, fast, and repeatable. To get a broader perspective before we dive into the specifics, this complete API testing tutorial offers a great overview. The aim is to create a setup that’s just as comfortable running on your laptop as it is in your CI/CD pipeline.

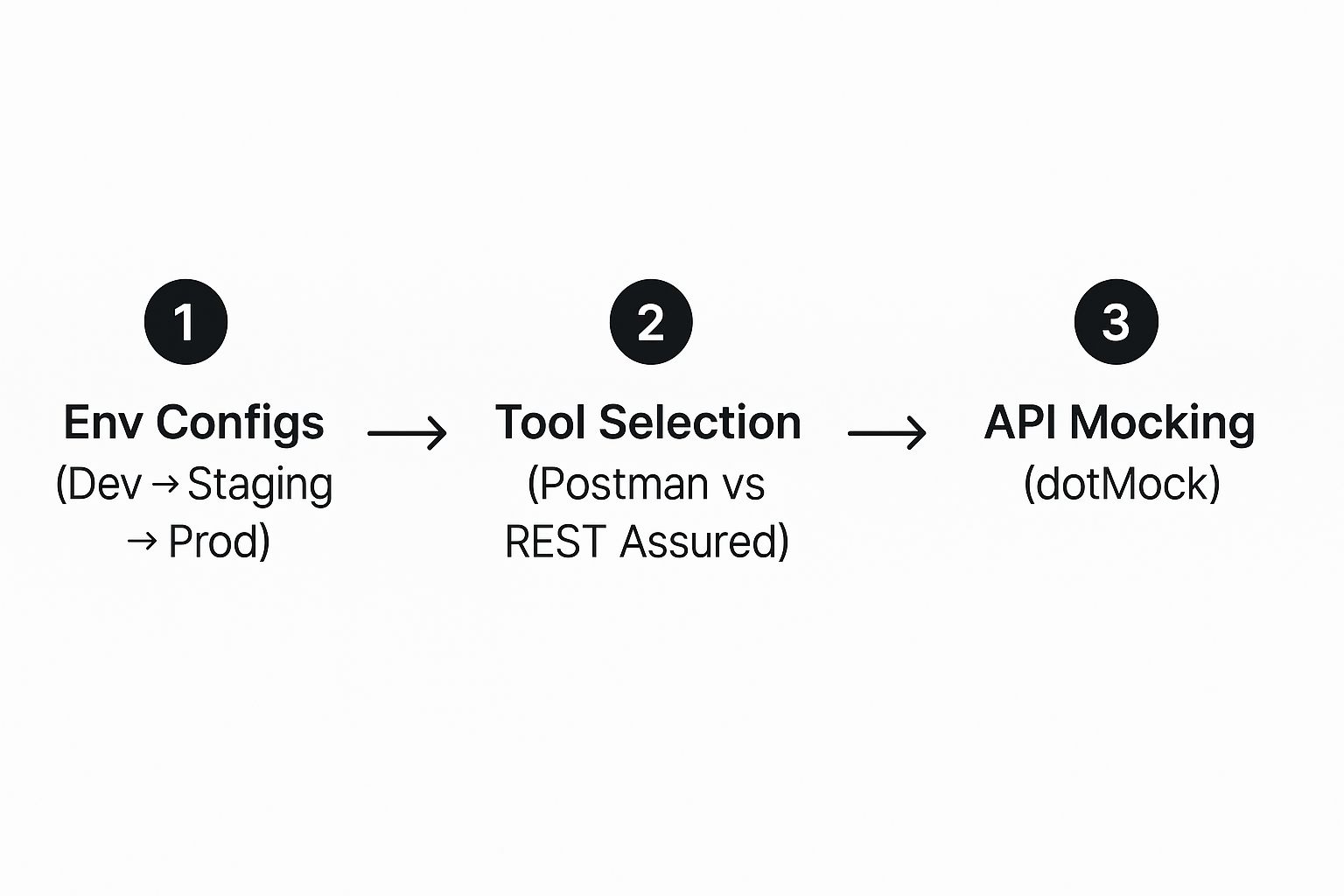

The diagram below maps out the key phases of building out this environment, from picking your tools to bringing in API mocking.

This structured approach helps maintain consistency and gives your team the right tools for both manual and automated testing efforts.

Choosing Your Testing Tools

The tools you pick will define how you work. It’s less about finding one "perfect" tool and more about assembling the right toolkit for your specific needs.

- For Manual & Exploratory Testing: You can't go wrong with tools like Postman or Insomnia. Their user-friendly interfaces are perfect for firing off quick requests, digging into responses, and just generally poking around an API to see how it behaves. I find this essential in the early days of development or when I’m trying to squash a tricky bug.

- For Automated Testing: When it's time to build a regression suite that runs on its own, you need to shift to code. Libraries like REST Assured for Java, Pytest for Python, or Jest for JavaScript let you write tests that live right alongside your application code. These are the tests you'll integrate into your pipeline to act as gatekeepers.

Honestly, most teams I've worked with use a hybrid approach. They'll use Postman for the initial hands-on discovery and then codify those learnings into an automated framework that prevents regressions with every new code commit.

Managing Environment Configurations

Let me be blunt: hardcoding URLs, API keys, or database credentials into your tests is a terrible idea. It’s a security risk, and it makes running the same test suite against different environments—like dev, staging, or production—a complete nightmare. The professional way to handle this is with environment variables.

By pulling out configuration details like base URLs and auth tokens, you create one test suite that can run anywhere. The exact same test can hit your local server or a staging environment just by swapping out a config file. You never have to touch the test code itself.

This simple practice also keeps your secrets out of your Git repository and lets your CI/CD system safely inject the right credentials for whatever environment it's deploying to.
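Here's a rough sketch of what that can look like in Python. The variable names `API_BASE_URL` and `API_TOKEN`, and the `ApiConfig` and `endpoint` helpers, are my own placeholders rather than anything a particular tool requires, so rename them to suit your project.

```python
import os
from dataclasses import dataclass
from typing import Mapping


@dataclass(frozen=True)
class ApiConfig:
    base_url: str
    token: str


def load_config(env: Mapping[str, str] = os.environ) -> ApiConfig:
    """Build the test configuration from environment variables.

    API_BASE_URL and API_TOKEN are hypothetical names chosen for this sketch.
    """
    missing = [name for name in ("API_BASE_URL", "API_TOKEN") if not env.get(name)]
    if missing:
        raise RuntimeError(f"Missing environment variables: {', '.join(missing)}")
    return ApiConfig(base_url=env["API_BASE_URL"].rstrip("/"), token=env["API_TOKEN"])


def endpoint(config: ApiConfig, path: str) -> str:
    """Join the configured base URL with an endpoint path."""
    return f"{config.base_url}/{path.lstrip('/')}"
```

Because `load_config` accepts any mapping, a test can pass a plain dictionary, while the CI/CD system supplies the real values through the process environment.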

The Critical Role of API Mocking

So, what do you do when the API you're testing relies on another service that’s flaky, slow, or maybe doesn't even exist yet? This is precisely where API mocking saves the day. Instead of making a real network call, your test talks to a mock server that serves up predictable, pre-defined responses.

A tool like dotMock is built for this. It gives you the power to simulate any scenario you can think of:

- The perfect 200 OK success response.

- Client-side errors like a 404 Not Found or a 400 Bad Request.

- Server-side failures like a dreaded 500 Internal Server Error.

- Even network problems like painfully slow responses or connection timeouts.

This technique is a cornerstone of what's often called service virtualization. If you're curious about the broader concept, our guide to service virtualization is a great place to start. By mocking these dependencies, your tests run in total isolation. They become faster, more stable, and give you the confidence that your application can handle failure gracefully, without you having to break anything for real.

Designing Test Cases That Uncover Real Bugs

Getting a 200 OK from an API is a nice start, but it's just scratching the surface. Real-world API endpoint testing isn't about proving something works; it’s about figuring out all the ways it can break. You have to put on your detective hat and actively hunt for the subtle flaws and weird edge cases that can take a system down.

This means you need to shift your mindset from just confirming functionality to intentionally trying to break things in a controlled environment. The goal is to design test cases that find bugs before your users do, covering not just the "happy path" but all the messy, unpredictable "unhappy paths" where the most critical issues love to hide.

Beyond the Happy Path

Let's ground this in a real-world example: a POST /users endpoint for new user registration. The happy path is simple. You send a valid name, email, and password, and you get back a 201 Created response. That's your baseline, your first check.

But the real value is in negative testing and exploring those edge cases. What happens when you start to challenge the endpoint's assumptions?

- Duplicate Data: What if someone tries to register with an email that's already in the database? The system should give them a clean 409 Conflict error, not crash with a 500 Internal Server Error.

- Invalid Input: What if the password is just two characters long, or the email is missing the "@" symbol? Your API needs to gracefully reject these with a 400 Bad Request and a helpful error message.

- Empty or Null Fields: Sending an empty JSON object {} or null values for required fields should also trigger a 400 error, not cause the application to fall over.
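To show the shape of these checks, here's a small sketch. The `register_user` function is a hypothetical in-memory stand-in for POST /users, not a real implementation, and the eight-character password minimum is an assumption I picked for the example. In a real suite you'd send the same payloads over HTTP and assert on the status codes.

```python
import re

EMAIL_PATTERN = re.compile(r"^[^@\s]+@[^@\s]+\.[^@\s]+$")
MIN_PASSWORD_LENGTH = 8  # assumed rule for this sketch


def register_user(payload, existing_emails):
    """Hypothetical stand-in for POST /users; returns (status_code, body)."""
    if not isinstance(payload, dict):
        return 400, {"error": "request body must be a JSON object"}
    for field in ("name", "email", "password"):
        value = payload.get(field)
        if not isinstance(value, str) or not value:
            return 400, {"error": f"{field} is required"}
    if not EMAIL_PATTERN.match(payload["email"]):
        return 400, {"error": "email is not valid"}
    if len(payload["password"]) < MIN_PASSWORD_LENGTH:
        return 400, {"error": "password is too short"}
    if payload["email"] in existing_emails:
        return 409, {"error": "email is already registered"}
    existing_emails.add(payload["email"])
    return 201, {"id": len(existing_emails)}


def run_negative_cases():
    """Send the happy path plus each unhappy path and collect the status codes."""
    valid = {"name": "Alex", "email": "alex@example.com", "password": "s3cret-pass"}
    cases = {
        "happy path": valid,
        "duplicate email": valid,  # same email a second time
        "short password": {**valid, "email": "sam@example.com", "password": "ab"},
        "email without @": {**valid, "email": "alex.example.com"},
        "empty object": {},
        "null field": {**valid, "name": None},
    }
    emails = set()
    return {label: register_user(body, emails)[0] for label, body in cases.items()}
```

Each entry in `cases` is one "what if" question, so adding a new scenario is a one-line change.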

A truly robust test suite is really just a collection of "what if" scenarios. Your job is to think of every strange, incorrect, or even malicious way a user (or another service) might talk to your endpoint and confirm that it responds exactly as it should.

To make sure you're covering all your bases, a checklist can be incredibly helpful. It forces you to think systematically about every aspect of the API's behavior.

API Test Case Design Checklist

Here’s a quick checklist to guide you through designing comprehensive test cases for any endpoint.

| Test Category | Key Checks and Scenarios | Example (for a '/users' endpoint) |
|---|---|---|
| Functionality | Does the endpoint perform its core function correctly? (Happy Path) | Send valid user data and expect a 201 Created response with the new user's ID. |
| Input Validation | How does it handle invalid, missing, or malformed data? | Send a request with a malformed email; expect a 400 Bad Request. |
| Boundary Cases | What about inputs at the very edge of acceptable limits? | Send a username with the maximum allowed characters; check for success. Send one with one extra character; expect failure. |
| Error Handling | Does the API return clear, correct, and secure error codes/messages? | Try to register with an existing email; verify a 409 Conflict status code is returned. |
| Security | Is the endpoint properly secured against common vulnerabilities? | Attempt to access the endpoint without authentication; expect a 401 Unauthorized. Check for input sanitization (e.g., <script> tags). |
| Performance | How does the endpoint perform under a normal and heavy load? | Measure the response time with a single request. Simulate 100 concurrent requests and monitor performance. |

This isn't exhaustive, but it's a solid framework to ensure you don't miss the common pitfalls when you're writing your tests.

Validating the Schema and Structure

Schema validation is one of the most fundamental checks you can run. It’s all about making sure the API's response structure is exactly what you expect. If your API contract says the response will have a userId as an integer and an email as a string, your tests need to confirm that.

This check is a lifesaver. It catches those sneaky bugs where a backend change accidentally alters a field's data type, which can instantly break any frontend app that depends on the original format.

- Data Types: Is userId still an integer, or did it suddenly become a string like "123"?

- Required Fields: Are all the fields that are supposed to be there actually present in the response?

- Unexpected Fields: Did a new, undocumented field just show up? While sometimes benign, this could be a sign of an unintentional data leak.

Modern tools and frameworks can often automate schema validation against a specification like OpenAPI, making it a powerful and low-effort way to maintain the contract between your services.
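If you just want to see the idea in miniature, the three checks above fit in a few lines of plain Python. This is a hand-rolled sketch, not an OpenAPI validator. The `validate_schema` helper and the `USER_SCHEMA` mapping are names I made up for illustration.

```python
def validate_schema(payload, schema):
    """Compare a response body to {field: expected_type}; return a list of problems."""
    problems = []
    for field, expected in schema.items():
        if field not in payload:
            problems.append(f"missing field: {field}")
            continue
        value = payload[field]
        # bool is a subclass of int in Python, so reject it explicitly for int fields.
        wrong_type = not isinstance(value, expected) or (
            expected is int and isinstance(value, bool)
        )
        if wrong_type:
            problems.append(
                f"wrong type for {field}: expected {expected.__name__}, "
                f"got {type(value).__name__}"
            )
    # Anything the contract does not mention is reported as well.
    for field in sorted(set(payload) - set(schema)):
        problems.append(f"unexpected field: {field}")
    return problems


USER_SCHEMA = {"userId": int, "email": str}  # hypothetical contract for this sketch
```

An empty list means the response matches the contract. Anything else tells you exactly which field drifted.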

Testing Business Logic and Security

This is where you move past the technical structure and start testing the rules that make your application tick. For our user registration endpoint, the business logic is about more than just creating a database record.

- Authorization Checks: Can a user who is already logged in somehow hit the registration endpoint again? They shouldn't be able to.

- Permissions: Let's say you have an admin-only endpoint like DELETE /users/{id}. You absolutely must write a test to prove that a regular user making that call gets a 403 Forbidden error. This is non-negotiable for security.

- Input Sanitization: How does the API handle special characters or potential script injections? Sending something like <script>alert('XSS')</script> in a name field should be sanitized and stored safely, never returned as executable code.

These tests are what confirm your API not only works but also operates securely and sticks to the business rules you've defined.
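As a rough illustration of the permission and sanitization checks, here's a sketch built on Python's standard `html` module. The `authorize` function is a hypothetical stand-in for whatever access-control layer your API uses, and the 204 it returns for admins is my assumption. Escaping on output is only one of several reasonable sanitization strategies.

```python
import html


def authorize(role: str, method: str, path: str) -> int:
    """Hypothetical permission check: only admins may DELETE /users/{id}."""
    if method == "DELETE" and path.startswith("/users/"):
        return 204 if role == "admin" else 403
    return 200


def sanitize_for_html(value: str) -> str:
    """Escape HTML metacharacters so the value renders as text, not markup."""
    return html.escape(value, quote=True)
```

A test would then assert that a regular user gets a 403 from `authorize("user", "DELETE", "/users/42")`, and that the escaped name no longer contains a literal `<script>` tag.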

Interestingly, AI is starting to change the game here. In 2025, AI-powered test case generation has become a major trend, enabling the automatic creation of tests from API specifications. These tools can even perform "self-healing" tests that adapt when an API changes. For instance, if an endpoint is renamed from /userProfile to /userDetails, an AI system can update the test logic automatically. For more on this, there are some great comprehensive API testing guides out there.

Simulating Real-World Failures

Finally, remember that your application doesn't live in a perfect bubble. Dependencies fail, networks get laggy, and servers get overloaded. Using a mocking tool like dotMock is crucial for testing these failure scenarios without taking down your actual infrastructure.

You can design specific test cases to simulate what happens when things go wrong:

- Downstream Service Timeouts: If your registration API calls an external email service, what happens when that service is slow? Your endpoint should time out gracefully instead of hanging forever.

- Database Errors: If the database connection drops mid-request, does your API return a proper 503 Service Unavailable?

- Third-Party API Failures: If your process relies on a third-party validation service that returns an error, how does your API react and report that back to the client?

By deliberately injecting these failure conditions into your tests, you build resilience right into your application. It’s how you ensure that even when the ecosystem around your service gets messy, your API can handle the failure without a catastrophic meltdown.

Getting Predictable Results with API Mocking

Let's be honest: relying on live, third-party services for your tests is a disaster waiting to happen. It's a classic recipe for slow, flaky, and downright unpredictable results.

What happens when that critical external service goes down for maintenance right before your big release? Or what if you need to see how your app handles a 503 Service Unavailable error? You can't exactly ask another team to crash their servers just for you. This is precisely where API mocking shifts from a "nice-to-have" to an absolutely essential part of modern API endpoint testing.

Instead of hitting a real API, you use a mock server as a stand-in. This controllable substitute can be programmed to return any response you need, whenever you need it. You can finally test your application in total isolation, ensuring your test results reflect the quality of your code, not the stability of someone else's.

How dotMock Creates a Stable Testing World

This is the exact problem tools like dotMock were built to solve. Rather than making a real network call to a service you don't control, your application sends its request to a dotMock endpoint instead. That endpoint then serves up a pre-configured response you’ve defined, giving you complete command over your testing environment.

The benefits are immediate and massive:

- Blazing Speed: Mocked responses are returned instantly. This can shave minutes—sometimes even hours—off your test suite's runtime, which is a game-changer when you're running hundreds or thousands of tests.

- Rock-Solid Stability: Mock servers don’t have random outages or network hiccups. This simple fact eliminates a huge source of flaky tests and false alarms, making your CI/CD pipeline something you can actually trust.

- Total Control: You can simulate any scenario you can dream up without a complicated setup. We're talking perfect successes, specific error codes, and even tricky network failures like timeouts.

By cutting the cord to external dependencies, you also empower your team to work in parallel. Frontend developers can start building against a stable mock API long before the real backend is even finished, which helps crush development bottlenecks. If you want to dive deeper into how this works, a great place to start is understanding the fundamentals of defining APIs and endpoints within the dotMock platform.

Simulating Both Success and Failure

The true power of API mocking isn't just faking a successful response; it's about simulating the entire spectrum of possibilities, especially the messy ones. A resilient application is one that handles failure with grace, and mocking is the safest and most efficient way to put those failure modes to the test.

Let's walk through a few real-world examples.

The "Happy Path" 200 OK Response

This is mocking at its most basic. You set up your mock server to return a successful 200 OK response with a specific JSON payload. This lets you confirm that your application correctly parses a valid response and updates its state or UI as expected.

For a GET /users/123 request, you could configure your dotMock endpoint to always return this:

    {
      "status": 200,
      "headers": {
        "Content-Type": "application/json"
      },
      "body": {
        "id": 123,
        "name": "Alex Smith",
        "email": "[email protected]",
        "status": "active"
      }
    }

Now, your test gets the exact same data every single time it runs. No surprises.
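To make the idea concrete without guessing at dotMock's own configuration format, here's a minimal stand-in mock server built only on Python's standard library. It is not how dotMock works internally. `MockUserHandler`, `mock_server`, and `get_json` are names I invented for this sketch, and the canned payload is a subset of the fields above.

```python
import json
import threading
import urllib.error
import urllib.request
from contextlib import contextmanager
from http.server import BaseHTTPRequestHandler, ThreadingHTTPServer

CANNED_USER = {"id": 123, "name": "Alex Smith", "status": "active"}

# Bypass any proxy settings so requests to 127.0.0.1 stay local.
_opener = urllib.request.build_opener(urllib.request.ProxyHandler({}))


class MockUserHandler(BaseHTTPRequestHandler):
    def do_GET(self):
        if self.path == "/users/123":
            self._send_json(200, CANNED_USER)
        else:
            self._send_json(404, {"error": "user not found"})

    def _send_json(self, status, body):
        data = json.dumps(body).encode("utf-8")
        self.send_response(status)
        self.send_header("Content-Type", "application/json")
        self.send_header("Content-Length", str(len(data)))
        self.end_headers()
        self.wfile.write(data)

    def log_message(self, format, *args):
        pass  # keep test output quiet


@contextmanager
def mock_server():
    """Run the mock on a free local port and yield its base URL."""
    server = ThreadingHTTPServer(("127.0.0.1", 0), MockUserHandler)
    threading.Thread(target=server.serve_forever, daemon=True).start()
    try:
        yield f"http://127.0.0.1:{server.server_address[1]}"
    finally:
        server.shutdown()
        server.server_close()


def get_json(url):
    """Return (status, parsed body), treating HTTP errors as normal responses."""
    try:
        with _opener.open(url, timeout=5) as response:
            return response.status, json.load(response)
    except urllib.error.HTTPError as error:
        return error.code, json.load(error)
```

Inside a test, `with mock_server() as base_url:` gives you an address that always answers GET /users/123 with the same payload and everything else with a 404.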

Handling Client Errors: 400 Bad Request and 404 Not Found

What happens when a user tries to load a profile that doesn't exist? Your app should show a friendly message, not just crash and burn. With dotMock, simulating a 404 Not Found response to test this exact scenario takes seconds.

In the same way, you can test your input validation by mocking a 400 Bad Request. This confirms that if your app sends improperly formatted data to an endpoint, it can correctly interpret the error response and give the user helpful feedback, like highlighting the incorrect form fields.

Simulating errors isn't about testing the external API; it's about testing your application's reaction to those errors. This proactive approach to failure testing is what separates a brittle application from a robust one.

Bracing for Server Failures: 500 Internal Server Error

This is one of the most critical scenarios to test, because downstream services will fail eventually. You can configure a dotMock endpoint to return a 500 Internal Server Error or a 503 Service Unavailable on demand.

Doing this forces you to answer some tough but important questions:

- Does my application get stuck on an infinite loading spinner?

- Does it show the user a generic, unhelpful error screen?

- Or does it gracefully tell the user that something went wrong and suggest they try again in a few minutes?

By deliberately injecting these failures into your test environment, you can build and verify true system resilience. You can even simulate more subtle issues like network timeouts or delayed responses to make sure your application stays responsive even when its dependencies are dragging their feet. It's this comprehensive approach that prepares you for the messy reality of distributed systems.
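Here's one way those questions can turn into assertions. In this sketch the dependency is injected as a plain callable, so the test can swap in a stub that returns a 503 or raises a timeout. With a mock server you'd get the same effect over HTTP. The `load_profile` function, its state names, and the stub helpers are all hypothetical.

```python
def load_profile(fetch, user_id):
    """Turn a dependency's response (or failure) into a state the UI can render.

    `fetch` is any callable that takes a path and returns (status_code, body).
    """
    try:
        status, body = fetch(f"/users/{user_id}")
    except TimeoutError:
        return {"state": "error",
                "message": "The service is taking too long. Please try again."}
    if status == 200:
        return {"state": "ready", "user": body}
    if status == 404:
        return {"state": "not_found", "message": "We couldn't find that profile."}
    if status >= 500:
        return {"state": "error",
                "message": "Something went wrong. Please try again in a few minutes."}
    return {"state": "error", "message": f"Unexpected response ({status})."}


def returns(status, body=None):
    """Stub dependency that always answers with the given status and body."""
    return lambda path: (status, body)


def times_out(path):
    """Stub dependency that simulates a network timeout."""
    raise TimeoutError("simulated timeout")
```

Calling `load_profile(returns(503), 123)` or `load_profile(times_out, 123)` should never leave the caller stuck. Both come back with an error state and a message the user can act on.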

Integrating Your Tests into a CI/CD Pipeline

Writing individual tests is a solid first step, but the real magic happens when you automate them. In a modern development workflow, manual testing just can't keep up. The ultimate goal is to create a seamless, automated feedback loop where your entire test suite runs against every code change. This turns your tests into a vigilant gatekeeper, catching regressions before they ever have a chance to sneak into your main branch.

This is precisely where weaving your API endpoint testing into a Continuous Integration/Continuous Deployment (CI/CD) pipeline becomes a game-changer. It elevates your tests from a folder of scripts into a fundamental part of your development process, guaranteeing that your core branch remains stable and ready for deployment.

Structuring Tests for Your Pipeline

Before you can hit "go" on automation, you need a well-organized test suite. A messy, confusing set of tests will quickly become a major bottleneck in any pipeline, causing more headaches than it solves. A good rule of thumb I've learned is to structure your tests in a way that mirrors your API's own design.

Think about organizing your test files by endpoint or feature. A clear structure might look like this:

    tests/users/create_user.spec.js
    tests/users/get_user.spec.js
    tests/orders/place_order.spec.js

This simple, logical grouping makes it incredibly easy to locate specific tests, understand your API's test coverage, and—most importantly—debug failures fast. When a pipeline run turns red, you'll know exactly which part of the application is in trouble just by reading the name of the failing test file.

Choosing and Configuring Your CI/CD Platform

Most dev teams today rely on a CI/CD platform like GitHub Actions, GitLab CI, or Jenkins. These tools are the engines of automation; they monitor your code repository for new commits and then kick off a predefined sequence of jobs. This typically includes building the code, running all your tests, and, if everything passes, deploying the application.

You'll define these jobs in a configuration file, such as .github/workflows/main.yml for GitHub Actions. For a really practical walkthrough, this guide on setting up a CI/CD pipeline using GitHub Actions is a great resource.

A standard CI/CD pipeline for API testing generally follows these steps:

- Checkout Code: The pipeline grabs the latest version of the code from your repository.

- Install Dependencies: It sets up the environment by installing all the necessary packages and tools for your test framework.

- Run API Tests: This is the moment of truth where your test command (like npm test or pytest) is executed.

- Report Results: The pipeline provides a clear pass/fail result, blocking a merge or deployment if even a single test fails.

This automated process acts as a crucial safety net, protecting your production environment from bugs.

By making tests a mandatory step in your pipeline, you're building a culture of quality. Developers get immediate feedback on their work, and the entire team gains confidence knowing the main branch is always stable.

Tackling Common Pipeline Challenges

Of course, getting your pipeline humming along isn't always a straight path. Two of the most common hurdles I see teams stumble over are managing test data and wrestling with "flaky" tests—those frustrating tests that fail intermittently for no apparent reason.

Another classic problem is running into API rate limits during high-volume test runs, which can make tests fail even though the code under test is fine. This is where a good CI/CD process really shines, as it helps you catch these issues early.

Managing Test Data in an Automated World

Your tests need data, but in a stateless CI/CD environment, you can't rely on a persistent database that might have leftover data from a previous run. Everything needs to be clean and predictable.

Here are a few proven strategies for handling test data:

- Seeding Scripts: Kick off your pipeline job by running a script that populates a test database with a known, consistent set of data.

- API-Driven Setup: Use your own API to create the necessary test records before a specific test runs. For instance, you'd call your "create user" endpoint before running tests against the "get user" endpoint.

- Cleanup Steps: Make sure your tests (or a final pipeline job) tear down any data they created, leaving the environment spotless for the next run.
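The seeding and cleanup strategies above can be sketched with an in-memory SQLite database from Python's standard library. The table layout and the `SEED_USERS` rows are made up for this example. A real pipeline would point at whatever test database your API actually uses.

```python
import sqlite3
from contextlib import contextmanager

SEED_USERS = [(1, "Alex Smith"), (2, "Sam Lee")]  # hypothetical fixture rows


@contextmanager
def seeded_database():
    """Yield a fresh in-memory database with known rows; discard it afterwards."""
    connection = sqlite3.connect(":memory:")
    try:
        connection.execute(
            "CREATE TABLE users (id INTEGER PRIMARY KEY, name TEXT NOT NULL)"
        )
        connection.executemany(
            "INSERT INTO users (id, name) VALUES (?, ?)", SEED_USERS
        )
        connection.commit()
        yield connection
    finally:
        connection.close()  # cleanup: nothing survives into the next run


def count_users(connection):
    """Return how many rows the users table currently holds."""
    return connection.execute("SELECT COUNT(*) FROM users").fetchone()[0]
```

Every `with seeded_database() as db:` block starts from the same two rows, so a record one test inserts can never leak into the next.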

Handling Flaky Tests

A flaky test is the enemy of a good pipeline. It's a test that passes one minute and fails the next, even with no code changes. These are toxic because they destroy trust in your test suite. If developers can't rely on the results, they'll start ignoring failures, defeating the entire purpose of automation.

Flakiness usually stems from a few common culprits:

- Race Conditions: Tests that rely on asynchronous operations finishing in a specific order.

- Network Issues: Depending on live external services is a primary source of unpredictable failures.

- Timing Problems: Tests that expect something to happen within a certain timeframe can fail if the CI runner is under heavy load.

The most effective way to eliminate flakiness is to write deterministic, self-contained tests. This is where API mocking tools like dotMock are invaluable. By mocking external dependencies, you remove network latency and third-party instability from the equation. The end result is a fast, reliable, and trusted feedback loop that lets your team build better software with confidence.

Common Questions About API Endpoint Testing

As you dive into API endpoint testing, you'll inevitably run into some recurring questions. I see them pop up all the time with teams I work with. Getting these sorted out early on helps clear up confusion and makes sure everyone is on the same page about why this testing is so important. Let's walk through a few of the most common ones.

Is API Testing the Same as API Endpoint Testing?

People often use these terms interchangeably, but there's a subtle and useful difference. I like to use a car analogy.

API testing, in the broad sense, is about validating the entire Application Programming Interface. It’s like a full diagnostic on a car—you're checking the engine, the brakes, the transmission, and all the electrical systems to make sure they function together as a whole.

API endpoint testing is a much more focused part of that larger process. Here, you're zooming in on the individual URLs or "endpoints" where the API is accessed. This is like testing just the headlights. Do they turn on? Do the high beams work? Are they aimed correctly? You're laser-focused on one specific function and ensuring it behaves exactly as designed.

How Should We Handle Authentication in Our Tests?

Getting authentication right is non-negotiable for both security and reliable tests. The absolute golden rule here is to never, ever hardcode API keys or tokens into your test scripts. It's a massive security hole waiting to be exploited and a maintenance headache down the road.

A much better approach is to manage credentials as environment variables. A solid test setup should:

- First, make a programmatic call to your login or token endpoint to get a fresh authentication token.

- Stash that token in a variable that lasts for the entire test run.

- Include that token in the Authorization header for every subsequent request that needs it.
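Those three steps can be sketched as a small helper. The `AuthenticatedSession` class is hypothetical, the `login` callable stands in for the programmatic call to your token endpoint, and the `Bearer` scheme is an assumption. Use whatever scheme your API expects.

```python
class AuthenticatedSession:
    """Fetch a token once per test run and attach it to request headers."""

    def __init__(self, login):
        self._login = login  # callable that returns a fresh token string
        self._token = None

    def headers(self, extra=None):
        """Return request headers, logging in only on first use."""
        if self._token is None:
            self._token = self._login()
        merged = {"Authorization": f"Bearer {self._token}"}
        merged.update(extra or {})
        return merged
```

In a real suite, `login` would post credentials read from environment variables to the token endpoint. In a unit test, a fake `login` lets you confirm the token is requested only once.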

Don't just test the "happy path," either. Your tests need to confirm that your security rules are working. For instance, what happens when a standard user tries to hit an admin-only endpoint? They should get a 403 Forbidden error, and your test should verify that.

A mature testing strategy doesn't just check if authentication works; it actively proves that your security model denies access when it should. This is a non-negotiable part of building a secure system.

What Are the Most Important Metrics to Track?

To really understand how your API is doing, you can't just guess. You need to track a few key metrics that give you a clear picture of its health, performance, and reliability.

- Response Time: How long does the API take to answer a request? This directly impacts the user experience. Nobody likes a slow app, and slow APIs are often the culprit.

- Error Rate: What percentage of requests are failing with 4xx or 5xx status codes? If this number starts climbing, it's one of the first red flags that something is wrong.

- Throughput: How many requests can the API handle in a given period, like per second or minute? This tells you about its performance under pressure and helps you find scaling bottlenecks before your users do.

- Test Coverage: What slice of your API endpoints and the business logic behind them is actually covered by your automated tests? This metric helps you spot gaps in your test suite.

Keeping an eye on these numbers over time allows you to catch regressions early, prevent performance issues from creeping in, and make smart, data-backed decisions about where to focus your engineering efforts.
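If you're collecting raw request samples yourself, the first three metrics are simple arithmetic. Here's a sketch that assumes each sample is a `(status_code, response_time_ms)` pair gathered over a known time window. The `summarize` function and the choice of a nearest-rank 95th percentile are mine, not a standard your tooling necessarily follows.

```python
import math


def summarize(samples, window_seconds):
    """Summarize (status_code, response_time_ms) samples from one time window."""
    if not samples or window_seconds <= 0:
        raise ValueError("need at least one sample and a positive window")
    durations = sorted(ms for _, ms in samples)
    failures = sum(1 for status, _ in samples if status >= 400)
    p95_index = math.ceil(0.95 * len(durations)) - 1  # nearest-rank percentile
    return {
        "error_rate": failures / len(samples),  # share of 4xx and 5xx responses
        "p95_ms": durations[p95_index],
        "throughput_rps": len(samples) / window_seconds,
    }
```

Tracking a percentile rather than an average keeps a handful of very slow requests from hiding behind many fast ones.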

When Should I Use Mocking vs. a Staging Environment?

This is a classic question, and the answer isn't "one or the other." The real answer is that you need both. They play different but equally important roles in a solid testing pipeline.

You should use API mocking for your unit and component tests. The whole point here is to isolate the code you're testing from all its external dependencies. Mocking makes your tests incredibly fast and stable. It also lets you effortlessly simulate specific scenarios, like a network timeout or a 500 server error, without having to actually break a real service.

You'll want a live staging environment for your integration and end-to-end tests. This is your reality check. It's where you confirm that all your different services—your application, your databases, and any third-party APIs—are all playing nicely together in a setup that mirrors production.

A truly mature testing strategy relies heavily on both. You'll have a massive suite of fast, mock-based tests that run with every single commit, giving you instant feedback. Then, you'll have a smaller, more thorough suite of integration tests that run against your staging environment before you even think about deploying a major release.
