Mastering test data generation for reliable software testing

At its core, test data generation is the art and science of creating the right information to put your software through its paces. Think of it like a crash test for a new car. You wouldn't just throw random objects at it; you’d use specially designed dummies and scenarios to simulate real-world conditions. That’s exactly what we do with test data—we craft specific, controlled, and relevant information to check every nook and cranny of an application. It's a fundamental part of quality assurance you simply can't skip.

Why Is Test Data Generation So Important?

Trying to test software without good test data is like trying to navigate a city with a blurry, outdated map. You might find your way to the main landmarks, but you'll almost certainly miss the one-way streets, hidden dead ends, and tricky intersections—until a real user gets stuck there after launch.

A smart test data generation strategy gives your team a high-resolution, up-to-date map. It allows them to explore every possible user journey, oddball edge case, and potential point of failure long before your code sees the light of day. This proactive approach is the bedrock of building software that’s reliable, secure, and ultimately, successful. When your tests are fueled by data that mirrors the real world, you can trust the results.

Slashing Risk and Accelerating Development

One of the biggest wins from a solid test data strategy is catching critical bugs early. Finding and fixing a defect during the development cycle is massively cheaper and faster than scrambling to patch it after a customer complains. This "shift-left" approach significantly cuts the risk of production failures that can tarnish your reputation and hit your bottom line.

It also breaks down a common development bottleneck. When developers have instant access to safe, realistic data, they don't have to wait around for sanitized production data or waste time cobbling together flimsy test sets. This empowers them to build and test features simultaneously, which is a core principle behind modern data-driven testing strategies. The result? A much faster time-to-market.

"Effective test data is not just about filling databases with random entries. It's about crafting a realistic digital twin of your operational environment to validate functionality, performance, and security under conditions that mirror reality."

A formal process for creating test data offers a host of advantages that ripple across the entire development lifecycle and directly impact business goals.

The table below breaks down these core advantages, connecting each benefit to its direct business outcome.

Key Benefits of Strategic Test Data Generation

| Benefit | Impact on Software Development Lifecycle | Business Outcome |
|---|---|---|
| Improved Bug Detection | Identifies defects, edge cases, and vulnerabilities earlier. | Reduces the cost of fixes and prevents production failures. |
| Increased Test Coverage | Ensures all application paths and scenarios are thoroughly tested. | Higher product quality and improved customer satisfaction. |
| Faster Development Cycles | Eliminates data-related bottlenecks for developers and QA teams. | Quicker time-to-market and a competitive advantage. |
| Enhanced Security & Compliance | Avoids using sensitive PII, ensuring adherence to GDPR, CCPA, etc. | Mitigates the risk of costly data breaches and legal penalties. |
| Reliable Performance Testing | Enables accurate load and stress tests with realistic data volumes. | Ensures system stability and a seamless user experience. |

By moving beyond ad-hoc data creation, teams can build a more resilient, secure, and efficient development process from the ground up.

Meeting Compliance and Security Mandates

In a world governed by strict data privacy laws like GDPR and CCPA, using live production data for testing has become a high-stakes gamble. A single slip-up in a non-production environment can lead to crippling fines and legal headaches. Test data generation, especially with synthetic data, is the answer. It allows you to create datasets that are statistically similar to your real data but completely free of personally identifiable information (PII).

This intense focus on quality is a massive industry trend. The global software testing market is expected to hit USD 97.3 billion by 2032. It's not uncommon for major companies to dedicate over 25% of their IT budget to testing, highlighting just how crucial it has become. By investing in solid testing practices, businesses aren't just protecting their customers—they're building a foundation of trust that is priceless.

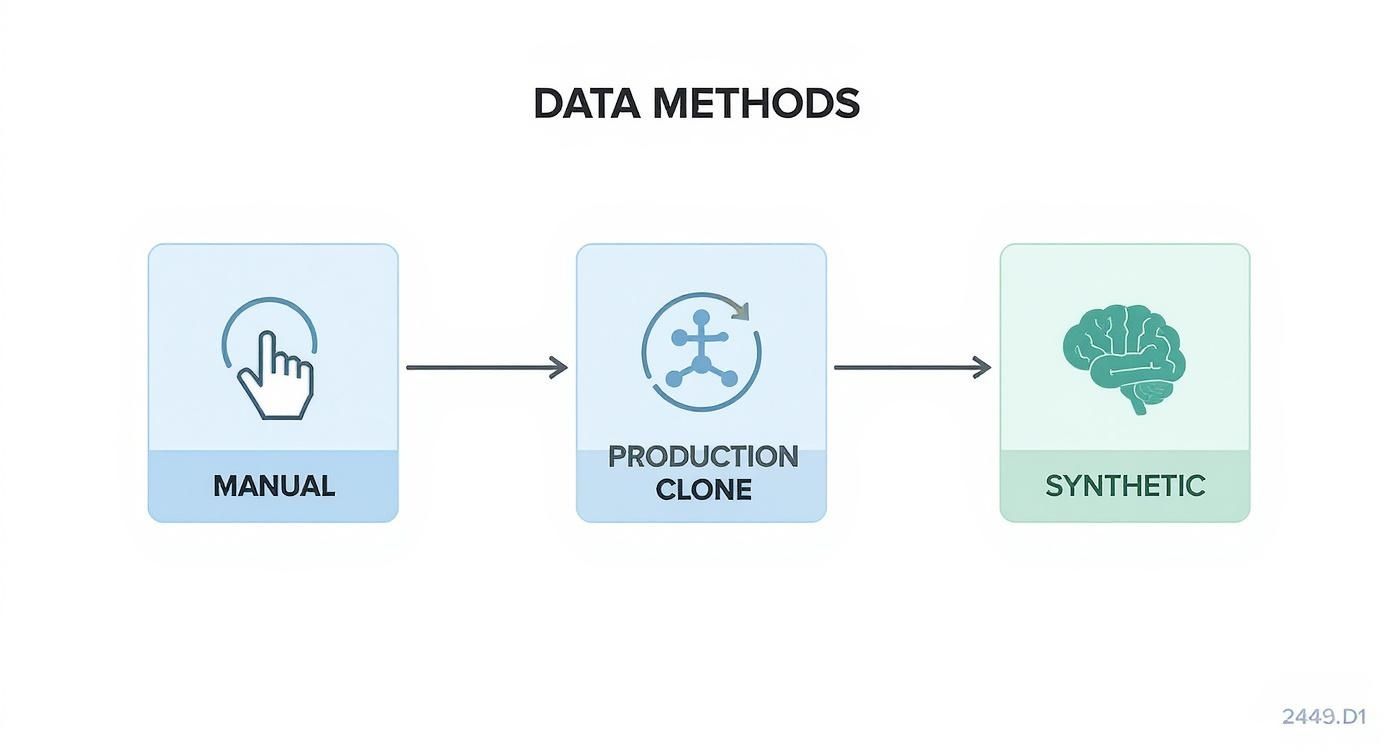

Exploring Different Test Data Generation Methods

Good test data doesn’t just happen—it’s the product of a smart strategy. Teams have a whole toolkit of methods to choose from, ranging from simple manual entry to sophisticated, automated systems. Figuring out which approach is right for your project means weighing things like speed, realism, cost, and security.

Each method has its pros and cons. The easiest route isn't always the best, and the most advanced tool might be overkill for a small-scale test. Let's walk through the most common test data generation techniques to see how they really stack up.

Manual Data Entry

At its most basic, manual data creation is exactly what it sounds like: a tester or developer sitting there, typing data directly into a form or database. It’s the digital equivalent of hand-addressing envelopes when you could be printing labels.

This gives you total control, which is great for very specific, tiny tests where you just need a couple of precise records. But the cracks show almost immediately. Manual entry is painfully slow, a magnet for typos, and completely useless when you need thousands of records for something like a load test. It’s a fine starting point, but most teams outgrow it fast.

Cloning Data from Production

A very common shortcut is to just copy data straight from the live production environment. The biggest draw here is authenticity. This data has all the quirks and complexities of real user information, which can be a huge help in flushing out bugs that only pop up with real-world patterns.

But for all its realism, this method is loaded with risk. Production data is a treasure trove of personally identifiable information (PII). Dropping that into less-secure test environments is a fast track to violating regulations like GDPR or CCPA. Even if you try to mask or anonymize the data, the process is tricky and time-consuming, and it's easy for sensitive details to slip through.

Cloning production data offers a shortcut to realism but opens a Pandora's box of security and privacy risks. The convenience often comes at the high price of potential compliance violations and data breaches.

Automated and Synthetic Data Generation

This is where modern test data generation really comes into its own. Instead of borrowing real data, this approach creates brand new, artificial data from the ground up. The result is synthetic data that looks and feels just like the real thing but contains zero sensitive information, neatly solving any privacy headaches.

This category breaks down into two main flavors:

Rule-Based Generation: This technique generates data based on a set of rules and patterns you define. For instance, you can set a rule that a "phone number" field must match a specific format or an "order date" has to be within the last six months. It's fantastic for creating predictable, consistent data sets. Many tools use libraries to generate realistic-looking names, addresses, and other common data types. You can see just how powerful this can be by exploring the Faker syntax used in modern generation tools.

AI-Driven Generation: This is the most advanced approach, using machine learning models to do the heavy lifting. These systems analyze a production database to understand its statistical patterns and then generate a completely new synthetic dataset that mirrors the original’s complexity without actually copying any data. This is perfect for capturing subtle correlations that a rule-based system might miss. But all that power comes at a cost. This method can be slower and more expensive, especially at scale. To generate a million rows of data, a traditional rule-based generator can be far faster and more cost-effective than a large language model.
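To make the rule-based flavor concrete, here is a minimal sketch that uses only Python's standard library rather than Faker. The function name `generate_order`, the `555-XXX-XXXX` phone pattern, and the 182-day window standing in for "six months" are all assumptions made for illustration.

```python
import random
from datetime import date, timedelta

def generate_order(rng: random.Random, today: date) -> dict:
    """Build one synthetic order record from two hand-written rules."""
    # Rule 1: phone numbers follow the fixed pattern 555-XXX-XXXX (assumed format).
    phone = f"555-{rng.randint(0, 999):03d}-{rng.randint(0, 9999):04d}"
    # Rule 2: the order date falls within the last six months (about 182 days).
    order_date = today - timedelta(days=rng.randint(0, 182))
    return {"phone": phone, "order_date": order_date}

rng = random.Random(42)      # fixed seed so every run produces the same data
today = date(2024, 6, 30)    # pinned "today" keeps the example reproducible
orders = [generate_order(rng, today) for _ in range(1000)]
print(orders[0])
```

Because the rules are plain code, scaling this from a thousand rows to a million is mostly a matter of changing the loop count.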

The Power of Synthetic Data in Modern Testing

Let's be honest: using real production data for testing is a massive headache. It's a tightrope walk over a pit of privacy regulations, security risks, and compliance nightmares. This is where synthetic data comes in, acting as a perfect stunt double for your sensitive information. It looks, feels, and behaves just like the real deal but contains absolutely no actual customer details.

This isn't just a simple copy-and-paste job with a few names changed. Synthetic data generation is a sophisticated process. It uses intelligent algorithms to study the statistical patterns, deep-seated relationships, and unique characteristics of your production data. From that analysis, it creates an entirely new, artificial dataset from the ground up that closely mirrors the original’s structure and statistical integrity.
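As a deliberately simplified illustration of "learn the statistics, then sample new values," the sketch below fits a normal distribution to a single numeric column and draws fresh values from it. Real synthetic data tools also model correlations between columns, which this does not attempt. The `real_totals` values are made up for the example.

```python
import random
import statistics

# Hypothetical "production" order totals; in practice these would come from a real table.
real_totals = [19.99, 24.50, 31.00, 18.75, 27.30, 22.10, 29.95, 25.40]

# Step 1: learn summary statistics from the real column.
mean = statistics.mean(real_totals)
stdev = statistics.stdev(real_totals)

# Step 2: sample brand-new values from a normal distribution with those parameters.
# No original value is copied; negative draws are clamped to zero.
rng = random.Random(7)
synthetic_totals = [round(max(0.0, rng.gauss(mean, stdev)), 2) for _ in range(5000)]

print(f"real mean={mean:.2f}, synthetic mean={statistics.mean(synthetic_totals):.2f}")
```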

For anyone working in finance, healthcare, or any other heavily regulated field, this approach is a game-changer. It creates a safe harbor, allowing your teams to conduct rigorous, realistic testing without the ever-present fear of exposing personally identifiable information (PII) or violating standards like GDPR or CCPA.

This concept map really captures the journey from clunky, high-risk manual methods to the security and scale that synthetic data provides.

As you can see, the path forward is clear. AI-powered synthetic data stands out as the most secure and advanced way to handle test data today.

Gaining Control Over Your Test Scenarios

One of the best things about synthetic data is the incredible control it hands you. Production data is notoriously messy and incomplete; you can spend hours searching for the specific examples you need for a particular test, and often come up empty. With synthetic generation, you can craft the exact data you need, on demand, for any situation you can dream up.

This is absolutely critical for testing an application’s resilience. Imagine being able to instantly generate:

- Rare Edge Cases: Think user profiles with bizarre combinations of attributes that might only pop up once in a million times in the real world. Now you can test for them easily.

- Negative Scenarios: You can flood your test environment with deliberately broken data—empty fields, junk inputs, or invalid formats—to see if your application handles errors with grace.

- Future-State Data: Need to test a feature for a product that hasn't even launched yet? No problem. You can model and generate the data you'll need ahead of time.
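One lightweight way to produce the negative scenarios described above is to start from a known-good record and break it in exactly one way per copy. The sketch below assumes a hypothetical user record with `name`, `email`, and `age` fields.

```python
import copy

# A known-good record to mutate (hypothetical fields).
VALID_USER = {"name": "Ada Lovelace", "email": "ada@example.com", "age": 36}

def negative_variants(record: dict) -> list:
    """Return copies of a valid record, each broken in exactly one way."""
    variants = []
    for field in record:
        missing = copy.deepcopy(record)
        missing[field] = None               # empty required field
        variants.append(missing)
    bad_email = copy.deepcopy(record)
    bad_email["email"] = "not-an-email"     # invalid format
    variants.append(bad_email)
    junk_age = copy.deepcopy(record)
    junk_age["age"] = "thirty-six"          # junk input of the wrong type
    variants.append(junk_age)
    return variants

for variant in negative_variants(VALID_USER):
    print(variant)
```

Changing one field at a time makes it obvious which kind of bad input a failing test tripped over.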

This kind of control empowers developers and QA teams to ship much more robust and reliable software. By proactively hunting down the unexpected, you can patch vulnerabilities and harden your system against the chaos of the real world. To dig deeper into the "how-to," check out our comprehensive developer's guide on generating mock data.

Fueling Innovation While Ensuring Compliance

At its core, synthetic data changes the way teams innovate. It completely removes the security roadblocks that so often bog down development, creating a safe sandbox for developers to experiment and build without constraints. When your teams have immediate access to realistic, compliant data, they can build, test, and iterate on new features much faster, shrinking the entire development lifecycle.

This potential hasn't gone unnoticed in the business world. The market for synthetic data generation, recently valued at around USD 310 million, is set to skyrocket to an estimated USD 6.6 billion within the next decade. That's a compound annual growth rate (CAGR) of about 35.2%, signaling a fundamental shift in how modern organizations think about data.

Synthetic data generation isn’t just about dodging risks; it's about unlocking speed and creativity. It gives developers the freedom to build and break things in a realistic environment without the fear of compromising a single piece of sensitive user information.

This approach finally resolves the tension between security and innovation, allowing them to coexist. The task of creating and applying this data often falls to a skilled Senior Data Scientist, who can accurately model the complex relationships within the data. By embedding this capability directly into your workflow, teams can build fearlessly, test exhaustively, and ultimately deliver better products to market faster than ever before.

Essential Practices for Effective Data Generation

Understanding the different ways to generate test data is one thing. Actually putting those methods to work effectively is another challenge entirely. To get the most out of your efforts, you need to move beyond theory and adopt a set of proven practices.

Think of these as the ground rules that transform data creation from a tedious chore into a strategic advantage for your entire development cycle. Following them will help you build a testing process that’s not just efficient but also repeatable, scalable, and secure.

Define Data Requirements Upfront

Before you even think about generating a single piece of data, you have to know exactly what you need. This goes way beyond just listing out field names and data types. Skipping this step is like trying to build a house without a blueprint—it’s a recipe for expensive rework down the line.

Get your team together and start asking the tough questions:

- What specific business rules does the data need to follow? For instance, an `order_status` can't be 'shipped' unless a `shipping_date` actually exists.

- Which user personas are we trying to simulate? Are we testing for a brand-new user, a seasoned power user, or an administrator with special permissions?

- Are there dependencies between different data sets? A customer record, for example, has to exist before you can create an order associated with them.
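Requirements like these translate quite directly into generator code. The sketch below is one possible shape, not a prescribed one: the function names, the three personas, and the choice to leave `shipping_date` empty for non-shipped orders are assumptions.

```python
import random
from datetime import date, timedelta

def make_customers(rng, count):
    """Customers are created first because orders depend on them."""
    personas = ["new", "power", "admin"]
    return [{"customer_id": i, "persona": rng.choice(personas)}
            for i in range(1, count + 1)]

def make_orders(rng, customers, count):
    orders = []
    for order_id in range(1, count + 1):
        status = rng.choice(["pending", "shipped", "cancelled"])
        # Business rule: a shipped order always has a shipping_date.
        # Assumption: other statuses carry no shipping_date at all.
        shipping_date = None
        if status == "shipped":
            shipping_date = date(2024, 1, 1) + timedelta(days=rng.randint(0, 90))
        orders.append({
            "order_id": order_id,
            # Dependency: every order points at a customer that already exists.
            "customer_id": rng.choice(customers)["customer_id"],
            "order_status": status,
            "shipping_date": shipping_date,
        })
    return orders

rng = random.Random(3)
customers = make_customers(rng, 10)
orders = make_orders(rng, customers, 50)
print(orders[0])
```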

Writing all of this down in a shared document gives everyone a single source of truth. It gets developers and QA engineers on the same page, ensuring that the data you create truly reflects the assumptions you're testing against.

Cover Both Positive and Negative Scenarios

It’s human nature to focus on the "happy path"—the scenarios where everything goes according to plan. But the most revealing—and often most damaging—bugs are found when you test for what happens when things go wrong. A solid test data strategy intentionally introduces a bit of chaos to see how the system holds up.

This means you need to be deliberate about generating data for:

- Positive Scenarios: Valid inputs that prove the application works correctly under normal, everyday conditions.

- Negative Scenarios: Think invalid formats, empty required fields, or values that are completely out of the acceptable range.

- Edge Cases: This is where you push the system to its limits. Test for boundary values, like a user with the maximum number of items in their cart or an order placed at precisely midnight.
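For numeric limits such as a cart's maximum size, boundary values can be derived mechanically. This is a small sketch of that idea. `MAX_CART_ITEMS = 50` and the `cart_accepts` check are hypothetical stand-ins for whatever limit and validation your application actually has.

```python
def boundary_values(minimum: int, maximum: int) -> dict:
    """Values at, just inside, and just outside an inclusive range."""
    return {
        # Should be accepted: both limits, their inner neighbors, and a midpoint.
        "positive": [minimum, minimum + 1, (minimum + maximum) // 2, maximum - 1, maximum],
        # Should be rejected: one step outside each limit.
        "negative": [minimum - 1, maximum + 1],
    }

MAX_CART_ITEMS = 50  # hypothetical limit
cases = boundary_values(1, MAX_CART_ITEMS)

def cart_accepts(item_count: int) -> bool:
    """Stand-in for the application's real validation."""
    return 1 <= item_count <= MAX_CART_ITEMS

assert all(cart_accepts(n) for n in cases["positive"])
assert not any(cart_accepts(n) for n in cases["negative"])
print(cases)
```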

Being thorough here is what separates flimsy software from resilient software. You’re building an application that can handle the messy, unpredictable nature of the real world.

Integrate Data Masking and Security

If you’re using any data from production in your testing workflow, security is not optional—it’s mandatory. Using real customer information in non-production environments is a huge compliance and privacy risk. This is where data masking becomes absolutely essential.

Data masking is the process of scrambling sensitive data by replacing it with realistic but completely fake information. This protects your users' privacy by ensuring that even if a test environment is breached, no personally identifiable information (PII) is ever exposed.

Always apply strong masking techniques to any data you clone from production before it ever gets near a developer's machine. For the highest level of security, however, the best move is to prioritize synthetic data generation. It sidesteps the risk of PII exposure entirely because you’re starting with fake data from the get-go.
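As a rough illustration of masking, the sketch below replaces an email address with a deterministic fake derived from a salted hash, so the same customer maps to the same masked value across tables. The function names and the `example.com` domain are assumptions. Note that salted hashing is pseudonymization rather than full anonymization, so treat this as a starting point only.

```python
import hashlib

def mask_email(email: str, salt: str) -> str:
    """Replace a real address with a stable, fake one."""
    digest = hashlib.sha256((salt + email.lower()).encode("utf-8")).hexdigest()
    return f"user_{digest[:12]}@example.com"

def mask_record(record: dict, salt: str) -> dict:
    """Return a masked copy; the original record is left untouched."""
    masked = dict(record)
    masked["email"] = mask_email(record["email"], salt)
    masked["name"] = "Test User"   # fixed placeholder name
    return masked

# Hypothetical record and salt; a real salt should be kept secret.
customer = {"id": 17, "name": "Jane Doe", "email": "Jane.Doe@corp.com"}
print(mask_record(customer, salt="rotate-me"))
```

Keeping the mapping deterministic preserves joins between masked tables, which random replacement would break.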

Automate Data Provisioning in CI/CD

In today's fast-paced development world, generating test data can't be a manual, one-off task you do when you remember. The most efficient teams build data provisioning right into their Continuous Integration/Continuous Deployment (CI/CD) pipelines.

Here’s what that looks like in practice. Every time a new build is kicked off, an automated script should:

- Generate a fresh, clean set of test data specifically for the tests being executed.

- Load this data into the correct test environment.

- Run the automated tests against this predictable, consistent dataset.

- Tear down the data once the tests are finished, leaving the environment clean.
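The four steps above can be sketched as a single context manager. This version uses an in-memory SQLite database as a stand-in for a real test environment. The `users` table and the helper names are hypothetical.

```python
import sqlite3
from contextlib import contextmanager

def generate_users(count):
    """Step 1: generate a fresh, predictable dataset."""
    return [(i, f"user_{i}") for i in range(1, count + 1)]

@contextmanager
def fresh_test_data(rows):
    conn = sqlite3.connect(":memory:")   # isolated throwaway database
    conn.execute("CREATE TABLE users (id INTEGER PRIMARY KEY, name TEXT)")
    # Step 2: load the data into the test environment.
    conn.executemany("INSERT INTO users (id, name) VALUES (?, ?)", rows)
    conn.commit()
    try:
        yield conn                       # Step 3: tests run here
    finally:
        conn.close()                     # Step 4: tear down, even if a test fails

with fresh_test_data(generate_users(3)) as conn:
    user_count = conn.execute("SELECT COUNT(*) FROM users").fetchone()[0]
    assert user_count == 3
```

A CI job could call a script like this before the test stage. The `try`/`finally` makes sure cleanup happens even when a test raises.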

This level of automation ensures every test run begins from a known state, which makes your results far more reliable. It’s the final nail in the coffin for the age-old "well, it works on my machine" problem and a true hallmark of a modern, agile testing culture.

Choosing the Right Test Data Generation Tool

Picking the right test data generation tool isn't just a technical choice; it’s a decision that will directly affect your team's speed and your product’s quality. The market is flooded with options, and it's easy to get lost in feature lists. To find the right fit, you need a clear framework for what truly matters to your team.

The goal isn't just to find a tool that makes data. It’s about finding one that fits neatly into your existing workflow, creates genuinely believable data, and respects today’s strict security standards. You want something that empowers your developers, not something that adds another layer of complexity they have to wrestle with.

Core Criteria for Evaluating Tools

When you're looking at different tools, it's easy to get distracted by flashy features. But a tool is only as good as its fundamentals. Focus on these key areas to figure out what will actually work in the real world.

Ease of Use: How steep is the learning curve? A tool with all the power in the world is useless if your team finds it too confusing to use. Look for an intuitive interface, solid documentation, and features that simplify complex tasks. Your developers shouldn't need a Ph.D. in data science to generate a few user profiles.

Integration Capabilities: A modern tool has to play well with others. It should slide right into your CI/CD pipeline without a fight. That means it needs to offer APIs, command-line interfaces (CLIs), or other ways to automate the creation and cleanup of test data as part of your builds.

Data Realism & Security: The data has to feel real. It should reflect the variety and quirks of what you’d see in production. More importantly, the tool must help you maintain security and privacy, either through robust data masking or, even better, by generating 100% synthetic data that contains zero sensitive information.

A Practical Example with dotMock

Let's ground these ideas in a real-world example. Take a modern tool like dotMock. Looking at how it handles these core challenges can give you a good mental model for what an effective, developer-first tool looks like in practice.

One of dotMock's main selling points is its zero-configuration setup, which lets developers spin up mock environments in less than a minute. It also uses AI to generate data from simple, plain-English descriptions, which dramatically lowers the barrier to entry and speeds everything up.

It’s also clearly built for automation. By letting teams define custom endpoints, import OpenAPI specifications, or even record and replay live traffic, it ensures the test data isn't just realistic—it's perfectly aligned with the API contracts your team is actually building and testing against.

Matching the Tool to Your Team's Needs

Ultimately, the best tool is the one that solves your team’s biggest headaches.

Are you constantly worried about data privacy and GDPR compliance? Then a tool with strong synthetic data capabilities is a must-have. Are your development cycles bogged down by data-related delays? You should prioritize a tool that excels at CI/CD integration and raw speed.

The global market for these tools is growing fast, projected to hit USD 1.5 billion. This growth is being driven by AI and machine learning, which are making data generators smarter and more capable. You can read more about the test data generation tools market and its expected trajectory.

By starting with a clear evaluation framework and looking at how real tools solve these problems, you can make a choice that goes beyond the marketing hype. The right test data generation solution won't just improve your testing; it will help your team build better, more reliable software, faster.


Common Challenges and How to Overcome Them

Switching to a more deliberate way of creating test data is a game-changer, but let’s be honest—it’s not always a walk in the park. Teams often hit a few common roadblocks that can really kill momentum. If you know what to look for, you can steer clear of them and build a testing process that’s actually resilient.

These issues pop up everywhere, from trying to keep data consistent across a dozen microservices to wrangling huge datasets without bringing development to a standstill. But for every problem, there’s a smart solution. The trick is to see these hurdles coming and have a plan ready before they become serious bottlenecks.

Maintaining Data Integrity and Consistency

One of the biggest headaches is keeping data integrity intact, especially when you're working with a microservices architecture. It’s a classic scenario: the orders service has data that doesn’t line up with the customers service, and suddenly your tests are worthless. This problem gets even worse when different teams are managing their own test data, creating a messy, fragmented testing environment.

The fix? Centralize your data generation logic and put it under version control. Stop letting each team cook up their own data in a silo and move to a shared service or repository.

- Create a single source of truth: Use a tool where you can define and share data schemas and generation rules across every team and project.

- Version your data definitions: Treat your data generation scripts just like you treat your application code. Store them in Git so you can track changes, collaborate, and roll back if something breaks.

Think of it like this: a centralized approach is a coordinated orchestra. A decentralized one is a dozen musicians all playing from different sheet music. The first creates harmony; the second, just noise.
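Here is one hedged sketch of what a shared, versioned definition might look like. Both services derive their test data from the same seeded function, so their customer IDs always line up. The constant names, the ID format, and the two "service" functions are hypothetical.

```python
import random

# Shared definitions that would live in one version-controlled module.
SCHEMA_VERSION = "1.0.0"
SEED = 2024

def shared_customer_ids(count: int) -> list:
    """Same seed and count always yield the same unique IDs."""
    rng = random.Random(SEED)
    return [f"cust-{n:06d}" for n in rng.sample(range(10**6), count)]

def customers_service_data(count: int) -> list:
    return [{"customer_id": cid} for cid in shared_customer_ids(count)]

def orders_service_data(count: int) -> list:
    ids = shared_customer_ids(count)
    # Two orders per customer, each referencing an ID the customers service also has.
    return [{"order_id": n, "customer_id": ids[n % len(ids)]}
            for n in range(count * 2)]

print(customers_service_data(2))
print(orders_service_data(2))
```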

Generating Data for Complex Business Logic

Real-world apps are tangled webs of business rules. For instance, a customer might only get "premium" status if they meet a specific combination of purchase history, account age, and location. Trying to manually create test data that follows all those interconnected rules is a nightmare. Most simple data generators just can't handle it—they’ll give you data that fits the schema but is completely useless for testing actual functionality.

The best way to handle this is with a hybrid approach that mixes rule-based generation with more intelligent methods. This lets you enforce the hard, non-negotiable rules while still getting realistic variations. Modern tools can generate data that follows a schema but also uses a bit of AI to make sure the values make sense in context. That means you can model complex scenarios without writing hundreds of brittle, hard-to-maintain scripts.

By blending these two methods, you get the best of both worlds. You get the strict control you need for business logic and the smart randomness required for realism. This ensures your test data generation can handle even the most complex application logic, which ultimately leads to much better test coverage.
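A minimal sketch of that blend: random generation supplies the variation, and a single rule function decides the "premium" label so the label can never contradict the underlying attributes. Plain seeded randomness stands in here for the smarter generation methods mentioned above. The thresholds and country list are invented for illustration.

```python
import random

def is_premium(customer: dict) -> bool:
    """Hard business rule (hypothetical thresholds)."""
    return (customer["total_purchases"] >= 1000
            and customer["account_age_days"] >= 365
            and customer["country"] in {"US", "CA", "GB"})

def generate_customer(rng: random.Random) -> dict:
    # Varied attributes come from the random source...
    customer = {
        "total_purchases": round(rng.uniform(0, 5000), 2),
        "account_age_days": rng.randint(0, 2000),
        "country": rng.choice(["US", "CA", "GB", "DE", "BR"]),
    }
    # ...while the status is always derived from the rule, never drawn at random.
    customer["status"] = "premium" if is_premium(customer) else "standard"
    return customer

rng = random.Random(11)
customers = [generate_customer(rng) for _ in range(500)]
premium_count = sum(c["status"] == "premium" for c in customers)
print(f"{premium_count} of {len(customers)} customers are premium")
```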

Frequently Asked Questions

After diving into the world of test data generation—from methods and best practices to the hurdles you might face—a few key questions always seem to pop up. Let's tackle them head-on to clear up some of the practical details.

What’s the Difference Between Test Data Generation and Data Masking?

It’s easy to get these two mixed up, but think of them as two different tools for two different jobs.

Test data generation is the whole process of creating the data you need for testing. This could mean generating brand-new synthetic data from scratch, copying a production database, or even manually punching in a few records. It's the "how do I get data?" part of the equation.

Data masking, however, is a security-focused technique used to protect existing data. It takes a real dataset and scrambles the sensitive parts—like replacing actual customer names or credit card numbers with believable fakes. You'll often use data masking as a step within your test data generation strategy, especially when your starting point is a clone of production data.

How Can I Weave Test Data Generation into My CI/CD Pipeline?

Automation is your best friend here. The goal is to make getting fresh test data a hands-off, automatic step in every single build.

Look for a data generation tool that offers an API or a command-line interface (CLI). This allows you to script the entire process. You can configure your CI/CD pipeline to trigger your tool, which then creates and pushes a fresh, clean dataset into your test environment just moments before your automated tests kick off. This way, every test run starts on a clean slate, giving you consistent and trustworthy results.

Is Synthetic Data Really as Good as Real Production Data for Testing?

While nothing beats real data for sheer realism, it’s a minefield of privacy risks. It also rarely contains all the weird edge cases you need to test for. High-quality synthetic data gives you the best of both worlds.

It can be designed to perfectly mimic the statistical patterns of your real data, so it feels authentic. But since it contains zero real customer information, it’s completely safe. Best of all, you can generate it on-demand to create any scenario you can dream up, making your test coverage far more thorough.

Ready to stop data bottlenecks from slowing you down? With dotMock, you can generate production-ready mock APIs and realistic test data in seconds. Stop waiting on data and start building better software. Create your free mock environment and see for yourself.