9 Critical Software Testing Best Practices for 2025

In today's fast-paced development cycles, simply 'testing' isn't enough. The difference between a successful launch and a buggy release often comes down to the underlying strategy. Effective software testing is no longer a final-stage gatekeeper; it's an integrated, continuous process that ensures quality from the first line of code. However, many teams still struggle with bottlenecks, flaky tests, and environments that don't mirror production, leading to delays and escaped defects. This article cuts through the noise to deliver 9 software testing best practices that directly address these modern challenges. We'll explore how to build quality in, not bolt it on, with a special focus on leveraging tools like API mocking to create resilient, reliable, and rapid testing environments that accelerate your entire development lifecycle.

This guide is designed for a wide range of professionals, from frontend developers and QA engineers to DevOps specialists and technical architects. We will provide actionable insights into crucial methodologies that foster collaboration, improve code quality, and shorten feedback loops. By implementing these practices, you not only catch bugs earlier but also create a more predictable and efficient development process. Over time, this proactive approach also cuts costs by reducing rework and maintenance.

From Test-Driven Development (TDD) and the Test Automation Pyramid to advanced strategies like Risk-Based Testing and efficient Test Environment Management, you will learn how to:

- Integrate testing seamlessly into your CI/CD pipeline.

- Write clearer, more effective tests using practices like Behavior-Driven Development (BDD).

- Prioritize testing efforts to focus on the most critical areas of your application.

- Manage test data and environments effectively to eliminate common testing roadblocks.

Each section provides a clear overview, highlights key benefits, and offers practical tips for implementation, ensuring you can apply these principles directly to your projects.

1. Test-Driven Development (TDD)

Test-Driven Development (TDD) flips the traditional development sequence on its head. Instead of writing code and then testing it, TDD requires writing a failing automated test before writing the production code that satisfies it. This methodology, popularized by Kent Beck, ensures that every line of code is written against a specific, testable requirement, which tends to produce a well-tested, well-documented, and more reliable codebase from the ground up.

The core of TDD is its simple yet powerful "Red-Green-Refactor" cycle. This iterative process gives development a structured rhythm in which each small feature enhancement or bug fix follows the same quality-focused path. As a by-product, it builds up a regression suite that gives developers the confidence to make changes without introducing unintended side effects.

The Red-Green-Refactor Cycle

This disciplined approach ensures that testing is not an afterthought but a central driver of the development process.

- Red: Write an automated test for a new feature or improvement. Since the code doesn't exist yet, this test will fail, turning your test runner "red". This step forces you to clearly define the desired behavior and requirements.

- Green: Write the absolute minimum amount of production code necessary to make the failing test pass. The goal here isn't elegant code; it's simply to satisfy the test and turn the test runner "green".

- Refactor: With a passing test as a safety net, clean up the code you just wrote. You can improve its structure, remove duplication, and enhance readability without changing its external behavior. The test suite is run again to ensure the refactoring didn't break anything.
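To make one pass through the cycle concrete, here is a minimal sketch in Python using pytest-style test functions (an assumption; any xUnit-style framework works the same way). The `LineItem` type and `calculate_total` function are hypothetical. The test functions represent the Red step and would have failed until `calculate_total` existed; the one-line implementation is what remains after Green and Refactor.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass(frozen=True)
class LineItem:
    """Hypothetical order line: unit price in cents and a quantity."""

    unit_price_cents: int
    quantity: int


# Green + Refactor: the simplest code that makes the tests below pass,
# tidied into a single expression once they were green.
def calculate_total(items: list[LineItem]) -> int:
    return sum(item.unit_price_cents * item.quantity for item in items)


# Red: these tests were written first and failed until calculate_total existed.
def test_calculates_total_for_multiple_items() -> None:
    items = [
        LineItem(unit_price_cents=250, quantity=2),
        LineItem(unit_price_cents=100, quantity=3),
    ]
    assert calculate_total(items) == 800


def test_returns_zero_for_empty_order() -> None:
    assert calculate_total([]) == 0
```

Running `pytest` against this file executes both tests. Prices are kept in integer cents so totals are not affected by floating-point rounding.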

TDD Implementation Tips

Adopting TDD requires discipline but yields significant long-term benefits in code quality and maintainability.

- Focus on Behavior: Write tests that verify what the code should do, not how it does it. This decouples your tests from specific implementation details, making them less brittle.

- Keep Tests Isolated: Each test should be independent and not rely on the state or outcome of other tests. This is crucial for reliable and predictable test runs.

- Use Descriptive Names: Name your tests clearly to describe the scenario and expected outcome, such as `test_calculates_total_for_multiple_items`. This makes your test suite serve as living documentation.

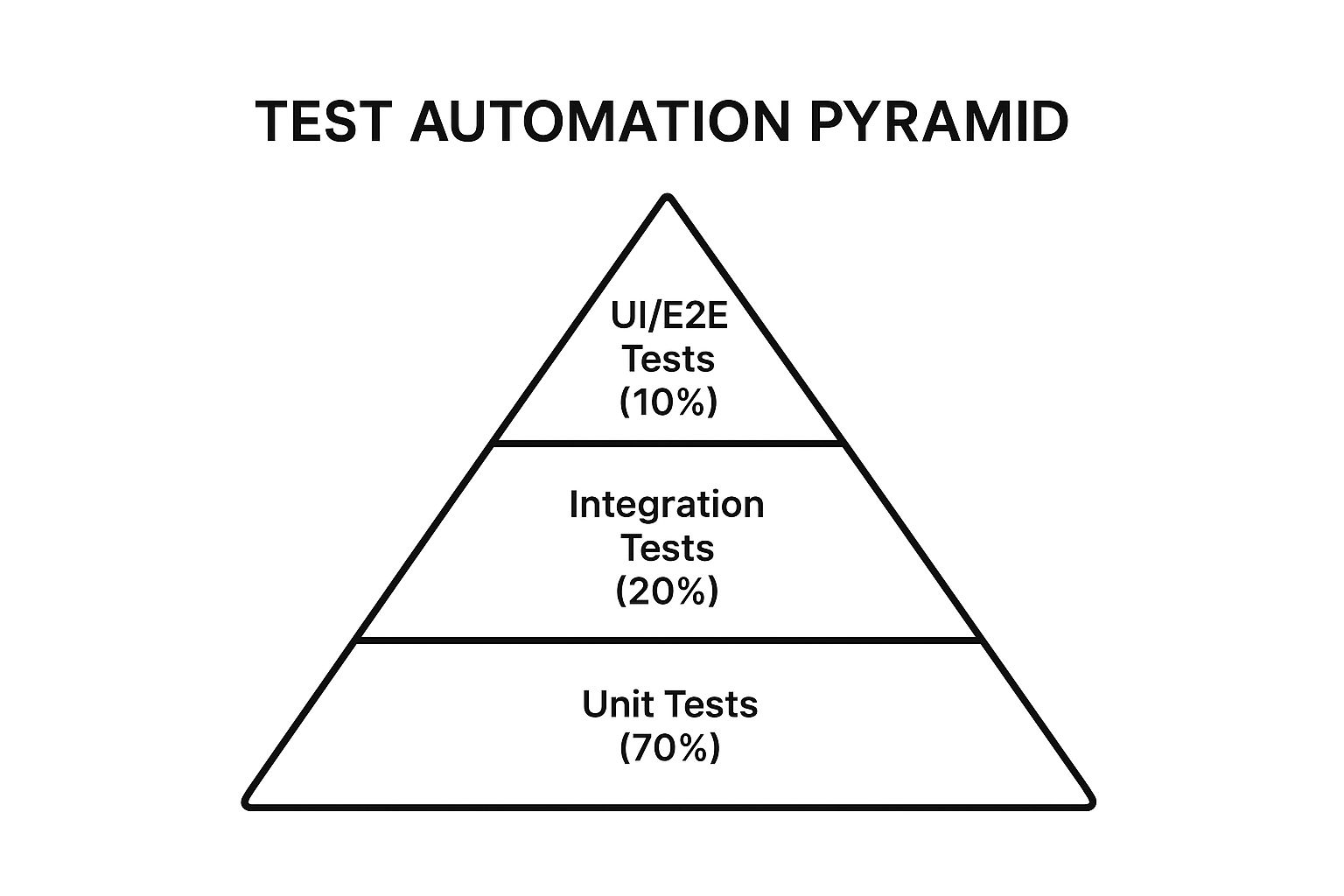

2. Test Automation Pyramid

The Test Automation Pyramid is a strategic framework, popularized by Mike Cohn, that guides one of the most crucial software testing best practices: creating a healthy and efficient automated test suite. It visualizes the ideal distribution of different test types, advocating for a large base of fast, inexpensive unit tests and progressively fewer, slower, and more expensive tests as you move up toward the UI level. This approach ensures rapid feedback, lower maintenance costs, and a more stable testing process overall.

Adopting this model helps teams avoid the "ice cream cone" anti-pattern, where a project relies heavily on slow and brittle end-to-end tests. Companies like Google and Netflix leverage this pyramid structure to maintain high velocity and quality across complex systems. The pyramid provides a clear, hierarchical guide for allocating testing resources effectively.

This infographic illustrates the Test Automation Pyramid, showing the recommended ratio of tests at each level of the software application.

The visualization clearly shows that the vast majority of tests should be focused on the foundational unit level, providing the most stable and cost-effective base.

The Three Tiers of Testing

This model is broken down into three distinct layers, each serving a specific purpose in the quality assurance strategy.

- Unit Tests (Base): Forming the largest part of the pyramid (around 70%), these tests verify individual components or functions in isolation. They are fast, easy to write, and provide precise feedback, allowing developers to catch bugs early in the development cycle.

- Integration Tests (Middle): This layer (around 20%) checks how different modules or services interact with each other. These tests are crucial for validating data flow and contracts between components, such as an API's communication with its database.

- UI/End-to-End Tests (Top): Representing the smallest portion (around 10%), these tests simulate a full user journey through the application's user interface. While valuable for validating the complete system, they are the slowest, most brittle, and most expensive to maintain.

Pyramid Implementation Tips

Building a balanced test suite requires a conscious effort to invest in the lower levels of the pyramid first.

- Aim for a 70/20/10 Ratio: While not a rigid rule, this ratio is an excellent starting point. Monitor defect origins and adjust the distribution based on where bugs are most frequently found.

- Invest in Unit Test Tooling: A strong foundation of unit tests requires fast and reliable tools. Provide developers with the infrastructure they need to write and run these tests effortlessly.

- Reserve E2E for Critical Journeys: Focus UI and end-to-end tests exclusively on critical user workflows like user registration, login, or the checkout process. Avoid testing every possible UI interaction at this level. To learn more about how this fits into a broader strategy, you can explore the principles of automated functional testing on dotmock.com.
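To see how close a suite is to the 70/20/10 starting point, a small script can count tests per level. The sketch below assumes each test has already been labelled `unit`, `integration`, or `e2e` (for example through directory layout or test markers); the `pyramid_report` helper and its 10-percentage-point tolerance are hypothetical choices.

```python
from __future__ import annotations

from collections import Counter

# Starting-point distribution from the 70/20/10 guideline (not a rigid rule).
TARGET_SHARES = {"unit": 0.70, "integration": 0.20, "e2e": 0.10}


def pyramid_report(
    test_levels: list[str], tolerance: float = 0.10
) -> dict[str, tuple[float, bool]]:
    """Map each level to (actual share, whether it is within tolerance of the target)."""
    counts = Counter(test_levels)
    total = sum(counts.values())
    report: dict[str, tuple[float, bool]] = {}
    for level, target in TARGET_SHARES.items():
        share = counts[level] / total if total else 0.0
        report[level] = (round(share, 2), abs(share - target) <= tolerance)
    return report


# An "ice cream cone" suite: too few unit tests, too many end-to-end tests.
suite = ["unit"] * 50 + ["integration"] * 20 + ["e2e"] * 30
for level, (share, ok) in pyramid_report(suite).items():
    print(f"{level:<12} {share:.0%}  {'ok' if ok else 'rebalance'}")
```

For the example suite, the unit and end-to-end levels are flagged for rebalancing while the integration level is on target. Tracking this report over time pairs naturally with the tip above about monitoring where defects originate.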

3. Shift-Left Testing

Shift-Left Testing is a foundational software testing best practice that involves moving quality assurance activities to earlier stages of the software development lifecycle. Instead of treating testing as a final, isolated phase before release, this approach integrates it from the very beginning, starting with requirements analysis and design. By "shifting left," teams can identify and resolve defects when they are cheapest and easiest to fix, preventing them from escalating into complex, costly problems later on.

This proactive model, championed by early advocates like Larry Smith at IBM and popularized by the Agile and DevOps communities, transforms quality from a departmental responsibility into a shared team-wide commitment. It fosters collaboration between developers, testers, and product owners, ensuring that quality considerations are embedded in every decision. The result is a more efficient development process, faster feedback loops, and a significant reduction in post-release bugs.

Key Principles of Shifting Left

This approach ensures that building a quality product is a continuous, collective effort rather than a final inspection gate.

- Early Involvement: Testers and QA professionals participate in initial planning, requirements gathering, and design meetings to identify potential ambiguities and risks before a single line of code is written.

- Continuous Feedback: Automated testing and static analysis tools are integrated directly into the CI/CD pipeline, providing developers with immediate feedback on code quality and functionality.

- Developer Empowerment: Developers take on more responsibility for quality by writing unit and integration tests, performing peer code reviews, and using tools to check for vulnerabilities and performance issues.

Shift-Left Implementation Tips

Adopting a shift-left mindset requires cultural change and strategic tool implementation to maximize its benefits.

- Involve Testers in Requirements Reviews: Bring QA into planning sessions to help define clear, testable acceptance criteria and identify potential edge cases early.

- Implement Static Code Analysis: Integrate automated tools that scan code for bugs, security vulnerabilities, and style violations as soon as it's committed.

- Establish Quality Gates: Define automated checks within your CI/CD pipeline that must pass before code can be promoted to the next environment, ensuring a consistent quality standard.
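At its core, a quality gate is a check that fails the pipeline step when a metric crosses a threshold. The sketch below assumes the metrics have already been extracted from coverage and static-analysis reports into a dictionary; the metric names, threshold values, and helper functions are hypothetical.

```python
from __future__ import annotations

import sys

# Hypothetical gate: metric name -> (threshold, "min" = value must be >= threshold,
# "max" = value must be <= threshold). Tune the values to your own standards.
GATE_RULES: dict[str, tuple[float, str]] = {
    "line_coverage_pct": (80.0, "min"),
    "static_analysis_errors": (0, "max"),
    "failed_tests": (0, "max"),
}


def evaluate_gate(
    metrics: dict[str, float], rules: dict[str, tuple[float, str]] = GATE_RULES
) -> list[str]:
    """Return a human-readable violation for every rule the metrics break."""
    violations = []
    for name, (threshold, kind) in rules.items():
        value = metrics.get(name)
        if value is None:
            violations.append(f"{name}: metric missing")  # fail closed on missing data
        elif kind == "min" and value < threshold:
            violations.append(f"{name}: {value} is below the minimum of {threshold}")
        elif kind == "max" and value > threshold:
            violations.append(f"{name}: {value} exceeds the maximum of {threshold}")
    return violations


def gate_exit_code(metrics: dict[str, float]) -> int:
    """0 lets the pipeline continue; a non-zero exit code blocks promotion."""
    violations = evaluate_gate(metrics)
    for violation in violations:
        print(f"Quality gate violation: {violation}", file=sys.stderr)
    return 1 if violations else 0
```

In a CI step, the script would parse the real reports and end with `sys.exit(gate_exit_code(metrics))`. Treating a missing metric as a failure is a deliberate fail-closed choice: a broken or absent report cannot silently pass the gate.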

4. Risk-Based Testing

Risk-Based Testing (RBT) is a strategic software testing best practice that directs testing efforts and resources toward the areas of an application with the highest potential for failure and impact. Instead of testing everything with equal intensity, RBT prioritizes features based on a thorough risk assessment, ensuring that the most critical, complex, and failure-prone components receive the most rigorous validation. This pragmatic approach, championed by figures like Dorothy Graham, maximizes the effectiveness of limited testing resources.

The core principle of RBT is to focus on preventing or mitigating the most significant business and technical risks. For example, a banking application would prioritize transaction processing and security functions over cosmetic UI elements. Similarly, an e-commerce platform would concentrate testing on its payment gateway and checkout workflow. By aligning testing with risk, teams can deliver a more reliable product faster and with greater confidence.

The Risk Analysis Process

This data-driven approach ensures that testing provides maximum value by focusing on what truly matters to users and the business.

- Risk Identification: Collaborate with business stakeholders, developers, and product managers to identify potential risks. This includes assessing both the technical probability of failure (e.g., code complexity, new technology) and the business impact of a failure (e.g., financial loss, reputational damage, user safety).

- Risk Analysis & Prioritization: Quantify and rank the identified risks. This is often done using a risk matrix that plots the probability of a defect against its potential impact. High-probability, high-impact items become the top testing priorities.

- Test Planning & Execution: Design and execute test cases that specifically target the highest-priority risks. More extensive and in-depth testing techniques, like exploratory and negative testing, are applied to high-risk areas, while lower-risk features may receive lighter smoke testing.
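The probability-times-impact scoring behind a risk matrix takes only a few lines of code. In the sketch below, the 1-to-5 scales, the score bands that map to testing depth, and the example areas and ratings are all illustrative assumptions, not a standard.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass(frozen=True)
class Risk:
    area: str
    probability: int  # 1 = rare ... 5 = almost certain (illustrative scale)
    impact: int  # 1 = cosmetic ... 5 = critical (illustrative scale)

    @property
    def score(self) -> int:
        # Classic risk-matrix score: likelihood multiplied by consequence.
        return self.probability * self.impact


def depth_for_score(score: int) -> str:
    """Map a score to a testing depth; the band boundaries are assumptions."""
    if score >= 15:
        return "extensive: exploratory, negative, and full regression testing"
    if score >= 8:
        return "standard: scripted functional tests"
    return "light: smoke tests"


def prioritise(risks: list[Risk]) -> list[Risk]:
    """Return the risks ordered from highest to lowest score."""
    return sorted(risks, key=lambda r: r.score, reverse=True)


risks = [
    Risk("Product search", probability=3, impact=2),
    Risk("Payment gateway", probability=4, impact=5),
    Risk("Footer links", probability=2, impact=1),
    Risk("Checkout workflow", probability=3, impact=5),
]
for risk in prioritise(risks):
    print(f"{risk.score:>2}  {risk.area:<18} {depth_for_score(risk.score)}")
```

With these ratings, the payment gateway (20) and checkout workflow (15) land in the extensive band, mirroring the e-commerce example above, while the footer links (2) get only smoke testing.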

RBT Implementation Tips

Adopting a risk-based approach helps teams make informed decisions about where to invest their testing efforts for the greatest return.

- Involve Business Stakeholders: Collaborate with product owners and business analysts to accurately assess the business impact of potential failures. Their input is crucial for effective prioritization.

- Use Historical Defect Data: Analyze past project data to identify modules or features that have historically been bug-prone. This data provides an objective basis for assessing the probability of future defects.

- Create a Risk Matrix: Visualize risks using a simple matrix with "Probability" on one axis and "Impact" on the other. This creates a clear, shared understanding of priorities for the entire team.

- Regularly Review Risks: Risks are not static. Re-evaluate and update your risk assessment throughout the project lifecycle as new features are added, requirements change, or new information becomes available.

5. Continuous Integration/Continuous Testing

Continuous Integration (CI) and Continuous Testing (CT) represent a cornerstone of modern DevOps and are a pivotal software testing best practice. This methodology involves developers frequently merging their code changes into a central repository, after which automated builds and tests are run. By integrating early and often, teams can detect and locate integration errors more easily, improving overall software quality and accelerating the development lifecycle.

The primary goal of CI/CT is to create a rapid feedback loop, ensuring that new code doesn't break existing functionality. Automated tests, from unit to integration tests, are executed automatically upon every commit, providing immediate validation. This practice significantly reduces the risk associated with large-scale integrations and enables a more fluid and predictable release process, as seen in the highly efficient deployment pipelines of companies like Amazon and Netflix.

The CI/CT Pipeline

This automated workflow is the engine that drives continuous quality assurance and rapid delivery.

- Commit: A developer commits code changes to a shared version control repository like Git.

- Build: A CI server (e.g., Jenkins, GitHub Actions) automatically detects the change, pulls the latest code, and triggers an automated build process.

- Test: The CI server executes a suite of automated tests against the new build. This includes unit, integration, and API tests to validate functionality and check for regressions.

- Report: The results of the build and test stages are immediately reported back to the development team. A failed build or test "breaks the build," prompting an immediate fix.

CI/CT Implementation Tips

A successful CI/CT pipeline requires careful planning and continuous optimization.

- Start Small: Begin by automating a core subset of critical and fast-running tests. You can expand the test suite over time as the pipeline matures.

- Parallelize Tests: Execute tests in parallel to drastically reduce the time it takes to get feedback. This is crucial for maintaining a fast and efficient pipeline.

- Manage Test Environments: Use tools like API mocking and service virtualization to create stable, isolated, and fast test environments, eliminating dependencies on slow or unavailable third-party services.

- Monitor and Optimize: Continuously monitor pipeline performance metrics, such as build duration and test failure rates, to identify bottlenecks and areas for improvement.
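One common way to parallelize a suite across several CI jobs is to split test files into shards with roughly equal total runtime. The sketch below uses a greedy longest-first heuristic. The file names and durations are hypothetical; in practice they would come from a previous run's timing report. Sharding only works if tests are isolated, because each shard runs independently.

```python
from __future__ import annotations

import heapq


def shard_tests(durations: dict[str, float], shards: int) -> list[list[str]]:
    """Assign each test file to the currently least-loaded shard, longest files first."""
    if shards < 1:
        raise ValueError("shards must be at least 1")
    # Min-heap of (accumulated seconds, shard index): the lightest shard is always on top.
    load = [(0.0, index) for index in range(shards)]
    heapq.heapify(load)
    assignment: list[list[str]] = [[] for _ in range(shards)]
    for name, seconds in sorted(durations.items(), key=lambda item: item[1], reverse=True):
        total, index = heapq.heappop(load)
        assignment[index].append(name)
        heapq.heappush(load, (total + seconds, index))
    return assignment


timings = {
    "test_checkout.py": 120.0,
    "test_search.py": 90.0,
    "test_login.py": 60.0,
    "test_profile.py": 45.0,
    "test_cart.py": 30.0,
}
for index, files in enumerate(shard_tests(timings, shards=2)):
    print(index, files, sum(timings[f] for f in files))
```

Each CI job would then run only the files in its own shard. With the example timings, the two shards take 165 s and 180 s, so wall-clock time drops from the 345 s sequential total to roughly the longest shard.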

6. Behavior-Driven Development (BDD)

Behavior-Driven Development (BDD) is a software testing best practice that evolves the principles of Test-Driven Development (TDD) by emphasizing collaboration between developers, QA, and business stakeholders. It bridges the communication gap by using a shared, natural language format to describe how an application should behave from the end-user's perspective. This approach, pioneered by Dan North, ensures that all team members have a common understanding of the product’s goals before a single line of code is written.

BDD uses simple, structured language to create executable specifications that act as both acceptance criteria and automated tests. This "living documentation" ensures that the software's documentation is always synchronized with its actual behavior, reducing ambiguity and rework. By focusing on business outcomes, BDD helps teams build features that deliver genuine value to the user.

The Gherkin Syntax: Given-When-Then

This collaborative framework is built around scenarios written in a specific format, often using the Gherkin language popularized by tools like Cucumber.

- Given: Describes the initial context or prerequisite state before an action occurs. For example, "Given I am a logged-in user on the cart page".

- When: Describes the specific action or event that the user performs. For example, "When I add a 'Virtual Server' to my cart".

- Then: Describes the expected outcome or result. For example, "Then the cart total should be updated to '$10'".
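Tools such as Cucumber work by matching each Gherkin step against a registered step definition and then running it. The dependency-free Python sketch below imitates that mechanism for the cart scenario above. The `step` decorator, the `run_scenario` runner, and the assumed $10 price of a 'Virtual Server' are hypothetical stand-ins, not the API of Cucumber or of any Python BDD library.

```python
from __future__ import annotations

import re
from typing import Callable

# Hypothetical step registry: (keyword, compiled pattern, step function).
STEPS: list[tuple[str, re.Pattern[str], Callable[..., None]]] = []


def step(keyword: str, pattern: str) -> Callable[[Callable[..., None]], Callable[..., None]]:
    """Register a step function for a Given/When/Then keyword."""
    def decorator(func: Callable[..., None]) -> Callable[..., None]:
        STEPS.append((keyword, re.compile(pattern), func))
        return func
    return decorator


PRICES = {"Virtual Server": 10}  # assumed catalogue price in dollars


@step("Given", r"I am a logged-in user on the cart page")
def logged_in_on_cart(ctx: dict) -> None:
    ctx["cart"] = []


@step("When", r"I add a '(.+)' to my cart")
def add_to_cart(ctx: dict, item: str) -> None:
    ctx["cart"].append(item)


@step("Then", r"the cart total should be updated to '\$(\d+)'")
def check_total(ctx: dict, expected: str) -> None:
    total = sum(PRICES[item] for item in ctx["cart"])
    assert total == int(expected), f"expected ${expected}, got ${total}"


def run_scenario(text: str) -> dict:
    """Run each line of a scenario through the first step definition that matches it."""
    ctx: dict = {}
    for raw in text.strip().splitlines():
        line = raw.strip()
        keyword, _, rest = line.partition(" ")
        for kw, pattern, func in STEPS:
            if kw != keyword:
                continue
            match = pattern.fullmatch(rest)
            if match:
                func(ctx, *match.groups())  # captured groups become step arguments
                break
        else:
            raise LookupError(f"No step definition for: {line}")
    return ctx


SCENARIO = """
Given I am a logged-in user on the cart page
When I add a 'Virtual Server' to my cart
Then the cart total should be updated to '$10'
"""

if __name__ == "__main__":
    run_scenario(SCENARIO)
    print("Scenario passed")
```

The scenario text stays readable for business stakeholders, while the step functions hold the technical detail. An unmatched step raises an error instead of passing silently, which is also how real BDD runners treat undefined steps by default.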

BDD Implementation Tips

Adopting BDD aligns technical implementation directly with business requirements, ensuring the team builds the right product.

- Focus on Business Value: Scenarios should describe what the system does and why it matters to the user, not how it is implemented. Frame discussions around user goals and business outcomes.

- Involve All Stakeholders: The creation of BDD scenarios is a team sport. Involve product managers, testers, and developers in "Three Amigos" sessions to refine requirements and ensure clarity.

- Keep Scenarios Focused: Each scenario should test one distinct behavior or rule. Avoid long scenarios that chain several "When" and "Then" pairs, as they become difficult to maintain.

7. Exploratory Testing

Exploratory testing moves beyond rigid scripts to embrace a dynamic and investigative approach to quality assurance. Instead of following predefined test cases, this software testing best practice empowers testers to simultaneously learn about the application, design tests on the fly, and execute them immediately. Championed by experts like Cem Kaner and James Bach, it prioritizes tester freedom, critical thinking, and adaptability to uncover complex defects that structured methods often miss.

This method treats testing as a cognitive, unscripted activity, allowing skilled testers to use their intuition and experience to follow interesting paths and probe for weaknesses. It’s particularly effective for finding usability issues, edge cases, and subtle interaction bugs that aren't easily predicted in a test plan. By combining learning with execution, exploratory testing provides rapid, insightful feedback throughout the development lifecycle.

The Charter and Session-Based Approach

This disciplined yet flexible approach provides structure to the exploration, ensuring it remains focused and productive.

- Create a Charter: Define a clear mission or goal for the testing session. For example, "Investigate the user profile update functionality for security vulnerabilities and data integrity issues." The charter provides direction without prescribing specific steps.

- Time-Box the Session: Allocate a fixed, uninterrupted period for testing (e.g., 90 minutes). This encourages intense focus and prevents the exploration from becoming aimless.

- Document and Debrief: During the session, the tester takes notes on what was tested, any bugs found, and questions that arose. After the session, a debrief is held to review findings and plan the next steps.

Exploratory Testing Implementation Tips

Adopting this methodology requires a shift in mindset from test case execution to skilled investigation.

- Use Mind Maps: Visualize the application's features and potential test paths with a mind map. This can serve as a guide during a session, helping you track coverage and generate new ideas.

- Pair Testers: Have two testers work together at one computer. One person can focus on executing tests while the other observes, takes notes, and suggests different avenues of exploration.

- Focus on Risk: Use risk analysis to guide your charters. Prioritize exploring areas of the application that are most complex, critical to business operations, or have a history of defects.

8. Test Environment Management

Test Environment Management (TEM) is the systematic practice of provisioning, configuring, and maintaining the infrastructure needed for reliable software testing. This software testing best practice ensures that every test runs in an environment that accurately mirrors production, eliminating the "it works on my machine" problem. Effective TEM, popularized by the DevOps community and cloud providers, is the bedrock of consistent, repeatable, and trustworthy test results, preventing environment-related defects from derailing development cycles.

A robust TEM strategy gives development and QA teams on-demand access to stable, isolated, and production-like environments. By standardizing the setup, from infrastructure and data to third-party dependencies, teams can confidently validate new features and bug fixes. This approach is crucial for complex applications, especially those with microservices architectures, where environmental drift can easily lead to elusive and costly bugs.

Key Aspects of TEM

A comprehensive approach to TEM covers the entire lifecycle of a test environment, from creation to decommissioning.

- Provisioning & Configuration: Using automated tools to create and set up environments on-demand. This includes everything from servers and databases to network configurations.

- Data Management: Ensuring environments are populated with realistic, secure, and consistent test data. This often involves data subsetting, masking, and automated refresh cycles.

- Isolation & Stability: Guaranteeing that tests running in one environment do not interfere with another. Stability ensures the environment itself isn't a source of test failures. When third-party services are unavailable, techniques like service virtualization are essential for maintaining isolation and stability.

- Monitoring & Maintenance: Continuously tracking the health, performance, and resource usage of test environments to proactively address issues and optimize costs.
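Service virtualization can be as simple as a local HTTP stub that returns canned responses, including failures that are hard to trigger against a real dependency. The standard-library Python sketch below works under stated assumptions: the `/v1/rates/...` endpoint, its payloads, and the `fetch_rate` client are hypothetical, and a dedicated API-mocking tool would typically replace the hand-written handler.

```python
from __future__ import annotations

import json
import threading
import urllib.error
import urllib.request
from http.server import BaseHTTPRequestHandler, HTTPServer

# Canned responses keyed by request path (hypothetical endpoint and payloads).
STUB_ROUTES = {
    "/v1/rates/EUR": (200, {"currency": "EUR", "rate": 1.08}),
    "/v1/rates/GBP": (503, {"error": "upstream unavailable"}),
}


class StubHandler(BaseHTTPRequestHandler):
    def do_GET(self) -> None:
        status, body = STUB_ROUTES.get(self.path, (404, {"error": "not found"}))
        payload = json.dumps(body).encode()
        self.send_response(status)
        self.send_header("Content-Type", "application/json")
        self.send_header("Content-Length", str(len(payload)))
        self.end_headers()
        self.wfile.write(payload)

    def log_message(self, format: str, *args: object) -> None:
        pass  # silence per-request logging during tests


def start_stub() -> HTTPServer:
    # Port 0 asks the OS for a free port, so parallel test runs don't collide.
    server = HTTPServer(("127.0.0.1", 0), StubHandler)
    threading.Thread(target=server.serve_forever, daemon=True).start()
    return server


# Ignore proxy environment variables so requests to the local stub stay local.
_OPENER = urllib.request.build_opener(urllib.request.ProxyHandler({}))


# Code under test: the base URL is injected, so tests can point it at the stub.
def fetch_rate(base_url: str, currency: str) -> float | None:
    try:
        with _OPENER.open(f"{base_url}/v1/rates/{currency}", timeout=2) as resp:
            return json.load(resp)["rate"]
    except urllib.error.HTTPError:
        return None  # degrade gracefully when the dependency returns an error


if __name__ == "__main__":
    stub = start_stub()
    base = f"http://127.0.0.1:{stub.server_address[1]}"
    print(fetch_rate(base, "EUR"))  # healthy response
    print(fetch_rate(base, "GBP"))  # simulated outage
    stub.shutdown()
    stub.server_close()
```

The client receives its base URL as a parameter instead of hard-coding the production host, so the same code can run against the stub in tests and against the real service elsewhere.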

TEM Implementation Tips

Effective test environment management minimizes bottlenecks and empowers teams to test more efficiently.

- Use Infrastructure-as-Code (IaC): Employ tools like Terraform or Ansible to define environments in code. This makes provisioning automated, repeatable, and consistent across all stages.

- Leverage Containerization: Use Docker and Kubernetes to create lightweight, portable, and isolated environments. This drastically reduces setup time and improves parity between development, testing, and production.

- Establish Clear Governance: Implement an environment booking or scheduling system and define clear usage policies to manage shared resources effectively and prevent conflicts.

- Automate Data Refresh and Masking: Create automated pipelines to refresh test environments with sanitized, production-like data, ensuring tests are always run against relevant datasets.

9. Test Data Management

Effective software testing relies heavily on the quality and availability of data. Test Data Management (TDM) is a critical best practice that encompasses the entire lifecycle of data used for testing, including its creation, provisioning, protection, and maintenance. A solid TDM strategy ensures that testing teams have access to relevant, realistic, and secure data, which is essential for validating application behavior across a wide range of scenarios, from routine operations to complex edge cases.

Without a formal TDM process, teams often resort to using production data, which poses significant security and privacy risks, or manually created data, which is often incomplete and time-consuming to generate. Implementing a structured approach to test data is fundamental to achieving comprehensive and reliable test coverage, especially in regulated industries like finance and healthcare where data privacy is paramount.

Core Pillars of Test Data Management

A robust TDM strategy is built on several key activities that ensure data is fit for purpose throughout the testing lifecycle.

- Data Generation: Create realistic test data, either from scratch using synthetic data generation tools that produce fictional yet structurally valid records, or by cloning and subsetting production data for non-sensitive testing environments.

- Data Masking & Anonymization: Protect sensitive information by obscuring or replacing real data with fictional, irreversible values. This is crucial for complying with privacy regulations like GDPR and CCPA when using production-derived data sets.

- Data Subsetting: Extract smaller, targeted, and referentially intact sets of data from a large database. This makes test environments more manageable, speeds up test execution, and reduces storage costs.

- Data Refresh & Archiving: Establish automated processes to refresh test environments with up-to-date data and archive old data sets. This keeps the testing scenarios relevant to the current state of the production application.
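Two of these pillars, masking and synthetic generation, are sketched below in Python. The masking uses a keyed hash (HMAC-SHA-256), so the same real email always maps to the same placeholder and joins across tables still line up. The key, the `Customer` shape, and the name lists are hypothetical. The key must stay secret, because anyone who holds it could re-derive the placeholder for a known email.

```python
from __future__ import annotations

import hashlib
import hmac
import random
from dataclasses import dataclass


@dataclass(frozen=True)
class Customer:
    customer_id: int
    name: str
    email: str


# Hypothetical key; in practice load it from a secrets manager and never commit it.
MASKING_KEY = b"replace-with-a-secret-key"


def mask_email(email: str, key: bytes = MASKING_KEY) -> str:
    """Deterministically replace an email: same input, same placeholder."""
    digest = hmac.new(key, email.strip().lower().encode(), hashlib.sha256).hexdigest()
    return f"user_{digest[:12]}@example.test"


def mask_customer(customer: Customer) -> Customer:
    """Keep the ID (referential integrity) but replace the personal fields."""
    return Customer(
        customer.customer_id,
        f"Customer {customer.customer_id}",
        mask_email(customer.email),
    )


FIRST_NAMES = ["Alex", "Sam", "Jordan", "Riley"]
LAST_NAMES = ["Smith", "Lee", "Garcia", "Khan"]


def synthetic_customers(count: int, seed: int = 42) -> list[Customer]:
    """Generate fictional but structurally valid customers; a fixed seed makes runs repeatable."""
    rng = random.Random(seed)
    customers = []
    for customer_id in range(1, count + 1):
        first, last = rng.choice(FIRST_NAMES), rng.choice(LAST_NAMES)
        email = f"{first}.{last}.{customer_id}@example.test".lower()
        customers.append(Customer(customer_id, f"{first} {last}", email))
    return customers
```

Both helpers emit addresses under `.test`, a top-level domain reserved for testing, so generated data can never reach a real mailbox. The fixed seed means a failing test can be rerun against exactly the same synthetic records.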

TDM Implementation Tips

Integrating TDM into your software testing best practices requires careful planning and the right tools to manage data effectively.

- Establish Data Governance: Define clear policies for how test data is requested, created, stored, and retired. Classify data based on sensitivity to apply appropriate security controls.

- Prioritize Synthetic Data: For any tests involving personally identifiable information (PII) or other sensitive data, use synthetic data generation tools so that no real personal data enters the test environment, greatly reducing compliance risk.

- Automate Data Provisioning: Integrate TDM tools into your CI/CD pipeline to automate the provisioning of fresh, relevant data for every test run, ensuring consistency and speed.

- Create Reusable Data Sets: Develop a version-controlled repository of reusable data sets tailored for specific testing purposes, such as regression tests, performance tests, or security scans. For more information on leveraging data for various test scenarios, explore our guide on data-driven testing strategies.

Best Practices Comparison Matrix for Software Testing

| Practice | Implementation Complexity 🔄 | Resource Requirements ⚡ | Expected Outcomes 📊 | Ideal Use Cases 💡 | Key Advantages ⭐ |
|---|---|---|---|---|---|
| Test-Driven Development (TDD) | Medium - requires discipline and cultural shift | Moderate - developer time investment | Higher code quality and fewer defects | Code-centric projects needing robust design | Improves code design, reduces debugging |
| Test Automation Pyramid | Medium - needs setup for test tiers | High - infrastructure for multiple test types | Optimized test speed and cost efficiency | Projects requiring balanced automated testing | Faster feedback, lower maintenance costs |
| Shift-Left Testing | High - organizational and cultural changes needed | High - training and early involvement | Early defect detection and faster delivery | Projects aiming for quality integrated early | Reduced defect cost, better collaboration |
| Risk-Based Testing | Medium - requires domain expertise | Moderate - focused resource allocation | Targeted testing on critical features | High-risk or safety-critical systems | Optimizes ROI, focuses on critical risks |
| Continuous Integration/Testing | High - infrastructure and toolchain setup | High - automation tooling and maintenance | Rapid feedback and improved code stability | Teams with frequent code changes needing fast validation | Faster defect detection, improved collaboration |
| Behavior-Driven Development (BDD) | Medium - tooling and scenario writing overhead | Moderate - collaboration effort | Clear requirements and better communication | Stakeholder-inclusive projects | Enhances communication, reduces ambiguity |
| Exploratory Testing | Low to Medium - dependent on tester skill | Low to Moderate - skilled testers | Discovery of unexpected defects | Complex or evolving systems | Rapid feedback, adaptive defect discovery |
| Test Environment Management | High - infrastructure automation and maintenance | High - infrastructure and monitoring | Reliable and reproducible testing conditions | Environments requiring production-like testing | Consistent tests, fewer environment failures |
| Test Data Management | High - complex data governance and tooling | High - storage, tools, and compliance | Improved test coverage and data privacy | Sensitive data environments | Ensures compliance, supports repeatability |

Ship with Confidence: Putting These Practices into Action

Navigating the landscape of modern software development requires more than just writing code; it demands a deep-seated commitment to quality. The software testing best practices we've explored, from Test-Driven Development and the Test Automation Pyramid to Exploratory Testing and BDD, are not merely isolated techniques. They represent a comprehensive framework for building a resilient, proactive, and efficient quality culture within your organization. Moving beyond the old paradigm of testing as a final, gatekeeping phase is essential for any team aiming for agility and excellence.

The journey begins by internalizing a fundamental shift in mindset. Quality is not someone else's responsibility; it is a shared goal woven into every stage of the software development lifecycle. By adopting a shift-left testing approach, you empower developers to think critically about quality from the first line of code. Similarly, integrating CI/CT pipelines ensures that testing is a continuous, automated feedback loop, not a manual, time-consuming bottleneck. This proactive stance transforms testing from a reactive bug hunt into a strategic pillar of development.

From Theory to Tangible Results

Adopting these practices may seem daunting, but the key is incremental implementation. You don't need to overhaul your entire workflow overnight. Start by identifying your team's most significant pain points and select a practice that directly addresses them.

- Is your team struggling with vague requirements and rework? Introduce Behavior-Driven Development (BDD) to create a shared understanding between technical and non-technical stakeholders.

- Are you finding critical bugs late in the cycle? Implement structured Exploratory Testing sessions to leverage human intuition and creativity alongside your automated suites.

- Are your testing efforts brittle and slow? Re-evaluate your Test Automation Pyramid to ensure you have a solid foundation of fast, reliable unit tests, rather than an over-reliance on slow, flaky end-to-end tests.

Each practice builds upon the others, creating a powerful synergy. TDD naturally supports a healthy unit test base for your pyramid. Risk-Based Testing helps prioritize efforts within your CI/CT pipeline, ensuring that the most critical areas receive the most attention. The common thread is a commitment to early and frequent feedback, which is the cornerstone of agile quality assurance.

The Catalyst for Modern Testing: Environment and Data Management

Perhaps the most significant blockers to implementing these software testing best practices are challenges with test environments and data. Without stable, reliable, and accessible environments, even the best-laid testing plans will falter. This is where modern tooling becomes a game-changer, particularly for teams reliant on complex microservices architectures and third-party APIs.

Effective Test Environment Management and Test Data Management are no longer "nice-to-haves"; they are foundational requirements. The ability to spin up isolated, consistent, and realistic test environments on-demand is critical. This is where API mocking and service virtualization provide immense value, allowing teams to simulate dependencies, test edge cases like network failures or slow responses, and unblock parallel development streams. By mastering your test environments, you create the stable ground upon which all other best practices can be successfully built, enabling your team to test comprehensively and ship with unwavering confidence.

Ready to eliminate environment-related bottlenecks and supercharge your testing strategy? dotMock allows you to create high-fidelity mock APIs and testing environments in seconds, empowering your team to implement these software testing best practices with ease. Explore how you can accelerate your development lifecycle and build more resilient applications at dotMock.