How to Build an MVP: From Concept to Launch

At its heart, building a Minimum Viable Product (MVP) is a beautifully simple idea: get the most basic, core version of your product into the hands of real users as quickly as you can. You're not aiming for a polished, feature-rich application. Instead, you're laser-focused on the one essential function that solves a specific, painful problem.

This approach lets you gather invaluable user feedback right from the start, test your core business assumptions, and most importantly, avoid the soul-crushing experience of building something nobody actually wants.

Why an MVP Is Your Startup's Secret Weapon

Before we get into the nitty-gritty of building an MVP, it’s crucial to understand why this strategy is so foundational. It’s not just tech jargon; it's a proven framework for taking the risk out of your entire venture. The goal is to ditch long, speculation-heavy development cycles and replace them with a rapid loop of building, measuring, and learning from your users.

This process forces you to be brutally honest about your feature list. You aren't building your dream product on day one. You're building a tool just sharp enough to solve a single problem for a small group of early adopters.

Sidestep the Biggest Startup Killer

Let's be real: the number one reason startups die isn't a lack of funding or a weak team. It's building a product nobody needs. An MVP tackles this head-on by putting your concept in front of real people almost immediately.

This simple act shifts the conversation from, "We think users will love this," to, "Here's the data showing how users are actually using this."

Getting that early validation gives you some serious advantages:

- Faster Learning: You’ll learn more in one week of real-world use than you would in six months of internal brainstorming sessions.

- Resource Efficiency: It stops you from pouring precious time and money into features that sound cool but don't matter to your users.

- Market-Driven Roadmap: Your future development plan is guided by actual user behavior and direct feedback, not by internal guesswork.

The Data-Backed Advantage

This isn't just theory. Industry figures suggest that projects starting with an MVP cut startup failure rates by around 60% compared to traditional development methods. That's a massive difference, especially when you consider that about 90% of startups fail globally, and 42% of those point to a lack of market need as the main reason.

When you launch a focused product, you're not just saving money; you're buying information. An MVP is the cheapest, fastest way to find out if your business is built on a solid foundation or just wishful thinking.

To get the most out of this strategy, it pays to dig into specific MVP development techniques for startups. Doing so helps you turn that big idea into a tangible, market-tested product, starting small but always thinking big.

Defining the Core Problem and First Features

Before you write a single line of code, your MVP’s success boils down to one thing: solving a real, nagging problem for a specific group of people. It’s easy to get caught up in a grand vision, but the first—and most important—step is to pinpoint that single, acute pain point your product will fix. Your mission is to build a "must-have" tool, not just another "nice-to-have" distraction.

So, how do you find that problem? By talking to actual people. Get away from the screen and have honest conversations with potential users. Don't pitch your solution. Instead, listen. Ask about their daily frustrations, their clunky workflows, and the things they wish they could do better. Even a simple, one-page user persona can be a huge help here, keeping your team focused on who you’re actually building for.

From Wishlist to Launch List

Okay, you’ve zeroed in on the core problem. Now comes the hard part: resisting the urge to build every feature you can dream up. This is a classic trap. An MVP demands ruthless prioritization. You have to cut everything that isn't absolutely essential for solving that initial pain point.

This isn't about shipping a shoddy or half-baked product. It's about delivering concentrated value as fast as possible to see whether you're on the right track. It's also how most founders operate: roughly 72% of startups use the MVP model to test the waters before going all-in, precisely to avoid building something nobody wants, the cause cited in about 42% of failures. If you're curious, you can find more startup survival statistics that really drive this point home.

A great tool for this is the MoSCoW analysis. It’s a simple but effective framework for sorting your feature ideas into four buckets:

- Must-Have: These are the non-negotiables. The product simply won't work or solve the core problem without them.

- Should-Have: Important, but not critical for the very first launch. These are often at the top of the list for version two.

- Could-Have: "Nice-to-have" features that would be cool but are only built if you have extra time and resources.

- Won't-Have: Features you explicitly decide not to build for this release to keep the scope tight.
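If your backlog lives in a spreadsheet or issue tracker, the four MoSCoW buckets map directly onto a tiny data model. The sketch below is a minimal Python illustration, not a prescribed tool: the `MoSCoW` enum and `split_backlog` helper are hypothetical names, and the bucket assignments (including the made-up "Dark Mode" item) are illustrative. Only Must-Have items make the launch scope; everything else is parked by bucket for review after launch.

```python
from enum import Enum


class MoSCoW(Enum):
    """The four MoSCoW buckets, in descending order of priority."""
    MUST = "Must-Have"
    SHOULD = "Should-Have"
    COULD = "Could-Have"
    WONT = "Won't-Have"


def split_backlog(backlog: dict[str, MoSCoW]) -> tuple[list[str], dict[MoSCoW, list[str]]]:
    """Return (launch_scope, deferred).

    Only Must-Have features go into the first release; everything else is
    grouped by bucket so the team can revisit it once real feedback arrives.
    """
    launch_scope = [name for name, bucket in backlog.items() if bucket is MoSCoW.MUST]
    deferred: dict[MoSCoW, list[str]] = {b: [] for b in MoSCoW if b is not MoSCoW.MUST}
    for name, bucket in backlog.items():
        if bucket is not MoSCoW.MUST:
            deferred[bucket].append(name)
    return launch_scope, deferred


# Hypothetical backlog loosely based on the project-management example later on.
backlog = {
    "Simple Task Creation": MoSCoW.MUST,
    "Basic Time Tracking": MoSCoW.MUST,
    "Client Approval Button": MoSCoW.MUST,
    "Advanced Reporting": MoSCoW.SHOULD,
    "Social Media Integration": MoSCoW.SHOULD,
    "Dark Mode": MoSCoW.COULD,  # invented for illustration
    "Gantt Charts": MoSCoW.WONT,
}

launch, later = split_backlog(backlog)
print(launch)  # ['Simple Task Creation', 'Basic Time Tracking', 'Client Approval Button']
```

Keeping the Won't-Have bucket explicit, rather than deleting those ideas, records that the team decided against them on purpose for this release.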

To make this even clearer, many teams I've worked with use a prioritization matrix. It’s a simple grid that helps you visually map out features based on how much value they provide to the user versus how hard they are to build.

MVP Feature Prioritization Matrix Example

| Feature | User Value (1-10) | Implementation Effort (1-10) | Priority (MoSCoW) |
|---|---|---|---|
| Simple Task Creation | 9 | 3 | Must-Have |
| Basic Time Tracking | 10 | 4 | Must-Have |
| Client Approval Button | 8 | 5 | Must-Have |
| Advanced Reporting | 6 | 8 | Should-Have |
| Social Media Integration | 4 | 7 | Should-Have |
| Gantt Charts | 5 | 9 | Won't-Have (for now) |

This kind of matrix forces you to have tough but necessary conversations. Features that combine high user value with low implementation effort are your MVP sweet spot; in the table above, that is exactly the three Must-Haves.
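The same reading can be done programmatically. This sketch sorts each feature from the example table into a value/effort quadrant; the cut-off of 5 and the quadrant labels ("quick win", "big bet", "fill-in", "time sink") are assumptions chosen for illustration, not part of any standard.

```python
from typing import NamedTuple


class Feature(NamedTuple):
    name: str
    value: int   # user value, 1-10
    effort: int  # implementation effort, 1-10


def quadrant(feature: Feature, threshold: int = 5) -> str:
    """Place a feature in a value/effort quadrant.

    Assumption: value above `threshold` counts as high, and effort at or
    below `threshold` counts as low. The labels are illustrative only.
    """
    high_value = feature.value > threshold
    low_effort = feature.effort <= threshold
    if high_value and low_effort:
        return "quick win"  # the MVP sweet spot
    if high_value:
        return "big bet"
    if low_effort:
        return "fill-in"
    return "time sink"


# Scores copied from the example prioritization matrix.
features = [
    Feature("Simple Task Creation", 9, 3),
    Feature("Basic Time Tracking", 10, 4),
    Feature("Client Approval Button", 8, 5),
    Feature("Advanced Reporting", 6, 8),
    Feature("Social Media Integration", 4, 7),
    Feature("Gantt Charts", 5, 9),
]

by_quadrant: dict[str, list[str]] = {}
for f in features:
    by_quadrant.setdefault(quadrant(f), []).append(f.name)

print(by_quadrant["quick win"])
# ['Simple Task Creation', 'Basic Time Tracking', 'Client Approval Button']
```

A purely score-based reading puts Social Media Integration in the same quadrant as Gantt Charts, which is a useful prompt to question its Should-Have label.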

A Real-World SaaS Example

Let's put this into practice. Imagine a new SaaS company trying to build a project management tool for small marketing agencies. Their initial brainstorm was a laundry list of 20+ features, from complex reporting dashboards and third-party integrations to Gantt charts.

Then they got serious and applied the MoSCoW method. They realized the real problem for their target users wasn't a lack of charts; it was the daily grind of tracking billable hours and getting client sign-off on small tasks. Everything else was noise.

By focusing on the user's most critical pain point, they transformed a sprawling, complex project into a lean, achievable MVP. This discipline is the difference between launching in three months and getting stuck in development for over a year.

Their "Must-Have" list for the MVP suddenly became incredibly focused and manageable:

- Simple Task Creation: Let users create a task and assign it to someone.

- Time Tracking: Add a basic "start/stop" timer to log hours on a task.

- Client Approval Button: A one-click way for a client to approve a finished task.

That’s it. Everything else—the Gantt charts, the fancy reports—was moved to the "Should-Have" or "Won't-Have" list. This laser focus let them launch quickly, get a product into the hands of real users, and start gathering the feedback needed to build what comes next.

Choosing a Tech Stack That Works for You

Picking the right tech for your MVP feels like a high-stakes decision, and in many ways, it is. You're trying to find that sweet spot between moving fast, keeping costs down, and not painting yourself into a corner later on.

There's no magic bullet here. The "best" tech stack is a myth; the right one is entirely situational. It hinges on what your team is good at and what your product actually needs to do.

If there's one piece of advice to take away, it's this: choose what your team already knows. Speed is everything at this stage. If your developers live and breathe Python, this isn't the moment to start a new project in Go just because it's trendy. Leaning on existing expertise is the single biggest shortcut to getting your product in front of users.

Frontend and Backend Considerations

For the part of your app that users will see and touch, frameworks like React and Vue are popular for a reason. They have huge communities, tons of pre-built components, and let you build UIs incredibly fast. The choice between them often just boils down to which one your team feels more comfortable with.

On the backend, you have solid, battle-tested options like Node.js or Python with frameworks like Django or Flask. Node.js is a natural fit if you're already using JavaScript on the front end, which can simplify things for your team. Python really shines when you're dealing with a lot of data or have plans for machine learning down the road.

Your decision should circle back to the core features you defined earlier. A bit of foresight into common software architecture design patterns can also pay dividends, helping you build something that won’t immediately break when you start to scale.

Comparing MVP Tech Stack Options

To put this in perspective, let's look at a high-level comparison of some common choices. This isn't about which is "better," but which is better for your MVP right now.

| Category | Option A | Option B | Best For MVP If... |
|---|---|---|---|
| Frontend | React | Vue | Your team has strong JavaScript skills and wants access to a massive library ecosystem (React) or prefers a slightly gentler learning curve (Vue). |
| Backend | Node.js | Python (Django/Flask) | You want a single language (JavaScript) across your stack (Node.js) or your app is data-intensive and you want to leverage powerful data science libraries (Python). |
| Database | SQL (Postgres) | NoSQL (MongoDB) | Your data has clear, predictable relationships and structure (SQL) or you need flexible, fast-evolving data schemas (NoSQL). |

Ultimately, the best tech stack for your MVP is the one that minimizes friction, maximizes your team's output, and lets you ship a viable product fastest. Don't chase trends or technologies you think you should be using, and don't over-index on what might be perfect a year from now. Prioritize immediate execution over hypothetical future needs.

A Powerful Shortcut: Backend-as-a-Service (BaaS)

Want to really accelerate development? A Backend-as-a-Service (BaaS) platform can be a game-changer. Tools like Firebase or Supabase are designed to handle the messy backend stuff for you.

Think about what you get right out of the box:

- Authentication: Forget building user login and sign-up flows from scratch. It’s already done.

- Databases: Get a fully managed database without needing a dedicated backend or DevOps engineer to set it up and maintain it.

- Hosting: Deploying your app becomes a simple, often one-command, process.

Using a BaaS can literally shave weeks or even months off your development timeline. It lets a small team punch way above their weight, building something that feels solid and feature-complete in a fraction of the time. This frees you up to obsess over what matters most: the user experience. It's a pragmatic move that truly embraces the MVP philosophy.

Using API Mocking to Shave Weeks Off Your Development Timeline

One of the biggest hurdles I see teams face when building an MVP is the classic standoff between frontend and backend. The frontend team has the designs and is ready to go, but they're completely blocked, waiting for the backend folks to spin up the APIs. It's a sequential, waterfall-style process that just kills momentum and wastes precious time.

This is exactly where API mocking comes in. Think of it as creating a stand-in for your real API. It’s a simulation that returns the data your frontend will eventually get, allowing your UI developers to build and test features as if the backend were already complete. It's a simple concept, but it fundamentally changes how you build software by enabling true parallel development.

Let Your Teams Work in Parallel, Not in Sequence

With a tool like dotMock, your frontend developers can get to work on day one. They don't need to wait for a single line of backend code.

Let’s say you’re building a new user dashboard. The backend is still in the planning stages. No problem. The frontend team can agree on the JSON structure for user profiles, activity feeds, and so on. They can then use a mock API to serve up that fake data and build out the entire dashboard. By the time the real API is ready, the UI is already built, tested, and waiting to be connected.
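To make the idea concrete without tying it to any particular product, here is a minimal mock API built only on Python's standard library; it is a generic stand-in, not dotMock's interface. The routes and payloads in `MOCK_ROUTES` are a hypothetical version of the JSON contract the teams agreed on for the dashboard.

```python
import json
import threading
from http.server import BaseHTTPRequestHandler, HTTPServer
from urllib.request import urlopen

# Hypothetical JSON contract agreed on by the frontend and backend teams.
MOCK_ROUTES = {
    "/api/profile": {"id": 42, "name": "Ada Example", "plan": "trial"},
    "/api/activity": [
        {"type": "task_created", "task_id": 1},
        {"type": "task_approved", "task_id": 1},
    ],
}


class MockApiHandler(BaseHTTPRequestHandler):
    """Serves canned JSON for known routes and a 404 for everything else."""

    def do_GET(self):
        payload = MOCK_ROUTES.get(self.path)
        status = 200 if payload is not None else 404
        body = json.dumps(payload if payload is not None else {"error": "not found"}).encode()
        self.send_response(status)
        self.send_header("Content-Type", "application/json")
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)

    def log_message(self, format, *args):
        pass  # silence per-request logging


def start_mock_server() -> HTTPServer:
    """Start the mock on a free local port in a background thread."""
    server = HTTPServer(("127.0.0.1", 0), MockApiHandler)
    threading.Thread(target=server.serve_forever, daemon=True).start()
    return server


def fetch_profile(base_url: str) -> dict:
    """Frontend-side client code: only base_url changes when the real API ships."""
    with urlopen(f"{base_url}/api/profile") as resp:
        return json.load(resp)


server = start_mock_server()
profile = fetch_profile(f"http://127.0.0.1:{server.server_port}")
print(profile["name"])  # Ada Example
server.shutdown()
server.server_close()
```

The key design point is in `fetch_profile`: the client only knows a base URL, so moving from the mock to the real backend is a configuration change rather than a rewrite.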

If you're new to the backend side of things, this guide on how to make an API provides some great foundational knowledge that helps paint a clearer picture.

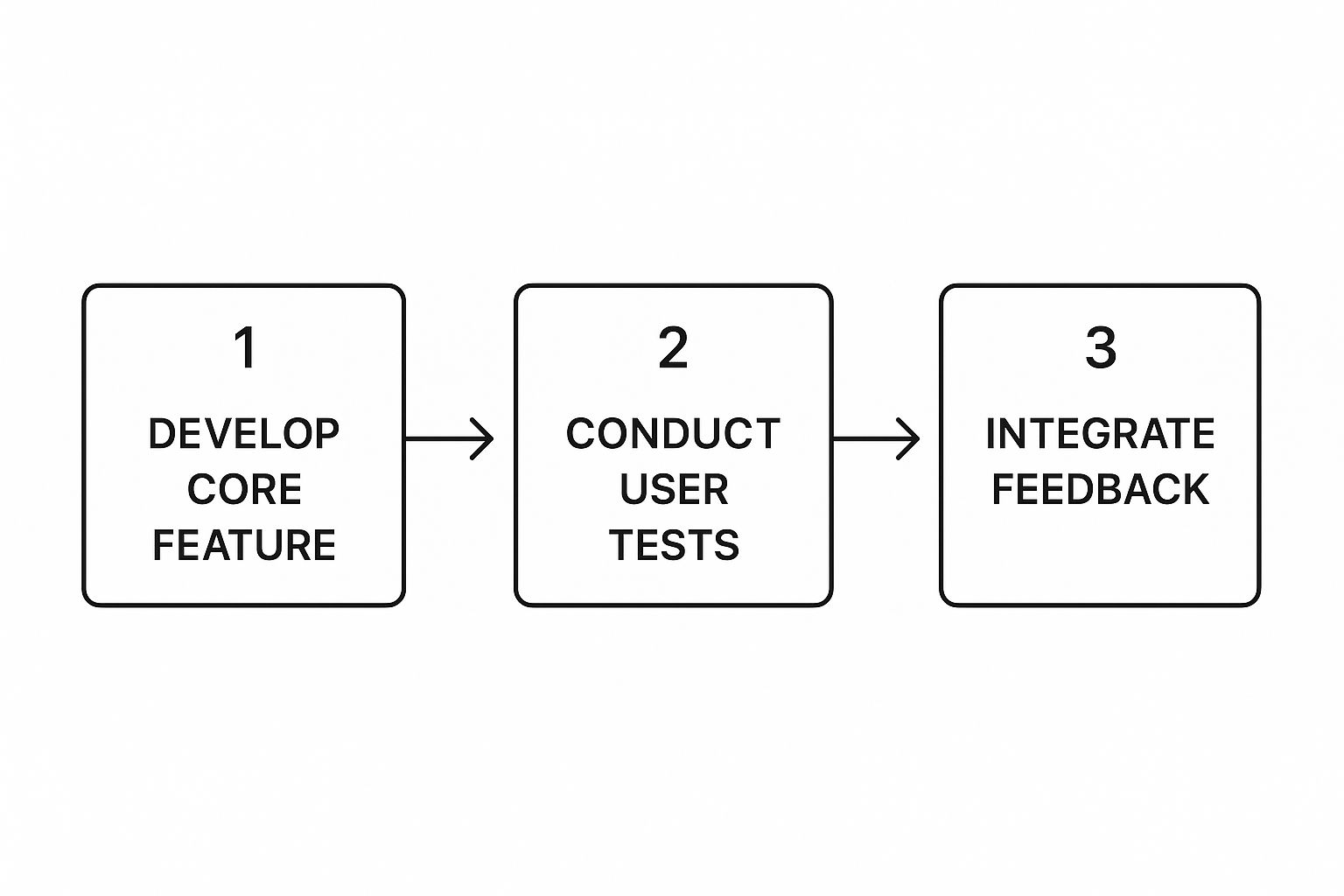

This iterative cycle is the heart of MVP development, and removing dependencies is the key to keeping that cycle moving fast.

As you can see, the process is a loop. The faster you can move through developing, testing, and getting feedback, the better. API mocking is a huge accelerator for that loop.

Don't Just Test the Happy Path—Simulate the Real World

A good mock API does more than just return a 200 OK response with perfect data. That's not how the real world works. APIs fail, networks lag, and servers throw errors. A huge benefit of mocking is the ability to simulate these messy, real-world scenarios in a controlled environment.

With a platform like dotMock, you can easily configure your mock endpoints to test for edge cases that are otherwise a nightmare to reproduce:

- Server Errors: What happens when the API returns a 500 Internal Server Error? Does your app crash or show a helpful message? You can force this response to find out.

- Slow Connections: Add a delay to a response to see how your UI handles it. Does it freeze, or does it show a nice loading spinner?

- Bad Data: Send back malformed or incomplete JSON to make sure your application's data parsing is resilient.
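Here is a rough sketch of those three failure scenarios, again using plain Python standard library rather than any particular mocking product. It serves four hypothetical routes (success, a 500 error, an artificially slow response, and truncated JSON) and calls a client function that turns each failure into a displayable error state instead of crashing. The route names, delay, timeout, and messages are illustrative assumptions.

```python
import json
import threading
import time
from http.server import BaseHTTPRequestHandler, ThreadingHTTPServer
from urllib.error import HTTPError
from urllib.request import urlopen

# Hypothetical scenario table: path -> (status code, raw body, delay in seconds).
SCENARIOS = {
    "/ok": (200, b'{"tasks": [1, 2, 3]}', 0.0),
    "/server-error": (500, b'{"error": "boom"}', 0.0),
    "/slow": (200, b'{"tasks": []}', 1.0),  # longer than the client timeout
    "/bad-data": (200, b'{"tasks": [1, 2', 0.0),  # truncated JSON
}


class FlakyApiHandler(BaseHTTPRequestHandler):
    def do_GET(self):
        status, body, delay = SCENARIOS.get(self.path, (404, b'{"error": "not found"}', 0.0))
        time.sleep(delay)  # simulate network or server latency
        try:
            self.send_response(status)
            self.send_header("Content-Type", "application/json")
            self.send_header("Content-Length", str(len(body)))
            self.end_headers()
            self.wfile.write(body)
        except (BrokenPipeError, ConnectionResetError):
            pass  # the client already gave up (e.g. after a timeout)

    def log_message(self, format, *args):
        pass  # silence per-request logging


def load_tasks(url: str, timeout: float = 0.3) -> dict:
    """Fetch tasks, mapping every failure mode to a displayable error state."""
    try:
        with urlopen(url, timeout=timeout) as resp:
            return {"status": "ok", "tasks": json.load(resp)["tasks"]}
    except HTTPError as err:  # 4xx/5xx responses; must come before OSError
        return {"status": "error", "message": f"Server returned {err.code}"}
    except OSError:  # URLError, socket timeouts, refused connections
        return {"status": "error", "message": "Network problem or timeout"}
    except (json.JSONDecodeError, KeyError):  # malformed or incomplete payload
        return {"status": "error", "message": "Unexpected response format"}


server = ThreadingHTTPServer(("127.0.0.1", 0), FlakyApiHandler)
threading.Thread(target=server.serve_forever, daemon=True).start()
base_url = f"http://127.0.0.1:{server.server_port}"

results = {path: load_tasks(base_url + path) for path in SCENARIOS}
server.shutdown()
server.server_close()

for path, result in results.items():
    print(path, result)
```

The exception order matters: `HTTPError` is itself a subclass of `URLError` (and therefore `OSError`), so it has to be caught first to report the status code.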

Discovering after launch that your app shows a blank white screen whenever an API fails is a painful, and completely avoidable, mistake. Building a robust UI means planning for failure from the start, and mocking is the best way to do it.

By introducing API mocking when you build your MVP, you’re not just saving time. You’re eliminating dependencies, reducing friction between teams, and ultimately shipping a much more polished and resilient product. It’s a practical switch that turns your development process from a slow-moving assembly line into a high-speed, parallel operation.

Measuring Success and Planning Your Next Move

Alright, you've launched your MVP. It’s easy to feel like you’ve just crossed the finish line, but in reality, you've just heard the starting gun. This is where the real work begins. The entire point of building an MVP was to learn, and that learning process is fueled by hard data. It’s time to shift your focus from building features to measuring their actual impact.

The big question you're trying to answer is simple: are you solving a real problem for real people? The answer isn't in how many people signed up on day one. It’s in what they do after they sign up. This means looking right past the vanity metrics and digging for actionable insights.

Beyond Signups: Key Metrics to Watch

To figure out if your product actually has legs, you need to get beyond surface-level numbers. Your attention should be squarely on engagement and retention—these are the truest indicators that you're on your way to product-market fit.

Start by treating these as your product's vital signs:

- User Engagement: Are people actually using the core feature you poured all that effort into? A mountain of sign-ups with a valley of engagement is a massive red flag. It tells you the initial promise might be there, but the solution isn't compelling enough.

- Feature Adoption Rate: Of all the people who signed up, what percentage is using that key feature on a regular basis? This is how you know if you correctly identified the "must-have" part of your idea.

- Cohort Retention: Take a look at the users who joined in a specific week. How many of them are still around a week later? What about a month later? This is the metric that reveals your product's stickiness, or lack thereof.
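These metrics are straightforward to compute once you log who signed up when and which feature each user touched on which day. The sketch below assumes a hypothetical event log of `(user, day, feature)` tuples. It counts a user as having adopted a feature if they used it on at least two distinct days (an arbitrary stand-in for "on a regular basis"), and it measures cohort retention as the share of each signup week's users who were still active at least N days after signing up. Other definitions, such as requiring activity inside a specific day-N window, are just as common; pick one and apply it consistently.

```python
from collections import defaultdict
from datetime import date

# Hypothetical data: signup dates and a (user, day, feature) event log.
signups = {
    "ana": date(2024, 3, 4),
    "ben": date(2024, 3, 5),
    "cy": date(2024, 3, 6),
    "dee": date(2024, 3, 12),
}
events = [
    ("ana", date(2024, 3, 4), "timer"),
    ("ana", date(2024, 3, 6), "timer"),
    ("ana", date(2024, 3, 14), "approve"),
    ("ben", date(2024, 3, 5), "timer"),
    ("cy", date(2024, 3, 6), "task"),
    ("dee", date(2024, 3, 12), "timer"),
    ("dee", date(2024, 3, 20), "timer"),
]


def feature_adoption_rate(signups, events, feature, min_days=2):
    """Share of signed-up users who used `feature` on at least `min_days` distinct days."""
    days_used = defaultdict(set)
    for user, day, name in events:
        if name == feature:
            days_used[user].add(day)
    adopters = [user for user in signups if len(days_used[user]) >= min_days]
    return len(adopters) / len(signups)


def cohort_retention(signups, events, after_days):
    """Per ISO-week signup cohort: share of users active `after_days` or more days after signup."""
    last_seen = {}
    for user, day, _ in events:
        last_seen[user] = max(day, last_seen.get(user, day))
    cohorts = defaultdict(list)
    for user, signed_up in signups.items():
        cohorts[signed_up.isocalendar()[:2]].append(user)  # key: (ISO year, ISO week)
    return {
        week: sum(
            1 for u in users
            if u in last_seen and (last_seen[u] - signups[u]).days >= after_days
        ) / len(users)
        for week, users in sorted(cohorts.items())
    }


print(feature_adoption_rate(signups, events, "timer"))  # 0.5
print(cohort_retention(signups, events, after_days=7))
```

In this toy data the first week's cohort keeps one of its three users past day 7, while the second week's single user is retained; with real traffic you would want cohorts large enough for those percentages to mean something.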

As a general rule of thumb, a successful MVP often has monthly active users (MAU) equal to around 30% of its total sign-ups. Even more telling are early-stage retention rates: if you can keep 20-30% of your users coming back over a three-month period, that's a powerful signal that you've built something people genuinely want.

Your MVP isn't a pass/fail test judged by launch day hype. Think of it as a scientific instrument you've built to collect data. Every user click, every session, every piece of feedback is a data point that helps you prove or disprove your core business assumptions.

Closing the Build-Measure-Learn Loop

All the data you're collecting is completely worthless if it doesn't guide your next move. This is the heart of the "Build, Measure, Learn" feedback loop that the Lean Startup methodology is famous for. You’ve done the "build" part, and you're in the middle of "measure." Now comes the most important phase: "learn."

This learning process should directly shape your product roadmap. For instance, if you see sky-high adoption for one feature but crickets for another, that's an incredibly clear signal telling you where to invest your precious development time.

Ultimately, the insights you gather will push you down one of three paths:

- Persevere: The numbers look good. Users are engaged, they're sticking around, and the feedback is positive. Your plan is to double down, squash bugs, and start building the next most-requested features.

- Iterate: The data is a mixed bag. People are signing up, but they're not really engaging or they're dropping off quickly. This tells you it's time to refine what you have or improve the user experience based on what you're seeing and hearing.

- Pivot: The data is brutally honest: your core hypothesis was wrong. Users aren't touching the main feature, and retention is near zero. It’s time to make a major strategic change based on what you’ve learned.
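If it helps to agree on decision criteria before the data comes in, you can write them down as an explicit rule. The function below is a hypothetical sketch: the 30% MAU-to-signup and 20% three-month retention cut-offs come from the rule-of-thumb figures earlier in this section, while the 5% "near zero" floor for a pivot is an arbitrary assumption. Treat the output as a prompt for discussion, not a verdict; qualitative feedback should weigh just as heavily.

```python
def next_move(mau_ratio: float, retention_3m: float, core_adoption: float) -> str:
    """Suggest persevere / iterate / pivot from three MVP health metrics.

    mau_ratio:     monthly active users divided by total sign-ups
    retention_3m:  share of users still active after three months
    core_adoption: share of users regularly using the core feature
    All inputs are fractions between 0 and 1; thresholds are illustrative.
    """
    if mau_ratio >= 0.30 and retention_3m >= 0.20:
        return "persevere"  # engaged users who stick around
    if retention_3m < 0.05 and core_adoption < 0.05:
        return "pivot"  # almost nobody uses the core feature or stays
    return "iterate"  # mixed signals: refine the product and the UX


print(next_move(mau_ratio=0.35, retention_3m=0.25, core_adoption=0.60))  # persevere
print(next_move(mau_ratio=0.40, retention_3m=0.08, core_adoption=0.30))  # iterate
print(next_move(mau_ratio=0.10, retention_3m=0.02, core_adoption=0.01))  # pivot
```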

Effectively analyzing this feedback is a skill. Understanding different testing techniques in software can give you a more structured way to validate your assumptions and make sure your next version is even better. And don't be afraid of the pivot. It can feel like a failure, but it’s actually a huge success—you’ve just used an MVP to avoid wasting years building something nobody wanted.

Got Questions About Building Your MVP? We've Got Answers

Even the most buttoned-up plan can hit a few snags when you get into the nitty-gritty of building an MVP. It's totally normal. Let's walk through some of the questions I see pop up all the time with development teams. Nailing these down early will save you a ton of headaches later.

Most of these questions come back to the same balancing act. Go too lean, and your product feels cheap or broken. Go too big, and you've just built a mini-version of a full product, defeating the whole purpose of the MVP approach.

So, How “Minimal” Is Minimal, Really?

The most important letter in MVP is V for viable. Your product has to actually work and solve the core problem for the user. It can be basic, but it can't be a frustrating, buggy mess.

Here’s a simple test I use: can a user get from A to B and accomplish the single most important task? If you cut a feature and that core journey falls apart, it has to stay. Anything else—all the "wouldn't it be cool if" ideas—gets put on the backlog for V2. You're aiming for a solid, valuable tool, not a broken demo.
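That A-to-B test can be made mechanical if you write down which features each step of the core journey depends on. The sketch below borrows the project-management example from earlier; the journey steps, feature identifiers, and the extra `gantt_charts` and `dark_mode` entries are hypothetical. A feature is safe to cut if the whole journey still works without it.

```python
# Hypothetical core journey: each step lists the features it cannot work without.
CORE_JOURNEY = [
    ("create a task", {"task_creation"}),
    ("log hours", {"task_creation", "time_tracking"}),
    ("get client sign-off", {"task_creation", "client_approval"}),
]


def journey_survives(features: set[str]) -> bool:
    """True if every step of the core journey is still possible with `features`."""
    return all(required <= features for _, required in CORE_JOURNEY)


def safe_to_cut(features: set[str]) -> list[str]:
    """Features whose individual removal still leaves the core journey intact."""
    return sorted(f for f in features if journey_survives(features - {f}))


mvp = {"task_creation", "time_tracking", "client_approval", "gantt_charts", "dark_mode"}
print(safe_to_cut(mvp))  # ['dark_mode', 'gantt_charts']
```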

What’s the Difference Between an MVP and a Prototype?

This one trips people up all the time, but the distinction is critical.

A prototype is essentially a sketch. It might be an interactive wireframe or a non-functional mockup. You build a prototype to test a design, a user flow, or an idea, usually with your internal team or a handful of friendly users. It’s all about exploring how something could look and feel.

An MVP, on the other hand, is a real, functional piece of software that you release to actual users out in the wild. You build an MVP to test a business idea and see if people will actually use it—and maybe even pay for it.

A prototype helps you figure out the solution. An MVP helps you validate the problem. A prototype asks, "Can we build this?" An MVP asks, "Should we have built this?"

How Much Is This Going to Cost Me?

There’s no magic number here. I've seen MVPs built for under $10,000 and others that climb past $100,000. The final cost really boils down to a few key things:

- Complexity of Features: A straightforward app with a few screens is one thing. A multi-faceted platform with third-party integrations is a completely different ballgame.

- Your Tech Stack: The specific technologies you pick will directly influence development timelines and costs.

- The Team You Hire: Developer rates and location are always going to be a major factor in the budget.
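One common back-of-envelope approach for a first-pass budget is to multiply estimated build hours by a blended hourly rate and add a buffer for project management, QA, and design. The sketch below illustrates that model only; the feature list, hour estimates, $75 rate, and 30% overhead are hypothetical placeholders to replace with your own numbers. It also makes the scope argument concrete: every feature you add puts its hours straight into the total.

```python
def estimate_mvp_cost(feature_hours: dict[str, float], hourly_rate: float, overhead: float = 0.3) -> float:
    """Build hours x blended hourly rate, plus a fractional buffer for PM, QA, and design.

    The 30% default overhead is an assumption, not an industry standard.
    """
    build_hours = sum(feature_hours.values())
    return build_hours * hourly_rate * (1 + overhead)


# Hypothetical estimates for the three Must-Have features plus basic setup.
feature_hours = {
    "task creation": 60,
    "time tracking": 80,
    "client approval": 60,
    "auth and hosting setup": 40,
}

cost = estimate_mvp_cost(feature_hours, hourly_rate=75)
print(f"${cost:,.0f}")  # $23,400

# Adding a Should-Have feature shows how scope drives the budget.
with_reports = {**feature_hours, "advanced reporting": 120}
print(f"${estimate_mvp_cost(with_reports, hourly_rate=75):,.0f}")  # $35,100
```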

Honestly, the best way to keep costs in check is to be absolutely ruthless with your scope. Keep your focus lasered in on the essential features needed to prove your core hypothesis with real people.

Ready to build your MVP faster by cutting out API delays? With dotMock, your frontend team can get to work right away, simulating any API they need in seconds. Start building a more resilient product today. Get started for free at dotmock.com.