Top 9 Best Practices for Software Testing in 2025

In today's fast-paced development cycles, shipping reliable software isn't just a goal; it's a baseline expectation. Weak testing leads directly to buggy releases, frustrated users, and a damaged brand reputation. What sets elite engineering teams apart isn't talent alone; it's their disciplined adoption of proven, modern testing strategies. This guide moves beyond generic advice to round up the best practices for software testing that high-performing teams use to build resilient, high-quality applications.

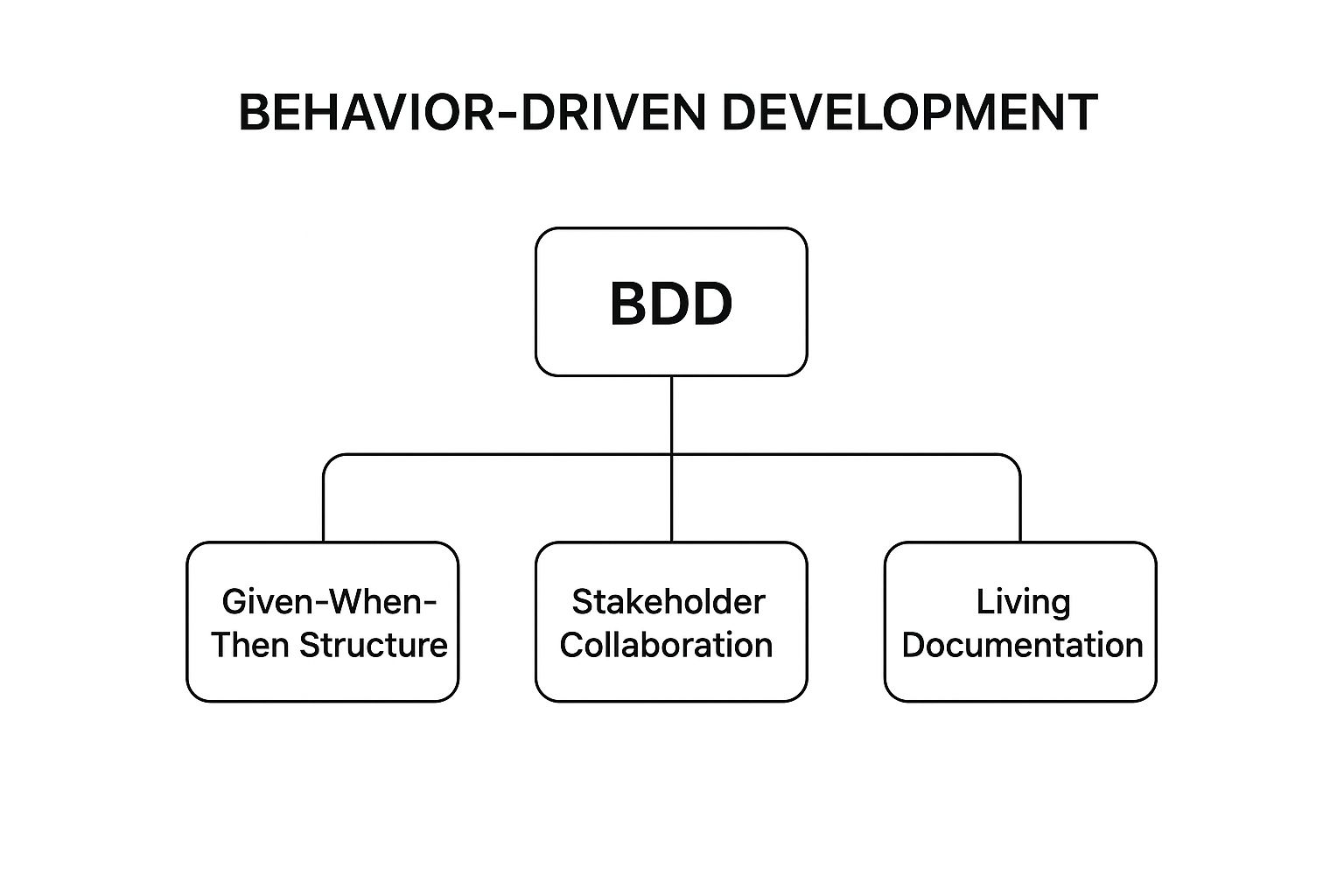

We will explore nine essential methodologies, from Test-Driven Development (TDD) and Continuous Integration/Continuous Deployment (CI/CD) to Risk-Based Testing and Behavior-Driven Development (BDD). Each section offers actionable steps and real-world examples to help you refine your quality assurance process and deliver software with confidence. For lasting results, pair these practices with ongoing optimization of your broader QA processes. Whether you're a developer, QA engineer, or technical lead, these practices provide a structured framework for elevating your team’s output. Let’s dive into the techniques that turn good software into great software.

1. Test-Driven Development (TDD)

Test-Driven Development (TDD) flips the traditional development process on its head. Instead of writing code and then testing it, TDD requires you to write a failing test before you write any production code. This approach, one of the most impactful best practices for software testing, is built on a simple yet powerful cycle: Red, Green, Refactor. First, you write a test that fails (Red). Next, you write the minimum amount of code required to make that test pass (Green). Finally, you clean up and improve the code without changing its behavior (Refactor).

This methodology shifts the emphasis from reactive bug-fixing to proactive design. The result is a robust, self-documenting test suite in which every piece of production code exists to make a test pass. It also encourages developers to think critically about requirements before implementation. Major tech companies have adopted TDD for mission-critical systems. For instance, Amazon uses it to help ensure the reliability of its payment systems, and Netflix applies it across its microservices architecture to maintain stability and enable independent deployments.

How to Implement TDD

To effectively integrate TDD into your workflow, focus on small, incremental steps. Following these actionable tips will help you get started:

- Start with Simple Unit Tests: Begin with the smallest piece of functionality. Focus on one specific behavior and write a test that clearly defines its expected outcome.

- Keep Cycles Short: Aim for Red-Green-Refactor cycles of about 5 to 10 minutes. This rapid feedback loop keeps you focused and prevents overly complex implementations.

- Write Minimal Code: In the "Green" phase, resist the urge to over-engineer. Write only the code necessary to pass the current failing test.

- Refactor with Confidence: With a comprehensive test suite in place, you can refactor aggressively, knowing that your tests will quickly flag any regression in the behavior they cover.
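
Here is a minimal Python sketch that makes the Red-Green-Refactor cycle concrete. The `slugify` function and its tests are hypothetical examples. The tests are written as pytest-style functions with plain `assert` statements, so any runner that collects `test_*` functions can run them.

```python
# Step 1 (Red): write the tests first. Running them before slugify() exists
# fails with a NameError, which proves the tests exercise something real.
def test_lowercases_and_joins_words_with_hyphens():
    assert slugify("Hello World") == "hello-world"


def test_strips_surrounding_whitespace():
    assert slugify("  Testing 2025  ") == "testing-2025"


# Step 2 (Green): write the least code that makes both tests pass.
# Step 3 (Refactor): rename, simplify, and document, re-running the tests
# after every change to confirm the behavior has not changed.
def slugify(title: str) -> str:
    """Turn a title into a lowercase, hyphen-separated slug."""
    # str.split() with no arguments already drops leading/trailing whitespace.
    return "-".join(title.lower().split())
```

In a real cycle you would write and run one test at a time. Both tests appear together here only to keep the example short.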

2. Continuous Integration/Continuous Deployment (CI/CD) Testing

Continuous Integration/Continuous Deployment (CI/CD) Testing automates validation throughout the entire software delivery pipeline. With this practice, tests run automatically every time a developer commits a code change. The frequent, automated feedback catches integration issues and bugs before they escalate, which lets teams deliver high-quality software faster and more reliably.

By embedding testing into the development lifecycle, CI/CD creates a safety net that supports agility and innovation. Companies like Facebook rely on CI/CD to ship thousands of code changes a day, while Spotify uses an extensive automated testing pipeline to maintain the stability of its streaming platform. Similarly, Etsy's robust deployment pipeline includes comprehensive test automation, allowing for frequent and safe updates. This approach is crucial for modern development, especially in microservices architectures where different components must interact seamlessly. For more advanced strategies in these environments, discover more about contract testing on dotmock.com.

How to Implement CI/CD Testing

Integrating testing into your CI/CD pipeline requires a strategic, phased approach. Use these tips to build a resilient and efficient automated testing process:

- Start with Smoke Tests: Begin by adding a small, fast set of tests (smoke tests) to your CI pipeline. These initial checks quickly verify the most critical functionalities of an application build.

- Implement Fail-Fast Mechanisms: Configure your pipeline to stop immediately upon the first test failure. This provides quick feedback to developers and prevents wasting computational resources on a broken build.

- Use Containerization: Employ tools like Docker to create consistent and reproducible test environments. This eliminates the "it works on my machine" problem by ensuring tests run the same way everywhere.

- Monitor and Optimize Test Execution: Continuously track the performance of your test suite. Identify and optimize slow-running tests to keep the feedback loop as short as possible.
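
The fail-fast idea can be sketched as a small Python runner that executes pipeline stages in order and stops at the first non-zero exit code. The stage names and placeholder commands are assumptions. In practice, each command would invoke your real test suites, and many CI services already stop a job by default when a step fails.

```python
import subprocess
import sys

# Hypothetical stages, ordered cheapest-first so a broken build is reported
# as early as possible. The placeholder commands just print and exit 0;
# swap in the commands that run your real test suites.
STAGES = [
    ("smoke", [sys.executable, "-c", "print('smoke tests ok')"]),
    ("unit", [sys.executable, "-c", "print('unit tests ok')"]),
    ("integration", [sys.executable, "-c", "print('integration tests ok')"]),
]


def run_pipeline(stages):
    """Run each (name, command) stage in order and stop at the first failure.

    Returns the name of the failing stage, or None if every stage passed.
    """
    for name, command in stages:
        result = subprocess.run(command)
        if result.returncode != 0:
            print(f"Stage '{name}' failed (exit code {result.returncode}); skipping the rest.")
            return name
        print(f"Stage '{name}' passed.")
    return None


if __name__ == "__main__":
    # A non-zero exit code is what marks the CI job itself as failed.
    if run_pipeline(STAGES) is not None:
        sys.exit(1)
```

Within a single stage, pytest's `-x` (`--exitfirst`) option applies the same principle to individual tests.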

3. Risk-Based Testing

Risk-Based Testing (RBT) is a strategic approach that allocates testing resources to the parts of an application that pose the greatest risk. Instead of aiming for exhaustive testing, which is often impossible, RBT prioritizes test efforts based on the probability of failure and the potential impact of that failure on business objectives. This practical methodology ensures that the most critical functionalities receive the most rigorous attention, making it one of the most efficient best practices for software testing.

This approach is crucial for projects with tight deadlines and limited resources. It enables teams to make informed decisions about where to focus testing efforts for maximum impact. For instance, an e-commerce platform would prioritize testing its checkout and payment processing flows over its "About Us" page. Similarly, a banking application would focus intensely on transaction security and accuracy rather than on a marketing banner display. This targeted strategy, championed by figures like Rex Black and incorporated into the ISTQB certification syllabus, directly minimizes business risk.

How to Implement Risk-Based Testing

Integrating RBT requires a collaborative effort to identify and assess risks before testing begins. Following these actionable tips will help you apply this strategy effectively:

- Involve Business Stakeholders: Collaborate with product managers and business analysts to identify key business risks and critical user journeys.

- Create a Risk Matrix: Develop a matrix to rate features based on their likelihood of failure and business impact. Use this to prioritize testing activities.

- Leverage Historical Data: Analyze past defect reports and production incidents to identify recurring problem areas and inform your risk assessment.

- Continuously Re-evaluate Risks: Risks are not static. Regularly review and update your risk assessment throughout the project lifecycle as new features are added or requirements change.
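
At its core, a risk matrix scores each feature as likelihood multiplied by impact and tests the highest scores first. The Python sketch below assumes 1-to-5 scales, made-up feature ratings, and illustrative tier thresholds. The real numbers should come from your stakeholders and your defect history.

```python
from dataclasses import dataclass


@dataclass
class Feature:
    name: str
    likelihood: int  # 1 (rarely fails) .. 5 (fails often), e.g. from defect history
    impact: int      # 1 (cosmetic) .. 5 (revenue- or security-critical)

    @property
    def risk_score(self) -> int:
        # Classic risk-matrix score: likelihood multiplied by impact.
        return self.likelihood * self.impact


def risk_tier(score: int) -> str:
    # Hypothetical thresholds; agree on real ones with your stakeholders.
    if score >= 15:
        return "high: deep automated and exploratory testing"
    if score >= 8:
        return "medium: core scenarios automated"
    return "low: smoke checks only"


def prioritize(features):
    """Return features sorted from highest to lowest risk."""
    return sorted(features, key=lambda f: f.risk_score, reverse=True)


# Illustrative ratings only.
features = [
    Feature("About Us page", likelihood=1, impact=1),
    Feature("Checkout and payment", likelihood=4, impact=5),
    Feature("Marketing banner", likelihood=2, impact=2),
    Feature("Search", likelihood=3, impact=3),
]

for feature in prioritize(features):
    print(f"{feature.name:<22} score={feature.risk_score:>2}  {risk_tier(feature.risk_score)}")
```

With these sample ratings, checkout and payment scores 20 and lands in the high tier, which matches the e-commerce example above.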

4. Shift-Left Testing

Shift-Left Testing is a foundational principle in modern software development that moves testing activities to earlier stages of the lifecycle. Instead of treating testing as a final phase before release, this approach integrates quality assurance from the very beginning, starting with requirements and design. It is one of the most effective best practices for software testing because it focuses on preventing defects rather than finding them late in the process. By testing earlier and more frequently, teams can resolve issues while they are far cheaper and easier to fix.

This methodology transforms quality from a siloed responsibility into a collective team effort, improving collaboration between developers, testers, and product owners. Major technology leaders have embraced this philosophy to accelerate delivery without sacrificing quality. For example, Microsoft integrated shift-left principles into its Office 365 development to manage continuous updates and maintain high reliability. Similarly, IBM applies early testing integration across its cloud services to ensure robust performance and security from the ground up, proving the model's effectiveness at an enterprise scale. These strategies often involve running tests in parallel with development to maximize efficiency.

How to Implement Shift-Left Testing

To successfully shift testing left, your team must adopt a proactive, quality-first mindset and integrate specific practices into your daily workflow.

- Involve Testers in Requirement Reviews: Bring QA engineers into planning and design meetings to identify ambiguities, potential risks, and non-testable requirements before any code is written.

- Implement Static Analysis Tools: Integrate automated static code analyzers directly into the IDE to catch bugs, security vulnerabilities, and style issues in real time as developers write code.

- Use Behavior-Driven Development (BDD): Write acceptance criteria in a clear, human-readable format (like Gherkin) to create a shared understanding of features and generate executable specifications.

- Establish Early Quality Gates: Set up automated checks in your CI/CD pipeline, such as unit test coverage thresholds and code quality scans, that must pass before code can be merged.
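
An early quality gate is ultimately a pass/fail decision over a few metrics. The hypothetical `quality_gate` function below sketches that decision in Python. The 80% coverage floor and the input values are assumptions; in practice, the numbers would come from your coverage and static-analysis tools.

```python
from dataclasses import dataclass, field


@dataclass
class GateResult:
    passed: bool
    reasons: list[str] = field(default_factory=list)


def quality_gate(line_coverage: float, lint_errors: int, failed_tests: int,
                 min_coverage: float = 80.0) -> GateResult:
    """Decide whether a change may be merged.

    The 80% coverage floor and the zero-lint-error rule are example
    thresholds, not recommendations for every codebase.
    """
    reasons = []
    if failed_tests > 0:
        reasons.append(f"{failed_tests} failing test(s)")
    if line_coverage < min_coverage:
        reasons.append(f"coverage {line_coverage:.1f}% is below {min_coverage:.1f}%")
    if lint_errors > 0:
        reasons.append(f"{lint_errors} static-analysis error(s)")
    # The gate passes only when no blocking reason was recorded.
    return GateResult(passed=not reasons, reasons=reasons)
```

For example, `quality_gate(76.5, lint_errors=2, failed_tests=0)` returns a failing result with two reasons. The pipeline can print those reasons before blocking the merge.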

5. Exploratory Testing

Exploratory testing is a powerful approach that combines learning, test design, and test execution into a simultaneous, interactive process. Unlike scripted testing, which follows predefined test cases, exploratory testing leverages the tester's creativity, intuition, and domain expertise to dynamically investigate software. This methodology, one of the most effective best practices for software testing, excels at uncovering defects that automated scripts and rigid test plans often miss, such as complex usability issues and unexpected edge cases.

Pioneered by testing experts like Cem Kaner and James Bach, this technique champions unscripted investigation to understand the application's true behavior. Tech giants rely heavily on this human-centric approach. For example, Google applies exploratory testing to discover usability flaws in the Chrome browser, while Microsoft has historically used it to improve the user experience of its Windows operating systems. Atlassian also uses structured exploratory sessions to ensure new features in Jira are intuitive and robust before release.

How to Implement Exploratory Testing

Effective exploratory testing is structured, not chaotic. Integrating it successfully requires a framework to guide discovery and capture insights.

- Create Charters to Guide Exploration: Start each session with a clear mission or "charter." For example, a charter might be: "Explore the new checkout process to identify any potential sources of user confusion." This provides focus without being overly restrictive.

- Use Session-Based Test Management (SBTM): Conduct testing in short, time-boxed sessions (e.g., 60-90 minutes). During the session, testers take detailed notes on what they tested, any bugs found, and new ideas for future tests.

- Document Findings Immediately: As you test, document your actions, observations, and any defects in real time. This ensures valuable insights are not lost and can be easily reproduced.

- Combine with Scripted Testing: Exploratory testing should complement, not replace, scripted and automated testing. Use it to explore areas of high risk or new functionality while relying on automation for regression coverage.
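
Session-based test management needs very little tooling: a charter, a time box, and a place to capture notes, bugs, and follow-up ideas as they happen. The `ExploratorySession` class below is a hypothetical minimal record in Python with a 90-minute default time box. Dedicated test-management tools offer richer versions of the same idea.

```python
from dataclasses import dataclass, field
from datetime import datetime, timedelta
from typing import Optional


@dataclass
class ExploratorySession:
    """A minimal session-based test management (SBTM) record."""
    charter: str
    timebox: timedelta = timedelta(minutes=90)
    started_at: datetime = field(default_factory=datetime.now)
    notes: list[str] = field(default_factory=list)
    bugs: list[str] = field(default_factory=list)
    follow_up_ideas: list[str] = field(default_factory=list)

    def note(self, text: str) -> None:
        # Timestamp each observation as it happens so nothing is lost.
        self.notes.append(f"{datetime.now():%H:%M} {text}")

    def time_remaining(self, now: Optional[datetime] = None) -> timedelta:
        if now is None:
            now = datetime.now()
        # Never report negative time once the time box has run out.
        return max(self.timebox - (now - self.started_at), timedelta(0))

    def debrief(self) -> str:
        return (f"Charter: {self.charter}\n"
                f"Notes: {len(self.notes)}, bugs: {len(self.bugs)}, "
                f"ideas for next sessions: {len(self.follow_up_ideas)}")
```

The debrief summary gives the team a quick basis for deciding which charters to run next.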

6. Behavior-Driven Development (BDD)

Behavior-Driven Development (BDD) extends Test-Driven Development by focusing on the behavior of the software from the user's perspective. It fosters collaboration between developers, testers, and business stakeholders by using a common, natural language to describe how the application should behave. This approach, a cornerstone among best practices for software testing, uses a simple "Given-When-Then" format to define acceptance criteria, ensuring everyone has a shared understanding of the requirements before any code is written.

BDD ensures that development efforts are directly tied to business outcomes. It translates abstract requirements into concrete examples, creating executable specifications that validate the system's functionality. The BBC successfully uses BDD to align its large, cross-functional teams on the development of its digital media platforms, ensuring new features meet audience expectations. Similarly, the John Lewis Partnership leverages BDD to build and refine its e-commerce platform, ensuring a seamless and predictable customer journey from browsing to checkout.

How to Implement BDD

Adopting BDD involves a cultural shift towards collaboration and communication. Integrating it successfully relies on creating a shared language and focusing on user outcomes.

- Start with High-Value User Journeys: Begin by defining scenarios for the most critical user paths in your application. This ensures your initial efforts deliver the most significant business impact.

- Keep Scenarios Focused: Each scenario should test one specific behavior. Avoid cramming multiple "When-Then" pairs into a single scenario to maintain clarity and ease of maintenance.

- Involve Business Stakeholders: Actively involve product owners, business analysts, and other non-technical stakeholders in writing and reviewing scenarios. Their input is crucial for defining correct system behavior.

- Automate with BDD Tools: Use frameworks like Cucumber or SpecFlow to turn your Gherkin scenarios into automated tests, creating living documentation of your system that stays up to date.
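
Frameworks like Cucumber bind each Gherkin step to code, and pytest-bdd and behave do the same in Python. To show the structure without a framework, the sketch below keeps one focused scenario's Given-When-Then lines as comments above plain Python. The `Cart` class and the `SAVE10` discount code are hypothetical.

```python
# The scenario as it might appear in a Gherkin .feature file:
#
#   Scenario: Applying a valid discount code at checkout
#     Given a cart containing items worth 100.00
#     When the shopper applies the discount code "SAVE10"
#     Then the order total is 90.00

from decimal import Decimal

DISCOUNT_CODES = {"SAVE10": Decimal("0.10")}  # hypothetical: code -> fraction off


class Cart:
    """Hypothetical stand-in for the application's shopping cart."""

    def __init__(self) -> None:
        self.subtotal = Decimal("0.00")
        self.discount = Decimal("0")

    def add_item(self, price: str) -> None:
        self.subtotal += Decimal(price)

    def apply_code(self, code: str) -> None:
        # Unknown codes simply apply no discount.
        self.discount = DISCOUNT_CODES.get(code, Decimal("0"))

    @property
    def total(self) -> Decimal:
        return (self.subtotal * (1 - self.discount)).quantize(Decimal("0.01"))


def test_applying_a_valid_discount_code_at_checkout():
    # Given a cart containing items worth 100.00
    cart = Cart()
    cart.add_item("60.00")
    cart.add_item("40.00")
    # When the shopper applies the discount code "SAVE10"
    cart.apply_code("SAVE10")
    # Then the order total is 90.00
    assert cart.total == Decimal("90.00")
```

The test contains exactly one When-Then pair, in line with the advice to keep scenarios focused.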

7. Test Automation Pyramid

The Test Automation Pyramid is a strategic framework that guides the allocation of automated tests across different layers of an application. Popularized by thought leaders like Mike Cohn and Martin Fowler, this model prioritizes efficiency by structuring tests in three tiers. The base consists of a large number of fast and inexpensive unit tests. The middle layer contains fewer, slightly slower integration tests, and the top is reserved for a minimal number of slow, brittle, and expensive end-to-end (UI) tests. This approach is a core tenet of effective software testing best practices.

Adopting this hierarchical model helps teams achieve faster feedback, lower maintenance costs, and more reliable test suites. Google, for instance, often suggests a 70/20/10 split as a good first guess, weighting effort heavily toward unit tests to ensure foundational code quality. The ideal shape still depends on architecture: Spotify has described a "testing honeycomb" for its microservices that puts more weight on integration tests. Either way, the goal is to catch most defects early and cheaply, close to the code that introduced them.

How to Implement the Test Automation Pyramid

To apply this framework effectively, your team must strategically balance test types and invest in the right infrastructure. These actionable tips will guide your implementation:

- Follow the 70/20/10 Guideline: As a starting point, aim for roughly 70% unit tests, 20% integration tests, and 10% end-to-end UI tests. This ratio balances coverage and speed; adjust it to fit your architecture.

- Invest in Unit Test Infrastructure: A solid foundation of unit tests is crucial. Ensure your team has the tools and training to write them efficiently and effectively.

- Use Test Doubles and Mocks: Isolate components during unit and integration testing by using mocks, stubs, and fakes. This practice reduces dependencies and speeds up test execution.

- Reserve UI Tests for Critical Journeys: Limit slow end-to-end tests to validating critical user workflows, such as the checkout process or user login. You can learn more about the Test Automation Pyramid and its role in functional testing on dotmock.com.
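
Test doubles are what keep the lower layers of the pyramid fast. This sketch uses Python's standard `unittest.mock.Mock` to stand in for a payment gateway. `CheckoutService` and the gateway's `charge` method are hypothetical names chosen for illustration.

```python
from unittest.mock import Mock


class CheckoutService:
    """Hypothetical service that charges a customer through a payment gateway."""

    def __init__(self, gateway) -> None:
        self.gateway = gateway  # injected, so tests can pass a test double

    def place_order(self, customer_id: str, amount_cents: int) -> str:
        if amount_cents <= 0:
            raise ValueError("amount must be positive")
        response = self.gateway.charge(customer_id=customer_id, amount_cents=amount_cents)
        return "confirmed" if response["status"] == "approved" else "declined"


def test_declined_payment_is_reported_without_a_real_gateway():
    # The mock replaces the real payment API: no network, no sandbox account.
    gateway = Mock()
    gateway.charge.return_value = {"status": "declined"}

    service = CheckoutService(gateway)

    assert service.place_order("cust-42", 1999) == "declined"
    # Verify the interaction, not just the return value.
    gateway.charge.assert_called_once_with(customer_id="cust-42", amount_cents=1999)
```

Because the gateway is injected through the constructor, you can exercise the approved path by changing `return_value`, with no other setup.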

8. Defect Prevention and Root Cause Analysis

Defect Prevention and Root Cause Analysis (RCA) shifts the focus from reactive bug-finding to proactive quality assurance. Instead of just identifying and fixing defects, this approach digs deeper to understand why they occurred in the first place, allowing teams to eliminate the source. This methodology, a cornerstone of mature software testing best practices, involves systematically investigating defect patterns and implementing process changes to prevent their recurrence. The goal is to build quality into the development lifecycle, not just inspect it at the end.

This proactive strategy can significantly reduce long-term development costs and improve overall product stability. Industry leaders have long embraced these principles. For example, Toyota, where the 5 Whys technique originated, applies root cause analysis not just in manufacturing but also in its software development to maintain high reliability. Similarly, Intel integrates these process improvement methodologies to ensure the quality of its complex software systems, preventing costly issues from reaching the market.

How to Implement Defect Prevention and RCA

To effectively integrate this practice, foster a culture where defects are seen as learning opportunities rather than failures. These actionable tips will help you establish a systematic approach:

- Use Proven Techniques: Employ methods like the 5 Whys (asking "why" repeatedly to drill down to the source) and fishbone (Ishikawa) diagrams to visually map potential causes of a problem.

- Establish Defect Review Meetings: Hold regular, blameless meetings to analyze critical or recurring defects. The focus should be on process, not people.

- Track Defect Trends: Monitor metrics like defect density, origin, and type over time. Use this data to identify patterns and pinpoint areas in your process that need improvement.

- Create a Knowledge Repository: Document the findings from your root cause analyses. This creates a valuable resource that helps developers avoid repeating past mistakes.
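
You can start tracking defect trends with a few lines of analysis over an issue-tracker export. The records and component sizes below are made-up sample data. The sketch computes defect density (defects per thousand lines of code, or KLOC) for each component and surfaces the most frequent root causes as candidates for the next defect review meeting.

```python
from collections import Counter

# Hypothetical defect records exported from an issue tracker.
defects = [
    {"id": 101, "component": "checkout", "root_cause": "missing input validation"},
    {"id": 102, "component": "search", "root_cause": "unclear requirement"},
    {"id": 103, "component": "checkout", "root_cause": "missing input validation"},
    {"id": 104, "component": "profile", "root_cause": "race condition"},
    {"id": 105, "component": "checkout", "root_cause": "unclear requirement"},
    {"id": 106, "component": "search", "root_cause": "missing input validation"},
]

# Hypothetical component sizes in thousands of lines of code (KLOC).
kloc = {"checkout": 12.0, "search": 8.0, "profile": 5.0}


def defect_density(defects, kloc):
    """Defects per KLOC for each component."""
    counts = Counter(d["component"] for d in defects)
    return {component: counts[component] / size for component, size in kloc.items()}


def top_root_causes(defects, n=2):
    """The most frequently recurring root causes, i.e. the best RCA candidates."""
    return Counter(d["root_cause"] for d in defects).most_common(n)
```

Re-running the same analysis each sprint turns a one-off snapshot into a trend you can act on.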

9. Cross-Browser and Cross-Platform Testing

Cross-Browser and Cross-Platform Testing is a comprehensive strategy that ensures software functions consistently across different browsers, operating systems, and devices. This practice directly addresses the fragmented digital landscape, validating a uniform user experience regardless of how a user accesses the application. It’s an essential part of a modern quality assurance process, preventing functionality gaps and visual inconsistencies that can alienate segments of your user base.

This methodology is crucial for maintaining brand reputation and reaching the widest possible audience. For example, Netflix rigorously tests its streaming platform across a wide range of smart TVs, mobile devices, and browsers to deliver a seamless viewing experience for everyone. Similarly, PayPal validates its payment systems across global device variations to ensure reliability and trust. These efforts highlight why cross-compatibility is one of the most critical best practices for software testing today.

How to Implement Cross-Browser and Cross-Platform Testing

To effectively implement this strategy without getting overwhelmed, focus on a prioritized and automated approach. Following these actionable tips will help you get started:

- Prioritize Based on Analytics: Use user analytics and market share data to identify the most popular browsers, devices, and operating systems among your target audience. Focus your primary testing efforts there.

- Create a Testing Matrix: Develop a clear device and browser compatibility matrix. This document should outline which combinations will be tested manually, which will be automated, and which will receive lower-priority spot checks.

- Leverage Cloud-Based Platforms: Use services like BrowserStack or Sauce Labs to access a vast array of real and virtual devices and browsers without maintaining a physical lab. This approach provides scalability and cost-efficiency.

- Automate Compatibility Tests: Automate repetitive compatibility checks for core functionalities using frameworks like Selenium or Cypress. This frees up manual testers to focus on exploratory testing and complex user scenarios.
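
One way to turn analytics into a testing matrix is to rank browser and OS combinations by traffic and assign tiers by cumulative share. The traffic figures and the 80% and 95% cut-offs below are illustrative assumptions, not recommendations.

```python
# Hypothetical share of sessions per (browser, OS) combination, e.g. exported
# from your analytics tool. The numbers are illustrative only.
traffic_share = {
    ("Chrome", "Windows"): 0.38,
    ("Safari", "iOS"): 0.22,
    ("Chrome", "Android"): 0.18,
    ("Edge", "Windows"): 0.08,
    ("Safari", "macOS"): 0.07,
    ("Firefox", "Windows"): 0.04,
    ("Samsung Internet", "Android"): 0.03,
}


def build_matrix(shares, automate_until=0.80, manual_until=0.95):
    """Assign each combination a testing tier by cumulative traffic share.

    Combinations are taken from most to least popular. Those needed to reach
    `automate_until` of traffic get automated tests, the next ones up to
    `manual_until` get manual passes, and the long tail gets spot checks.
    """
    matrix = {}
    covered = 0.0
    for combo, share in sorted(shares.items(), key=lambda item: item[1], reverse=True):
        if covered < automate_until:
            tier = "automated"
        elif covered < manual_until:
            tier = "manual"
        else:
            tier = "spot check"
        matrix[combo] = tier
        covered += share
    return matrix
```

With these sample numbers, the four most popular combinations (86% of traffic) get automated runs. Safari on macOS and Firefox on Windows get manual passes, and Samsung Internet gets spot checks.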

Best Practices Testing Methods Comparison

| Testing Methodology | Implementation Complexity (🔄) | Resource Requirements (⚡) | Expected Outcomes (📊) | Ideal Use Cases (💡) | Key Advantages (⭐) |
|---|---|---|---|---|---|
| Test-Driven Development (TDD) | Medium 🔄 (requires discipline) | Moderate ⚡ (dev time upfront) | Higher code quality, reduced debugging 📊 | Critical systems, new features, complex code | Improved quality, better design, living docs ⭐ |
| Continuous Integration/Deployment (CI/CD) Testing | High 🔄 (setup and maintenance) | High ⚡ (automation infra & tools) | Rapid feedback, faster releases 📊 | Frequent code commits, large teams | Early bug detection, faster time-to-market ⭐ |
| Risk-Based Testing | Medium 🔄 (analysis heavy) | Low-Moderate ⚡ (planning focused) | Focused testing on critical risks 📊 | Limited resources, risk-sensitive projects | Resource optimization, business alignment ⭐ |
| Shift-Left Testing | Medium-High 🔄 (culture change) | Moderate ⚡ (early QA involvement) | Reduced defect cost, shorter cycles 📊 | Agile teams, DevOps, quality-critical projects | Early defect detection, improved collaboration ⭐ |
| Exploratory Testing | Low-Medium 🔄 (skill-dependent) | Low ⚡ (human-centric approach) | Finds unexpected, usability issues 📊 | Usability, complex UI, changing requirements | Human intuition, adaptability, minimal docs ⭐ |
| Behavior-Driven Development (BDD) | Medium 🔄 (requires collaboration) | Moderate ⚡ (scenario writing/automation) | Clear requirements, reduced ambiguity 📊 | Business-driven projects, stakeholder alignment | Shared understanding, living documentation ⭐ |
| Test Automation Pyramid | High 🔄 (discipline and balance) | High ⚡ (automation infrastructure) | Fast feedback, cost-effective coverage 📊 | Large test suites, automated environments | Efficient, reliable testing layers ⭐ |
| Defect Prevention & Root Cause Analysis | Medium-High 🔄 (process driven) | Moderate ⚡ (time and analysis) | Long-term defect reduction, process improvement 📊 | Quality-focused orgs, mature processes | Sustained quality, learning culture ⭐ |
| Cross-Browser & Cross-Platform Testing | High 🔄 (complex environments) | High ⚡ (infrastructure & tools) | Broad compatibility, user satisfaction 📊 | Web apps, multi-device/platform targets | Wider reach, reduced platform issues ⭐ |

Building a Culture of Quality

The journey through the core tenets of modern software testing reveals a powerful, unifying theme: quality is not an afterthought, but a foundational pillar of the entire development lifecycle. The best practices for software testing we've explored, from Test-Driven Development (TDD) and Behavior-Driven Development (BDD) to the strategic implementation of the Test Automation Pyramid, are more than just technical processes. They represent a fundamental cultural shift towards shared ownership and proactive quality assurance.

Moving beyond siloed testing phases, the principles of Shift-Left Testing and Continuous Integration/Continuous Deployment (CI/CD) empower every team member, from developers to DevOps engineers, to become a champion of quality. This collaborative approach transforms testing from a final gatekeeper into an ongoing, integrated activity. The goal is no longer just to find bugs but to prevent them in the first place, a mission reinforced by robust defect prevention and root cause analysis strategies.

From Practice to Culture: Your Actionable Next Steps

Adopting these methodologies requires a concerted effort and a clear roadmap. To transition from theory to practice, consider these immediate steps:

- Start Small and Iterate: Don't attempt to implement all nine practices at once. Choose one, like introducing exploratory testing sessions for a specific feature or applying risk-based testing to prioritize your next regression suite.

- Invest in Education and Alignment: Ensure everyone understands the "why" behind each practice. A developer who grasps the value of BDD is more likely to write effective feature files. Clearly defining QA team roles and responsibilities is crucial for fostering this robust quality culture and ensuring everyone knows how they contribute.

- Empower with the Right Tools: Practices stick when efficient tools support them. For teams developing against APIs, a common bottleneck is waiting for backend services to be ready. Mock APIs remove that wait by letting teams test against simulated endpoints, including failure and edge-case responses.

Ultimately, mastering these best practices for software testing is about building resilient, high-performing teams that consistently deliver exceptional products. Embedding quality into your team’s DNA creates a sustainable framework for innovation, shortens time-to-market, and produces software that meets user expectations. Beyond better code, this commitment builds customer trust, strengthens brand reputation, and drives business success. The result is a development ecosystem where quality is everyone’s responsibility, leading to more robust applications and a more confident, efficient team.

Ready to eliminate API dependencies and accelerate your testing cycles? See how dotMock can provide your team with instant, zero-configuration mock APIs to test every edge case and failure scenario without waiting. Try dotMock today and start building a true culture of quality.