API Rate Limit: A Developer's Survival Guide

Think of an API rate limit as the friendly bouncer at a popular nightclub. It’s a control system that dictates how many requests a client can send to a server in a specific window of time. The goal isn't to be restrictive; it's to make sure the venue doesn't get so overcrowded that nobody can have a good time. This simple rule is absolutely essential for keeping digital services stable and fair for everyone.

What Is An API Rate Limit And Why It Matters

At its heart, an Application Programming Interface (API) acts like a messenger. It takes a request from an application, delivers it to the server, and brings back the response. Now, imagine thousands—or even millions—of applications all sending messages at once. Without some form of traffic control, the server would quickly get overwhelmed, leading to sluggish performance or, worse, a complete crash.

An API rate limit is that traffic management system. It’s not a penalty; it’s a necessary safeguard. It establishes a clear, acceptable rate of requests from a single source, whether that's a specific user, an IP address, or an application key.

When a client crosses this line, the server gently pushes back, typically by sending a 429 Too Many Requests status code. It’s a clear signal: "Hey, you need to slow down a bit."

The Core Purpose of Rate Limiting

So, why is managing this flow of requests so critical? The reasons go far beyond just preventing a server from falling over. A smart rate-limiting strategy is the bedrock of any secure, dependable, and scalable service. Without one, an API is left wide open to everything from accidental glitches to deliberate attacks.

This table breaks down the main reasons why API providers put rate limits in place.

Key Reasons for Implementing API Rate Limits

| Reason | Benefit for the API Provider | Benefit for the API Consumer |
|---|---|---|
| Ensure Fair Usage | Prevents resource monopolization, guaranteeing a consistent service level for all users. | Provides a predictable and reliable API experience without performance drops. |
| Prevent System Overload | Protects infrastructure from traffic surges, preventing slowdowns and costly downtime. | The service remains available and responsive, even during peak usage periods. |
| Bolster Security | Acts as a first line of defense against DoS attacks, brute-force attempts, and web scraping. | Protects user data and application integrity from common cyber threats. |
| Manage Operational Costs | Controls resource consumption (CPU, memory, bandwidth), leading to predictable infrastructure spending. | Often results in more transparent and fair pricing models based on usage tiers. |

By setting these clear boundaries, providers create a predictable and stable environment. This reliability is just as crucial for the developers building on top of the API as it is for the company maintaining it.

Ultimately, an API rate limit isn't a barrier. It’s a vital piece of a well-designed system that balances open access with long-term sustainability. It ensures the digital services we all depend on stay responsive, secure, and available. To get a better feel for this, it helps to understand the relationship between APIs and endpoints, which are the building blocks of these digital conversations.

A Look at Common Rate Limiting Algorithms

When an API provider needs to manage traffic, they don't just pull a random number out of a hat. They rely on specific strategies, or algorithms, each with its own way of counting and capping requests. Think of it like a bouncer at a club—some let people in one-by-one, some manage a queue, and others have a guest list.

Understanding these core algorithms is crucial. It helps you anticipate how an API will behave under pressure and even gives you the insight to choose the right strategy if you're building your own service. Let's break down the most common methods to see what makes them tick.

Token Bucket

Imagine you're given a bucket that can hold 100 tokens. This bucket represents your "burst capacity"—the total number of requests you can fire off in a short burst. Every API call you make costs one token.

At the same time, new tokens are being added back into the bucket at a steady, predictable pace, say one token every second. If your bucket is already full, any new tokens just spill over and are lost. As long as you have at least one token, your API call goes through. If the bucket is empty, you have to wait for a new token to arrive.

This approach is fantastic for handling unpredictable, "bursty" traffic. It lets a client make a flurry of requests when needed but still enforces a reasonable average rate over the long haul.

The Token Bucket algorithm shines when traffic patterns are spiky. Think of an e-commerce site during a flash sale, a social media platform when a post goes viral, or a streaming service during prime time.

Its flexibility is why it’s a go-to choice for so many modern APIs.
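To make the mechanics concrete, here is a minimal sketch of a token bucket in Python. The class name, the parameter names, and the injectable `clock` are illustrative assumptions, not any particular provider's implementation.

```python
import time


class TokenBucket:
    """Holds up to `capacity` tokens, refilled at `refill_rate` tokens per second."""

    def __init__(self, capacity, refill_rate, clock=time.monotonic):
        self.capacity = capacity
        self.refill_rate = refill_rate
        self.clock = clock              # injectable so the logic can be tested without sleeping
        self.tokens = float(capacity)   # the bucket starts full
        self.last_refill = clock()

    def allow(self):
        """Spend one token if one is available; return True when the call may proceed."""
        now = self.clock()
        elapsed = now - self.last_refill
        # Add the tokens earned since the last check; anything above capacity spills over.
        self.tokens = min(self.capacity, self.tokens + elapsed * self.refill_rate)
        self.last_refill = now
        if self.tokens >= 1:
            self.tokens -= 1
            return True
        return False
```

With `TokenBucket(capacity=100, refill_rate=1.0)`, a client could fire 100 calls back to back and would then be held to roughly one call per second until the bucket refills.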

Leaky Bucket

Now, let's switch analogies. Picture a bucket with a small hole in the bottom. API requests are like water being poured into it. It doesn't matter how fast you pour the water in; it can only leak out of the hole at a slow, constant rate.

If requests flood in faster than the bucket can drain, they start to queue up. If the queue gets too long and the bucket overflows, any new requests are simply dropped. This algorithm is all about smoothing out erratic bursts of requests into a perfectly consistent and predictable stream for the server.

Unlike the Token Bucket, the Leaky Bucket algorithm doesn’t accommodate bursts. It’s a strict enforcer of a constant output rate, making it perfect for services that need an incredibly stable load on their backend systems.
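The queue-and-drain behavior can be sketched as follows. This is a simplified, single-threaded illustration; the class, its method names, and the idea of a worker calling `drain()` periodically are assumptions made for the example.

```python
import time
from collections import deque


class LeakyBucket:
    """Queues up to `capacity` requests and releases them at `leak_rate` per second."""

    def __init__(self, capacity, leak_rate, clock=time.monotonic):
        self.capacity = capacity
        self.leak_rate = leak_rate
        self.clock = clock
        self.queue = deque()
        self.last_leak = clock()

    def submit(self, request):
        """Queue a request; return False if the bucket is full and the request is dropped."""
        if not self.queue:
            # Idle time earns no credit: the leak timer restarts when work arrives.
            self.last_leak = self.clock()
        if len(self.queue) >= self.capacity:
            return False
        self.queue.append(request)
        return True

    def drain(self):
        """Return the queued requests that are due for processing right now."""
        due = int((self.clock() - self.last_leak) * self.leak_rate)
        if due <= 0:
            return []
        self.last_leak += due / self.leak_rate
        return [self.queue.popleft() for _ in range(min(due, len(self.queue)))]
```

However fast `submit()` is called, `drain()` never hands back more than `leak_rate` requests per second of elapsed time, which is the smoothing effect described above.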

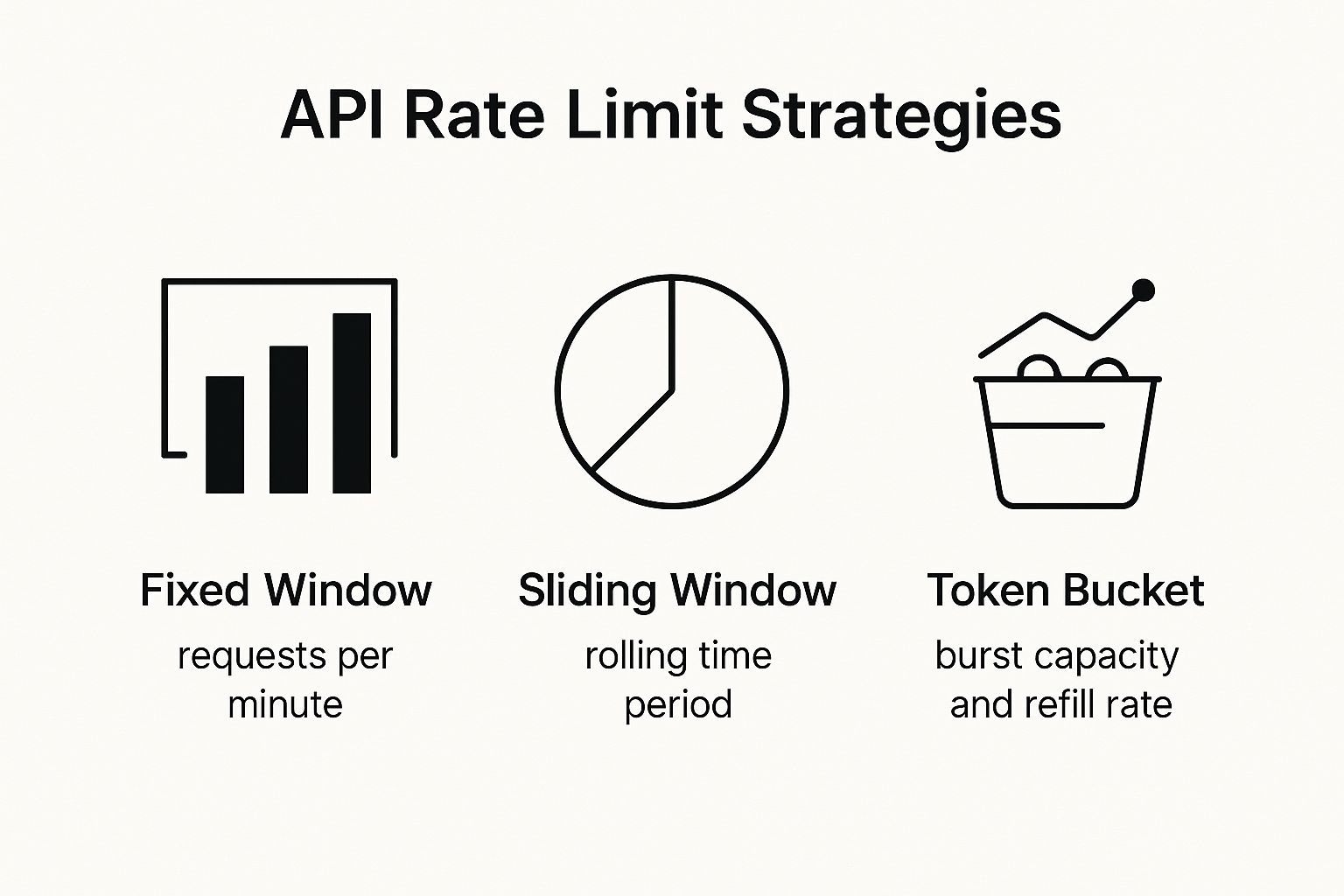

The infographic below helps visualize the differences between the Token Bucket, Fixed Window, and Sliding Window approaches.

The takeaway here is simple: Fixed Window is easy to implement but can be cheated. Sliding Window is fairer. And Token Bucket offers the best of both worlds, handling bursts while maintaining control.

Fixed Window Counter

This is probably the most straightforward rate-limiting method to grasp. The system simply sets up a counter for a fixed period—let’s say one hour—and allows a certain number of requests within that window, like 1,000 requests per hour.

When a request arrives, the system checks the counter. If it’s under 1,000, the request is approved and the counter goes up by one. If it’s already at 1,000, the request is denied. When the clock strikes the next hour, the counter resets to zero. Simple.

But there’s a big problem: bursts at the window boundary. A user could burn through all 1,000 requests in the last minute of the hour, then immediately make another 1,000 requests in the first minute of the next hour. That is 2,000 requests in about two minutes, double the intended limit, and a concentrated spike like that can easily overwhelm a server.
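A minimal sketch of a fixed window counter shows both the simplicity and the weakness at the window edge. The class and parameter names are illustrative, and the clock is injectable so the edge case can be reproduced without waiting an hour.

```python
import time


class FixedWindowCounter:
    """Allows `limit` requests per fixed window of `window_seconds`."""

    def __init__(self, limit, window_seconds, clock=time.time):
        self.limit = limit
        self.window_seconds = window_seconds
        self.clock = clock
        self.current_window = None
        self.count = 0

    def allow(self):
        window = int(self.clock() // self.window_seconds)
        if window != self.current_window:
            # A new window has started, so the counter resets to zero.
            self.current_window = window
            self.count = 0
        if self.count < self.limit:
            self.count += 1
            return True
        return False


# Reproduce the edge case: 1,000 calls one second before the hour, 1,000 right after it.
now = [3599.0]
limiter = FixedWindowCounter(limit=1000, window_seconds=3600, clock=lambda: now[0])
before = sum(limiter.allow() for _ in range(1000))
now[0] = 3600.0
after = sum(limiter.allow() for _ in range(1000))
print(before + after)  # 2000 requests accepted within about one second
```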

Sliding Window Log

To fix the Fixed Window's glaring issue, we have the Sliding Window Log. Instead of just one counter, this algorithm maintains a log of timestamps for every single request from a user.

When a new request comes in, the system looks at its log and throws out any timestamps older than the current time window (e.g., anything from more than 60 seconds ago). It then just counts the remaining timestamps. If that number is below the limit, the new request is accepted, and its timestamp is added to the log. This method delivers a much more accurate and fair rate limit.
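Those steps translate almost line for line into code. The sketch below is one possible in-memory version for a single client; the names are illustrative, and a real service would typically keep one log per user in a shared store.

```python
import time
from collections import deque


class SlidingWindowLog:
    """Allows `limit` requests in any rolling period of `window_seconds`."""

    def __init__(self, limit, window_seconds, clock=time.monotonic):
        self.limit = limit
        self.window_seconds = window_seconds
        self.clock = clock
        self.timestamps = deque()   # one entry per accepted request, oldest first

    def allow(self):
        now = self.clock()
        cutoff = now - self.window_seconds
        # Throw out timestamps that have aged out of the rolling window.
        while self.timestamps and self.timestamps[0] <= cutoff:
            self.timestamps.popleft()
        if len(self.timestamps) < self.limit:
            self.timestamps.append(now)
            return True
        return False
```

The memory cost is visible here: the deque grows to `limit` entries per client, which is the price paid for the extra accuracy.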

Sliding Window Counter

The Sliding Window Counter is a clever hybrid. It blends the memory efficiency of the Fixed Window with the improved fairness of the Sliding Window Log. It works by estimating the request rate from two counters: the count for the current window, plus the count for the previous window weighted by how much of that window still overlaps the rolling period.

This balanced approach makes it a strong choice for many large-scale systems that need a good mix of performance and accuracy. You’ll see this in action with high-stakes services like financial APIs, where managing high volumes of time-sensitive data is critical. For instance, some brokerage APIs limit users to 120 requests per minute and provide real-time feedback through HTTP headers like X-Ratelimit-Allowed and X-Ratelimit-Used. You can see how these systems work by digging into their developer documentation.
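One common way to compute that estimate is to weight the previous window's count by the fraction of it that still falls inside the rolling period. The sketch below assumes that formula; the class and its names are illustrative and not taken from any specific API.

```python
import time


class SlidingWindowCounter:
    """Approximates a rolling window using only two counters."""

    def __init__(self, limit, window_seconds, clock=time.time):
        self.limit = limit
        self.window_seconds = window_seconds
        self.clock = clock
        self.current_window = int(clock() // window_seconds)
        self.current_count = 0
        self.previous_count = 0

    def allow(self):
        now = self.clock()
        window = int(now // self.window_seconds)
        if window != self.current_window:
            # Roll forward. The old count only matters if it belongs to the window just before.
            is_adjacent = window == self.current_window + 1
            self.previous_count = self.current_count if is_adjacent else 0
            self.current_window = window
            self.current_count = 0
        elapsed_fraction = (now % self.window_seconds) / self.window_seconds
        # The previous window counts less and less as the current one progresses.
        estimated = self.previous_count * (1 - elapsed_fraction) + self.current_count
        if estimated < self.limit:
            self.current_count += 1
            return True
        return False
```

Halfway through a window, for example, half of the previous window's requests still count against the limit, so a client that maxed out the last window only gets half its quota back at that point.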

Comparison of Rate Limiting Algorithms

To make sense of these different strategies, it helps to see them side-by-side. Each has its place, and the "best" one really depends on the specific needs of the API and its users.

| Algorithm | How It Works | Pros | Cons |
|---|---|---|---|
| Token Bucket | A bucket is refilled with tokens at a fixed rate. Each request consumes one token. Allows for bursts up to the bucket's capacity. | Handles bursty traffic well; very flexible. | Can be slightly more complex to implement than simpler counters. |
| Leaky Bucket | Requests are processed at a steady, fixed rate, like water leaking from a bucket. Excess requests are queued or dropped. | Smooths traffic into a predictable stream; protects backend services from load spikes. | Bursts of traffic are delayed or dropped, which may not be ideal for all applications. |
| Fixed Window Counter | Counts requests within a static time window (e.g., per hour). The counter resets at the start of each new window. | Simple to implement and understand; low memory usage. | Vulnerable to traffic spikes at the window's edge (the boundary burst problem). |
| Sliding Window Log | Keeps a log of timestamps for each request. The rate is calculated over a rolling time window. | Highly accurate and fair; solves the edge-spike issue of the Fixed Window. | Can be memory-intensive as it needs to store a timestamp for every request. |
| Sliding Window Counter | A hybrid that estimates the rate based on the previous and current window counters. | A good balance of accuracy and performance; less memory-heavy than the Sliding Window Log. | More complex to implement than a Fixed Window and can be slightly less accurate than a log. |

Ultimately, choosing the right algorithm involves a trade-off between implementation complexity, performance overhead, and the kind of traffic behavior you want to encourage or prevent.

How API Providers Implement Rate Limiting

Putting an effective API rate limit in place is a real balancing act. On one hand, API providers need to shield their infrastructure from getting swamped. On the other, they have to deliver a smooth, reliable experience for the developers using their service. The whole process really kicks off with one simple question: how do you know who’s making a request?

That single decision sets the stage for the entire rate-limiting framework. There’s no magic bullet here; the best approach depends entirely on the API's purpose, security requirements, and even its business model.

Choosing an Identification Method

Before a server can count a single request, it has to know who to chalk it up to. Most providers land on one of three common ways to track usage.

- By IP Address: This is the most straightforward method. Since it doesn’t require any authentication, it's often used for public APIs where anyone can make a call. But it's also the least precise: multiple users behind a corporate firewall all share the same IP, and a single bad actor can easily rotate through different ones.

- By User Account or ID: When a user logs in, their authenticated session becomes a perfect identifier for tracking API calls. This is a huge step up in accuracy from IP tracking, ensuring limits are tied to a specific person, not just a machine.

- By API Key: This is the gold standard for most modern services. Each developer or application gets a unique key that has to be sent with every single request. This gives providers incredible control, letting them set different limits for different apps and instantly revoke access if a key is ever misused.
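In practice a service often supports more than one of these at once and falls back from the most specific identifier to the least. The helper below is a hypothetical sketch of that fallback; the function name and the `X-API-Key` header name are assumptions for illustration.

```python
def rate_limit_key(headers, user_id=None, remote_ip=None):
    """Pick the most specific identity available for counting a request."""
    api_key = headers.get("X-API-Key")   # hypothetical header name
    if api_key:
        return f"key:{api_key}"
    if user_id is not None:
        return f"user:{user_id}"
    # Least precise fallback: many users can share one address.
    return f"ip:{remote_ip}"
```

The returned string can then serve as the lookup key for whichever counter or bucket tracks that client.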

Selecting the Right Algorithm

Once you know who is making the calls, the next puzzle piece is choosing the logic to enforce the rules. As we’ve covered, different algorithms like Token Bucket or Sliding Window Counter come with their own trade-offs in performance, precision, and complexity.

Think about a social media API. It might lean toward a Token Bucket approach. This lets an application handle a sudden burst of activity—like a user uploading a whole album of photos at once—while still keeping the average rate fair over time.

Now, contrast that with a financial data API streaming real-time stock quotes. That service would likely go for a much stricter Sliding Window algorithm. This enforces the limit accurately over any rolling period and rules out bursts at window boundaries, which is critical for protecting its highly sensitive and time-critical backend systems. It’s all about matching the algorithm to the real-world usage patterns you expect.

The Importance of Clear Communication

Let's be honest: a rate limit that developers don't know about is just a bug waiting to happen. Clear, upfront communication isn't just a nice-to-have; it's essential for a good developer experience.

The most user-friendly APIs don’t just enforce limits; they actively help developers stay within them. This transparency builds trust and prevents applications from breaking unexpectedly.

This kind of communication really happens in two key places:

- Thorough Documentation: API docs must spell out the limits for every endpoint in plain English. That means the number of requests allowed, the time window (per second, minute, or hour), and how different pricing tiers might change those limits.

- HTTP Response Headers: This is where developers get real-time feedback. A well-designed API sends back specific headers with every single response to tell a client exactly where they stand. You'll often see headers like X-RateLimit-Limit (the total requests allowed), X-RateLimit-Remaining (how many you have left), and X-RateLimit-Reset (the time when your quota refreshes).
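On the provider side, producing those headers can be as simple as the sketch below. The function name is illustrative, and it assumes the reset time is reported as a Unix epoch timestamp, which is common but not universal.

```python
def rate_limit_headers(limit, used, reset_epoch):
    """Build the informational headers to attach to a response."""
    remaining = max(0, limit - used)   # never report a negative allowance
    return {
        "X-RateLimit-Limit": str(limit),
        "X-RateLimit-Remaining": str(remaining),
        "X-RateLimit-Reset": str(int(reset_epoch)),
    }
```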

Exploring Advanced Strategies

Beyond the basics, many providers get creative with more sophisticated techniques to manage access and tie rate limits directly to their business goals. These strategies offer a lot more flexibility and help the system scale.

A popular one is tiered rate limiting, which links API access directly to a subscription plan.

- Free Tier: Offers basic access with a low request limit. It’s perfect for hobbyists or developers just kicking the tires.

- Pro Tier: Provides a much higher limit for growing apps that are starting to see real traffic.

- Enterprise Tier: Often includes custom, high-volume limits and dedicated support for massive-scale operations.

Another powerful strategy is dynamic rate limiting. Instead of a static number, the limits can actually adjust on the fly based on current server load. If the system is getting hammered, the limits might temporarily tighten to keep things stable for everyone. On the flip side, during quiet periods, the limits could loosen up. This adaptive approach helps ensure the API stays resilient and performant, no matter what’s thrown at it.
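Here is a rough sketch of how tiered and dynamic limits might combine. Every number in it is invented for illustration (the tier limits, the load thresholds, and the scaling factors), and `server_load` is assumed to be a value between 0.0 (idle) and 1.0 (saturated) supplied by your own monitoring.

```python
# Requests per minute for each plan. These figures are made up for the example.
TIER_LIMITS = {"free": 60, "pro": 600, "enterprise": 6000}


def effective_limit(tier, server_load):
    """Scale a tier's base limit according to the current server load."""
    base = TIER_LIMITS[tier]
    if server_load >= 0.9:
        factor = 0.5   # under heavy load, tighten limits for everyone
    elif server_load <= 0.3:
        factor = 1.5   # during quiet periods, loosen them
    else:
        factor = 1.0
    return int(base * factor)
```

A pro-tier client would see 600 requests per minute under normal load, 300 when the system is under pressure, and 900 when it is quiet.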

Best Practices For Handling Rate Limits As A Client

Knowing an API rate limit exists is one thing. Actually navigating it without your application crashing is another skill entirely. As a client consuming an API, your goal should be to act like a "good citizen"—making efficient, respectful requests that prevent errors and keep everything running smoothly. This means you need to stop waiting for errors to happen and start designing your system to avoid them in the first place.

Think of the API documentation as your map. Before you write a single line of code, get familiar with the rules of the road. Any well-documented API will tell you its limits upfront, giving you the information you need to build your application's logic correctly from day one.

Read Rate Limit Headers Religiously

The most powerful tool an API provider gives you is real-time feedback, and it comes in the form of HTTP headers. After every single API call, your application should be inspecting the response for headers that tell you exactly where you stand with your current quota.

You'll usually see a few common ones:

- X-RateLimit-Limit: The maximum number of requests allowed in the current time window.

- X-RateLimit-Remaining: How many requests you have left before you hit the wall.

- X-RateLimit-Reset: The time (often a UTC epoch timestamp) when your quota will be refilled.

By reading these headers on every response, your application can intelligently slow itself down as it gets closer to the limit. This technique, known as client-side throttling, is way better than blindly firing off requests until you get smacked with a 429 Too Many Requests error.
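One simple way to throttle on the client is to spread the remaining quota evenly over the time left in the window. The sketch below assumes the headers arrive as a plain dict with exactly these names and that the reset value is a Unix epoch timestamp; check your API's documentation, since both vary between providers.

```python
import time


def throttle_delay(headers, now=None):
    """Seconds to pause before the next call so the remaining quota lasts until the reset."""
    now = time.time() if now is None else now
    remaining = int(headers.get("X-RateLimit-Remaining", 1))
    reset_at = float(headers.get("X-RateLimit-Reset", now))
    seconds_left = max(0.0, reset_at - now)
    if remaining <= 0:
        return seconds_left   # quota exhausted: wait for the window to reset
    return seconds_left / remaining
```

Calling `time.sleep(throttle_delay(response_headers))` between requests slows the client down gradually as the quota runs low instead of stopping abruptly at zero.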

Implement Caching To Reduce Redundant Calls

One of the fastest ways to burn through your request quota is by asking for the same information over and over again. If the data you’re fetching doesn’t change that often, caching is your best friend. Storing responses locally, even for a short period, can slash your API call volume.

For instance, if you're pulling a user's profile information that only gets updated once in a while, you could cache it for five minutes. Any request for that profile in the next five minutes can be served straight from your local cache instead of making another network trip. This doesn't just preserve your rate limit quota; it makes your application feel snappier and more responsive to the user.
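A small time-based cache is enough to get this benefit. The following is a minimal in-memory sketch; the class name and the `fetch` callback are illustrative, and a production setup might use a shared cache instead.

```python
import time


class TTLCache:
    """Caches values for `ttl_seconds` and refetches them once they expire."""

    def __init__(self, ttl_seconds, clock=time.monotonic):
        self.ttl = ttl_seconds
        self.clock = clock
        self._store = {}   # key -> (expires_at, value)

    def get_or_fetch(self, key, fetch):
        """Return the cached value for `key`, calling `fetch()` only when it is missing or stale."""
        now = self.clock()
        entry = self._store.get(key)
        if entry is not None and now < entry[0]:
            return entry[1]            # still fresh: no API call needed
        value = fetch()                # this is the only place a real request happens
        self._store[key] = (now + self.ttl, value)
        return value
```

With `TTLCache(ttl_seconds=300)`, repeated lookups of the same profile within five minutes cost exactly one API call.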

Use Exponential Backoff With Jitter For Retries

No matter how carefully you plan, you're going to hit a rate limit eventually. When you get that 429 error, your first instinct might be to retry the request immediately. Don't do it. That's a classic mistake that can create a vicious cycle, keeping you locked out even longer.

The gold standard for handling retries is exponential backoff with jitter. Instead of retrying after a fixed delay, you progressively increase the wait time between each failed attempt. You also add a small, random delay (the "jitter") to prevent multiple clients from creating a "thundering herd" and hammering the server all at once.

A simple retry sequence looks like this:

- First failure: Wait 1 second + a few random milliseconds.

- Second failure: Wait 2 seconds + a few random milliseconds.

- Third failure: Wait 4 seconds + a few random milliseconds.

- ...and so on, up to a reasonable maximum.

This strategy gives the API the breathing room it needs while dramatically increasing your chances of a successful retry. It's a foundational technique for building resilient, well-behaved API clients.
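The retry sequence above can be sketched in a few lines. The `RateLimited` exception, the function names, and the default values are assumptions for the example; `request` stands for any callable of yours that raises `RateLimited` when the API answers with a 429.

```python
import random
import time


class RateLimited(Exception):
    """Raised by the caller-supplied function when the API answers 429."""


def backoff_delays(max_retries, base=1.0, cap=60.0, jitter=0.25, rng=random.random):
    """Yield waits of base * 2**attempt (capped at `cap`) plus up to `jitter` seconds of randomness."""
    for attempt in range(max_retries):
        yield min(cap, base * 2 ** attempt) + rng() * jitter


def call_with_retries(request, max_retries=5, sleep=time.sleep, **backoff):
    """Call `request()`, backing off after each RateLimited error."""
    for delay in backoff_delays(max_retries, **backoff):
        try:
            return request()
        except RateLimited:
            sleep(delay)
    return request()   # final attempt; any error now propagates to the caller
```

With the defaults, the waits are roughly 1, 2, 4, 8, and 16 seconds, each with up to a quarter of a second of jitter added.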

Optimize Your API Requests

Finally, pay close attention to what and how you ask for data. Stop fetching massive, unfiltered datasets when all you need is one or two pieces of information. If the API offers them, use parameters for filtering, pagination, and field selection. Keep in mind that some APIs also cap concurrency, especially for historical data: Interactive Brokers’ TWS API, for instance, once limited clients to 50 simultaneous historical data requests to ensure server stability.
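As an illustration of filtering, pagination, and field selection, here is a small helper that builds a narrow query. The parameter names (`fields`, `page`, `per_page`) and the example URL are hypothetical; real APIs each define their own, so treat this as a pattern rather than a recipe.

```python
from urllib.parse import urlencode


def build_query(base_url, fields=None, filters=None, page=1, per_page=50):
    """Build a URL that asks only for the rows and fields that are actually needed."""
    params = dict(filters or {})
    if fields:
        params["fields"] = ",".join(fields)   # hypothetical field-selection parameter
    params["page"] = page
    params["per_page"] = per_page
    return f"{base_url}?{urlencode(params)}"


url = build_query(
    "https://api.example.com/users",          # placeholder endpoint
    fields=["id", "name"],
    filters={"status": "active"},
    page=2,
    per_page=100,
)
print(url)
# https://api.example.com/users?status=active&fields=id%2Cname&page=2&per_page=100
```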

Being surgical with your requests reduces data transfer, lightens the load on the server, and, most importantly, conserves your precious rate limit quota. This all ties into a bigger strategy of truly understanding the API's contract—a key concept you can explore further in our guide on what contract testing is and why it matters. When you put all these practices together, your application stops being a noisy neighbor and starts acting like a valued partner in the API ecosystem.

Real-World Examples of API Rate Limiting

The theory behind rate limiting is solid, but things really click when you see how it’s applied in the real world. Major platforms don't just pick a number out of a hat; they fine-tune their strategies to match their business models and technical constraints. Looking at how they do it offers some great lessons in balancing an open platform with a stable system.

Think about social media. A platform might cap users at 200 posts per hour. This is a simple but effective way to stop spambots in their tracks without getting in the way of even the most active human users. It’s a classic fixed-window counter approach that keeps the public feed clean for everyone.

Financial data services play a completely different game. An API serving up historical stock data might set a firm limit of 100 requests per minute for each API key. This isn't just about server load; it's about protecting incredibly valuable data and ensuring that massive data-scraping operations don't slow down the system for traders who need real-time information.

Balancing Access and Protection

Web analytics platforms are another great example of this balancing act. A service like Plausible Analytics, which is all about privacy, sets its default rate limit at 600 requests per hour per API key.

This limit is generous enough for most developers to pull all the stats they need without hitting a wall. But what if you’re a power user? Plausible allows you to request a higher limit. This shows a smart, tiered approach to managing resources.

This flexible model is becoming more and more common. It allows a service to serve everyone from a solo developer on a passion project to a massive enterprise, simply by tying API access to their actual needs or subscription plan.

Tiered Strategies in Practice

Marketing automation tools often take things a step further with layered rate limits. For instance, they might have a broad daily cap on API calls, say 50,000 per day, but also enforce a much stricter limit on resource-heavy actions like sending emails, maybe 100 per minute.

This two-pronged strategy is clever for a couple of reasons:

- The big daily limit helps manage overall infrastructure costs and usually scales with the customer's subscription tier.

- The tighter per-minute limit prevents sudden, massive spikes in traffic from degrading performance for everyone else on the shared platform.
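A layered check can be sketched as a list of rules that must all have room before a call is accepted. The version below applies every rule to the same action and uses simple fixed windows to stay short; the class name and rule format are assumptions for illustration.

```python
import time


class LayeredLimiter:
    """Accepts a call only if every (limit, window_seconds) rule still has room."""

    def __init__(self, rules, clock=time.time):
        self.rules = rules
        self.clock = clock
        self.counters = {}   # rule index -> (window number, count)

    def allow(self):
        now = self.clock()
        updated = {}
        for i, (limit, window_seconds) in enumerate(self.rules):
            window = int(now // window_seconds)
            seen_window, count = self.counters.get(i, (window, 0))
            if seen_window != window:
                count = 0              # this rule's window has rolled over
            if count >= limit:
                return False           # one exhausted rule rejects the call; nothing is charged
            updated[i] = (window, count + 1)
        self.counters.update(updated)
        return True
```

`LayeredLimiter([(50_000, 86_400), (100, 60)])` would mirror the example above: a 50,000-per-day cap combined with a 100-per-minute cap.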

These examples make it clear that there's no single "best" way to set an API rate limit. The right strategy always depends on what the service is trying to achieve—whether that’s stopping spam, protecting proprietary data, or just keeping operational costs in check.

Understanding these patterns is a huge advantage. You can even get a firsthand look at your own API's traffic patterns with tools that offer live HTTP traffic capture and analysis. By seeing how the big players do it, you'll be in a much better position to design your own rules or work more effectively with the APIs you depend on.

Frequently Asked Questions About API Rate Limits


Even after you get the hang of rate limiting, some specific questions always seem to come up during development. Getting straight answers to these common problems can save you hours of painful debugging and help you build tougher, more reliable applications from the get-go.

Let's dive into some of the most common questions developers run into when dealing with an API rate limit. These insights come straight from real-world experience and will help you handle these constraints like a pro.

What Happens When I Exceed an API Rate Limit?

So, your application got a little too chatty and sent a flood of requests. What now? The API server won't just ignore you; it will actively reject your request and send back a specific HTTP status code telling you to back off.

The most common signal you'll see is a 429 Too Many Requests error. Think of it as the API's polite but firm way of saying, "Hey, you need to slow down."

A well-designed API won’t just block you; it will give you a path to recovery. Keep an eye out for a Retry-After header in the response. This little gem tells you how long to wait before trying again, either as a number of seconds or as an HTTP date, giving you the key to a smart retry strategy.

A classic rookie mistake is to ignore this header and just hammer the API again. That's a great way to get yourself temporarily banned or locked out for even longer.
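Since a Retry-After value can be either a number of seconds or an HTTP date, a client needs to handle both forms. Here is a minimal sketch using only the standard library; the function name is illustrative.

```python
from datetime import datetime, timezone
from email.utils import parsedate_to_datetime


def retry_after_seconds(value, now=None):
    """Convert a Retry-After header value into a number of seconds to wait."""
    now = now or datetime.now(timezone.utc)
    try:
        return max(0.0, float(int(value)))   # delay-seconds form, e.g. "120"
    except ValueError:
        pass
    when = parsedate_to_datetime(value)      # HTTP-date form
    if when.tzinfo is None:
        when = when.replace(tzinfo=timezone.utc)
    return max(0.0, (when - now).total_seconds())
```

The result can be passed straight to `time.sleep()` before the retry. A date that is already in the past yields zero rather than a negative wait.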

How Can I Test My Application's Rate Limit Handling?

You definitely don't want to find out your app breaks under pressure during a real-world production outage. Testing how your code handles a 429 error before it happens is a non-negotiable part of building resilient software.

Fortunately, you have a few great options for simulating these failures in a safe development environment:

- Use a Mock Server: Tools like dotMock are perfect for this. You can set up a mock API endpoint and configure it to start throwing 429 errors after a specific number of calls. It’s a completely predictable way to trigger and test your application's retry logic.

- Write Unit Tests: Go directly to the source. Isolate the functions in your code that make API calls and write unit tests that force a rate limit error. This is the best way to confirm that your exponential backoff or other retry mechanisms kick in exactly when they should.

- Leverage API Sandboxes: Many API providers offer a "sandbox" or development environment. These sandboxes often have much lower rate limits than the production API, making them the perfect playground for testing how your app behaves under realistic constraints.
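To show what the unit-test option can look like, here is a self-contained sketch that uses an in-process fake instead of a real mock server. `FakeRateLimitedAPI` and `fetch_with_retry` are hypothetical stand-ins for your own client code; the tests are plain functions that a runner such as pytest could collect.

```python
class FakeRateLimitedAPI:
    """Test double: answers 429 for the first `failures` calls, then 200."""

    def __init__(self, failures):
        self.failures = failures
        self.calls = 0

    def get(self):
        self.calls += 1
        if self.calls <= self.failures:
            return 429, {"Retry-After": "1"}
        return 200, {}


def fetch_with_retry(api, max_attempts=4, sleep=lambda seconds: None):
    """Code under test (hypothetical): retry on 429, waiting as Retry-After instructs."""
    for _ in range(max_attempts):
        status, headers = api.get()
        if status != 429:
            return status
        sleep(float(headers.get("Retry-After", 1)))
    return status


def test_recovers_after_two_429s():
    api, waits = FakeRateLimitedAPI(failures=2), []
    assert fetch_with_retry(api, sleep=waits.append) == 200
    assert api.calls == 3
    assert waits == [1.0, 1.0]   # the client waited as instructed before each retry


def test_gives_up_when_limit_persists():
    api = FakeRateLimitedAPI(failures=10)
    assert fetch_with_retry(api, max_attempts=3) == 429
    assert api.calls == 3
```

Injecting `sleep` keeps the tests instant while still verifying that the client waits the right amount between attempts.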

Are API Rate Limits the Same for Everyone?

Not at all. Rate limits are almost never a one-size-fits-all deal. In fact, they're often a central part of an API's business model. Different pricing tiers usually come with different usage limits, allowing providers to support everyone from solo developers to massive enterprise companies.

You’ll typically see limits that change based on who is making the request:

- Unauthenticated Requests: If you make a call without an API key, you’ll be subject to the strictest limits, which are usually tied to your IP address.

- Free Tier Users: Once you sign up for a key, even on a free plan, you'll get a more generous allowance. This is usually plenty for development, testing, and small-scale projects.

- Paid or Enterprise Tiers: This is where the real power is. Paying customers get significantly higher limits, ensuring their high-traffic applications have the resources they need to run smoothly.

This tiered model makes sense for everyone. It gives developers a free on-ramp and creates a sustainable business for the provider by asking the heaviest users to help cover the costs.

Ready to build resilient applications? With dotMock, you can simulate API rate limits, timeouts, and other failure scenarios in seconds, without any complex configuration. Stop waiting for production errors and start testing for resilience today. Get your production-ready mock API at https://dotmock.com.